Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

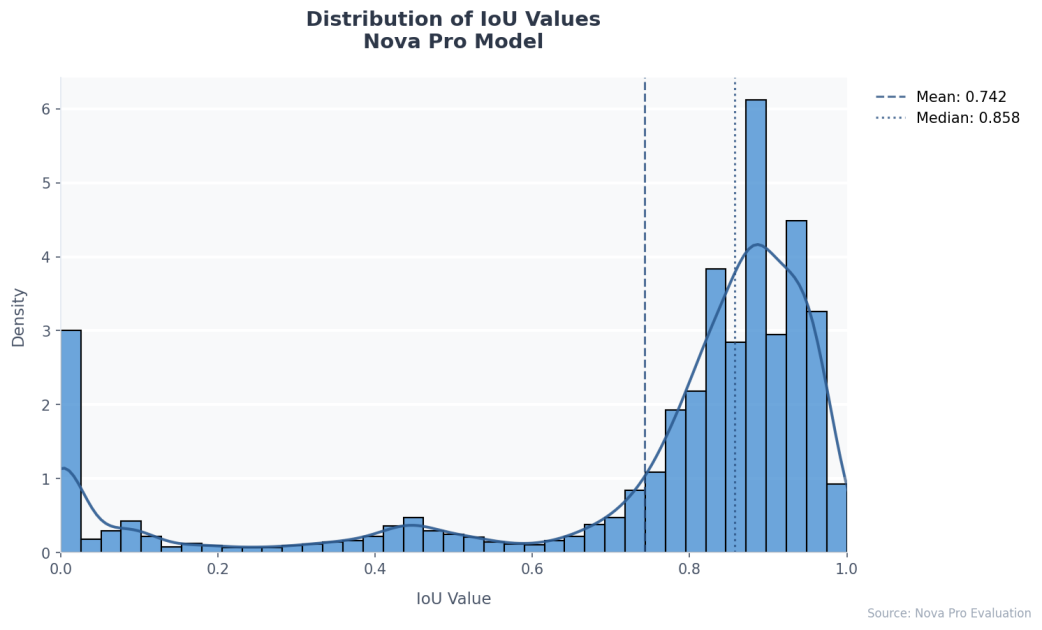

A detailed look at how NVIDIA Run:ai Model Streamer lowers cold-start latency for LLM inference by streaming model weights from storage into GPU memory, with benchmarks across Amazon EBS gp3, Amazon EBS io2, and Amazon S3 storage.