How to Scale Your LangGraph Agents in Production From a Single User to 1,000 Coworkers

Sources: https://developer.nvidia.com/blog/how-to-scale-your-langgraph-agents-in-production-from-a-single-user-to-1000-coworkers, NVIDIA Dev Blog

Overview

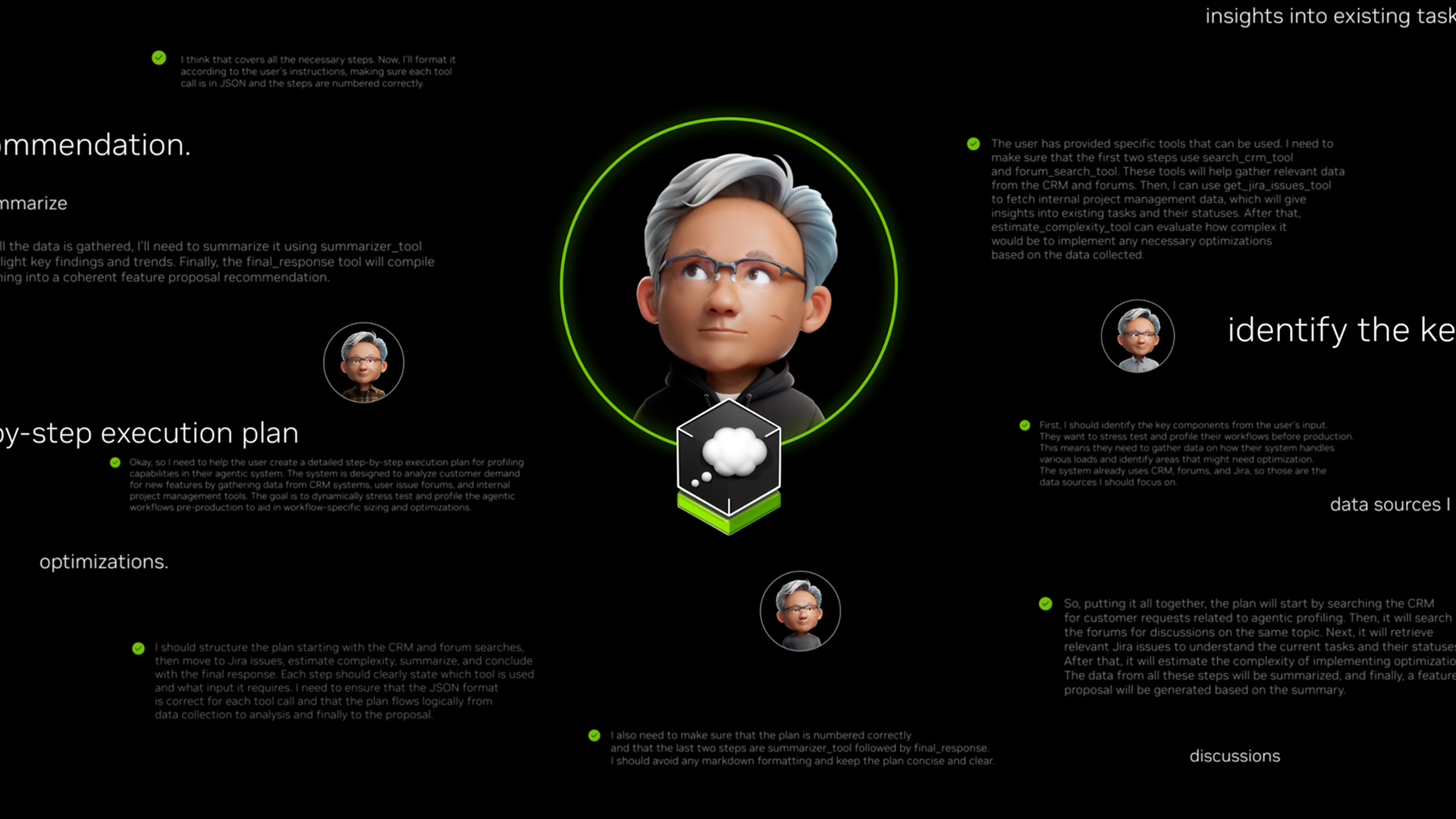

You’ve built a powerful AI agent and are ready to share it with your colleagues, but you have one big fear: will the agent keep working as more users join? This article describes a three-step approach to deploying and scaling a LangGraph-based agentic application with the NeMo Agent Toolkit, using a production setup based on an on-prem NVIDIA blueprint and the AI factory reference architecture.

The example is the AI-Q deep-research agent, which supports user document uploads (with metadata extraction), access to internal data sources, and web search to generate research reports. The blueprint for this application is open source and implemented with the NeMo Agent Toolkit, leveraging NVIDIA NeMo Retriever models for document ingest, retrieval, and LLM invocations. In production, the deployment runs on an internal OpenShift cluster and uses NVIDIA NIM microservices along with third-party observability tools.

Our challenge was to determine which components needed to scale to support hundreds of users across different NVIDIA teams. This article outlines the tools and techniques from the NeMo Agent Toolkit used at each phase of scaling, and how they informed our architecture and rollout plan. Observability, profiling, and measured scaling are the core pillars of the rollout.

Key features

- Evaluation and profiling system in the NeMo Agent Toolkit to gather data and quantify behavior across common usage scenarios.

- Easy-to-add evaluation sections in an application config, including a dataset of sample user inputs to profile variability and non-determinism.

- Function wrappers and simple decorators to automatically capture timing and token usage for key parts of the application.

- The evaluation workflow (eval) runs across the input dataset and produces metrics, including visualizations such as Gantt or waterfall charts that show which functions execute, and when, during a user session.

- Identification of bottlenecks (for example, calls to the NVIDIA Llama Nemotron Super 49B reasoning LLM) to guide where to scale deployments (e.g., replicating the LLM deployment with NVIDIA NIM).

- Custom metrics and benchmarking to compare versions of the application code while ensuring output quality remains high.

- Exportable results to visualization platforms like Weights & Biases to track experiments over time.

- Load testing across multiple users using the NeMo Agent Toolkit sizing calculator, simulating parallel workflows to estimate hardware requirements.

- Metrics such as p95 timing for LLM invocations and overall workflow timing to inform capacity planning.

- Observability tooling with OpenTelemetry (OTEL) collectors and Datadog to capture logs, performance data, and LLM traces, enabling session-level tracing.

- A phased rollout approach (start with small teams, then scale) to observe performance, fix issues, and validate scalability before broad deployment.

- An AI factory reference architecture and integration with NVIDIA NIM microservices to support a production-grade deployment.
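The decorator idea in the feature list can be illustrated with a small stand-alone sketch. It is not the NeMo Agent Toolkit's API: `profile_step`, `StepRecord`, and the in-memory `RECORDS` list are hypothetical names, and counting whitespace-separated words is only a rough stand-in for real token usage, which an LLM client would normally report.

```python
import functools
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class StepRecord:
    name: str        # label of the profiled step
    seconds: float   # wall-clock duration of one call
    tokens: int      # token count attributed to that call


# Hypothetical in-memory sink; a real setup would export these to a profiler or tracer.
RECORDS: list = []


def profile_step(name: Optional[str] = None,
                 count_tokens: Callable[[object], int] = lambda result: 0):
    """Hypothetical decorator: record duration and token usage of each successful call."""
    def decorator(fn):
        step_name = name or fn.__name__

        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = fn(*args, **kwargs)
            elapsed = time.perf_counter() - start
            RECORDS.append(StepRecord(step_name, elapsed, count_tokens(result)))
            return result

        return wrapper

    return decorator


# Assumption: word count approximates tokens; real LLM clients report usage directly.
@profile_step(name="llm_call", count_tokens=lambda text: len(text.split()))
def fake_llm(prompt: str) -> str:
    time.sleep(0.01)  # simulated model latency
    return "draft report about " + prompt


fake_llm("GPUs")
for record in RECORDS:
    print(f"{record.name}: {record.seconds:.3f}s, {record.tokens} tokens")
```

In a real deployment the records would flow to the profiler or tracing backend instead of a module-level list; keeping them in memory here just makes the mechanism easy to inspect.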

Common use cases

- The AI-Q research agent enables users to upload documents, extract metadata, and search internal data sources, then synthesize findings into research reports.

- Users can perform web searches to augment internal data, helping generate more comprehensive analyses.

- On-prem deployments enable teams to work with confidential information while maintaining required security and access controls.

- The NeMo Agent Toolkit provides profiling, evaluation, and observability tooling to improve performance and reliability as user concurrency grows.

- A phased rollout supports gradual adoption across teams, allowing performance data from each stage to inform capacity planning before the next expansion.

Setup & installation

The article emphasizes a three-phase approach to scaling, each using tools from the NeMo Agent Toolkit:

- Phase 1: Profiling and evaluation for a single user to establish a performance baseline.

- Phase 2: Load testing across multiple concurrent users to forecast hardware needs and identify bottlenecks.

- Phase 3: Phased deployment across teams, with observability to ensure performance remains within latency targets.

Setup involves adding an eval section to the application’s configuration to enable profiling, running the eval workflow over a representative input dataset, and using the toolkit’s sizing calculator to plan hardware requirements. Profiling captures timing and token usage, and the results can be exported to a visualization platform for analysis. Observability tooling (an OTEL collector with Datadog) collects traces and logs, including per-session insights.
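A Phase 1 profiling run boils down to running a representative dataset through the workflow several times and summarizing where the time goes. The sketch below assumes the workflow reports per-step durations in seconds (as a timing decorator or trace spans would); `simulated_workflow`, its step names, and its latency ranges are invented for illustration, and in practice the eval workflow is configured in the toolkit rather than hand-written like this.

```python
import random
from collections import defaultdict
from statistics import mean, quantiles


def p95(values):
    """95th percentile via the stdlib; needs at least two samples."""
    return quantiles(values, n=20, method="inclusive")[-1]


def run_eval(workflow, dataset, trials=3):
    """Run each input several times; collect per-step and end-to-end durations (seconds)."""
    per_step = defaultdict(list)
    totals = []
    for question in dataset:
        for _ in range(trials):
            step_seconds = workflow(question)  # {step name: seconds}
            for step, seconds in step_seconds.items():
                per_step[step].append(seconds)
            totals.append(sum(step_seconds.values()))
    return per_step, totals


def summarize(per_step, totals):
    """Mean/p95 per step, the step with the most cumulative time, and end-to-end p95."""
    summary = {step: {"mean": mean(v), "p95": p95(v)} for step, v in per_step.items()}
    bottleneck = max(per_step, key=lambda step: sum(per_step[step]))
    return summary, bottleneck, p95(totals)


# Hypothetical stand-in for the agent: step names and latency ranges are invented.
rng = random.Random(0)


def simulated_workflow(question):
    return {
        "retrieve_internal_docs": rng.uniform(0.2, 0.4),
        "web_search": rng.uniform(0.5, 1.0),
        "llm_reasoning": rng.uniform(4.0, 9.0),
    }


per_step, totals = run_eval(simulated_workflow, ["q1", "q2"], trials=5)
summary, bottleneck, end_to_end_p95 = summarize(per_step, totals)
print(bottleneck, round(end_to_end_p95, 2))
```

Ranking steps by cumulative time is what singles out a component such as the reasoning LLM as the first candidate for replication. Repeating each input (`trials`) matters because agent runs are non-deterministic, so a single pass can misstate the tail latency.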

Note: The article describes these steps and components but does not provide exact command syntax or configuration fragments.
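The per-session insights mentioned above depend on every log line and span carrying the same session identifier. As a stdlib-only sketch, a `contextvars` variable plus a `logging.Filter` can tag records per session even when requests interleave on one event loop. This stands in for OpenTelemetry context propagation; `SESSION_ID`, `SessionFilter`, `ListHandler`, and the logger name are hypothetical, not part of the toolkit or Datadog.

```python
import asyncio
import contextvars
import logging

# Hypothetical session id carried through every step of one user's request.
SESSION_ID = contextvars.ContextVar("session_id", default="-")


class SessionFilter(logging.Filter):
    """Copy the current session id onto each record so logs can be grouped per session."""
    def filter(self, record: logging.LogRecord) -> bool:
        record.session_id = SESSION_ID.get()
        return True


class ListHandler(logging.Handler):
    """Keep formatted lines in memory; stands in for an OTEL collector or Datadog exporter."""
    def __init__(self) -> None:
        super().__init__()
        self.lines = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(self.format(record))


logger = logging.getLogger("agent.sketch")
logger.setLevel(logging.INFO)
logger.propagate = False
handler = ListHandler()
handler.addFilter(SessionFilter())
handler.setFormatter(logging.Formatter("%(session_id)s %(message)s"))
logger.addHandler(handler)


async def handle_request(session: str, question: str) -> None:
    SESSION_ID.set(session)  # each asyncio task works on its own copy of the context
    logger.info("retrieval started for %s", question)
    await asyncio.sleep(0)  # yield so the two sessions interleave
    logger.info("llm call finished")


async def main() -> None:
    await asyncio.gather(handle_request("user-a", "q1"), handle_request("user-b", "q2"))


asyncio.run(main())
for line in handler.lines:
    print(line)
```

With a real exporter, the same pattern lets an observability backend group one user's logs and traces into a single session view.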

Quick start

The source does not include a minimal runnable example. Instead, the article presents a workflow to scale an agentic application using the NeMo Agent Toolkit:

- Start with a single-user profiling run by adding an eval section to your app’s config and executing the eval workflow on a representative input set.

- Use the produced metrics to identify bottlenecks (e.g., LLM invocations) and plan replication of critical components (such as the LLM backend).

- Perform load testing with the toolkit’s sizing calculator to estimate how many GPUs or replicas are needed for your target concurrency.

- Deploy with a phased rollout, monitoring traces and metrics via OTEL and Datadog to ensure performance remains within targets while expanding to more users.
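The load-testing and sizing steps can be approximated with a toy experiment: fire many concurrent sessions at a simulated LLM backend whose capacity is `replicas * slots_per_replica`, measure p95 latency, and raise the replica count until a latency target is met. This is a hedged sketch, not the NeMo Agent Toolkit sizing calculator; the simulated backend, the fixed service time, and the one-request-per-slot capacity model are all assumptions.

```python
import asyncio
import math
import time
from typing import List, Optional, Tuple


def p95(values: List[float]) -> float:
    """Nearest-rank 95th percentile (one of several common definitions)."""
    if not values:
        raise ValueError("p95 of an empty sample")
    ordered = sorted(values)
    return ordered[math.ceil(0.95 * len(ordered)) - 1]


async def user_session(slots: asyncio.Semaphore, service_seconds: float,
                       latencies: List[float]) -> None:
    """One simulated user: wait for a free backend slot, then 'generate' for service_seconds."""
    start = time.perf_counter()
    async with slots:  # requests queue here when every slot is busy
        await asyncio.sleep(service_seconds)
    latencies.append(time.perf_counter() - start)


async def load_test(users: int, replicas: int, slots_per_replica: int,
                    service_seconds: float) -> float:
    """Start all sessions at once and return their p95 end-to-end latency in seconds."""
    slots = asyncio.Semaphore(replicas * slots_per_replica)
    latencies: List[float] = []
    await asyncio.gather(*(user_session(slots, service_seconds, latencies)
                           for _ in range(users)))
    return p95(latencies)


def smallest_replica_count(users: int, slots_per_replica: int, service_seconds: float,
                           target_p95: float,
                           max_replicas: int = 16) -> Tuple[Optional[int], Optional[float]]:
    """Add replicas one at a time until the measured p95 meets the target."""
    for replicas in range(1, max_replicas + 1):
        observed = asyncio.run(load_test(users, replicas, slots_per_replica, service_seconds))
        if observed <= target_p95:
            return replicas, observed
    return None, None  # target not reachable within max_replicas


replicas, observed = smallest_replica_count(users=10, slots_per_replica=2,
                                            service_seconds=0.05, target_p95=0.12)
print(replicas, round(observed, 3) if observed is not None else None)
```

Under this model, 10 users sharing `2 * replicas` slots need ceil(10 / (2 * replicas)) rounds of 50 ms, so three replicas is the first count whose p95 can fall under 120 ms. A real sizing exercise would replace these constants with p95 timings measured in the single-user profiling run.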

Pros and cons

- Pros
  - Data-driven scaling: profiling and load testing inform hardware and deployment decisions.
  - Early bottleneck detection enables targeted optimization (e.g., LLM deployment replication).
  - Observability and traceability across user sessions improve reliability and debugging.
  - Phased rollout reduces risk when expanding to more teams.
  - On-prem deployment with an AI factory reference architecture helps protect confidential information.

- Cons
  - The article does not explicitly enumerate trade-offs or drawbacks; implied considerations include setup complexity, integration with OpenShift and NIM microservices, and the need for profiling before scaling.

Alternatives (brief comparisons)

- The article presents a scaling approach driven by profiling and load testing with the NeMo Agent Toolkit. It does not document alternative deployment strategies. The described workflow emphasizes data-driven decisions, bottleneck identification, and phased rollout as core principles for scaling LangGraph agents in production.

Pricing or License

- Pricing or license details are not explicitly provided in the article.
