How to spot and fix 5 pandas bottlenecks with cudf.pandas (GPU acceleration)

Sources: https://developer.nvidia.com/blog/how-to-spot-and-fix-5-common-performance-bottlenecks-in-pandas-workflows/, NVIDIA Dev Blog

Overview

Slow data loads, memory-intensive joins, and long-running operations are common pain points in pandas workflows. This guide covers five frequent bottlenecks, how to recognize them, and practical fixes you can apply on CPU with a few code tweaks. It also introduces cudf.pandas, a GPU-powered drop-in accelerator that can deliver order-of-magnitude speedups on supported operations without code changes. If you don’t have a GPU on your machine, you can use cudf.pandas for free in Google Colab, where GPUs are available and the library comes pre-installed.

Key features

- Drop-in GPU acceleration for pandas workflows via cudf.pandas, enabling parallelism across thousands of GPU threads with no code changes required for many operations.

- Fast I/O and data processing: CPU fixes include using a faster parser like PyArrow and strategies such as loading only needed columns or reading in chunks; GPU fixes leverage cuDF to accelerate CSV/Parquet reads and writes.

- Large-join optimizations: indexed joins and pre-merge column pruning on CPU; GPU acceleration for joins when cudf.pandas is enabled.

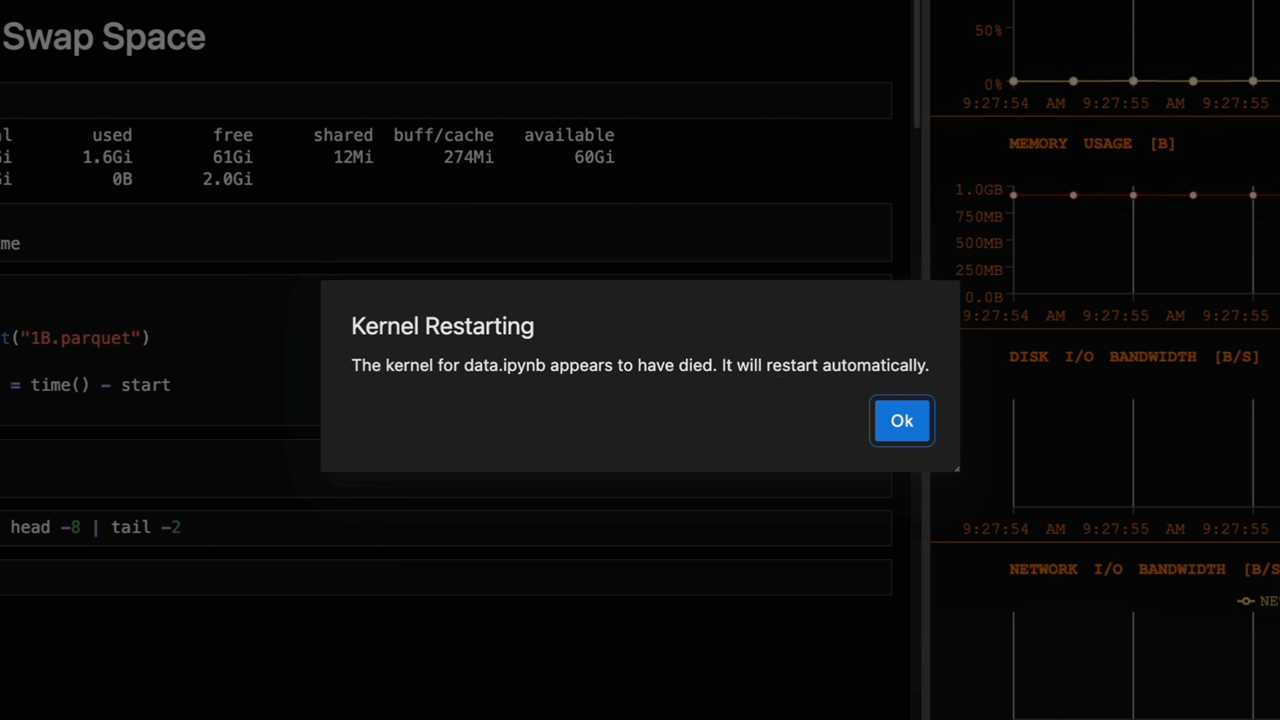

- Memory efficiency: downcasting numeric types and converting low-cardinality strings to category to reduce RAM; unified memory optimizations on GPU for larger-than-GPU-memory datasets.

- String and high-cardinality data acceleration: cuDF provides GPU-optimized kernels for string operations like .str.len(), .str.contains(), and string-key joins.

- Practical examples and reference notebooks: concrete notebooks show the effect of the accelerators on representative workloads.
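The CPU-side memory fixes listed above (downcasting numeric types and converting low-cardinality strings to category) can be sketched as follows. The column names and synthetic data are hypothetical, chosen only to make the effect visible; actual savings depend on your data.

```python
import numpy as np
import pandas as pd

# Hypothetical data: a small-range integer ID, a float price,
# and a string column with only four distinct values.
n = 100_000
df = pd.DataFrame({
    "store_id": np.arange(n) % 50,
    "price": np.linspace(0.5, 99.5, n),
    "region": np.array(["north", "south", "east", "west"])[np.arange(n) % 4],
})

before = df.memory_usage(deep=True).sum()

slim = df.copy()
# Downcast numerics to the smallest dtype that can hold the values.
slim["store_id"] = pd.to_numeric(slim["store_id"], downcast="integer")
slim["price"] = pd.to_numeric(slim["price"], downcast="float")
# Store the low-cardinality strings as integer codes plus a small lookup table.
slim["region"] = slim["region"].astype("category")

after = slim.memory_usage(deep=True).sum()
print(f"{before:,} bytes -> {after:,} bytes")
```

Downcasting floats trades precision for space, so it is worth checking that the reduced precision is acceptable before applying it to real columns.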

Common use cases

- Data loading and parsing: replacing or augmenting pandas CSV parsing with faster engines, and considering Parquet/Feather formats for faster I/O.

- Large joins and merges: reducing memory movement by dropping unused columns before merges, and using indexed joins where possible.

- Handling wide object/string columns: transforming low-cardinality strings to category, while keeping high-cardinality columns as strings.

- Groupby and aggregations on big datasets: pre-filter data and drop unneeded columns to shrink the grouped dataset; passing observed=True for categorical keys avoids computing results for category combinations that never occur in the data.

- Memory-constrained workflows: when datasets exceed GPU memory, cudf.pandas uses Unified Virtual Memory (UVM) to combine GPU VRAM and CPU RAM into a single pool, with automatic paging between GPU and system memory.
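As a minimal sketch of the join and groupby advice above, the following prunes unused columns before the merge, joins against an index, and groups with observed=True. The tables, column names, and the unused "online" category are invented for illustration.

```python
import pandas as pd

orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "customer_id": [10, 20, 10, 30],
    "amount": [25.0, 40.0, 15.0, 60.0],
    "notes": ["gift", "", "rush", ""],      # not needed downstream
})
customers = pd.DataFrame({
    "customer_id": [10, 20, 30],
    "segment": pd.Categorical(
        ["retail", "wholesale", "retail"],
        categories=["retail", "wholesale", "online"],  # "online" never occurs
    ),
    "address": ["a", "b", "c"],             # not needed downstream
})

# Keep only the columns the join and aggregation actually use.
left = orders[["customer_id", "amount"]]
right = customers[["customer_id", "segment"]].set_index("customer_id")

# Indexed join: match the left column against the right-hand index.
merged = left.join(right, on="customer_id")

# observed=True skips categories that do not appear in the data.
totals = merged.groupby("segment", observed=True)["amount"].sum()
print(totals)
```

With observed=False, the result would also carry a row for the unused "online" category, which grows quickly when several categorical keys are combined.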

Setup & installation

The approach is designed to be non-disruptive: in a notebook, load the cudf.pandas extension before importing pandas, then continue using your existing pandas code.

%load_ext cudf.pandas

Note: In Colab, you can access GPUs for free and use cudf.pandas without rewriting your code.
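The %load_ext magic only works in IPython and Jupyter. For plain scripts, cuDF also documents running `python -m cudf.pandas script.py` or calling `cudf.pandas.install()` before pandas is imported. The sketch below uses the programmatic route; the try/except fallback to CPU pandas is an assumption added here, not something the article prescribes.

```python
# Enable cudf.pandas programmatically. This must happen before pandas
# is imported anywhere in the process.
# The fallback branch is an addition for this sketch so the same script
# still runs on a machine without RAPIDS or a usable GPU.
try:
    import cudf.pandas
    cudf.pandas.install()
    gpu_enabled = True
except Exception:  # cudf not installed, or no usable GPU/driver
    gpu_enabled = False

import pandas as pd

df = pd.DataFrame({"x": [1, 2, 3]})
print("cudf.pandas active:", gpu_enabled, "| sum:", int(df["x"].sum()))
```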

Quick start

The following demonstrates using the same pandas code after enabling cudf.pandas. You can run typical DataFrame operations and a simple groupby, with GPU acceleration applied under the hood.

import pandas as pd

# After enabling the cudf.pandas extension, you can use pandas-like code as usual
df = pd.DataFrame({"city": ["New York", "San Francisco", "New York"],
                   "value": [1, 2, 3]})

# Same pandas API, now accelerated on GPU when cudf.pandas is active
result = df.groupby("city").sum()
print(result)

For larger, real-world workloads, the same approach scales to millions of rows and complex aggregations, delivering substantial speedups without changing your code.
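To check the speedup on your own hardware, one option is to time the same operation twice: once in a session with cudf.pandas loaded and once without. The sketch below is a simple timing harness on synthetic data. The row count, city names, and aggregations are arbitrary choices, and no timings are shown because they depend entirely on the machine.

```python
import time

import numpy as np
import pandas as pd

# Synthetic data; the size and values are arbitrary and only for illustration.
rng = np.random.default_rng(0)
n = 500_000
df = pd.DataFrame({
    "city": rng.choice(["New York", "San Francisco", "Chicago"], size=n),
    "value": rng.integers(0, 100, size=n),
})

# Time a single groupby-aggregate; run this unchanged with and without
# cudf.pandas loaded and compare the two elapsed times.
start = time.perf_counter()
result = df.groupby("city")["value"].agg(["sum", "mean"])
elapsed = time.perf_counter() - start

print(result)
print(f"groupby took {elapsed:.4f} s")
```

A single run is noisy, and the first GPU call can include one-time initialization cost, so repeating the measurement a few times gives a fairer comparison.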

Pros and cons

- Pros

  - No code changes required for many operations when using cudf.pandas; drop-in acceleration across pandas-like syntax.

  - Massive speedups for large-scale joins, groupbys, and string operations when running on GPU.

  - Unified memory approach allows processing data larger than GPU memory with automatic paging.

  - Free GPU access in Google Colab makes hands-on experimentation accessible.

- Cons

  - Requires GPU-backed hardware or Colab access to realize GPU benefits.

  - Some edge cases or exotic operations may require adjustments or validation to ensure parity with CPU behavior.

  - The setup relies on the cudf.pandas extension being loaded; not all environments have it pre-installed.

Alternatives (brief comparisons)

The article discusses several approaches alongside cudf.pandas:

- PyArrow CSV parsing: faster CSV parsing than pandas default parser on CPU.

- Parquet/Feather formats: faster reads for columnar data, reducing I/O bottlenecks.

- Polars with cuDF-powered GPU engine: drop-in acceleration for joins, groupbys, aggregations, and I/O without changing existing Polars queries (GPU-backed path).

Table: quick comparison

| Approach | Typical benefit | When to use |
|---|---|---|
| cudf.pandas (GPU) | Large speedups on reads, joins, groupbys, and string ops | When GPU is available and code parity is preferred |
| PyArrow CSV | Faster CPU CSV parsing | When reading CSVs aggressively on CPU |
| Parquet/Feather | Faster I/O and reads | When data is stored in columnar formats |
| Polars with cuDF engine | Similar drop-in acceleration for Polars workflows | If using Polars but need GPU speedups |
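The CPU-side I/O options can be sketched with plain pandas: loading only the needed columns, reading in chunks, and switching to the PyArrow parser. The in-memory CSV and its column names are hypothetical, and the PyArrow step is guarded because pyarrow is an optional dependency that may not be installed.

```python
import io

import pandas as pd

# Hypothetical CSV held in memory so the sketch is self-contained.
csv_text = "id,city,value,comment\n" + "\n".join(
    f"{i},city_{i % 3},{i * 2},free text {i}" for i in range(1000)
)

# 1) Load only the columns you need.
needed = pd.read_csv(io.StringIO(csv_text), usecols=["city", "value"])

# 2) Stream the file in chunks and aggregate incrementally,
#    so the whole file never has to sit in memory at once.
total = 0
for chunk in pd.read_csv(io.StringIO(csv_text), usecols=["value"], chunksize=250):
    total += int(chunk["value"].sum())

# 3) Optionally use the PyArrow parser if pyarrow is available.
try:
    import pyarrow  # noqa: F401
    fast = pd.read_csv(io.BytesIO(csv_text.encode()), engine="pyarrow")
except ImportError:
    fast = None

print(needed.shape, total)
```

Note that chunksize is not supported together with engine="pyarrow", which is why the chunked read above uses the default parser.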

Pricing or License

Not specified in this article.
