Unified Memory with OpenACC for Ocean Modeling on GPUs (NVIDIA HPC SDK v25.7)

Source: NVIDIA Dev Blog, https://developer.nvidia.com/blog/less-coding-more-science-simplify-ocean-modeling-on-gpus-with-openacc-and-unified-memory/

Overview

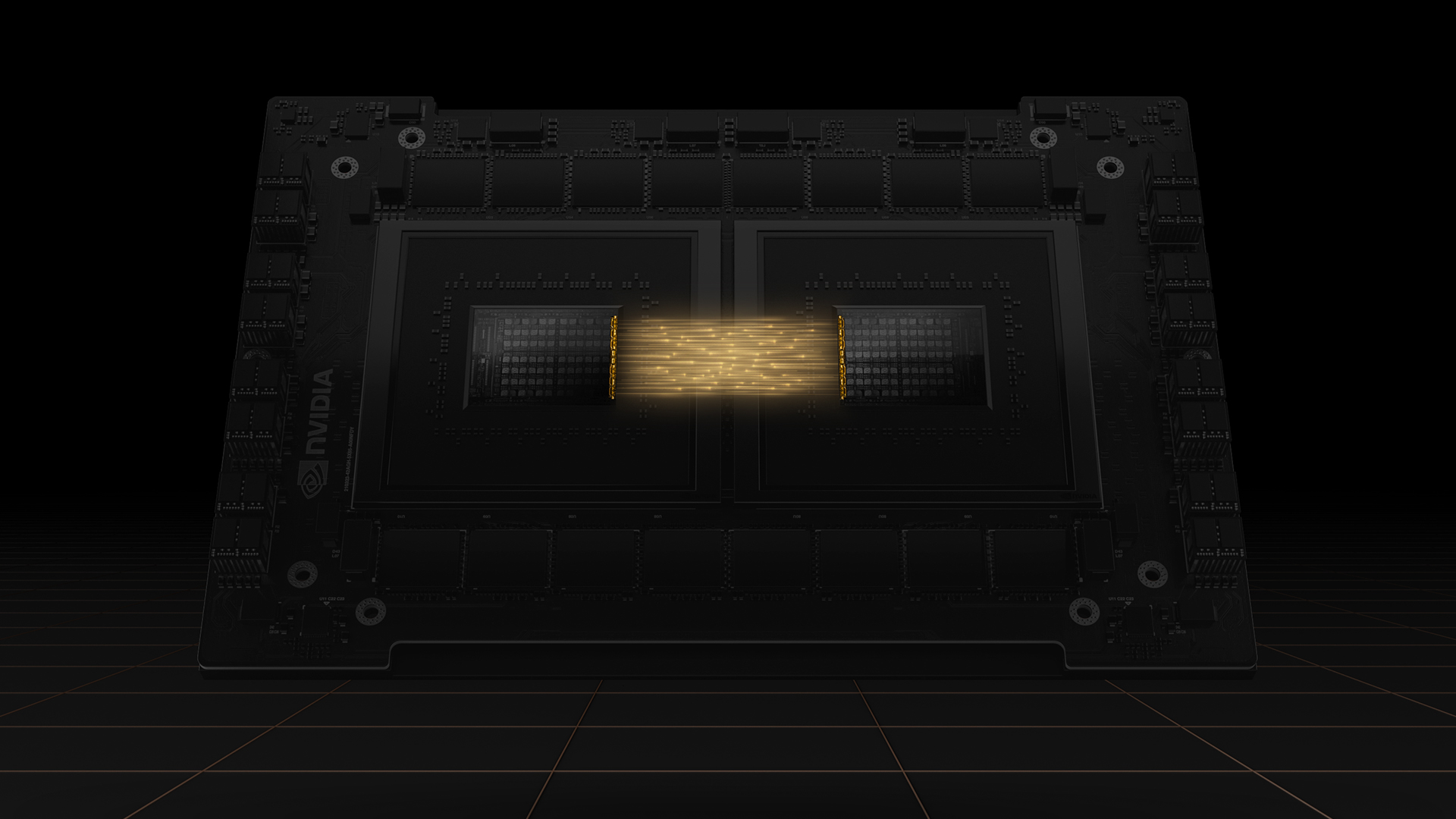

NVIDIA HPC SDK v25.7 delivers a major productivity boost for developers building GPU-accelerated HPC applications. The release centers on unified memory programming and provides a complete toolset that automates data movement between CPU and GPU. On coherent platforms such as the Grace Hopper architecture family, CPU and GPU share a single address space and the CUDA driver manages data movement. Programmers can therefore focus on parallelization rather than manual transfers. This shortens GPU porting cycles, reduces bugs, and gives scientists and engineers more flexibility when optimizing workloads.

Notable platforms include the NVIDIA GH200 Grace Hopper Superchip and GB200 NVL72 systems. GH200-based systems power the ALPS supercomputer (CSCS) and JUPITER (JSC). These platforms combine high CPU-GPU bandwidth with hardware memory coherence, which makes GPU acceleration of scientific codes more productive.

The unified memory model removes many of the data-management complexities that have historically slowed GPU porting. In practice, deep-copy patterns, derived types, and complex data layouts can often be parallelized directly without hand-written data transfers, because system memory is accessible from both CPU and GPU through one address space. Since CUDA 12.4, automatic page migrations can also move frequently accessed pages closer to the processor that uses them, improving locality on Grace Hopper and similar platforms.

Much of the productivity gain comes from porting real-world codes with less data-management boilerplate. As one researcher observed, unified memory lets teams port ocean models to GPUs faster than traditional approaches do. It also lets them experiment with running more of the workload on GPUs. The article uses the NEMO ocean modeling framework as its real-world case study.
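This minimal C sketch (not taken from the article) shows what "no explicit data transfers" looks like in an OpenACC loop. It assumes the NVIDIA HPC SDK's `nvc` compiler with OpenACC and unified memory enabled, for example `nvc -acc -gpu=mem:unified`. The `-gpu` sub-option spelling has changed across SDK versions, so check your compiler documentation. The function name `axpy` is illustrative.

```c
#include <assert.h>
#include <stddef.h>

/* y = a*x + y over n elements. x and y are ordinary host pointers
 * (for example from malloc or the stack).
 * Assumption: compiled with OpenACC and unified memory enabled
 * (e.g. nvc -acc -gpu=mem:unified). The GPU then dereferences the
 * pointers directly, so no copyin/copyout clauses are written.
 * Without -acc the pragma is ignored and the loop runs serially on the CPU. */
void axpy(size_t n, double a, const double *x, double *y)
{
    assert(x != NULL && y != NULL);
    #pragma acc parallel loop
    for (size_t i = 0; i < n; ++i)
        y[i] += a * x[i];
}
```

Without unified memory, a loop like this would typically need array-section clauses such as `copyin(x[0:n])` and `copy(y[0:n])` so the runtime knows how much data to move.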
The article also shows how OpenACC and unified memory address common GPU-programming challenges. The classic example is the deep-copy pattern. When a Fortran derived type contains an allocatable array component, the GPU cannot use it until an extra transfer loop has run. With unified memory, that loop is unnecessary.

Object-oriented C++ with STL containers such as `std::vector` raises a similar problem on GPUs without unified memory. Developers often have to pass a raw pointer to the underlying allocation instead of the container itself. Unified memory lets the GPU access both the container metadata and its elements.

In the NEMO port described in the post, the CUDA driver automates most memory management on Grace Hopper. The workflow follows a gradual strategy:

- Start from the CPU code.
- Annotate hot loops with OpenACC.
- Progressively move computation to the Hopper GPU, keeping results correct at each step and using asynchronous execution to overlap CPU and GPU work.

Early results showed speedups of roughly 2x to 5x for ported sections. End-to-end improvement was around 2x, even though only part of the workflow had been accelerated. Unified memory can therefore deliver performance gains early in a port and help teams explore greater GPU utilization sooner.

For researchers and developers, the key takeaway is that unified memory reduces the cognitive load of GPU programming when it is paired with high-bandwidth interconnects and coherent CPU-GPU memory. The result is faster iteration, fewer bugs, and more flexible optimization strategies in complex scientific codes.
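The C sketch below illustrates the deep-copy case. The hypothetical `tracer_t` struct plays the role of a Fortran derived type with an allocatable component; it is not NEMO code. The sketch assumes OpenACC with unified memory enabled at compile time, so the GPU can follow the pointer stored inside each struct without a manual deep copy.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical C analogue of a Fortran derived type with an
 * allocatable array component. */
typedef struct {
    size_t n;      /* number of elements in data */
    double *data;  /* separately allocated payload */
} tracer_t;

/* Multiply every element of every tracer by factor.
 * Without unified memory, a GPU port would first need a manual deep copy:
 * copy the tracer_t array to the device, then copy each data buffer and
 * attach its device address to the device copy of the struct.
 * With unified memory (assumed enabled), t[k].data is valid on the GPU
 * as-is, so no data clauses are written. */
void scale_tracers(tracer_t *t, size_t ntracers, double factor)
{
    assert(t != NULL);
    #pragma acc parallel loop gang
    for (size_t k = 0; k < ntracers; ++k) {
        double *d = t[k].data;
        size_t n = t[k].n;
        #pragma acc loop vector
        for (size_t i = 0; i < n; ++i)
            d[i] *= factor;
    }
}
```

The outer loop maps one struct to each gang, and the inner loop vectorizes over that struct's payload. This mapping is one reasonable choice; the compiler can also pick its own schedule.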

Source: NVIDIA Dev Blog: Less Coding, More Science: Simplify Ocean Modeling on GPUs With OpenACC and Unified Memory. Link: https://developer.nvidia.com/blog/less-coding-more-science-simplify-ocean-modeling-on-gpus-with-openacc-and-unified-memory/

Key features

- Complete unified memory toolset in NVIDIA HPC SDK v25.7 that automates CPU-GPU data movement.

- Shared address space on coherent CPU-GPU platforms (GH200 Grace Hopper, GB200 NVL72) enabling automatic page migration and data coherence.

- CUDA driver manages data movement; explicit host-device transfers are often unnecessary, reducing boilerplate and bugs.

- OpenACC support tailored to unified memory, including asynchronous execution to improve concurrency and the OpenACC 3.4 capture modifier to help avoid data races.

- Support for real-world codes with complex data layouts (e.g., derived types, dynamic allocatable arrays, STL containers) without manual data-copy boilerplate.

- Demonstrated productivity gains in ocean-model porting (NEMO): roughly 2x–5x speedups for ported sections and about 2x end-to-end.

- Real-world deployment context: GH200 Grace Hopper systems at ALPS (CSCS) and JUPITER (JSC), with GB200 NVL72 as a further coherent platform, highlighting high-bandwidth interconnects and strong memory coherence.

- Automatic page migration, available since CUDA 12.4, that improves page locality and migration efficiency on unified memory platforms.

- Practical guidance on porting workflows: focus on parallelizing critical loops with OpenACC while relying on unified memory to manage data automatically.
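The guidance to "parallelize critical loops and let unified memory handle data" can be illustrated with a diffusion-style hot loop. The sketch below is an illustrative explicit 2-D diffusion step, not NEMO's actual kernel. `kappa` is assumed to be the nondimensional coefficient D*dt/dx^2, which is stable for kappa <= 0.25. Boundary values are simply copied, which is an assumed fixed-value boundary.

```c
#include <assert.h>
#include <stddef.h>

/* One explicit time step of 2-D diffusion on an nx-by-ny grid stored
 * row-major (index = j*nx + i). Interior points get a 5-point Laplacian
 * update; boundary points are copied unchanged.
 * Assumption: OpenACC with unified memory, so in/out need no data clauses. */
void diffuse_step(size_t nx, size_t ny, double kappa,
                  const double *in, double *out)
{
    assert(in != out); /* an in-place update would read partially updated values */
    #pragma acc parallel loop collapse(2)
    for (size_t j = 0; j < ny; ++j)
        for (size_t i = 0; i < nx; ++i) {
            size_t c = j * nx + i;
            if (i == 0 || j == 0 || i == nx - 1 || j == ny - 1) {
                out[c] = in[c];
            } else {
                double lap = in[c - 1] + in[c + 1]
                           + in[c - nx] + in[c + nx] - 4.0 * in[c];
                out[c] = in[c] + kappa * lap;
            }
        }
}
```

Swapping `in` and `out` between calls gives a time-stepping loop. Only the loop nest carries a directive, which is the kind of change the porting guidance describes.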

Common use cases

- Porting ocean and climate models (e.g., NEMO) to GPUs to accelerate diffusion and advection kernels and other performance-critical regions.

- Memory-bound workloads where diffusion and tracer advection are hotspots, benefiting from unified memory’s reduced data-management burden.

- Codes using advanced C++ features (STL containers such as std::vector) that previously had to be rewritten around raw pointers for GPU offloading.

- Projects that want GPU speedups early in a port, so teams can experiment with moving more of the workload to GPUs before the port is complete.

- Workflows where overlapping CPU and GPU work via asynchronous kernels leads to better resource utilization and reduced synchronization overhead.
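The sketch below shows CPU-GPU overlap with an OpenACC async queue. Queue number 1 and the function name are arbitrary, illustrative choices. Because unified memory means CPU and GPU share the same pages, the CPU must not touch the array the kernel is using until the `wait` completes. The OpenACC 3.4 capture modifier mentioned in this document is another tool for such cases, but this sketch does not use it.

```c
#include <assert.h>
#include <stddef.h>

/* Scale a on the GPU asynchronously while the CPU sums b, then synchronize.
 * Assumption: OpenACC with unified memory. Reading or writing `a` on the
 * CPU before the wait directive would be a data race. */
double scale_and_sum(size_t n, double *a, double factor, const double *b)
{
    assert(a != b); /* the CPU work must use data the kernel does not touch */

    /* Enqueued on async queue 1; returns immediately when built with -acc. */
    #pragma acc parallel loop async(1)
    for (size_t i = 0; i < n; ++i)
        a[i] *= factor;

    /* Independent CPU work on b overlaps with the kernel above. */
    double sum = 0.0;
    for (size_t i = 0; i < n; ++i)
        sum += b[i];

    /* Block until queue 1 drains; only now is `a` safe to use on the CPU. */
    #pragma acc wait(1)
    return sum;
}
```

Without `-acc`, both pragmas are ignored and the function runs sequentially with the same result.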

Setup & installation

Setup and installation details are not provided in the source.

Quick start

The source does not include a runnable quick-start example. It focuses on porting strategy and architectural benefits rather than ready-to-run code. See the References for the primary article.

Pros and cons

Pros

- Significant productivity gains due to automated data movement and unified memory.

- Earlier and easier GPU porting cycles with less boilerplate code.

- Ability to experiment with more workloads on GPUs without rewriting data-management logic.

- Reduced risk of data races in asynchronous code through the OpenACC 3.4 capture modifier.

- Real-world evidence of speedups in ported code segments and end-to-end workflows.

- Grace Hopper and similar architectures offer high-bandwidth interconnects and a coherent memory space that enhances locality.

Cons

- Practical benefits depend on hardware support for unified memory (Grace Hopper-like systems).

- On platforms without hardware CPU-GPU coherence, unified memory relies on Heterogeneous Memory Management (HMM), which can behave and perform differently from Grace Hopper-class systems.

- Even with automatic page migration, unified memory codes still need careful performance tuning to achieve good data locality.

- Some legacy or highly specialized C++ patterns may require adjustments if unified memory behavior diverges from host expectations.
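One common locality-tuning technique is to initialize data where it will be used. The sketch assumes a first-touch placement policy, under which a system-allocated page is physically placed near the processor that first writes it; NVIDIA describes this behavior for Grace Hopper. The helper name is hypothetical, and the benefit should be verified with a profiler on your system.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical helper: allocate n doubles with plain malloc and initialize
 * them inside an OpenACC loop. Assumption: first-touch placement, so the
 * GPU's writes place the pages in GPU memory. Initializing with a CPU loop
 * instead would place them in CPU memory, and later GPU kernels would rely
 * on migration or remote access. Returns NULL if allocation fails. */
double *alloc_on_first_gpu_touch(size_t n, double value)
{
    assert(n > 0);
    double *p = malloc(n * sizeof *p);
    if (p == NULL)
        return NULL;
    #pragma acc parallel loop
    for (size_t i = 0; i < n; ++i)
        p[i] = value;
    return p;
}
```

Release the array with `free`, as with any other malloc allocation.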

Alternatives

| Approach | Description | When to consider | Pros | Cons |
|---|---|---|---|---|
| Manual CPU-GPU data management | Explicit transfers and data regions written with OpenACC/CUDA directives or API calls | Legacy codes that need strict control over transfers | Full control over data movement; potential for optimal locality | High complexity, more bugs, longer porting cycles |
| Heterogeneous Memory Management (HMM) | Linux kernel-assisted page migration that provides a shared address space on systems without hardware CPU-GPU coherence | Systems where hardware-coherent unified memory is unavailable or limited | Works on a broader set of platforms | Potentially higher latency; less seamless programming model |
| Traditional CPU-only code | Keep computation on the CPU and offload kernels to the GPU only as needed | Small workloads or late-stage porting | Simpler code path on the CPU side | Misses GPU acceleration benefits; longer runtimes |
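For comparison with the first row of the table, the sketch below shows manual data management with an explicit OpenACC data region. The `data` construct and its `copyin`/`copyout` array-section clauses are standard OpenACC. The function itself is a hypothetical example, and this is the kind of boilerplate that unified memory lets a port drop.

```c
#include <assert.h>
#include <stddef.h>

/* out[i] = in[i]^2 + shift, using an explicit data region:
 * copyin moves in[0:n] to the device once, both loops reuse the device
 * copies, and copyout brings out[0:n] back when the region ends.
 * Without -acc the pragmas are ignored and the block runs on the CPU. */
void square_then_shift(size_t n, const double *in, double *out, double shift)
{
    assert(in != out);
    #pragma acc data copyin(in[0:n]) copyout(out[0:n])
    {
        #pragma acc parallel loop
        for (size_t i = 0; i < n; ++i)
            out[i] = in[i] * in[i];

        #pragma acc parallel loop
        for (size_t i = 0; i < n; ++i)
            out[i] += shift;
    }
}
```

The developer must keep the array sections and the region's extent correct by hand. Getting either wrong is a common source of the bugs that the "Cons" column refers to.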

Pricing or License

Pricing and licensing details are not explicitly provided in the source material.

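References

- NVIDIA Dev Blog: Less Coding, More Science: Simplify Ocean Modeling on GPUs With OpenACC and Unified Memory. https://developer.nvidia.com/blog/less-coding-more-science-simplify-ocean-modeling-on-gpus-with-openacc-and-unified-memory/
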