AGI Is Not Multimodal: Embodiment-First Intelligence

Sources: https://thegradient.pub/agi-is-not-multimodal/, The Gradient

Overview

Despite rapid demonstrations of generative capabilities across language and vision, this piece argues that such progress is not a straightforward path to Artificial General Intelligence (AGI). The central claim is that true AGI will not emerge simply from scaling models or gluing together multiple modalities. It requires a form of intelligence that is fundamentally situated in, and grounded by, a physical world. The author contends that modality-centric architectures, in which separate data streams are fused in the hope of producing generality, risk becoming patchworks that fall short on sensorimotor reasoning, motion planning, and social coordination. The proposed direction is embodiment-first: treat interaction with the environment as primary, and regard modality integration as emergent rather than foundational.

The piece opens with a critique of disembodied, purely symbolic definitions of general intelligence. It asserts that a true AGI must be capable across domains rooted in physical reality, such as repairing a car, untying a knot, or cooking a meal, all of which require a grounded world model. From there, it argues that language models (LLMs) do not inherently learn a robust world model; at best they may memorize complex symbolic rules or heuristics that help predict tokens. The author cautions against equating linguistic prowess with genuine understanding: a model's command of syntax can masquerade as semantics, and the gap shows once the model faces real-world, embodied tasks.

A recurring point is the distinction between world modeling and token prediction. Although LLMs can reach human-like performance on certain benchmarks, the evidence does not clearly show that they build high-fidelity models of the physical world. The OthelloGPT example, in which a transformer trained only on sequences of game moves can predict the board state, illustrates why success at sequence prediction over symbolic data does not automatically generalize to the physical world. Many physical tasks resist full representation through symbolic descriptions alone and demand perceptual grounding, causal understanding, and interaction with material reality. In other words, success at next-token prediction should not be taken as a stand-in for environmental understanding or world-modeling capability.

The article's TL;DR emphasizes that major advances often come from careful examination of the structure of intelligence rather than from blind scaling. It cautions against attributing world models to language-trained systems merely because they predict token sequences well. The piece argues for an explicit focus on embodiment and environment interaction as the primary sources of intelligent behavior, with modality fusion treated as an emergent property rather than a prerequisite for generality. Ultimately, it positions embodied cognition and grounded world models as a more promising route to AGI than a predominantly modality-centered, patchwork approach.
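To make the distinction between token prediction and world modeling concrete, here is a deliberately tiny Python sketch. It is a hypothetical illustration, not the author's method and not a model of OthelloGPT. The "world" is a point moving along a line ("R" moves it right, "L" moves it left). A bigram counter stands in for pure next-token statistics, and `world_state` stands in for an explicit world model. All function names and the toy corpus are invented for this example.

```python
# Hypothetical toy: a point moves along a line; "R" = one step right,
# "L" = one step left. The bigram model learns only which token tends to
# follow which; world_state() explicitly tracks the point's position.
from collections import Counter, defaultdict


def train_bigram(sequences):
    """Count token -> next-token transitions (surface statistics only)."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def bigram_predict(counts, context):
    """Predict the next token from the last token of `context` alone."""
    last = context[-1]
    if last not in counts:
        return None
    return counts[last].most_common(1)[0][0]


def world_state(seq, start=0):
    """Integrate the moves into an explicit position on the line."""
    pos = start
    for tok in seq:
        pos += 1 if tok == "R" else -1
    return pos


corpus = ["RRL", "RLR", "RRR", "LRR"]  # hypothetical training sequences
model = train_bigram(corpus)

# Two histories that end in the same token but leave the point in
# different places: the bigram model cannot tell them apart.
for history in ["RRR", "LLR"]:
    print(history, bigram_predict(model, history), world_state(history))
# RRR R 3
# LLR R -1
```

The bigram model gives both histories the same plausible next token even though they leave the point in different positions. Accurate next-token guesses alone therefore say nothing about whether the predictor tracks the underlying state. Real language models are far richer than a bigram counter; the sketch only isolates the logical gap the article describes.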

Key features

- Embodiment-first: Prioritize interaction with the physical world as the core driver of intelligence rather than relying on multimodal integration alone.

- Grounded world models: Seek representations that enable prediction of high-fidelity observations in the real world, not just token sequences.

- Distinction between syntax and semantics: Recognize that syntactic proficiency in language does not automatically confer semantic understanding or real-world grounding.

- Limitations of next-token thinking: Question whether world models are learned through token prediction, or whether memorized symbolic rules drive apparent intelligence.

- Critique of patchwork multimodal systems: Treat modality bridging as emergent rather than foundational, reducing reliance on simply gluing disparate modalities together.

- Sensorimotor and social competencies: Identify essential capabilities such as sensorimotor reasoning, motion planning, and social coordination as core AGI requirements.
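The embodiment-first and grounded-world-model features can be illustrated with a small Python sketch under loudly stated assumptions. The corridor, its walls, the random policy, and the tabular counting model are all hypothetical choices made for illustration; the article itself prescribes no algorithm. The agent is never given a description of the walls. It learns their effect only by acting and observing what happens, and the learned forward model is scored on how well it predicts held-out observations rather than tokens.

```python
# Hypothetical toy: a 1-D corridor with walls. An agent learns a forward
# model of the corridor's "physics" purely from its own interaction data.
import random
from collections import Counter, defaultdict

LOW, HIGH = 0, 4   # assumed corridor bounds (cells 0..4)
ACTIONS = (-1, 1)  # step left or step right


def step(state, action):
    """Toy physics: move by `action`, but the walls clamp the position."""
    return max(LOW, min(HIGH, state + action))


def collect(n_steps, seed):
    """Act randomly and record (state, action, next_state) transitions."""
    rng = random.Random(seed)
    state, data = (LOW + HIGH) // 2, []
    for _ in range(n_steps):
        action = rng.choice(ACTIONS)
        nxt = step(state, action)
        data.append((state, action, nxt))
        state = nxt
    return data


def fit_forward_model(transitions):
    """Tabular forward model: most frequent next state per (state, action)."""
    table = defaultdict(Counter)
    for s, a, s_next in transitions:
        table[(s, a)][s_next] += 1
    return {key: c.most_common(1)[0][0] for key, c in table.items()}


def accuracy(model, transitions):
    """Fraction of transitions whose next state the model predicts."""
    hits = sum(model.get((s, a)) == s_next for s, a, s_next in transitions)
    return hits / len(transitions)


model = fit_forward_model(collect(500, seed=0))
# Pushing right at the right wall: the learned model should report HIGH.
print("push right at the wall ->", model.get((HIGH, 1)))
print("held-out accuracy:", accuracy(model, collect(200, seed=1)))
```

A count table is the simplest possible forward model. It works here only because this world has ten (state, action) pairs and deterministic physics; real embodied settings involve noisy, high-dimensional observations and would need learned function approximators instead.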

Common use cases

- Research direction setting: Guide AI researchers and product teams to reframe goals toward embodied intelligence and environment interaction.

- Robotics and embodied AI: Inform exploration of systems that learn through physical interaction rather than purely symbolic inputs.

- Progress evaluation: Encourage assessment frameworks that benchmark performance on tasks requiring physical grounding, not only cross-modal capabilities.

- Strategic planning for AI policy: Warn against overreliance on scale as a proxy for general intelligence and promote emphasis on real-world grounding.

Setup & installation

```bash
# Fetch the article for offline study
curl -L -o agi_not_multimodal.html https://thegradient.pub/agi-is-not-multimodal/

# Optional: convert to Markdown for offline reading (requires pandoc)
pandoc agi_not_multimodal.html -t gfm -o agi_not_multimodal.md
```

Quick start

```bash
# Minimal runnable example: print a concise synthesis of the article's thesis
python3 - << 'PY'
summary = [
    "AGI progress may be overstated if limited to multimodal patchwork architectures.",
    "True AGI requires embodiment: interaction with the physical world and a grounded world model.",
    "The success of LLMs may stem from memorizing syntactic rules rather than robust world understanding.",
]
print("\n".join(summary))
PY
```

Pros and cons

- Pros
  - Grounds intelligence in embodied interaction, aligning with real-world problem solving.
  - Challenges assumptions that scale and cross-modal fusion suffice for AGI.
  - Encourages explicit consideration of world modeling and environmental dynamics.
- Cons
  - Embodiment-first approaches can be more challenging to implement and evaluate at scale.
  - The article does not provide a concrete, universally applicable roadmap or algorithm; it offers a design philosophy.
  - Transitioning from symbolic, language-based methods to embodied systems may require new data, benchmarks, and tooling.

Alternatives (brief comparisons)

| Approach | Core claim | Potential trade-offs |
|---|---|---|
| Multimodal patchwork AGI | Glue together modalities (vision, language, etc.) to achieve generality | May fail to deliver sensorimotor reasoning, motion planning, and social coordination; can lack grounding |
| Embodiment-first intelligence | Prioritize environment interaction and world modeling as the source of intelligence | Harder to implement; requires embodied data and robust evaluation in physical settings |

Pricing or License

The article states no pricing or licensing information.

References

- AGI Is Not Multimodal. The Gradient. https://thegradient.pub/agi-is-not-multimodal/

More resources

CUDA Toolkit 13.0 for Jetson Thor: Unified Arm Ecosystem and More

Unified CUDA toolkit for Arm on Jetson Thor with full memory coherence, multi-process GPU sharing, OpenRM/dmabuf interoperability, NUMA support, and better tooling across embedded and server-class targets.

Cut Model Deployment Costs While Keeping Performance With GPU Memory Swap

Leverage GPU memory swap (model hot-swapping) to share GPUs across multiple LLMs, reduce idle GPU costs, and improve autoscaling while meeting SLAs.

Fine-Tuning gpt-oss for Accuracy and Performance with Quantization Aware Training

Guide to fine-tuning gpt-oss with SFT + QAT to recover FP4 accuracy while preserving efficiency, including upcasting to BF16, MXFP4, NVFP4, and deployment with TensorRT-LLM.

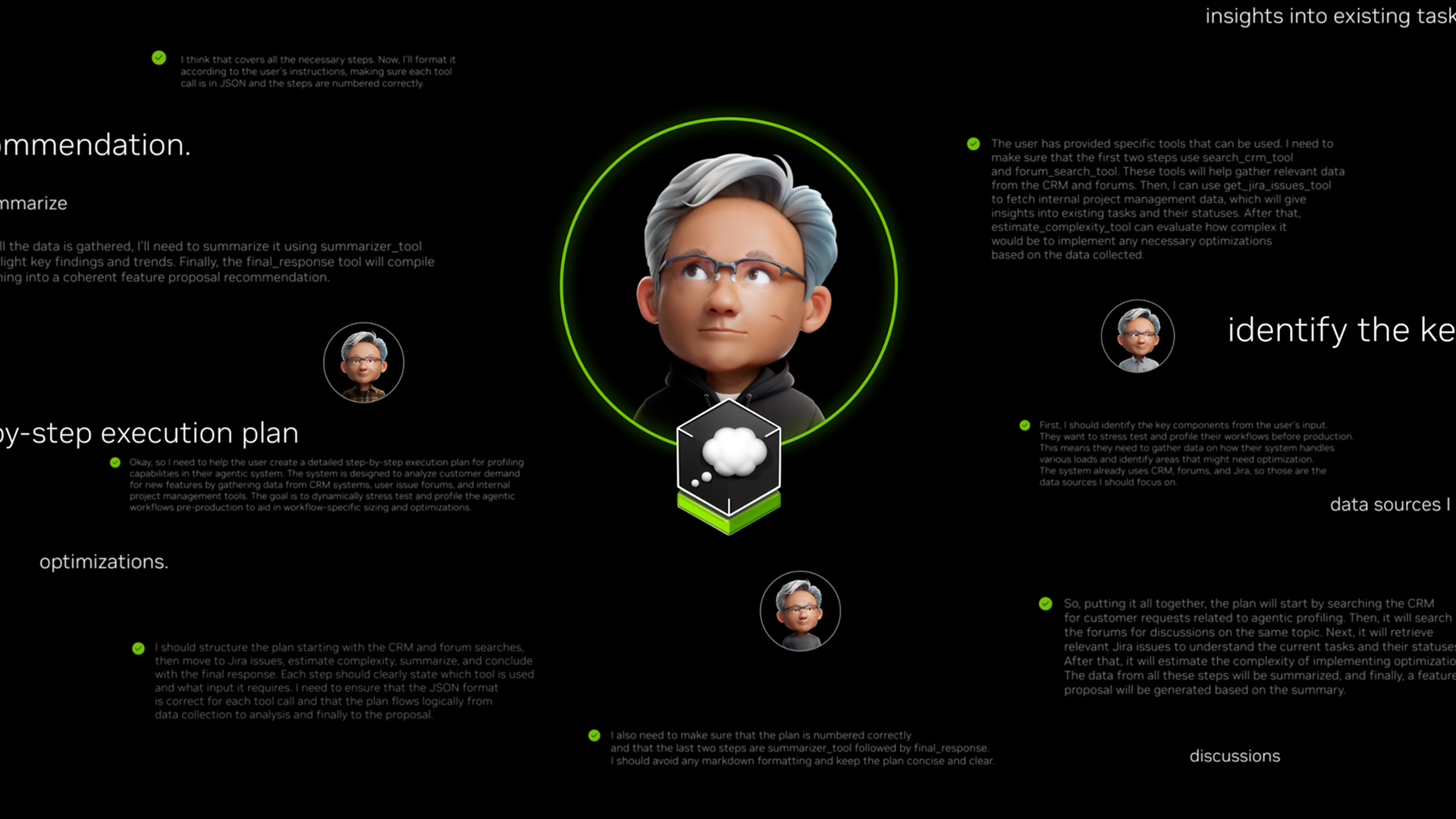

How Small Language Models Are Key to Scalable Agentic AI

Explores how small language models enable cost-effective, flexible agentic AI alongside LLMs, with NVIDIA NeMo and Nemotron Nano 2.

Getting Started with NVIDIA Isaac for Healthcare Using the Telesurgery Workflow

A production-ready, modular telesurgery workflow from NVIDIA Isaac for Healthcare unifies simulation and clinical deployment across a low-latency, three-computer architecture. It covers video/sensor streaming, robot control, haptics, and simulation to support training and remote procedures.

How to Scale Your LangGraph Agents in Production From a Single User to 1,000 Coworkers

Guidance on deploying and scaling LangGraph-based agents in production using the NeMo Agent Toolkit, load testing, and phased rollout for hundreds to thousands of users.