Anthology: Conditioning LLMs with Rich Backstories to Create Virtual Personas

Source: BAIR Blog, http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm/

Overview

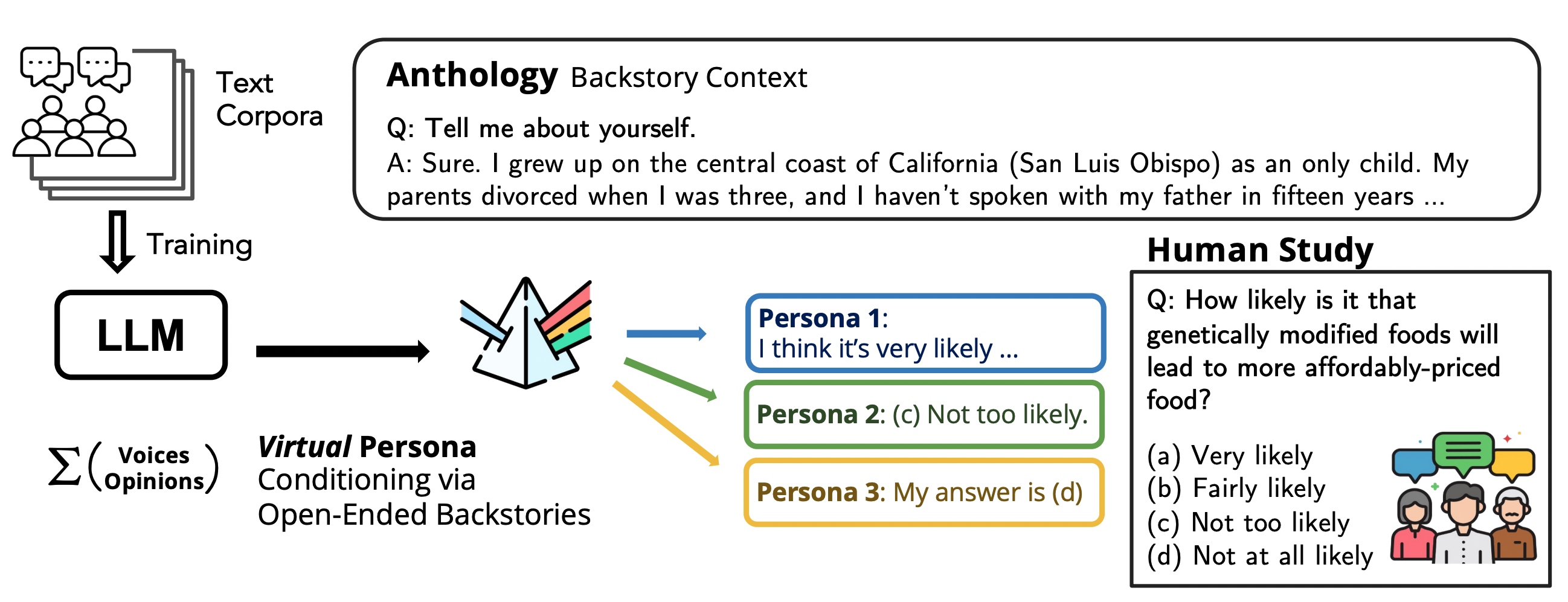

Anthology is a method for conditioning large language models (LLMs) to act as representative, consistent, and diverse virtual personas by supplying richly detailed life narratives as context. It builds on the observation that modern LLMs, given appropriate textual context, can behave as agent models and reflect the characteristics of a particular voice. By grounding responses in a single, coherent backstory rather than a mixture of voices, Anthology aims to approximate individual human samples with higher fidelity. The backstories carry explicit and implicit markers of personal identity (demographic traits, cultural background, values, experiences).

A key practical idea is to generate backstories at scale, using the LLMs themselves to produce diverse narratives that cover a wide range of demographics. Each virtual persona conditioned on a backstory is then evaluated by matching it against real-world survey samples and comparing responses. In the reported work, the authors compare virtual personas against Pew Research Center American Trends Panel (ATP) surveys, Waves 34, 92, and 99. The metrics include the average Wasserstein distance, and a lower bound for each metric is derived from random splits of the human population. The approach is demonstrated with multiple model backends, notably Llama-3-70B and Mixtral-8x22B.

Anthology emphasizes that rich backstories lead to more nuanced responses than baselines that rely on simple demographic prompts (e.g., “I am a 25-year-old from California…”). The authors also discuss practical matching methods, including greedy matching and one-to-one maximum weight matching, and note that the choice of matching algorithm can affect demographic similarity in the matched pairs.

Beyond performance gains, Anthology envisions broad applicability for user research, public opinion surveys, and other social science endeavors, offering a scalable and ethically conscious alternative to traditional human surveys. At the same time, it acknowledges risks such as amplified biases and privacy concerns, urging cautious interpretation and responsible use. Looking ahead, the work points to expanding backstory diversity, enabling free-form responses, and exploring longer-term effects by allowing virtual personas to model changes over time. Learn more about the work via the referenced blog post and linked full paper.
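To make the Wasserstein-distance comparison concrete, here is a minimal sketch for a single multiple-choice question. It assumes answer options are ordered and coded as integers 0..k-1 with unit spacing, which is an illustrative choice and not necessarily the normalization used by the authors. The function names and sample answers are hypothetical.

```python
from collections import Counter
from itertools import accumulate


def response_distribution(responses, num_options):
    """Empirical probability of each answer option (coded 0..num_options-1)."""
    counts = Counter(responses)
    total = len(responses)
    return [counts.get(k, 0) / total for k in range(num_options)]


def wasserstein_1d(p, q):
    """1-Wasserstein distance between two distributions over ordered options.

    Assumes unit spacing between adjacent options, in which case the distance
    equals the sum of absolute differences between the two CDFs.
    """
    cdf_p = list(accumulate(p))
    cdf_q = list(accumulate(q))
    return sum(abs(a - b) for a, b in zip(cdf_p, cdf_q))


# Hypothetical answers to one 4-option question (not real survey data).
human_answers = [0, 0, 1, 2, 2, 2, 3, 3]
persona_answers = [0, 1, 1, 2, 2, 3, 3, 3]

distance = wasserstein_1d(
    response_distribution(human_answers, 4),
    response_distribution(persona_answers, 4),
)
print(distance)
```

Averaging this per-question distance over all questions in a wave would give one plausible form of an average Wasserstein distance; smaller values mean the persona responses track the human responses more closely.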

Key features

- Rich, naturalistic backstories as conditioning context for LLMs.

- Backstories encode demographic attributes, cultural background, values, and life experiences.

- Backstories can be generated by LLMs themselves to produce large, diverse datasets.

- Conditioning enables approximation of individual human samples, not just population-level summaries.

- Evaluation against real survey data (Pew ATP Waves 34, 92, 99) using multiple metrics, including Wasserstein distance.

- Demonstrated improvements over baselines in LLM backends such as Llama-3-70B and Mixtral-8x22B.

- Discussion of matching strategies (greedy vs maximum weight matching) and their impact on demographic alignment.

- Potential applications in user research, public opinion surveys, and social science, with ethical considerations.

- Acknowledgement of risks (bias amplification, privacy concerns) and a call for cautious use.

- Identified future directions: broader backstory diversity, open-ended responses, and longer-term dynamic studies.
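The lower bound derived from random population splits can be sketched as follows: split the human respondents into two random halves, measure the distance between the halves, and average over many splits. This is a hedged illustration under assumptions; the number of splits, the seed, the unit-spaced option coding, and all function names are hypothetical and not taken from the source.

```python
import random
from collections import Counter
from itertools import accumulate


def wasserstein_1d(xs, ys, num_options):
    """1-Wasserstein distance between two answer samples (options 0..k-1)."""
    def cdf(samples):
        counts = Counter(samples)
        probs = [counts.get(k, 0) / len(samples) for k in range(num_options)]
        return list(accumulate(probs))
    return sum(abs(a - b) for a, b in zip(cdf(xs), cdf(ys)))


def split_lower_bound(human_responses, num_options, num_splits=200, seed=0):
    """Average distance between two random halves of the human sample.

    A virtual population can hardly be expected to match humans better than
    humans match themselves, so this value serves as a reference floor.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    distances = []
    for _ in range(num_splits):
        shuffled = list(human_responses)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        first, second = shuffled[:half], shuffled[half:2 * half]
        distances.append(wasserstein_1d(first, second, num_options))
    return sum(distances) / len(distances)


# Hypothetical answers to one 4-option question (not real survey data).
answers = [0, 1, 1, 2, 2, 2, 3, 3, 1, 0]
print(split_lower_bound(answers, num_options=4))
```

A persona-to-human distance close to this floor would suggest that the virtual sample is about as similar to the human sample as two halves of the human sample are to each other.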

Common use cases

- User research and product studies using virtual personas to pilot-test surveys, questionnaires, or interview prompts before engaging real participants.

- Public opinion research and social science experiments where scalable, cost-effective pilot data is valuable.

- Ethically grounded pilot studies that align with Belmont principles of justice and beneficence by using virtual subjects instead of real participants in early-stage research.

- Explorations of longer-term effects by simulating how personas evolve in response to stimuli or over time.

- Methodological investigations into how backstory richness influences responses and the fidelity of modeled agents.

Setup & installation (exact commands)

Setup details are not provided in the source.

Quick start (minimal runnable example)

Note: This section outlines a conceptual workflow based on Anthology’s approach. It is a high-level illustration rather than a ready-to-run script.

- Generate backstories for a broad demographic range.

  - Prompt the model with an unrestricted request such as “Tell me about yourself.” to obtain richly detailed life narratives that include demographics, values, experiences, and social context.

- Condition the LLM with a backstory to form a virtual persona.

  - Use the backstory as conditioning context embedded in a system prompt, for example: “You are a person with the following backstory: [BACKSTORY_TEXT]. Answer questions as this persona would respond.” Then pose survey-style questions.

- Collect responses from the persona and compare to real survey samples.

  - For each backstory-conditioned persona, capture answers to a fixed set of questions and prepare them for comparison with Pew ATP Wave responses.

- Evaluate fidelity against human samples.

  - Compute metrics such as distributional similarity and Wasserstein distance across waves, and consider demographic alignment using a one-to-one matching approach.

- Compare conditioning methods.

  - Contrast rich backstory conditioning with simple demographic prompts, noting the reported improvements in fidelity and the potential trade-offs of matching strategies.

- Iterate and expand.

  - Increase backstory diversity, explore free-form responses, and consider longer-term effects with evolving narratives.
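The two matching strategies mentioned in the workflow can be contrasted with a small sketch. It assumes a matrix of demographic-similarity weights (rows are human respondents, columns are virtual personas), that greedy matching lets each human take their best persona with reuse allowed, and that maximum weight matching enforces one-to-one pairs. The weights are invented, and the brute-force search is only practical for tiny inputs; in practice a solver such as `scipy.optimize.linear_sum_assignment` with `maximize=True` would be a more realistic choice.

```python
from itertools import permutations


def greedy_matching(weights):
    """Each human (row) independently takes the highest-weight persona.

    Personas may be assigned to more than one human.
    """
    return [max(range(len(row)), key=row.__getitem__) for row in weights]


def max_weight_matching(weights):
    """One-to-one assignment maximizing total weight (brute force).

    Requires at least as many personas (columns) as humans (rows).
    """
    n_humans, n_personas = len(weights), len(weights[0])
    best = max(
        permutations(range(n_personas), n_humans),
        key=lambda assign: sum(weights[h][p] for h, p in enumerate(assign)),
    )
    return list(best)


def total_weight(weights, assignment):
    return sum(weights[h][p] for h, p in enumerate(assignment))


# Hypothetical similarity weights: 3 humans x 3 personas.
weights = [
    [0.90, 0.80, 0.10],
    [0.85, 0.20, 0.30],
    [0.40, 0.70, 0.60],
]

greedy = greedy_matching(weights)
one_to_one = max_weight_matching(weights)
print(greedy, total_weight(weights, greedy))
print(one_to_one, total_weight(weights, one_to_one))
```

In this toy example two humans share persona 0 under greedy matching, so the greedy total weight is higher, while the one-to-one constraint forces some humans onto less similar personas. That is one way the choice of matching algorithm can change demographic similarity in the matched pairs.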

Quick run example (illustrative pseudocode)

# Pseudocode (illustrative)

backstory = "I am a 34-year-old from the Midwest with a college degree. I value fairness and community, and I worked as a teacher."

system_prompt = f"You are a person with the following backstory: {backstory}"

user_prompt = "Please answer the following survey questions: Do you support policy X? Why or why not?"

response = llm_call(system_prompt=system_prompt, user_prompt=user_prompt)

This pseudocode demonstrates the core idea: provide a richly detailed backstory as the conditioning context and then query the model to generate persona-specific responses.
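A runnable variant of the same idea is sketched below. Because the source does not name a client library, `llm_call` is passed in as a plain callable and a stub stands in for a real model; `build_persona_prompt`, `build_demographic_prompt`, `ask_persona`, and `stub_llm` are hypothetical names introduced only for illustration.

```python
from typing import Callable

LLMCall = Callable[..., str]


def build_persona_prompt(backstory: str) -> str:
    """System prompt that conditions the model on a full backstory."""
    return (
        "You are a person with the following backstory: "
        f"{backstory} Answer questions as this persona would respond."
    )


def build_demographic_prompt(age: int, region: str) -> str:
    """Baseline prompt that uses only simple demographic attributes."""
    return f"I am a {age}-year-old from {region}."


def ask_persona(llm_call: LLMCall, system_prompt: str, question: str) -> str:
    """Send one survey question to the model under the given conditioning."""
    return llm_call(system_prompt=system_prompt, user_prompt=question)


def stub_llm(system_prompt: str, user_prompt: str) -> str:
    """Stand-in for a real model call; replace with an actual client."""
    return f"[stub reply to: {user_prompt}]"


backstory = (
    "I am a 34-year-old from the Midwest with a college degree. "
    "I value fairness and community, and I worked as a teacher."
)
question = "Do you support policy X? Why or why not?"

rich_reply = ask_persona(stub_llm, build_persona_prompt(backstory), question)
baseline_reply = ask_persona(stub_llm, build_demographic_prompt(34, "the Midwest"), question)
print(rich_reply)
print(baseline_reply)
```

Keeping the model call injectable makes it straightforward to run the same questions under backstory conditioning and under the demographic baseline, then compare the two sets of answers against survey data.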

Pros and cons

- Pros

  - Higher fidelity to individual responses by grounding in rich backstories.

  - Scales to large, diverse demographics via backstory generation.

  - Potentially reduces cost and ethical complexity of early human surveys by enabling pilot studies with virtual subjects.

  - Applicable to user research, public opinion surveys, and social science contexts.

- Cons

  - Risks of perpetuating biases or privacy concerns if backstories are misused or misinterpreted.

  - Requires careful interpretation of results and acknowledgment of the simulated nature of responses.

  - Effectiveness depends on the quality and breadth of generated backstories and the matching process.

Alternatives (brief comparisons)

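| Approach | Description | Strengths | Limitations |
|---|---|---|---|
| Anthology (rich backstories) | Conditions LLMs on richly detailed life narratives | Higher fidelity to individual responses; scales to diverse demographics | Needs careful handling of biases; privacy considerations |
| Baseline demographic prompts | Conditions LLMs on short demographic statements (e.g., “I am a 25-year-old from California…”) | Simple to construct | Limited fidelity; may miss nuanced identity markers |
| Traditional human surveys | Collect responses from real participants | Real human responses, the reference against which virtual personas are evaluated | Expensive; slower; ethical and logistical constraints |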

Pricing or License

License or pricing details are not specified in the source.

References

- BAIR Blog: http://bair.berkeley.edu/blog/2024/11/12/virutal-persona-llm/

- Notes: The work discusses evaluation against Pew Research ATP surveys Waves 34, 92, and 99 and references broader concepts of conditioning LLMs as agent models; full paper is linked in the post.
