Visual Haystacks (VHs): Benchmark for Visual Multi-Image Reasoning

Sources: http://bair.berkeley.edu/blog/2024/07/20/visual-haystacks/, BAIR Blog

Overview

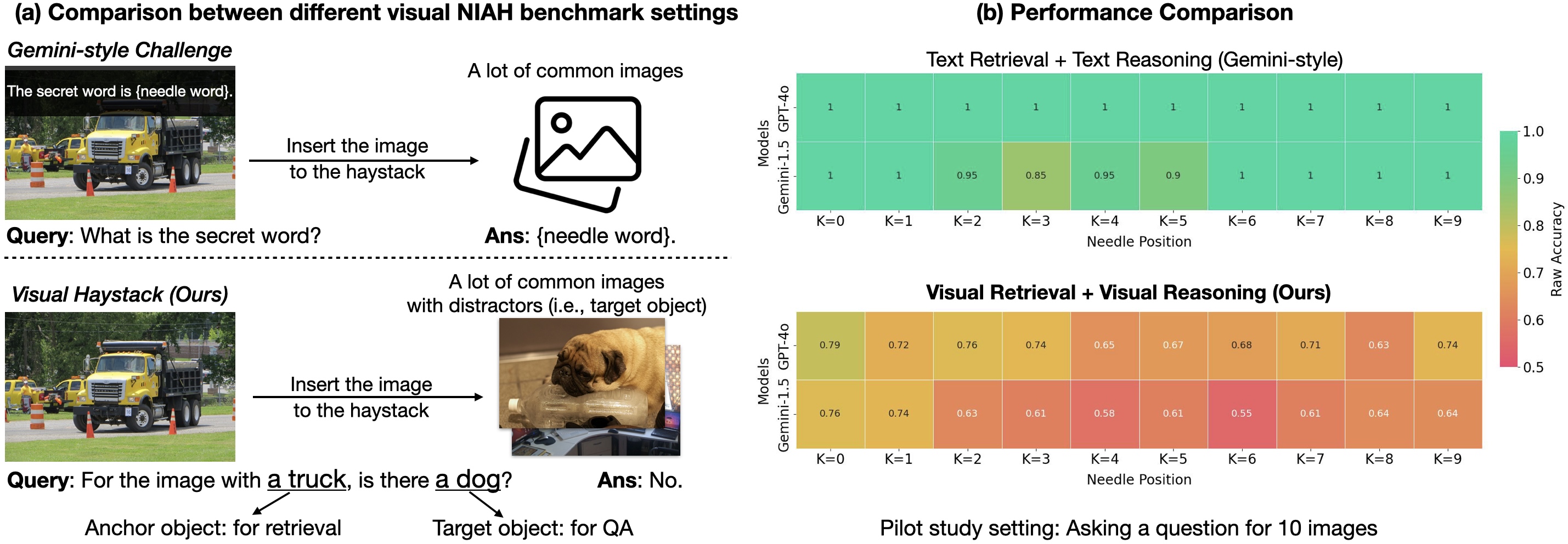

Visual Haystacks (VHs) is the first visual-centric Needle-In-A-Haystack (NIAH) benchmark designed to rigorously evaluate Large Multimodal Models (LMMs) on long-context visual information. It extends Visual Question Answering beyond a single image by testing retrieval and reasoning across large sets of uncorrelated images. The questions ask whether specific visual content (e.g., an object) is present in images drawn from the COCO dataset: roughly 1K binary question–answer pairs are embedded in haystacks containing anywhere from 1 to 10K images. The task is divided into two challenges, Single-Needle and Multi-Needle.

The benchmark exposes three core deficiencies in current LMMs faced with extensive visual inputs: they struggle with visual distractors, have difficulty reasoning across multiple images, and are sensitive to where the needle image appears in the input sequence (the so-called “lost-in-the-middle” effect).

In response, the authors introduce MIRAGE (Multi-Image Retrieval Augmented Generation), an open-source, single-stage training paradigm that extends LLaVA to multi-image question answering (MIQA). MIRAGE compresses visual encodings, uses an in-line retriever to filter out irrelevant images, and augments training with multi-image reasoning data. The resulting model can process haystacks of 1K or 10K images, shows strong recall and precision on multi-image tasks, and outperforms several baselines on many single-needle tasks while remaining competitive on single-image QA.

The work also compares a two-stage baseline (captioning each image with LLaVA, then answering from the captions with Llama3) against end-to-end LMMs. The results suggest that an LLM integrating long-context captions can be effective, whereas existing LMMs often struggle to integrate information across multiple images. Together, VHs and MIRAGE aim to push the field toward robust visual retrieval and reasoning over large image collections.
For more details and data, see the source: https://bair.berkeley.edu/blog/2024/07/20/visual-haystacks/
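To make the task definition concrete, the following is a minimal sketch of how a VHs-style ground-truth answer could be derived from object annotations. It follows the question formats described for VHs: single-needle questions ask "For the image with the anchor object, is there a target object?", while multi-needle questions ask whether all, or any, of the images containing the anchor object also contain the target. Modeling each image as a set of COCO category names, and the function names `needle_images`, `single_needle_answer`, and `multi_needle_answer`, are assumptions for illustration, not the benchmark's code.

```python
from typing import List, Set

# Assumption: each haystack image is modeled as the set of COCO category
# names annotated in it; real VHs items are images, not label sets.
Image = Set[str]


def needle_images(haystack: List[Image], anchor: str) -> List[Image]:
    """Needle images are the ones that contain the anchor object."""
    return [img for img in haystack if anchor in img]


def single_needle_answer(haystack: List[Image], anchor: str, target: str) -> bool:
    """'For the image with the anchor object, is there a target object?'"""
    needles = needle_images(haystack, anchor)
    if len(needles) != 1:
        raise ValueError("a single-needle haystack has exactly one anchor image")
    return target in needles[0]


def multi_needle_answer(haystack: List[Image], anchor: str, target: str,
                        mode: str) -> bool:
    """Do all (mode='all') or any (mode='any') anchor images contain the target?"""
    hits = [target in img for img in needle_images(haystack, anchor)]
    if not hits:
        raise ValueError("no anchor images in the haystack")
    if mode == "all":
        return all(hits)
    if mode == "any":
        return any(hits)
    raise ValueError(f"unknown mode: {mode!r}")


# Toy haystack: the first two images contain the anchor object "dog".
haystack = [{"dog", "frisbee"}, {"dog", "car"}, {"cat"}, {"bus", "person"}]
print(multi_needle_answer(haystack, "dog", "frisbee", mode="any"))  # True
print(multi_needle_answer(haystack, "dog", "frisbee", mode="all"))  # False
```

Every answer is binary, so a model that guesses at random scores about 50% on a balanced question set.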

Key features

- Long-context visual reasoning across large, uncorrelated image sets (1K QA pairs; haystacks from 1 to 10K images).

- Two challenges: Single-Needle (one needle image) and Multi-Needle (2–5 needles).

- Visual content detection tasks drawn from COCO annotations (e.g., presence of a target object).

- Open-source MIRAGE paradigm (Multi-Image Retrieval Augmented Generation) built on top of LLaVA to handle MIQA.

- Query-aware compression that reduces visual encoder tokens by about 10x, enabling larger haystacks within the same context length.

- In-line retriever, trained jointly with the LLM during fine-tuning, that dynamically filters out irrelevant images.

- Multi-image training data augmentation (including synthetic multi-image reasoning data).

- MIRAGE achieves state-of-the-art performance on most single-needle tasks and demonstrates strong recall and precision on multi-image tasks, outperforming strong baselines such as GPT-4, Gemini-v1.5, and the Large World Model (LWM) in many settings.

- The co-trained retriever outperforms CLIP in this MIQA setting, suggesting that generic retrievers such as CLIP, though effective for open-vocabulary image retrieval, may underperform when queries are phrased as questions rather than captions.

- A simple, open-source single-stage training paradigm designed to address core MIQA challenges: distractors, long contexts, and cross-image reasoning.

- Identifies a “needle position” effect: performance varies significantly with where the needle image sits in the input sequence (up to a ~26.5% drop for open-source LMMs when the needle is not placed immediately before the question, and up to ~28.5% for proprietary models when it is not placed at the very start).
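The effect of a roughly 10x token reduction on haystack capacity comes down to simple arithmetic. The sketch below assumes 576 visual tokens per image (the 24 x 24 patch grid produced by LLaVA-1.5's CLIP ViT-L/14 encoder at 336 px), an illustrative 4,096-token context window, and 100 tokens reserved for the question and answer. These numbers are assumptions for illustration, not MIRAGE's actual configuration, and the helper names are hypothetical.

```python
import math


def compressed_tokens(tokens_per_image: int, ratio: float) -> int:
    """Tokens left per image after compressing by `ratio` (rounded up)."""
    return math.ceil(tokens_per_image / ratio)


def images_that_fit(context_len: int, tokens_per_image: int,
                    reserved_tokens: int) -> int:
    """Number of whole images whose visual tokens fit in the remaining context."""
    budget = context_len - reserved_tokens
    if budget <= 0:
        return 0
    return budget // tokens_per_image


CONTEXT_LEN = 4096  # assumed context window
RESERVED = 100      # assumed tokens for the question and answer
RAW_TOKENS = 576    # 24 x 24 patches from CLIP ViT-L/14 at 336 px

per_image = compressed_tokens(RAW_TOKENS, ratio=10)        # 58 tokens
print(images_that_fit(CONTEXT_LEN, RAW_TOKENS, RESERVED))  # 6
print(images_that_fit(CONTEXT_LEN, per_image, RESERVED))   # 68
```

Under these assumptions, compression raises capacity from 6 to 68 images, still far short of a 10K-image haystack. This is consistent with the source pairing compression with an in-line retriever that removes irrelevant images before they reach the LLM.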

Common use cases

- Analyzing patterns across large medical image collections (e.g., finding instances of a particular finding across many images).

- Monitoring deforestation or ecological changes via satellite imagery over time.

- Mapping urban changes using data from autonomous navigation and vehicle sensors.

- Examining large art collections for specific visual motifs or objects.

- Understanding consumer behavior from large-scale retail surveillance imagery.

Setup & installation

Setup and installation details are not provided in the source material.

Quick start

Note: The source describes a conceptual workflow rather than a ready-to-run recipe. A high-level outline based on the described approach is:

- Assemble a haystack: gather a large, uncorrelated image collection (VHs haystacks range from 1 to 10K images) with COCO-derived annotations for target objects.

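- Prepare a query: choose an anchor object, which identifies the needle image(s), and a target object. Single-needle questions take the form “For the image with the anchor object, is there a target object?”; multi-needle questions ask whether all, or any, of the images containing the anchor object also contain the target object.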

- Use MIRAGE as the single-stage pipeline: fine-tune the LMM with multi-image training data, then at inference apply query-aware compression to reduce encoding tokens and use the in-line retriever to filter out irrelevant images before answering.

- Run evaluation: assess single-needle and multi-needle tasks, comparing against baselines such as captioning+LLM or other LMMs.
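The filter-then-answer step in the outline above can be sketched as a relevance-scoring pass followed by a top-k cut. This is a toy stand-in, not MIRAGE's retriever: the source trains its retriever in-line with LLM fine-tuning over visual features, whereas here `relevance_score` is a hypothetical placeholder (keyword overlap between the question and per-image tags) chosen only so the example runs. `HaystackImage`, `top_k`, and `threshold` are likewise illustrative names.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class HaystackImage:
    image_id: str
    tags: Set[str] = field(default_factory=set)  # stand-in for visual content


def relevance_score(question: str, image: HaystackImage) -> float:
    """Hypothetical scorer: fraction of an image's tags mentioned in the question.

    MIRAGE's retriever is learned; keyword overlap is used here only so the
    sketch runs without model weights.
    """
    words = set(re.findall(r"[a-z]+", question.lower()))
    if not image.tags:
        return 0.0
    return len(words & image.tags) / len(image.tags)


def retrieve(question: str, haystack: List[HaystackImage], top_k: int = 2,
             threshold: float = 0.0) -> List[HaystackImage]:
    """Keep at most top_k images scoring above threshold, highest score first."""
    scored = [(relevance_score(question, img), img) for img in haystack]
    kept = [(score, img) for score, img in scored if score > threshold]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [img for _, img in kept[:top_k]]


haystack = [
    HaystackImage("a", {"dog", "frisbee"}),
    HaystackImage("b", {"bus"}),
    HaystackImage("c", {"dog", "car", "tree"}),
]
question = "For the image with a dog, is there a frisbee?"
print([img.image_id for img in retrieve(question, haystack)])  # ['a', 'c']
```

Only the retained images would then be passed to the answering model. In this sketch, `top_k` caps the number of images the LLM sees regardless of haystack size.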

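```python
# Pseudo-workflow (illustrative; not an executable script).
# load_haystack, create_binary_qa, and MIRAGE are hypothetical placeholders,
# not a published API.
images = load_haystack(source="COCO", num_images=1_000)  # haystacks span 1 to 10K images
qa = create_binary_qa(anchor_object, target_object)      # yes/no question
model = MIRAGE()
answer = model.answer(images, qa)
print(answer)
```

Pros and cons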

- Pros:

  - Enables long-context reasoning across thousands of potentially unrelated images.

  - Compressed encodings allow larger haystacks within context limits.

  - The in-line retriever filters out irrelevant images, improving retrieval quality.

  - Multi-image training data improves generalization to MIQA tasks.

  - MIRAGE performs strongly on single-needle tasks and competitively on multi-needle tasks; its retriever can outperform CLIP in this setting.

- Cons:

  - For many models, accuracy degrades as haystacks grow because of visual distractors.

  - In multi-needle settings with 5 or more images, many LMMs underperform a simple captioning+LLM baseline.

  - Needle-position effects can significantly affect performance (drops of up to ~26.5–28.5% depending on model and position).
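Measuring the needle-position effect requires controlling where the needle lands in the input sequence. Below is a minimal sketch, assuming images are referenced by ID and the question follows the last image, so the final index means "immediately before the question". The helper names `place_needle` and `position_sweep` and the placeholder image IDs are hypothetical, not the benchmark's code.

```python
from typing import List


def place_needle(distractors: List[str], needle: str, position: int) -> List[str]:
    """Return a new haystack with `needle` inserted at index `position`.

    Index 0 is the start of the image sequence; len(distractors) makes the
    needle the last image, i.e. immediately before a question that follows it.
    """
    if not 0 <= position <= len(distractors):
        raise ValueError("position out of range")
    haystack = list(distractors)  # copy so the caller's list is not mutated
    haystack.insert(position, needle)
    return haystack


def position_sweep(distractors: List[str], needle: str,
                   num_points: int) -> List[List[str]]:
    """Haystacks with the needle at evenly spaced positions, start to end."""
    if num_points < 2:
        raise ValueError("need at least two positions (start and end)")
    n = len(distractors)
    positions = sorted({round(i * n / (num_points - 1)) for i in range(num_points)})
    return [place_needle(distractors, needle, p) for p in positions]


distractors = [f"distractor_{i}" for i in range(9)]  # placeholder image IDs
for haystack in position_sweep(distractors, "needle", num_points=3):
    print(haystack.index("needle"))  # prints 0, then 4, then 9
```

Running the same question over each haystack in a sweep and comparing accuracy by position is one way to surface the kind of drop described above.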

Alternatives (brief comparisons)

- Traditional single-image VQA: Limited to one image; does not address multi-image retrieval or long-context reasoning.

- Text-only NIAH or Gemini-style benchmarks: Focus on textual retrieval or reasoning; VHs extends to multi-image visual data and cross-image reasoning.

- Captioning baseline (LLaVA + Llama3): Uses a two-stage approach (image captioning followed by a text-based QA model); can outperform some end-to-end LMMs on certain multi-image tasks but may fall short when visual cues are essential.

| Approach | Focus | Strengths | Weaknesses |
|---|---|---|---|
| MIRAGE (proposed alongside VHs) | Multi-image QA with retrieval augmentation | Strong recall/precision on MIQA; open-source; outperforms several baselines on many single-needle tasks | Requires image data preparation and MIQA-specific fine-tuning; needle-position effects observed |
| Captioning + LLM (two-stage) | Text-based QA from image captions | Robust when captions capture the essential information | May underuse visual detail; may fail when visual nuance is critical |
| End-to-end LMMs (e.g., GPT-4o, Gemini-v1.5) | Direct visual QA over multiple images | Potentially strong in some settings | Weak results in 5+ image multi-needle settings in this work; context and payload limits can constrain performance |
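The two-stage captioning baseline can be sketched as follows: caption each image independently, then pass all captions plus the question to a text-only LLM. Here `caption_fn` and `llm_fn` are hypothetical callables standing in for LLaVA and Llama3, and the prompt wording is an assumption, not the prompt used in the source.

```python
from typing import Callable, List


def build_caption_prompt(captions: List[str], question: str) -> str:
    """Number each caption so the LLM can refer to individual images."""
    lines = [f"Image {i + 1}: {c}" for i, c in enumerate(captions)]
    lines.append(f"Question: {question} Answer yes or no.")
    return "\n".join(lines)


def two_stage_answer(images: List[str], question: str,
                     caption_fn: Callable[[str], str],
                     llm_fn: Callable[[str], str]) -> str:
    captions = [caption_fn(img) for img in images]  # stage 1: image -> text
    prompt = build_caption_prompt(captions, question)
    return llm_fn(prompt)                           # stage 2: text-only QA


# Toy stand-ins so the sketch runs end to end (no real models involved).
fake_captions = {"img1": "A dog catching a frisbee.", "img2": "A red bus on a street."}
answer = two_stage_answer(
    ["img1", "img2"],
    "For the image with a dog, is there a frisbee?",
    caption_fn=fake_captions.__getitem__,
    llm_fn=lambda prompt: "yes" if "dog catching a frisbee" in prompt else "no",
)
print(answer)  # yes
```

Because the LLM only sees captions, any visual detail the captioner omits is unavailable at answer time, which matches the weakness listed for this approach.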

Pricing or License

Pricing details are not provided in the source. MIRAGE is described as open-source in the context of the benchmark and approach, but no licensing terms are specified here.

References

- Visual Haystacks: Are We Ready for Multi-Image Reasoning? Launching VHs: The Visual Haystacks Benchmark! BAIR Blog, July 20, 2024. https://bair.berkeley.edu/blog/2024/07/20/visual-haystacks/
