TinyAgent: Edge Function Calling for Small Language Models

Sources: http://bair.berkeley.edu/blog/2024/05/29/tiny-agent, http://bair.berkeley.edu/blog/2024/05/29/tiny-agent/, BAIR Blog

Overview

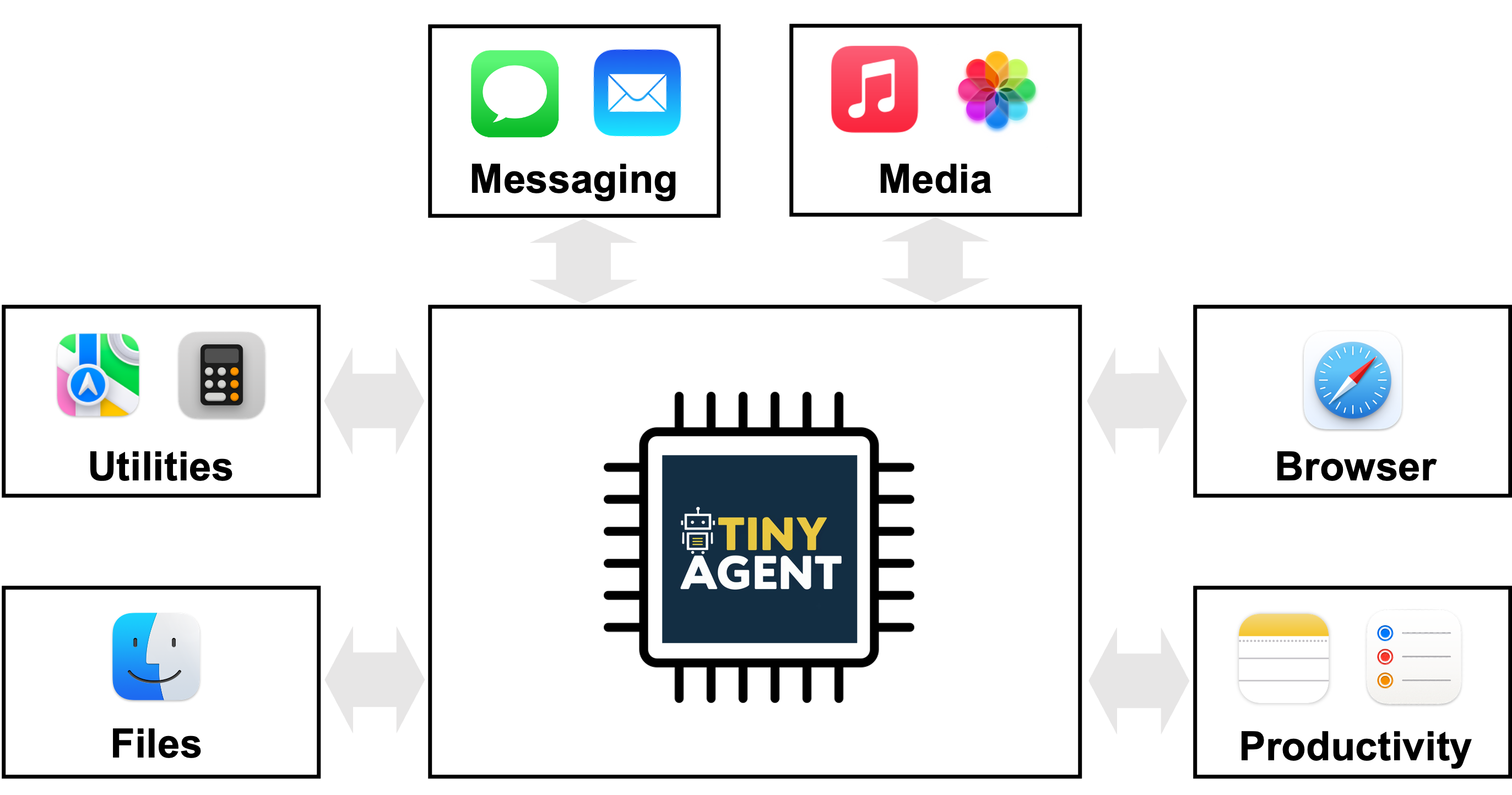

TinyAgent presents a pathway to deploy capable language-model agents on edge devices by focusing on function calling rather than broad world-knowledge memorization. Large models such as GPT-4o or Gemini-1.5 offer strong cloud-based capabilities but incur privacy, connectivity, and latency challenges when deployed at scale on the edge. TinyAgent argues that by training small language models (SLMs) on specialized, high-quality data that emphasizes function calling and tool orchestration, it is possible to achieve robust, real-time edge performance without relying on remote inference. The project centers on the idea that many practical agent tasks boil down to selecting the right sequence of predefined functions with correct inputs and ordering, rather than recalling general information. To enable this, the authors introduce a pipeline built around a function calling planner and a curated dataset that guides SLMs to generate executable plans rather than free-form text. Key to the approach is the LLMCompiler framework, which directs the model to output a function calling plan—identifying which functions to call, the required input arguments, and the interdependencies among calls. After producing the plan, the system parses it and executes the functions in dependency-respecting order. The original research question asks whether smaller, open-source models can be taught to perform reliable function calling with targeted data, thus achieving edge-ready reasoning and orchestration capabilities. The TinyAgent study demonstrates these ideas in a MacOS driving application context. The platform exposes 16 predefined functions that interface with macOS applications via predefined Apple scripts. The model’s task is to compose a correct function calling plan that uses those scripts to accomplish user objectives (for example, creating calendar items without exposing the user to generic Q&A responses). The authors also explore data generation and fine-tuning strategies to close the gap between small models and large, cloud-based baselines. Synthetic data is created with a capable model (for example GPT-4-Turbo) to produce realistic user queries, function calling plans, and input arguments, followed by sanity checks to ensure the resulting plans form valid graphs. The study reports the construction of 80k training examples, 1k validation examples, and 1k test examples, with an overall data-generation cost of about $500. A DAG-based evaluation metric checks plan isomorphism to ensure the generated plan aligns structurally with the ground-truth plan. The TinyAgent-1B model, paired with Whisper-v3 for on-device speech, is demonstrated on a MacBook M3 Pro. The framework is open source and available at the project’s GitHub repository. In short, TinyAgent advances a targeted, edge-friendly path to agentic AI: it prioritizes accurate function calling over world-knowledge memorization, uses an explicit function calling planner to manage tool orchestration, and demonstrates end-to-end edge deployment with a practical MacOS-driven use case.

Key features

- Small, open-source language models capable of function calling when trained on curated data

- LLMCompiler framework that outputs a function calling plan with functions, inputs, and dependencies

- High-quality synthetic data generation workflow (80k training, 1k validation, 1k test) using a capable LLM, enabling task-specific planning

- Fine-tuning pathway that can improve SLMs’ function calling performance beyond larger clouds-based baselines in this niche

- Tool RAG method proposed to further improve efficiency and performance

- Edge deployment focus: on-device inference for privacy-preserving, low-latency operation

- 16 predefined macOS functions interface with Apple scripts for local tool orchestration

- Demo setup with TinyAgent-1B and Whisper-v3 running locally on a MacBook M3 Pro

- Open source availability at the official repository

Common use cases

- Semantic edge assistants that interpret natural language queries and orchestrate a sequence of local tool calls (e.g., calendar, contacts, email) without exposing data to the cloud

- Siri-like workflows where the agent translates user commands into precise API or script invocations (for example, creating calendar invites with specified attendees) using predefined scripts

- Private, on-device automation scenarios where minimizing data exposure is critical

- Edge-enabled agents that operate in environments with intermittent or no network connectivity while preserving responsiveness

Setup & installation

Setup and installation details are not specified in the provided source. The work is released as open source, and the repository is available at:

- https://github.com/SqueezeAILab/TinyAgent For readers seeking practical steps, please consult the repository for installation instructions, dependencies, and usage examples. The source material emphasizes the architectural approach, data-generation workflow, and edge deployment use case rather than a step-by-step setup guide.

Setup and installation details are not specified in the provided source.

See the repository for instructions: https://github.com/SqueezeAILab/TinyAgentQuick start (minimal runnable example)

The described workflow centers on translating a natural language request into a plan of function calls and then executing those calls in the correct order. A minimal, high-level quick start based on the described approach might look like this (pseudo-workflow):

- A user provides a natural language command (for example, “Create a calendar invite with attendees A and B for the next Tuesday at 3 PM”).

- The LLMCompiler-based planner outputs a function calling plan that lists which functions to invoke, their input arguments (e.g., attendee emails, event title, date/time), and the interdependencies among those calls.

- The system executes each function in order, substituting actual values for placeholders (e.g., $1, $2) as results from prior calls become available.

- The final result is a created calendar item, with the inputs validated against the predefined Apple scripts. Note: The above represents the intended workflow described in the source. It is not a runnable script provided in the material; refer to the TinyAgent repository for concrete implementation details, function definitions, and integration points with macOS.

Pros and cons

- Pros

- Privacy-preserving edge deployment by keeping inference locally on the device

- Reduced dependence on cloud connectivity and potentially lower latency

- Targeted learning through curated, high-quality function-calling data

- Demonstrated capability to surpass a strong baseline in function calling for small models

- Clear path to scalable edge agents via an explicit function orchestration paradigm

- Cons (as reported or implied by the study)

- Off-the-shelf small models exhibit limited function calling without task-specific fine-tuning

- Requires curated, high-quality data and careful data generation to achieve robust plans

- The current results focus on a MacOS-driven driving application with predefined 16 functions, potentially limiting generalizability to other domains or tool sets

- Data-generation costs and process complexity (e.g., sanity checks, plan validation) are non-trivial in practice

Alternatives (brief comparisons)

- Large LLMs with cloud inference (e.g., GPT-4/GPT-4o): provide strong function calling but raise privacy and connectivity concerns; often rely on vast parametric memory and cloud access

- ToolFormer and Gorilla: examples cited as related approaches to enabling tool use via LLMs in agentic settings

- LLaMA-2 70B-based approaches: prior work that considered function calling with large models; TinyAgent explores whether smaller models can reach similar capabilities with curated data and fine-tuning

- Tool RAG: proposed optimization to improve efficiency and performance in the function-calling workflow

Pricing or License

- The framework is described as open source; explicit licensing terms are not provided in the source material. Pricing is not specified.

References

- TinyAgent blog post: http://bair.berkeley.edu/blog/2024/05/29/tiny-agent/

- TinyAgent GitHub repository: https://github.com/SqueezeAILab/TinyAgent

More resources

CUDA Toolkit 13.0 for Jetson Thor: Unified Arm Ecosystem and More

Unified CUDA toolkit for Arm on Jetson Thor with full memory coherence, multi-process GPU sharing, OpenRM/dmabuf interoperability, NUMA support, and better tooling across embedded and server-class targets.

Cut Model Deployment Costs While Keeping Performance With GPU Memory Swap

Leverage GPU memory swap (model hot-swapping) to share GPUs across multiple LLMs, reduce idle GPU costs, and improve autoscaling while meeting SLAs.

Fine-Tuning gpt-oss for Accuracy and Performance with Quantization Aware Training

Guide to fine-tuning gpt-oss with SFT + QAT to recover FP4 accuracy while preserving efficiency, including upcasting to BF16, MXFP4, NVFP4, and deployment with TensorRT-LLM.

Getting Started with NVIDIA Isaac for Healthcare Using the Telesurgery Workflow

A production-ready, modular telesurgery workflow from NVIDIA Isaac for Healthcare unifies simulation and clinical deployment across a low-latency, three-computer architecture. It covers video/sensor streaming, robot control, haptics, and simulation to support training and remote procedures.

NVIDIA Co-Packaged Optics Platform: Architecture and Ecosystem

Overview of NVIDIA's co-packaged optics (CPO) platform, highlighting photonics/electronics integration, Micro Ring Modulator, COUPE-based engines, and ecosystem collaboration with TSMC and partners for high-density, energy-efficient data-center interconnects.

Introducing NVIDIA Jetson Thor: The Ultimate Platform for Physical AI

Jetson Thor combines edge AI compute, MIG virtualization, and multimodal sensors for flexible, real-time robotics at the edge, with FP4/FP8 acceleration and support for Isaac GR00T and large language/vision models.