Financial Market Applications of LLMs — Overview, features and use cases

Sources: https://thegradient.pub/financial-market-applications-of-llms/, The Gradient

Overview

The Gradient article surveys how large language models (LLMs), best known for language tasks, might be repurposed for finance by modeling sequences other than words, such as prices, returns, or trades. LLMs are autoregressive learners: they predict the next element in a sequence from the elements that precede it. Researchers in finance ask whether the same approach can yield price or trade predictions when the tokens are financial data points rather than pieces of text. The article emphasizes that financial data are noisier and more volatile than language, and that market participants can behave in ways that degrade signal quality. Still, it highlights several promising directions where AI concepts may help, even if not all of them are production-ready.

A few technical themes recur. First, context windows matter: modern LLMs can attend over long contexts, which allows analysis across multiple time scales. In finance, this means linking information that evolves over months (fundamental data), days (technical signals such as momentum), and seconds to minutes (microstructure signals such as order-book imbalances).

Second, multi-modal learning and residualization offer structured ways to improve predictions. Multi-modal learning aims to fuse classical time-series data with alternative data sources (sentiment, news articles, Twitter interactions, corporate reports, satellite imagery of ports, and other non-price signals) in a unified model. Residualization parallels finance's factor models: separating the common market component from asset-specific movements lets the model focus on idiosyncratic signals. In neural-network terms, the analogous idea is residual learning: when the target function h(X) is close to the identity map, learning the residual h(X) - X, which is then close to zero, can make training more efficient.

The article also surveys practical AI-centric finance angles beyond simple next-step predictions.
Synthetic data generation is highlighted as a potential benefit: simulated price trajectories that mimic market characteristics could supplement scarce data and support meta-learning or strategy development. Generative models could also be used to sample extreme scenarios, which are rare but valuable for stress testing. There is also a case for AI to support fundamental analysis by helping analysts refine investment theses, uncover inconsistencies in management commentary, and discover latent connections across sectors. A recurring theme is that, although bigger models and more data produce impressive capabilities, the case that these models will dominate quantitative trading is unproven, and the article recommends keeping an open mind as the AI landscape evolves.

One concrete data point in the discussion is a token-scale comparison between language models and financial data. At NeurIPS 2023, Hudson River Trading compared the number of tokens used to train GPT-3 (about 500 billion) with the market data available per year: 3,000 tradable stocks, 10 data points per stock per day, 252 trading days per year, and 23,400 seconds per trading day, yielding roughly 177 billion stock-market tokens per year. (The product 3,000 × 10 × 252 × 23,400 is about 177 billion, so the quoted total is reproduced only if the 10 data points are counted per second rather than per day.) This rough parity in scale underscores a practical hurdle: although the volume of data is enormous, the tokens in finance are prices, returns, and trades rather than words, and their low signal-to-noise ratio makes them far harder to exploit.

The article also discusses broader AI research directions that could inform finance. Multi-modal learning could integrate prices, volumes, sentiment, news, and imagery (e.g., satellite data) to improve predictions. Residualization connects to the finance practice of isolating market-wide movements in order to concentrate on asset-specific signals. Long-context reasoning could reconcile phenomena on different time horizons, such as earnings, momentum, and order-book dynamics, within a single modeling framework.
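
As a minimal sketch of the factor-model residualization described above, the following Python regresses one asset's returns on a market return series and keeps what is left over as the asset-specific signal. The function name `residualize`, the single-factor ordinary-least-squares setup, and the sample numbers are illustrative assumptions and do not come from the article; a real factor model would typically use several factors and actual market data.

```python
from typing import List, Tuple


def residualize(asset: List[float], market: List[float]) -> Tuple[float, float, List[float]]:
    """Regress asset returns on market returns with ordinary least squares.

    Returns (alpha, beta, residuals), where
    residuals[t] = asset[t] - (alpha + beta * market[t]).
    """
    if len(asset) != len(market) or len(asset) < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    n = len(asset)
    mean_a = sum(asset) / n
    mean_m = sum(market) / n
    # Sample covariance and variance share the same 1/(n-1) factor, so it cancels.
    cov = sum((m - mean_m) * (a - mean_a) for a, m in zip(asset, market))
    var = sum((m - mean_m) ** 2 for m in market)
    if var == 0.0:
        raise ValueError("market returns have zero variance")
    beta = cov / var                   # sensitivity to the market factor
    alpha = mean_a - beta * mean_m     # intercept
    residuals = [a - (alpha + beta * m) for a, m in zip(asset, market)]
    return alpha, beta, residuals


# Made-up daily returns for illustration only (not real market data).
market_returns = [0.010, -0.020, 0.015, 0.005, -0.010]
asset_returns = [0.018, -0.031, 0.020, 0.012, -0.019]

alpha, beta, idio = residualize(asset_returns, market_returns)
print(f"beta = {beta:.3f}")
print("idiosyncratic returns:", [round(r, 4) for r in idio])
```

By construction the residuals have zero mean and are uncorrelated with the market series in-sample, which is what lets a downstream model concentrate on the idiosyncratic part.
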
Synthetic data, extreme-event sampling, and the potential for AI to augment fundamental analysis are likewise highlighted as compelling yet non-trivial avenues.

In summary, the article presents a nuanced view: LLM-inspired approaches bring valuable ideas to finance (contextual understanding across time scales, multi-modal data fusion, residual signal extraction, and synthetic data), yet there is no consensus that these methods will surpass traditional quantitative techniques in trading. The overall message is to stay open to unexpected developments while continuing careful experimentation as AI research and financial data evolve.
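
The token-count comparison attributed to Hudson River Trading in the overview can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted there; the constant names are made up for illustration. It evaluates both readings of the "10 data points" figure, because the quoted total of roughly 177 billion is reproduced only under the per-second reading.

```python
# Figures as quoted in the overview (attributed to Hudson River Trading).
TRADABLE_STOCKS = 3_000
DATA_POINTS_PER_STOCK = 10              # quoted as "per day"; both readings are tried below
TRADING_DAYS_PER_YEAR = 252
SECONDS_PER_TRADING_DAY = 23_400        # 6.5 hours * 3,600 seconds
GPT3_TRAINING_TOKENS = 500_000_000_000  # "about 500 billion"

# Reading 1: 10 data points per stock per second.
tokens_if_per_second = (
    TRADABLE_STOCKS
    * DATA_POINTS_PER_STOCK
    * SECONDS_PER_TRADING_DAY
    * TRADING_DAYS_PER_YEAR
)

# Reading 2: 10 data points per stock per day (seconds per day not used).
tokens_if_per_day = TRADABLE_STOCKS * DATA_POINTS_PER_STOCK * TRADING_DAYS_PER_YEAR

print(f"per-second reading: {tokens_if_per_second:,} tokens/year")
print(f"per-day reading:    {tokens_if_per_day:,} tokens/year")
print(
    "years of market data to match GPT-3: "
    f"{GPT3_TRAINING_TOKENS / tokens_if_per_second:.2f}"
)
```

The per-second reading gives 176,904,000,000 tokens per year, about 177 billion, so under these assumptions a little under three years of market data would match the GPT-3 training set in raw token count. The per-day reading gives only 7,560,000.
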

For attribution in academic contexts or books, please cite this work as: https://thegradient.pub/financial-market-applications-of-llms/

More resources

CUDA Toolkit 13.0 for Jetson Thor: Unified Arm Ecosystem and More

Unified CUDA toolkit for Arm on Jetson Thor with full memory coherence, multi-process GPU sharing, OpenRM/dmabuf interoperability, NUMA support, and better tooling across embedded and server-class targets.

Cut Model Deployment Costs While Keeping Performance With GPU Memory Swap

Leverage GPU memory swap (model hot-swapping) to share GPUs across multiple LLMs, reduce idle GPU costs, and improve autoscaling while meeting SLAs.

Fine-Tuning gpt-oss for Accuracy and Performance with Quantization Aware Training

Guide to fine-tuning gpt-oss with SFT + QAT to recover FP4 accuracy while preserving efficiency, including upcasting to BF16, MXFP4, NVFP4, and deployment with TensorRT-LLM.

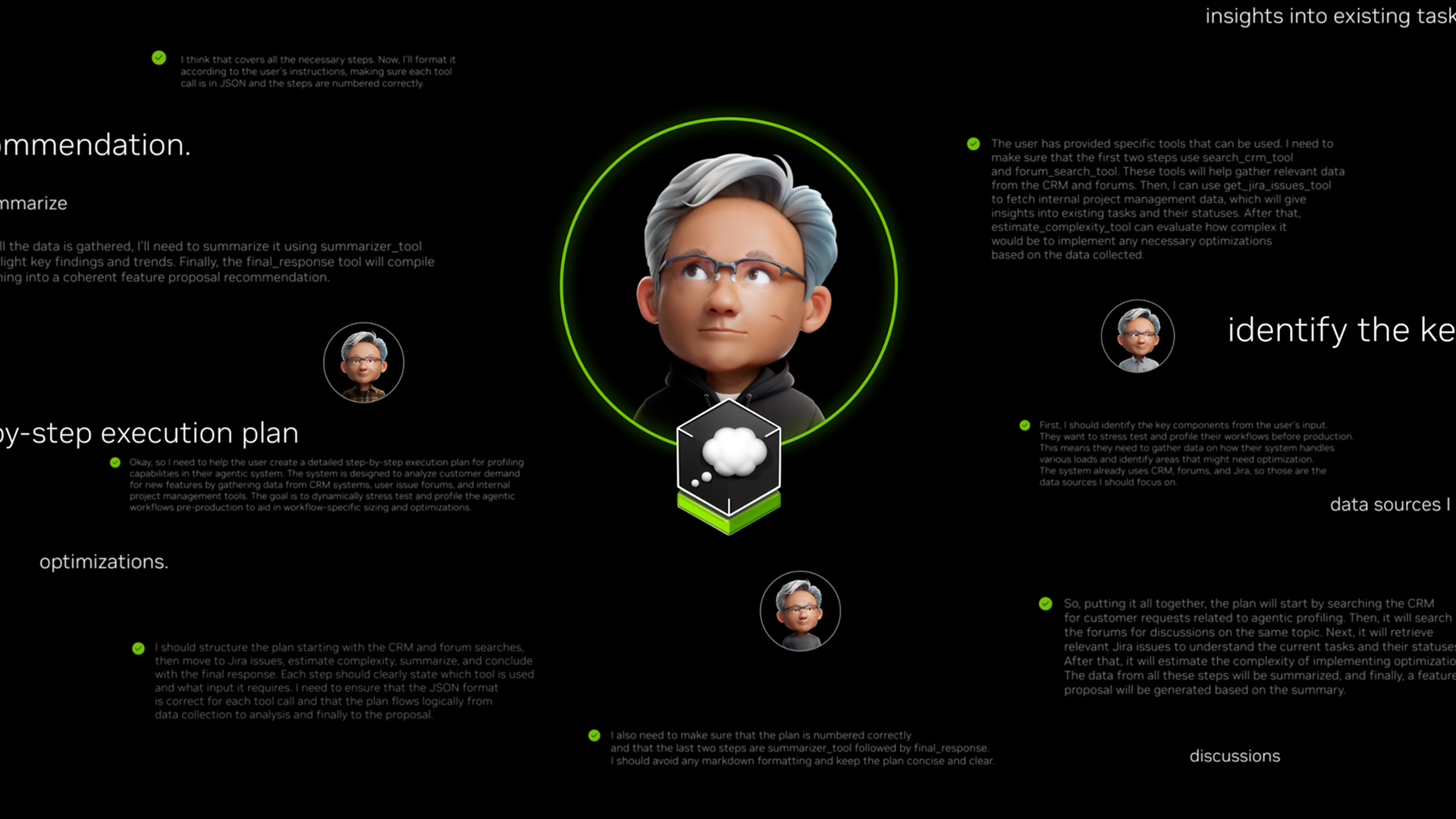

How Small Language Models Are Key to Scalable Agentic AI

Explores how small language models enable cost-effective, flexible agentic AI alongside LLMs, with NVIDIA NeMo and Nemotron Nano 2.

Getting Started with NVIDIA Isaac for Healthcare Using the Telesurgery Workflow

A production-ready, modular telesurgery workflow from NVIDIA Isaac for Healthcare unifies simulation and clinical deployment across a low-latency, three-computer architecture. It covers video/sensor streaming, robot control, haptics, and simulation to support training and remote procedures.

How to Scale Your LangGraph Agents in Production From a Single User to 1,000 Coworkers

Guidance on deploying and scaling LangGraph-based agents in production using the NeMo Agent Toolkit, load testing, and phased rollout for hundreds to thousands of users.