Mamba Explained: State Space Models as a scalable alternative to Transformers

Sources: https://thegradient.pub/mamba-explained/ (The Gradient)

Overview

Mamba is presented as a novel class of models based on State Space Models (SSMs) and positioned as an alternative to Transformers. The core promise is performance and scaling laws similar to Transformers, together with feasible long-context processing (on the order of 1 million tokens). By removing the quadratic bottleneck of the attention mechanism, Mamba aims for fast inference and linear scaling with sequence length, reportedly running up to 5x faster than Transformers in some regimes. The authors, Gu and Dao, describe Mamba as a general sequence model backbone that achieves state-of-the-art results across modalities such as language, audio, and genomics. In language modeling, their Mamba-3B model reportedly outperforms Transformers of the same size and matches Transformers twice its size in both pretraining and downstream evaluation. This document distills how Mamba replaces attention with an SSM for communication while preserving MLP-like projections for computation, and what that means for developers building long-context AI systems (source: https://thegradient.pub/mamba-explained/).

In Transformers, every token can attend to all previous tokens, creating a quadratic bottleneck during training (O(n^2) time) and linear memory growth for the KV cache, with autoregressive generation costing O(n) per token. Techniques like Sliding Window Attention or FlashAttention mitigate this, but long contexts still push hardware limits. Mamba instead uses a Control Theory–inspired State Space Model (SSM) to handle inter-token communication, while keeping traditional linear projections and nonlinearities for computation. This juxtaposition is intended to push the Pareto frontier of effectiveness and efficiency further than traditional RNNs or Transformers. The idea is that a compact hidden state can capture essential dynamics, reducing the need to store and attend to all past tokens.
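The scaling contrast above can be made concrete with some back-of-the-envelope arithmetic. The sketch below is illustrative only: the layer count, widths, and state size are hypothetical values, not figures from the article, and the KV cache formula assumes plain multi-head attention in which keys and values each have total width d_model.

```python
def attention_score_count(seq_len):
    """Causal attention scores each token against itself and every earlier token."""
    return seq_len * (seq_len + 1) // 2


def kv_cache_bytes(seq_len, n_layers, d_model, bytes_per_value=2):
    """Keys and values stored for every past token in every layer (grows with seq_len)."""
    return 2 * seq_len * n_layers * d_model * bytes_per_value


def ssm_state_bytes(n_layers, d_inner, d_state, bytes_per_value=2):
    """One fixed-size hidden state per layer, independent of sequence length."""
    return n_layers * d_inner * d_state * bytes_per_value


# Hypothetical model dimensions, chosen only to show the trend.
N_LAYERS, D_MODEL, D_INNER, D_STATE = 32, 4096, 8192, 16

for n in (1_000, 100_000, 1_000_000):
    print(
        f"n={n:>9,}  scores={attention_score_count(n):.2e}  "
        f"kv_cache={kv_cache_bytes(n, N_LAYERS, D_MODEL) / 2**30:.2f} GiB  "
        f"ssm_state={ssm_state_bytes(N_LAYERS, D_INNER, D_STATE) / 2**20:.2f} MiB"
    )
```

Doubling the sequence length doubles the cache and roughly quadruples the number of attention scores, while the recurrent state stays the same size.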

A core intuition behind Mamba is to model sequence evolution with continuous-time dynamics and then discretize for discrete-time processing. Concretely, the SSM describes the hidden state h(t) evolving under the differential equation h’(t) = A h(t) + B x(t), with an output y(t) = C h(t) + D x(t). To align with discrete-time training and inference, the dynamics are discretized with a step size Δ (also described as a dwell time). A first-order approximation gives the recurrence h_{t+1} ≈ (I + Δ A) h_t + (Δ B) x_t. The Zero-Order Hold (ZOH) rule that Mamba uses, which treats the input as constant over each step, gives the exact counterpart h_{t+1} = Ā h_t + B̄ x_t with Ā = exp(Δ A) and B̄ = (Δ A)^{-1} (exp(Δ A) − I) Δ B, and it reduces to the first-order form when Δ is small. This framing helps interpret the A, B, C, D matrices and gives a compact, local mechanism for stateful communication between tokens without forming a full quadratic attention matrix. In other words, the Mamba block replaces the attention step with a principled dynamical system while retaining feedforward-like computation.
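As a minimal sketch of the discretization step, the code below assumes a diagonal A (so the matrix exponential becomes an elementwise exponential) and a scalar input per step. The function names are hypothetical and this is not the reference implementation. It compares the Zero-Order Hold rule with the first-order approximation and runs the resulting recurrence, reading the output from the state after each update (an indexing choice assumed here).

```python
import math


def discretize_zoh(a, b, delta):
    """Zero-Order Hold for diagonal A: a_bar = exp(delta*a), b_bar = (a_bar - 1) / a * b."""
    a_bar = [math.exp(delta * a_i) for a_i in a]
    b_bar = [(ab_i - 1.0) / a_i * b_i for ab_i, a_i, b_i in zip(a_bar, a, b)]
    return a_bar, b_bar


def discretize_first_order(a, b, delta):
    """First-order approximation: a_bar = 1 + delta*a, b_bar = delta*b."""
    return [1.0 + delta * a_i for a_i in a], [delta * b_i for b_i in b]


def run_ssm(xs, a_bar, b_bar, c, d=0.0):
    """Apply h <- a_bar*h + b_bar*x elementwise, then emit y = c.h + d*x."""
    h = [0.0] * len(a_bar)
    ys = []
    for x in xs:
        h = [ab_i * h_i + bb_i * x for ab_i, bb_i, h_i in zip(a_bar, b_bar, h)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, h)) + d * x)
    return ys


# Illustrative parameters: two decaying state dimensions (negative entries of A).
A_DIAG, B_VEC, C_VEC = [-1.0, -2.0], [1.0, 1.0], [1.0, 1.0]

a_zoh, b_zoh = discretize_zoh(A_DIAG, B_VEC, delta=0.01)
a_fo, b_fo = discretize_first_order(A_DIAG, B_VEC, delta=0.01)
print("ZOH:        ", a_zoh, b_zoh)
print("first-order:", a_fo, b_fo)
print("last output for a constant input:", run_ssm([1.0] * 3000, a_zoh, b_zoh, C_VEC)[-1])
```

For a small Δ the two rules nearly coincide. Under a constant input the ZOH recurrence settles at the steady state of the continuous system, which for each dimension is −b/a.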

The resulting architecture stacks Mamba blocks, analogous to Transformer blocks, forming a deep sequence model. The Computation path (MLP-like) relies on standard linear projections, nonlinearities, and local convolutions, while Communication is governed by the SSM. The analogy used in the article highlights how a small, evolving state can capture much of the surrounding dynamics: by observing the top portion of a running sequence and applying known state dynamics, one can infer the rest without reprocessing every token in every step. This perspective underpins the claim that Mamba can process very long sequences with favorable efficiency characteristics while preserving accuracy.
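The split between computation and communication can be sketched as a toy block in NumPy. Everything here is an assumption made for illustration: the parameter names, the gated layout, the residual connection, and the fixed (already discretized) diagonal SSM parameters. In Mamba itself the SSM parameters depend on the input, which this sketch leaves out.

```python
import numpy as np


def silu(x):
    return x / (1.0 + np.exp(-x))


def causal_depthwise_conv(x, kernel):
    """x: (L, D), kernel: (K, D). Each channel mixes the current step with the K-1 before it."""
    k = kernel.shape[0]
    padded = np.vstack([np.zeros((k - 1, x.shape[1])), x])
    return np.stack([(padded[t:t + k] * kernel).sum(axis=0) for t in range(x.shape[0])])


def ssm_scan(u, a_bar, b_bar, c):
    """Communication: a diagonal recurrence per channel. u: (L, D); a_bar, b_bar, c: (D, N)."""
    h = np.zeros_like(a_bar)
    ys = []
    for u_t in u:
        h = a_bar * h + b_bar * u_t[:, None]  # update the fixed-size state
        ys.append((c * h).sum(axis=1))        # read one output value per channel
    return np.stack(ys)


def toy_block(x, p):
    """Projections, convolution and nonlinearities around an SSM, with a residual connection."""
    u = silu(causal_depthwise_conv(x @ p["w_in"], p["conv"]))
    gate = silu(x @ p["w_gate"])
    y = ssm_scan(u, p["a_bar"], p["b_bar"], p["c"])
    return x + (y * gate) @ p["w_out"]


def init_params(d_model, d_inner, d_state, kernel_size, seed=0):
    rng = np.random.default_rng(seed)
    return {
        "w_in": rng.normal(0.0, 0.1, (d_model, d_inner)),
        "w_gate": rng.normal(0.0, 0.1, (d_model, d_inner)),
        "w_out": rng.normal(0.0, 0.1, (d_inner, d_model)),
        "conv": rng.normal(0.0, 0.1, (kernel_size, d_inner)),
        "a_bar": rng.uniform(0.5, 0.99, (d_inner, d_state)),  # stable decay factors
        "b_bar": rng.normal(0.0, 0.1, (d_inner, d_state)),
        "c": rng.normal(0.0, 0.1, (d_inner, d_state)),
    }


def toy_model(x, layers):
    """Stack blocks the way Transformer blocks are stacked."""
    for p in layers:
        x = toy_block(x, p)
    return x


layers = [init_params(d_model=8, d_inner=16, d_state=4, kernel_size=3, seed=s) for s in range(2)]
tokens = np.random.default_rng(1).normal(size=(10, 8))  # (sequence length, d_model)
print(toy_model(tokens, layers).shape)
```

Information moves between positions only through the short convolution and the recurrent state, so each output depends on the current and earlier tokens and no sequence-by-sequence attention matrix is ever formed.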

The article emphasizes: attention in Transformers supports near-ideal recall but at significant compute and memory cost; SSMs offer a different trade-off, potentially moving toward a Pareto frontier where long-context performance and efficiency coexist. While the Mamba approach shows promise, the authors acknowledge questions about whether SSMs can discard unnecessary information as effectively as attention-based architectures. The overall narrative positions Mamba as a general, scalable backbone with strong results across domains, including language, audio, and genomics. For the long-context setting, the proposed SSM-based communication is the core differentiator from standard Transformer architectures. The source and discussions are drawn from The Gradient.

The article's key framings include the classic Cocktail Party Problem analogy, a contrast between the attention mechanism’s “photographic memory” and the efficiency goals of Mamba, and a focus on state dynamics as the central mechanism for sequence processing. It contrasts Transformer attention, which keeps every past token exposed at all times, with a state-driven communication strategy that can adapt its effective context window through the dwell time Δ, enabling more scalable long-context modeling. See the original source for the full derivation and discussion: https://thegradient.pub/mamba-explained/.
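To illustrate how Δ can change the effective context window, the sketch below uses a one-dimensional SSM with an assumed decay rate a = −1 and Zero-Order Hold discretization. The function names are hypothetical. It measures how much of the state's total input weight falls on the newest input for several values of Δ.

```python
import math


def zoh_scalar(a, b, delta):
    """ZOH discretization of a one-dimensional SSM with decay rate a < 0."""
    a_bar = math.exp(delta * a)
    b_bar = (a_bar - 1.0) / a * b
    return a_bar, b_bar


def input_weights(a, b, delta, n_steps):
    """Weight the current state gives to the input seen k steps ago, for k = 0..n_steps-1."""
    a_bar, b_bar = zoh_scalar(a, b, delta)
    return [b_bar * a_bar ** k for k in range(n_steps)]


def newest_share(a, b, delta, n_steps=50):
    """Fraction of the total weight over the last n_steps that falls on the newest input."""
    weights = input_weights(a, b, delta, n_steps)
    return weights[0] / sum(weights)


for delta in (0.01, 1.0, 10.0):
    a_bar, _ = zoh_scalar(-1.0, 1.0, delta)
    print(f"delta={delta:<5} a_bar={a_bar:.5f} newest_share={newest_share(-1.0, 1.0, delta):.4f}")
```

With a small Δ the state decays slowly and weight is spread across many past inputs. With a large Δ the previous state is almost entirely overwritten by the current input.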

Key features

- SSM-based communication replaces attention while preserving MLP-like computation in each block.

- Linear scaling with sequence length and fast inference, with claims of up to ~5x speedups over Transformers in some regimes.

- Capability to handle extremely long contexts (claimed feasibility up to 1 million tokens).

- Demonstrated performance on language modeling: Mamba-3B outperforms Transformers of equal size and matches Transformers roughly double in size on pretraining and downstream tasks.

- A continuous-to-discrete time approach using Zero-Order Hold discretization, with a compact state h(t) governed by h’(t) = A h(t) + B x(t) and y(t) = C h(t) + D x(t).

- The dwell time Δ as a tunable step size that shifts the emphasis onto newer versus older inputs.

- The architecture stacks Mamba blocks, keeping the computation path as standard projections and nonlinearities, while replacing the core communication channel with SSM dynamics.

- Cross-modality intent: claimed state-of-the-art across language, audio, and genomics domains as a general backbone for sequence modeling.

- Conceptual framing around efficiency vs. memory tradeoffs, contrasted with the quadratic bottleneck of attention.

| Feature | Benefit |
|---|---|
| SSM-based communication | Replaces attention with a dynamical system for inter-token communication, reducing quadratic costs |
| Long-context feasibility | Designed to handle sequences up to 1M tokens with linear scaling |
| Comparable performance | Mamba-3B reportedly matches Transformers twice its size on tasks, with improvements over same-size models |
| Computation path | Maintains MLP-style projections plus local convolutions for computation |
| Discretization strategy | Zero-Order Hold discretization links continuous-time dynamics to discrete processing |
| Tunable dwell time | Δ controls how much history influences the next state |

Common use cases

- Language modeling with long contexts where Transformer attention becomes impractical.

- Multimodal sequence modeling, including audio and genomics, where sequence length is a critical factor.

- General-purpose sequence backbone for tasks requiring scalable memory of past inputs without quadratic attention.

- Scenarios requiring fast inference and efficient scaling across very long sequences.

Setup & installation

Not provided in the source material. See the original article for conceptual exposition and the cited references.

Quick start

Not provided in the source material. The article focuses on the architectural concept and reported results rather than end-user setup or runnable examples.

Pros and cons

- Pros

  - Handles very long sequences with linear scaling and reduced reliance on attention’s quadratic footprint.

  - Competitive performance with Transformer baselines, including strong results for language modeling at a smaller model size.

  - A unified backbone that claims cross-domain effectiveness (language, audio, genomics).

  - Clear, interpretable discretization framework (A, B, C, D matrices, Δ step) that connects continuous dynamics to discrete processing.

- Cons / open questions

  - The effectiveness of SSMs at discarding unnecessary information remains a topic of inquiry within the article and broader discussion.

  - Practical tooling, libraries, and ecosystem maturity for SSM-based backbones relative to Transformer ecosystems are not covered in the source.

Alternatives (brief comparisons)

- Transformer architectures with optimized attention (e.g., FlashAttention) to mitigate the quadratic bottleneck have been the standard approach; Mamba proposes a different path by replacing attention entirely.

- Sliding Window Attention offers a partial mitigation by restricting attention to recent tokens, trading off global context for efficiency.

- Other linear- or memory-efficient sequence models (RNNs, etc.) historically trade off performance for efficiency; Mamba positions itself as a more effective point on that frontier by using SSMs.

| Alternative | Key idea | Pros | Cons |
|---|---|---|---|
| Transformer | Full pairwise attention | Strong accuracy, flexible long-range dependencies | Quadratic cost in training; large memory footprint for long contexts |
| FlashAttention | Optimized attention kernels | Faster practical training/inference | Compute still grows quadratically with sequence length; practical limits on context length |
| Sliding Window Attention | Local attention windows | Near-linear cost with limited context | Sacrifices global context unless the window is very large |
| RNN/Memory-based | Stateful processing with hidden state | Very efficient memory, sequential processing | Struggles to capture long-range dependencies as effectively as Transformers |
| Mamba (SSM-based) | State Space Model for communication | Potential linear scaling with long contexts; reported results equal to or better than same-size Transformers | Early-stage approach; long-term ecosystem maturity unknown |

Pricing or License

Not specified in the source material.

References

More resources

CUDA Toolkit 13.0 for Jetson Thor: Unified Arm Ecosystem and More

Unified CUDA toolkit for Arm on Jetson Thor with full memory coherence, multi-process GPU sharing, OpenRM/dmabuf interoperability, NUMA support, and better tooling across embedded and server-class targets.

Cut Model Deployment Costs While Keeping Performance With GPU Memory Swap

Leverage GPU memory swap (model hot-swapping) to share GPUs across multiple LLMs, reduce idle GPU costs, and improve autoscaling while meeting SLAs.

Fine-Tuning gpt-oss for Accuracy and Performance with Quantization Aware Training

Guide to fine-tuning gpt-oss with SFT + QAT to recover FP4 accuracy while preserving efficiency, including upcasting to BF16, MXFP4, NVFP4, and deployment with TensorRT-LLM.

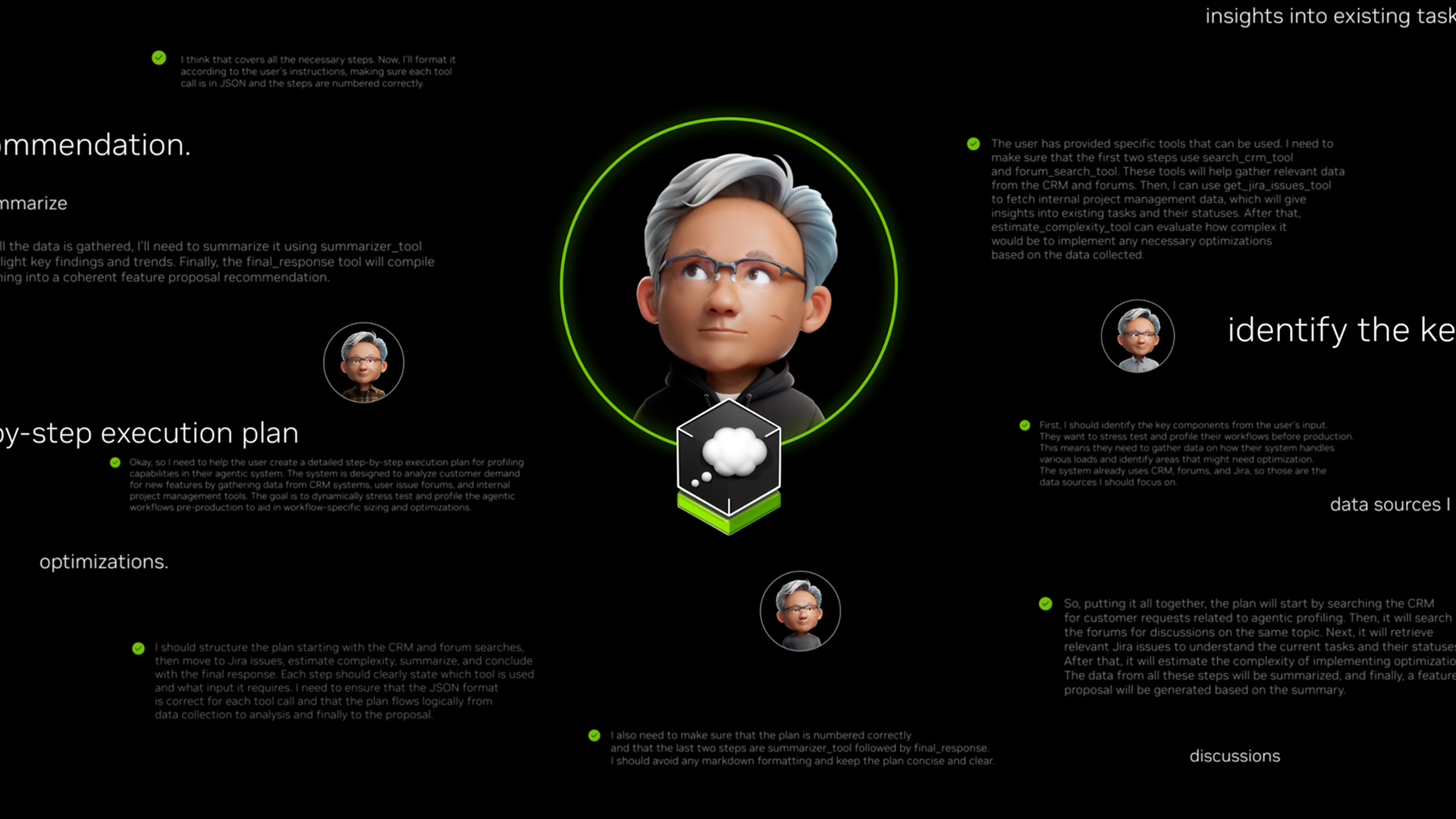

How Small Language Models Are Key to Scalable Agentic AI

Explores how small language models enable cost-effective, flexible agentic AI alongside LLMs, with NVIDIA NeMo and Nemotron Nano 2.

Getting Started with NVIDIA Isaac for Healthcare Using the Telesurgery Workflow

A production-ready, modular telesurgery workflow from NVIDIA Isaac for Healthcare unifies simulation and clinical deployment across a low-latency, three-computer architecture. It covers video/sensor streaming, robot control, haptics, and simulation to support training and remote procedures.

How to Scale Your LangGraph Agents in Production From a Single User to 1,000 Coworkers

Guidance on deploying and scaling LangGraph-based agents in production using the NeMo Agent Toolkit, load testing, and phased rollout for hundreds to thousands of users.