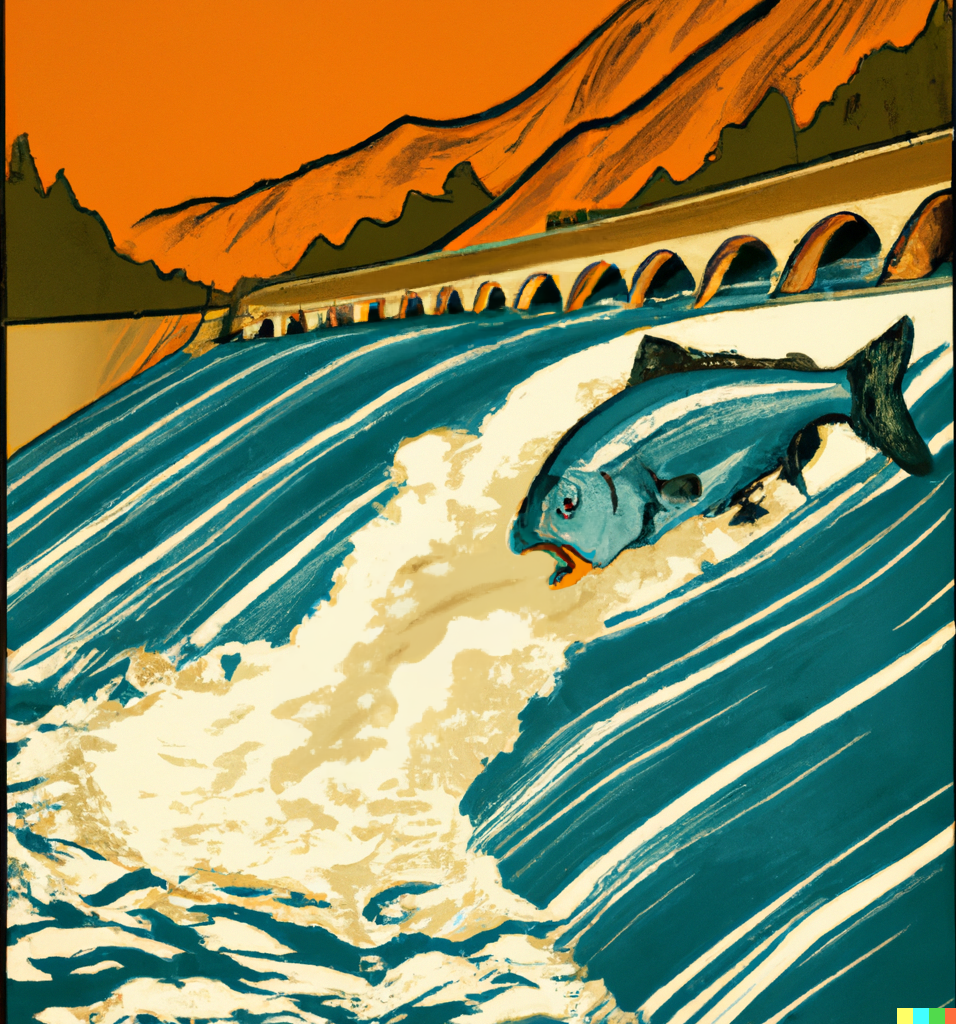

Salmon in the Loop: Human-in-the-Loop Fish Counting at Hydroelectric Dams

Sources: https://thegradient.pub/salmon-in-the-loop/, The Gradient

Overview

Salmon in the Loop examines a complex sociotechnical problem: counting fish in a field undergoing digital transformation. The author worked as an environmental science consultant focused on counting fish that pass through large hydroelectric dams, and the piece uses that experience to show how human-in-the-loop dataset production interacts with machine learning in a regulated setting.

The narrative situates fish counting within regulatory oversight by FERC (the Federal Energy Regulatory Commission), an independent U.S. agency responsible for licensing and permitting dam construction and operation and for enforcing compliance when standards are violated. Dams act as large-scale energy storage systems, generating electricity while potentially disrupting waterways and native fish populations, notably salmonids in the Pacific Northwest. To demonstrate regulatory compliance, dam operators collect extensive fish passage data, which serves as the basis for assessing environmental impact and guiding operational decisions.

Data collection is traditionally manual and variable: observers visually count fish as they pass through fish ladders, recording additional attributes such as illness, origin (hatchery vs. wild), and other observable characteristics. These classifications are subtle and require expert knowledge. Errors can arise from transcription mistakes and species misclassification, and datasets also differ in reporting granularity (hourly, daily, monthly) and in which seasons they cover. The overarching challenge is to reconcile datasets produced under different standards and translate them into reliable inputs for evaluating dam effects on fish populations. The piece observes a growing interest in using computer vision and machine learning to automate counts while maintaining scientific integrity and regulatory trust, a quintessential human-in-the-loop (HITL) application that blends domain expertise with algorithmic consistency and scalability.
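
Reconciling granularity is partly a bookkeeping problem: finer-grained records can be rolled up to a coarser reporting period, but not the reverse. A minimal sketch, assuming hourly records arrive as hypothetical (ISO timestamp, species, count) tuples; this is an illustrative format, not any operator's actual schema:

```python
from collections import defaultdict
from datetime import datetime


def hourly_to_daily(hourly_counts):
    """Roll hypothetical (timestamp, species, count) records up to daily totals per species."""
    daily = defaultdict(int)
    for timestamp, species, count in hourly_counts:
        # Truncate the timestamp to its calendar day and accumulate the count.
        day = datetime.fromisoformat(timestamp).date().isoformat()
        daily[(day, species)] += count
    return dict(daily)


# Illustrative records only; real operator data will differ in format and content.
hourly = [
    ("2023-06-01T08:00", "Chinook", 12),
    ("2023-06-01T09:00", "Chinook", 7),
    ("2023-06-01T09:00", "Coho", 3),
    ("2023-06-02T08:00", "Chinook", 5),
]
print(hourly_to_daily(hourly))
```

Rolling everything up to the coarsest common period makes datasets comparable at the cost of discarding within-day detail; a dataset reported only monthly would force a further roll-up of all the others.
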
A core practical thread is the problem definition phase: engaging stakeholders to articulate the tasks the system must perform (e.g., species or life-stage identification) and to set realistic performance goals within a regulated context. If automation aims to estimate population density during peak passage, video capture might be preferred; if health indicators or rare species are of interest, still images coupled with targeted labeling may suffice. The author sketches a spectrum of deployment choices, from a simple “fish is moving” detector that triggers image capture for expert tagging to a full classifier trained on labeled data. In regulated domains like hydropower, credibility hinges on rigorous testing, data integrity, algorithmic transparency, and accountability, and these requirements shape how such HITL systems are designed and evaluated. A goal frequently cited in practice is for automated counts to reach roughly 95% accuracy relative to human visual counts, with the caveat that expectations must be aligned with the production lifecycle and regulatory scrutiny. This context-setting matters because organizations are trying to modernize data pipelines without compromising environmental compliance.
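
At the simple end of that spectrum, a “fish is moving” trigger could be as basic as frame differencing: flag a frame for capture and expert tagging when enough pixels change between consecutive frames. The sketch below assumes grayscale frames given as nested lists of 0-255 intensities; the function names and both thresholds are hypothetical, not values from the article.

```python
def fraction_changed(prev_frame, frame, pixel_threshold=25):
    """Fraction of pixels whose intensity changed by more than pixel_threshold."""
    changed = total = 0
    for prev_row, row in zip(prev_frame, frame):
        for p, q in zip(prev_row, row):
            total += 1
            if abs(q - p) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


def frames_to_capture(frames, pixel_threshold=25, motion_threshold=0.01):
    """Indices of frames that differ enough from the previous frame to suggest motion."""
    triggered = []
    for i in range(1, len(frames)):
        if fraction_changed(frames[i - 1], frames[i], pixel_threshold) > motion_threshold:
            triggered.append(i)
    return triggered


# Synthetic 4x4 frames: a bright "object" appears in frame 2, moves in frame 3, then stops.
blank = [[10] * 4 for _ in range(4)]
frame_a = [row[:] for row in blank]
frame_a[1][1] = 200
frame_b = [row[:] for row in blank]
frame_b[1][2] = 200
frames = [blank, blank, frame_a, frame_b, frame_b]
print(frames_to_capture(frames))
```

Frame differencing is a swapped-in illustrative technique; it also fires on debris, bubbles, or lighting shifts, which is one reason captured frames would still go to an expert for tagging.
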

Key features (high-level insights drawn from the narrative)

- Human-in-the-loop data production that leverages subject-matter experts to curate and validate datasets.

- Regulatory alignment with FERC requirements and the environmental governance framework around hydropower.

- A recognition that data are heterogeneous, with varying granularity, temporal coverage, and annotation standards across operators.

- The use of computer vision and machine learning to automate fish counting while preserving domain knowledge and auditability.

- Clear problem-space negotiation with stakeholders to define tasks, constraints, and performance metrics before technical design.

- A pragmatic stance on performance goals, acknowledging real-world limits and the need for robust validation against human baselines.

- The potential for automated workflows to reduce manual data collection burden and speed up information flow to decision-makers.

- Emphasis on data quality, transparency, and accountability in regulated environments where errors can have regulatory consequences.

- Visual counting by trained observers, with classifications beyond species (health, origin, injuries).

- Integration of video and still-image capture strategies to support labeling and classifier training.

Common use cases

- Automated fish counts to demonstrate compliance with regulatory constraints and to monitor population trends.

- Classification tasks tied to health, injury, and origin (wild vs hatchery) to support conservation goals.

- Species- and life-stage identification to inform population dynamics and management decisions.

- Real-time or near-real-time monitoring of fish passage to assess dam operations and ecological impact.

- Data quality improvement through HITL processes that reduce transcription and misclassification errors.

- Data integration with dam operational information to explore correlations between management actions and fish outcomes.

- Design and evaluation of end-to-end workflows that can scale across multiple dams with diverse data practices.

Setup & installation

Setup and installation details are not explicitly provided in the source. The content emphasizes high-level considerations and processes (defining the problem space, setting performance goals, choosing data capture strategies, and establishing HITL workflows) rather than concrete software setup steps or commands. In lieu of explicit commands, note the following considerations drawn from the narrative:

- Engage stakeholders early to define the tasks the system must perform (e.g., species identification, health assessment).

- Align expectations with regulatory constraints (regulatory approval, data integrity, transparency).

- Decide between data modalities (video for continuous motion and tagging opportunities, still images for rare events or detailed labeling).

- Prepare for varied data granularity and seasonal patterns across dam operators.

- Plan for a HITL pipeline where human experts label data, which then informs ML model training and validation.
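
One common way to structure such a pipeline, assumed here rather than taken from the article, is confidence-based routing: predictions the model is confident about are accepted, everything else goes to an expert, and expert decisions are kept as new labeled data. The class names, fields, and the 0.9 threshold below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    clip_id: str
    label: str         # e.g. a species predicted by the model
    confidence: float  # model score in [0, 1]


@dataclass
class ReviewQueue:
    """Hypothetical HITL router: confident predictions are accepted, the rest go to experts."""
    review_threshold: float = 0.9
    accepted: list = field(default_factory=list)
    needs_review: list = field(default_factory=list)
    expert_labels: dict = field(default_factory=dict)

    def route(self, pred: Prediction) -> None:
        # Send low-confidence predictions to a human instead of accepting them.
        if pred.confidence >= self.review_threshold:
            self.accepted.append(pred)
        else:
            self.needs_review.append(pred)

    def record_expert_label(self, clip_id: str, label: str) -> None:
        # The expert's label replaces the model's and is kept for retraining and validation.
        self.needs_review = [p for p in self.needs_review if p.clip_id != clip_id]
        self.expert_labels[clip_id] = label


queue = ReviewQueue(review_threshold=0.9)
queue.route(Prediction("clip-001", "Chinook", 0.97))
queue.route(Prediction("clip-002", "Coho", 0.55))
queue.record_expert_label("clip-002", "Steelhead")
print([p.clip_id for p in queue.accepted], queue.needs_review, queue.expert_labels)
```

In a regulated setting, reviewers might also audit a random sample of accepted predictions, since a confident model can still be wrong.
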

Quick start

The source outlines a practical, non-code-oriented pathway to kick off a HITL fish-counting project. A minimal, high-level sequence inspired by the text might be:

- Step 1: Define the problem space with stakeholders, specifying the exact tasks the system should perform (e.g., detect a fish, classify species, flag illness).

- Step 2: Establish performance goals that are realistic within regulatory and operational constraints (e.g., the roughly 95% agreement with human visual counts often cited in practice).

- Step 3: Choose data collection strategy (video capture to observe motion and behavior, or still images for precise labeling) and identify where labeling will occur.

- Step 4: Assemble a dataset with expert annotations (species, life stage, health status, origin) and document uncertainties or edge cases.

- Step 5: Build a lightweight ML pipeline that operates with human-in-the-loop verification, starting with a simple motion detector and progressing toward a full classifier.

- Step 6: Validate model outputs against human counts and revise data and labels to improve reliability.

- Step 7: Deploy with governance for transparency, traceability, and auditability, ensuring alignment with FERC oversight.

- Step 8: Monitor performance and update the data-collection and labeling processes as needed.

This outline reflects the article’s emphasis on problem framing, stakeholder alignment, and the HITL approach as a practical path from manual counting toward automation while preserving accountability.
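
Step 6 needs a concrete agreement metric. The article cites a target of roughly 95% relative to human counts but does not define the formula, so the sketch below assumes a simple per-day measure, 1 - |automated - human| / human, applied to hypothetical counts; the function names are illustrative.

```python
def count_agreement(automated: int, human: int) -> float:
    """Assumed metric: 1 - |automated - human| / human, clipped at 0."""
    if human == 0:
        # No fish seen by the human observer: only a zero automated count agrees.
        return 1.0 if automated == 0 else 0.0
    return max(0.0, 1.0 - abs(automated - human) / human)


def validate(daily_pairs, target=0.95):
    """Score each day's (automated, human) pair and list the days below target."""
    scores = {day: count_agreement(auto, human) for day, (auto, human) in daily_pairs.items()}
    failing = sorted(day for day, score in scores.items() if score < target)
    return scores, failing


# Hypothetical (automated, human) daily counts for illustration only.
pairs = {
    "2023-06-01": (196, 200),
    "2023-06-02": (88, 100),
    "2023-06-03": (0, 0),
}
scores, failing = validate(pairs)
print(scores, failing)
```

Scoring each day separately, rather than only the season total, keeps over-counts on one day from masking under-counts on another. Agreement on totals also says nothing about whether species or origin labels are correct, which would need a separate per-class check.
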

Pros and cons

- Pros

  - Potentially higher consistency and throughput in fish counting through automated methods.

  - Reduction in manual data collection burden and faster feedback to stakeholders.

  - HITL design preserves expert judgment, improving trust and compliance with regulatory standards.

  - Ability to leverage diverse data (video, stills) and integrate with dam-operational data for broader analyses.

- Cons

  - Regulatory scrutiny requires rigorous validation, transparency, and data integrity, which can slow deployment.

  - Heterogeneous data practices across operators introduce complexity in standardization.

  - High accuracy targets (e.g., ~95% relative to human counts) may be challenging in some operational contexts.

  - Building and maintaining HITL workflows demands ongoing human resources and governance.

Alternatives (brief comparisons)

- Manual visual counts by trained observers: Pros include the depth of domain expertise and nuanced judgments; Cons include transcription errors and limited scalability.

- Automated computer vision with no human-in-the-loop: Pros include potential speed and consistency; Cons involve regulatory risk, model generalization challenges, and the need for extensive validation to ensure safety and reliability.

- HITL systems with only limited automation: Provides a balance, leveraging automation for routine tasks while preserving expert verification for critical decisions; aligns with regulatory expectations but requires well-designed workflows to avoid bottlenecks.

- Hybrid approaches (live video tagging for rare cases, selective still-image labeling): Allows targeted labeling of edge cases and efficient use of expert time.
