Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

Source: https://aws.amazon.com/blogs/machine-learning/move-your-ai-agents-from-proof-of-concept-to-production-with-amazon-bedrock-agentcore/ (AWS ML Blog)

TL;DR

- Amazon Bedrock AgentCore provides a production-grade path for agentic AI, addressing memory, security, observability, and scalable tool management.

- It evolves a simple prototype into a memory-enabled, context-aware agent capable of handling multiple concurrent users while maintaining enterprise standards.

- The journey prioritizes memory first, then centralized tools via Gateway, identity, observability, and finally a customer-facing interface to support scale.

- The approach demonstrates how memory hooks and automation with AgentCore Memory and Strands Agents enable production-ready behavior without reinventing core capabilities from scratch.

- While the example starts with a single-agent architecture, AgentCore supports multi-agent deployments and enterprise-grade security and reliability patterns.

Context and background

Building an AI agent for real-world use takes more than a clever prototype. Production deployments must address scalability, security, observability, and operational concerns that rarely surface in development. The post presents Amazon Bedrock AgentCore as a comprehensive suite of services for moving agentic AI applications from experimental proof of concept to production-ready systems. The narrative follows a customer support agent that evolves from a local prototype into an enterprise-grade solution capable of handling multiple concurrent users while preserving security and performance.

The article introduces AgentCore as a collection of services designed to work together: AgentCore Runtime for deployment and scaling, AgentCore Gateway for enterprise tool development, AgentCore Identity for securing agents at scale, AgentCore Memory for context-aware conversations, AgentCore Code Interpreter, AgentCore Browser Tool for web interaction, and AgentCore Observability for transparency of agent behavior. The goal is to show how these services cooperate to solve the operational challenges that emerge as agentic applications mature.

The use case centers on customer support, a domain with high demand and wide variability across inquiries, from policy questions to technical troubleshooting. A truly intelligent agent must manage orders and accounts, look up policies, search product catalogs, troubleshoot via web research, and remember customer preferences across many interactions. The production path begins with a prototype and progresses toward a robust, enterprise-grade solution that supports multiple users while upholding security and reliability standards. Production deployments can also follow multi-agent architectures, which AgentCore supports, even though the initial example focuses on a single agent for clarity.

The article explains that every production system starts with a proof of concept. In the example, the prototype uses Strands Agents as the open-source framework and Anthropic’s Claude 3.7 Sonnet on Bedrock as the LLM powering the agent. The team emphasizes that other frameworks and models can be used as needed. Agents rely on tools to take actions and interact with live systems; the post simplifies the demonstration to three core capabilities: return policy lookup, product information search, and web-based troubleshooting. The end-to-end code is available in the GitHub repository referenced in the post.
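To make the three-tool prototype concrete, here is a minimal, stdlib-only sketch of how such tools might be defined and dispatched by name. It does not use Strands Agents or Bedrock; the function names, the placeholder data, and the `call_tool` dispatcher are all assumptions made for illustration, and the post's actual tool code lives in its GitHub repository.

```python
from typing import Callable, Dict

# Hypothetical placeholder data; a real agent would query live systems.
RETURN_POLICIES: Dict[str, str] = {
    "electronics": "placeholder policy text for electronics",
}
PRODUCTS: Dict[str, str] = {
    "laptop": "placeholder product description for laptop",
}


def get_return_policy(category: str) -> str:
    """Look up the return policy for a product category."""
    key = category.strip().lower()
    return RETURN_POLICIES.get(key, f"no policy on file for '{key}'")


def get_product_info(name: str) -> str:
    """Look up product information by product name."""
    key = name.strip().lower()
    return PRODUCTS.get(key, f"no product named '{key}'")


def web_search(query: str) -> str:
    """Stub for web-based troubleshooting; performs no network call."""
    return f"[stub] search results for: {query}"


# Registry mapping tool names to callables (illustrative only).
TOOLS: Dict[str, Callable[[str], str]] = {
    "get_return_policy": get_return_policy,
    "get_product_info": get_product_info,
    "web_search": web_search,
}


def call_tool(name: str, argument: str) -> str:
    """Dispatch a tool call by name, as an agent loop might do."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](argument)
```

In an agent framework, the model chooses which tool to call and with what argument; the dispatcher here only stands in for that step.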

What’s new

Amazon Bedrock AgentCore introduces a production-focused progression for agentic AI, highlighting memory, centralized tooling, security, and observability as core pillars. The first major enhancement is AgentCore Memory, which replaces the baseline “goldfish” behavior in which the agent forgets conversations between sessions. Memory is provided as a managed, persistent store with two complementary strategies that determine what information to extract and retain. Automation is central: Strands Agents offers a hook system that enables automatic memory handling through event callbacks, so memory operations occur without manual intervention. The example shows a CustomerSupportMemoryHooks component that retrieves customer context and saves interactions, integrated by passing the hooks into the agent constructor. This memory enables personalized, context-aware responses across sessions, such as recalling a customer’s prior gaming preferences or hardware needs.

With memory in place, the next steps address tool reuse, security, and multi-user concurrency. The article outlines centralizing tools using AgentCore Gateway and strengthening identity management with AgentCore Identity to establish a scalable and secure foundation for production. A single-agent architecture is used for demonstration, but multi-agent deployments are supported in production scenarios. The narrative emphasizes moving from proof of concept to production by solving memory first and then the architectural concerns that affect maintainability and reliability at scale.

In addition to memory, the post references practical steps such as installing dependencies (boto3, the AgentCore SDK, and the AgentCore Starter Toolkit SDK) to accelerate production-readiness. It also points to the GitHub repository for the end-to-end journey and notes that the PoC architecture focuses on essential components while remaining adaptable to other frameworks and models. The overarching message is that Bedrock AgentCore provides managed services designed to address the gaps that typically delay production.
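The hook-driven memory pattern described in this section can be sketched in plain Python. This is not the Strands Agents hook API or the AgentCore Memory client: `InMemoryStore`, `EventBus`, `MemoryHooksSketch`, `SupportAgentSketch`, and the event names `before_reply` and `after_reply` are hypothetical stand-ins, and the "reply" is a canned string rather than an LLM call. The sketch only illustrates the idea that callbacks load customer context before a reply and save the interaction afterwards, without the caller doing so manually.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List


class InMemoryStore:
    """Stand-in for a managed, persistent memory service (assumption)."""

    def __init__(self) -> None:
        self._entries: DefaultDict[str, List[str]] = defaultdict(list)

    def save(self, customer_id: str, text: str) -> None:
        self._entries[customer_id].append(text)

    def retrieve(self, customer_id: str) -> List[str]:
        return list(self._entries[customer_id])


class EventBus:
    """Minimal event-callback registry (hypothetical, not a library class)."""

    def __init__(self) -> None:
        self._callbacks: DefaultDict[str, List[Callable[[Dict], None]]] = defaultdict(list)

    def on(self, event: str, callback: Callable[[Dict], None]) -> None:
        self._callbacks[event].append(callback)

    def fire(self, event: str, payload: Dict) -> None:
        for callback in self._callbacks[event]:
            callback(payload)


class MemoryHooksSketch:
    """Loads customer context before a reply and saves the exchange after it."""

    def __init__(self, store: InMemoryStore, customer_id: str) -> None:
        self.store = store
        self.customer_id = customer_id

    def register(self, bus: EventBus) -> None:
        bus.on("before_reply", self.load_context)
        bus.on("after_reply", self.save_interaction)

    def load_context(self, payload: Dict) -> None:
        payload["context"] = self.store.retrieve(self.customer_id)

    def save_interaction(self, payload: Dict) -> None:
        self.store.save(self.customer_id, f"user: {payload['message']}")
        self.store.save(self.customer_id, f"agent: {payload['reply']}")


class SupportAgentSketch:
    """Toy agent: hooks are passed to the constructor, as the post describes."""

    def __init__(self, hooks: List[MemoryHooksSketch]) -> None:
        self.bus = EventBus()
        for hook in hooks:
            hook.register(self.bus)

    def __call__(self, message: str) -> str:
        payload: Dict = {"message": message, "context": []}
        self.bus.fire("before_reply", payload)
        # A real agent would call an LLM here with the retrieved context.
        payload["reply"] = f"({len(payload['context'])} remembered entries) You said: {message}"
        self.bus.fire("after_reply", payload)
        return payload["reply"]
```

Because the store outlives any single agent object, a new session for the same customer starts with the earlier exchange already available, while a different customer starts with an empty context.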

Why it matters (impact for developers/enterprises)

For developers, the shift from a local prototype to a production agent means confronting memory, security, reliability, and observability at scale. AgentCore Memory removes a primary obstacle, the loss of context across conversations, and so enables hyper-personalized experiences and smoother customer interactions. For enterprises, centralized tools via Gateway and robust identity controls create reusable, compliant tool access across teams and agents, reducing maintenance overhead and drift between deployments. Observability brings transparency into agent behavior, helping teams monitor performance, debug issues, and maintain trust in enterprise deployments. By outlining a concrete path that begins with a memory-first approach and progresses toward a secure, observable, multi-agent platform, the post offers a blueprint for organizations seeking to operationalize agentic AI without sacrificing governance or reliability.

Technical details or Implementation (how it’s built)

The PoC starts with a functional prototype that demonstrates core capabilities using three tools: return policy lookup, product information search, and web search for troubleshooting. The model powering the agent in the PoC is Anthropic’s Claude 3.7 Sonnet on Bedrock, though the post notes that other agent frameworks and models can be used in practice. The essential insight is that tools enable agents to perform actions and interact with live systems, and organizing these tools for production is a key requirement.

A central technical hurdle identified is memory. Bedrock AgentCore Memory provides persistent memory at two complementary levels, enabling the agent to remember customers across conversations and deliver more personalized experiences. The memory is driven by configurable strategies that determine what information to extract and store, and it relies on automation via Strands Agents’ hook system. The hooks intercept agent lifecycle events and handle memory operations automatically, allowing developers to retrieve customer context and save interactions with minimal code changes. In the example, a CustomerSupportMemoryHooks component is connected to the memory system and passed to the agent constructor, enabling session-based memory that tailors responses as interactions unfold.

Once memory is in place, the post describes evolving the tool architecture to support scale and reuse. Centralizing tools with AgentCore Gateway avoids duplicating the same tools across multiple agents, reducing code complexity and maintenance burden. Identity management with AgentCore Identity helps secure agent operations at scale, a critical requirement for enterprise deployments. The article emphasizes that the single-agent PoC is a simplification for illustration, and production environments can support multiple agents organized around shared, secure tooling.

The implementation path also points to practical steps such as installing dependencies (boto3, AgentCore SDK, Starter Toolkit SDK) to accelerate integration with AgentCore capabilities. The GitHub repository referenced in the post contains the end-to-end code illustrating how to connect an agent to Bedrock AgentCore, while keeping the core concept adaptable to other toolchains and models. The emphasis remains on leveraging Bedrock AgentCore’s managed services to address production gaps rather than building all components from scratch.
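The idea of centralizing tools and gating them by identity can be illustrated with a small, stdlib-only sketch. It is not the AgentCore Gateway or AgentCore Identity API: `ToolGateway`, `GatewayAgentSketch`, and the scope strings are hypothetical names chosen for this example. The point is only that tools are registered once, several agents share them, and each call is checked against the caller's permissions.

```python
from typing import Callable, Dict, FrozenSet


class ToolGateway:
    """Single shared registry of tools, each guarded by a required scope."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[str], str]] = {}
        self._required_scope: Dict[str, str] = {}

    def register(self, name: str, func: Callable[[str], str], required_scope: str) -> None:
        self._tools[name] = func
        self._required_scope[name] = required_scope

    def invoke(self, caller_scopes: FrozenSet[str], name: str, argument: str) -> str:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        if self._required_scope[name] not in caller_scopes:
            raise PermissionError(f"caller lacks scope '{self._required_scope[name]}'")
        return self._tools[name](argument)


class GatewayAgentSketch:
    """Toy agent that owns no tools and calls everything through the gateway."""

    def __init__(self, name: str, scopes: FrozenSet[str], gateway: ToolGateway) -> None:
        self.name = name
        self.scopes = scopes
        self.gateway = gateway

    def use_tool(self, tool: str, argument: str) -> str:
        return self.gateway.invoke(self.scopes, tool, argument)


def lookup_policy(category: str) -> str:
    """Placeholder tool body; a real tool would query a policy system."""
    return f"policy lookup for {category}"


# The tool is registered once and shared by every agent.
gateway = ToolGateway()
gateway.register("get_return_policy", lookup_policy, required_scope="policy:read")

support_agent = GatewayAgentSketch("support", frozenset({"policy:read"}), gateway)
sales_agent = GatewayAgentSketch("sales", frozenset(), gateway)
```

Here `support_agent.use_tool("get_return_policy", "laptops")` succeeds, while the same call from `sales_agent` raises `PermissionError`, because the tool definition and its access rule live in one place rather than in each agent.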

Key takeaways

- Production-grade agent systems require memory, security, observability, and scalable tooling; Bedrock AgentCore offers dedicated services to address these needs.

- The memory-enabled architecture transforms agents from forgetful “goldfish” implementations to context-aware assistants capable of personalization across sessions.

- Centralized tooling via Gateway and secure identity via Identity are essential steps toward multi-agent, enterprise-grade deployments.

- Observability and automatic memory management reduce operational risk and improve debugging and governance in production.

- The approach is demonstrated with a customer support scenario but is presented as a general blueprint for productionizing agentic AI using Bedrock AgentCore.

FAQ

- What is the primary production challenge addressed first in Bedrock AgentCore?

  Memory and preserving context across conversations, implemented with AgentCore Memory and memory hooks. [source](https://aws.amazon.com/blogs/machine-learning/move-your-ai-agents-from-proof-of-concept-to-production-with-amazon-bedrock-agentcore/)

- Can production deployments use more than one agent?

  Yes, multi-agent architectures are supported by Amazon Bedrock AgentCore. [source](https://aws.amazon.com/blogs/machine-learning/move-your-ai-agents-from-proof-of-concept-to-production-with-amazon-bedrock-agentcore/)

- What role do Gateway and Identity play in production?

  Gateway centralizes tool management for reliability and security, while Identity provides scalable security for agent interactions. [source](https://aws.amazon.com/blogs/machine-learning/move-your-ai-agents-from-proof-of-concept-to-production-with-amazon-bedrock-agentcore/)

- Where can I find the end-to-end code for the concepts described?

  The GitHub repository linked in the post contains the end-to-end journey and code. [source](https://aws.amazon.com/blogs/machine-learning/move-your-ai-agents-from-proof-of-concept-to-production-with-amazon-bedrock-agentcore/)

References

More news

Shadow Leak shows how ChatGPT agents can exfiltrate Gmail data via prompt injection

Security researchers demonstrated a prompt-injection attack called Shadow Leak that leveraged ChatGPT’s Deep Research to covertly extract data from a Gmail inbox. OpenAI patched the flaw; the case highlights risks of agentic AI.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

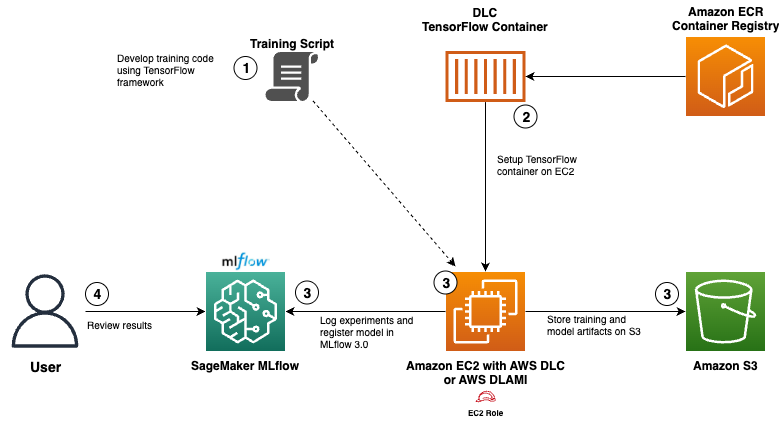

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

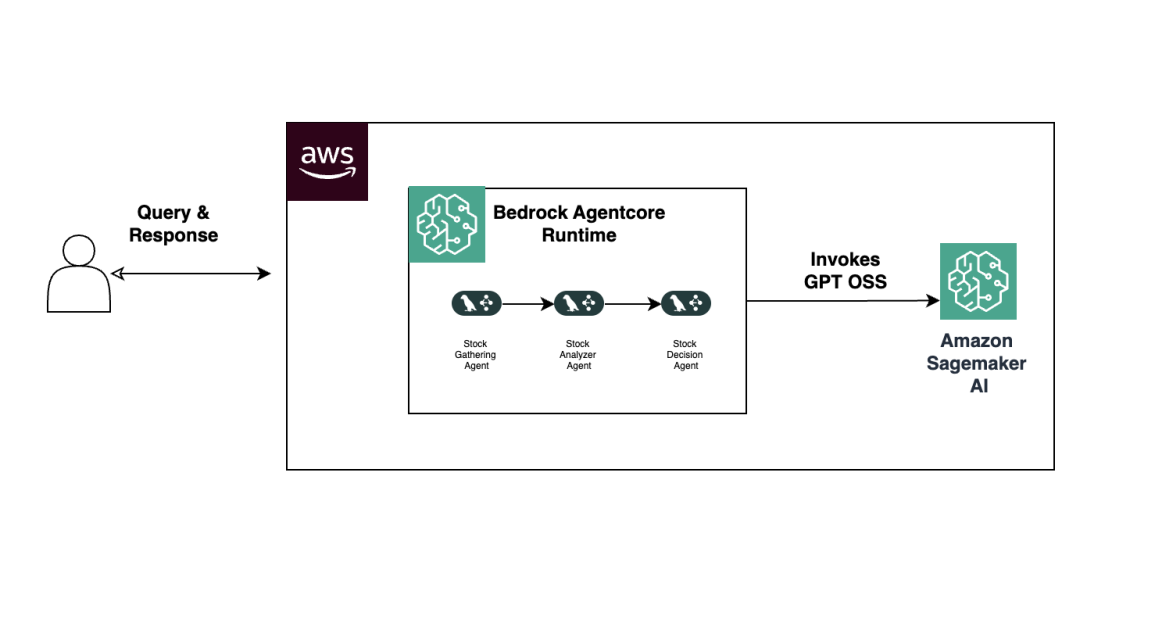

Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

An end-to-end look at deploying OpenAI GPT OSS models on SageMaker AI and Bedrock AgentCore to power a multi-agent stock analyzer with LangGraph, including 4-bit MXFP4 quantization, serverless orchestration, and scalable inference.