Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Sources: https://aws.amazon.com/blogs/machine-learning/use-aws-deep-learning-containers-with-amazon-sagemaker-ai-managed-mlflow/, AWS ML Blog

TL;DR

- Integrates AWS Deep Learning Containers (DLCs) with SageMaker AI managed MLflow to balance infrastructure control with robust ML governance.

- Uses a TensorFlow abalone age prediction workflow where training runs in DLCs, artifacts are stored in Amazon S3, and results are logged to MLflow for centralized tracking.

- SageMaker managed MLflow provides one-line automatic logging, enhanced comparison capabilities, and complete lineage tracking for auditability.

- The approach offers a balance between customization and standardization, enabling organizations to standardize ML workflows while preserving infrastructure flexibility via DLCs or DLAMIs.

- Prerequisites include pulling an optimized TensorFlow training container from the AWS public Elastic Container Registry (ECR) and following the accompanying GitHub README for provisioning and execution.

Context and background

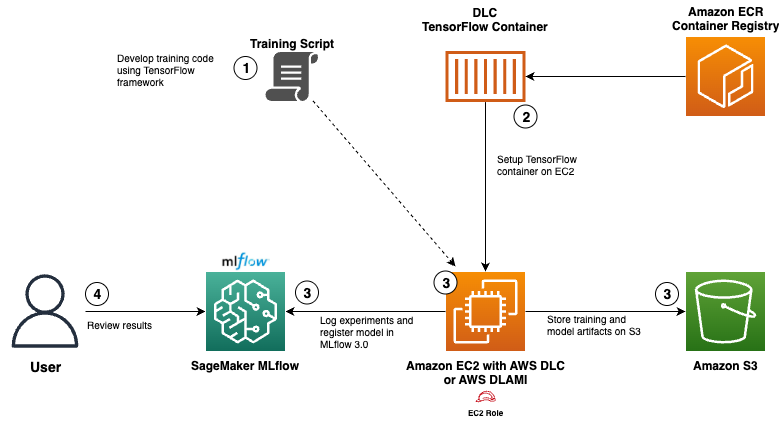

Organizations building custom machine learning (ML) models often have specialized requirements that standard platforms can’t accommodate. For example, healthcare companies need specific environments to protect patient data while meeting HIPAA compliance, financial institutions require specific hardware configurations to optimize proprietary trading algorithms, and research teams need flexibility to experiment with cutting-edge techniques using custom frameworks. These needs drive teams to construct custom training environments that grant control over hardware selection, software versions, and security configurations. While this flexibility is valuable, it also creates challenges for ML lifecycle management, increasing operational costs and demanding engineering resources.

AWS Deep Learning Containers (DLCs) and SageMaker AI managed MLflow together address both control and governance. DLCs are preconfigured Docker containers that bundle popular frameworks (such as TensorFlow and PyTorch) with NVIDIA CUDA drivers for GPU support, and they are optimized for performance on AWS. They are regularly updated with the latest framework versions and patches and are designed to integrate with AWS services for training and inference. AWS Deep Learning AMIs (DLAMIs) are preconfigured Amazon Machine Images for EC2 instances, including CUDA, cuDNN, and other essential tools, with updates managed by AWS. Together, DLAMIs and DLCs give ML practitioners the infrastructure and tooling to accelerate deep learning in the cloud at scale.

SageMaker managed MLflow delivers comprehensive lifecycle management with one-line automatic logging, enhanced comparison capabilities, and complete lineage tracking. As a fully managed service on SageMaker AI, it removes the operational burden of maintaining tracking infrastructure. The integration showcased here demonstrates how to combine AWS DLCs with MLflow to achieve a governance-focused workflow while preserving infrastructure flexibility. The approach is described through a functional setup that can be adapted to meet specialized requirements and reduce the time and resources needed for ML lifecycle management.

In the described solution, you develop a TensorFlow neural network model for abalone age prediction with integrated SageMaker managed MLflow tracking. You pull an optimized TensorFlow training container from the AWS public ECR repository, configure an EC2 instance with access to the MLflow tracking server, and execute the training within the DLC. Model artifacts are stored in Amazon S3, and experiment results are logged to MLflow. Finally, you can view and compare experiment results in the MLflow UI to evaluate model performance. The overall workflow, as depicted in the accompanying diagram, shows the interaction between AWS services, AWS DLCs, and SageMaker managed MLflow. The steps include provisioning infrastructure, configuring permissions, running the first training job, and employing comprehensive experiment tracking throughout the ML lifecycle.
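The container steps described above can be sketched in Python as small command builders. This is a minimal sketch under stated assumptions, not the post's code: the ECR Public repository path, the `/workspace` mount point, the `train.py` script name, and all function names are hypothetical, and the image tag is left for you to supply from the DLC release notes. `MLFLOW_TRACKING_URI` is the environment variable MLflow reads for its tracking URI.

```python
from typing import List

# Assumption: TensorFlow training DLCs are published under this ECR Public
# path. Verify the repository and tag against the DLC release notes.
PUBLIC_ECR_REPO = "public.ecr.aws/deep-learning-containers/tensorflow-training"


def dlc_image_uri(tag: str, repo: str = PUBLIC_ECR_REPO) -> str:
    """Return the full image reference for a TensorFlow training DLC."""
    if not tag:
        raise ValueError("an explicit image tag is required")
    return f"{repo}:{tag}"


def docker_pull_command(image: str) -> List[str]:
    """Build the command that pulls the DLC image onto the EC2 instance."""
    return ["docker", "pull", image]


def docker_train_command(
    image: str,
    tracking_arn: str,
    workdir: str,
    script: str = "train.py",  # hypothetical training script name
) -> List[str]:
    """Build the command that runs a training script inside the DLC.

    The host directory `workdir` is mounted at /workspace, and the MLflow
    tracking server ARN is passed in through MLFLOW_TRACKING_URI.
    """
    return [
        "docker", "run", "--rm",
        "-v", f"{workdir}:/workspace",
        "-w", "/workspace",
        "-e", f"MLFLOW_TRACKING_URI={tracking_arn}",
        image,
        "python", script,
    ]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` on the EC2 instance. On GPU instances you would likely also add `--gpus all` to the `docker run` arguments.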

What’s new

This post introduces a practical integration pattern that balances infrastructure control with governance by combining DLCs (or DLAMIs) with SageMaker AI managed MLflow. The workflow enables end-to-end tracking, model provenance, and governance directly from training runs to registered models, providing a central, auditable history of experiments, metrics, hyperparameters, and artifacts. The solution demonstrates how to:

- Run training in DLCs while securely storing artifacts in S3.

- Log experiments, metrics, and parameters to a centralized MLflow tracking server managed by SageMaker AI.

- Automatically register models in the SageMaker Model Registry through MLflow integration, creating a traceable link from training runs to production models.

- Use the MLflow UI to compare runs, inspect provenance, and gain visibility into training dynamics and convergence.

- Establish a scalable, governed ML workflow that accommodates specialized environments while maintaining standardization where possible.

The post also highlights the prerequisites and provides references to detailed step-by-step instructions in the accompanying GitHub repository README, ensuring teams can provision infrastructure and execute their first training job with full experiment tracking.
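The experiment-logging step in the list above could look roughly like the following sketch. It assumes the `mlflow` package and the `sagemaker-mlflow` plugin are installed in the container, that the tracking URI is the ARN of your SageMaker managed MLflow tracking server, and that training produces a Keras-style history dictionary (metric name mapped to per-epoch values). The function names are hypothetical and the repository's actual training script may differ.

```python
from typing import Dict, List, Mapping, Sequence, Tuple


def history_to_metrics(
    history: Mapping[str, Sequence[float]],
) -> List[Tuple[str, float, int]]:
    """Flatten a Keras-style history dict into (name, value, epoch) triples."""
    return [
        (name, float(value), epoch)
        for name, values in sorted(history.items())
        for epoch, value in enumerate(values)
    ]


def log_training_run(
    tracking_arn: str,
    experiment: str,
    params: Mapping[str, object],
    history: Mapping[str, Sequence[float]],
) -> str:
    """Log hyperparameters and per-epoch metrics to MLflow; return the run ID."""
    # Imported lazily so this module can be imported where MLflow is absent.
    import mlflow

    # Assumption: the sagemaker-mlflow plugin lets MLflow resolve this ARN.
    mlflow.set_tracking_uri(tracking_arn)
    mlflow.set_experiment(experiment)

    with mlflow.start_run() as run:
        mlflow.log_params(dict(params))
        for name, value, epoch in history_to_metrics(history):
            mlflow.log_metric(name, value, step=epoch)
        return run.info.run_id
```

For the one-line automatic logging the post refers to, calling `mlflow.tensorflow.autolog()` before training is the usual MLflow alternative to the explicit `log_params` and `log_metric` calls shown here.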

Why it matters (impact for developers/enterprises)

For organizations with strict governance requirements or unique compute and security needs, the ability to control the training environment without sacrificing governance is essential. By combining DLCs or DLAMIs with SageMaker AI managed MLflow, teams can:

- Preserve flexibility to tailor environments for HIPAA compliance, specialized hardware configurations, or cutting-edge experimentation, while benefiting from centralized governance.

- Achieve comprehensive experiment tracking, model provenance, and an auditable lineage from training runs to production deployments.

- Accelerate the ML lifecycle from experimentation to deployment by reducing operational overhead associated with tracking infrastructure.

- Standardize ML workflows across teams and projects, enabling reproducibility and easier collaboration while maintaining the safeguards required by regulated industries.

The approach aligns with broader aims to balance customization and governance in enterprise AI initiatives, enabling data scientists and administrators to work more efficiently without compromising security, traceability, or compliance.

Technical details and implementation

Key elements of the described solution include the following components and steps:

- AWS Deep Learning Containers (DLCs): preconfigured Docker containers with frameworks like TensorFlow and PyTorch, including NVIDIA CUDA drivers for GPU support; DLCs are optimized for AWS and regularly updated. DLCs are designed to integrate with AWS services for training and inference.

- AWS Deep Learning AMIs (DLAMIs): preconfigured AMIs for EC2 with popular frameworks; available for CPU and GPU instances; include CUDA, cuDNN, and other necessary tools; AWS manages updates.

- SageMaker managed MLflow: provides one-line automatic logging, enhanced comparisons, and complete lineage tracking; a fully managed MLflow experience on SageMaker AI.

- Implementation steps (at a high level):

  - Pull an optimized TensorFlow training container from the AWS public ECR repository.

  - Configure an EC2 instance with access to the MLflow tracking server.

  - Run the training process within the DLC while storing model artifacts in Amazon S3 and logging experiment results to MLflow.

  - View and compare experiment results in the MLflow UI to evaluate model performance.

- When logging a model with mlflow.tensorflow.log_model() using the registered_model_name parameter, the model is automatically registered in the Amazon SageMaker Model Registry, establishing a direct audit trail from training to production.

- The model artifacts are stored in S3 following a standardized structure, ensuring weights, configurations, and metadata are preserved and accessible.

- Practical example: abalone age prediction with a TensorFlow model, tracked under the experiment abalone-tensorflow-experiment in a run named unique-cod-104. The example shows how governance features and model versioning surface in the MLflow UI and the model registry.

- Reference implementation: all code examples and implementation details are provided in the accompanying GitHub repository README, which covers provisioning, permissions, and training with full experiment tracking.

As shown in the visuals accompanying the post, SageMaker managed MLflow provides a central hub for tracking metrics, parameters, artifacts, and registered models, offering a complete governance and audit trail for ML workflows. The integration ensures that training results can be traced to the exact model deployed in production, delivering traceability and compliance across the ML lifecycle.
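The registration step described above, logging with `mlflow.tensorflow.log_model()` and the `registered_model_name` parameter, might be sketched as follows. This assumes `mlflow`, `tensorflow`, and the `sagemaker-mlflow` plugin are installed and that `model` is an already trained Keras model. The function names, the `"model"` artifact path, and any registered model name you pass in are illustrative choices, not values from the post.

```python
def registered_model_uri(name: str, version: int) -> str:
    """Return the MLflow URI for one version of a registered model."""
    return f"models:/{name}/{version}"


def register_trained_model(
    model: object,
    tracking_arn: str,
    experiment: str,
    registered_model_name: str,
) -> str:
    """Log a trained Keras model and register it; return the MLflow run ID."""
    # Imported lazily so this module can be imported where MLflow is absent.
    import mlflow
    import mlflow.tensorflow

    # Assumption: the sagemaker-mlflow plugin lets MLflow resolve this ARN.
    mlflow.set_tracking_uri(tracking_arn)
    mlflow.set_experiment(experiment)

    with mlflow.start_run() as run:
        # Passing registered_model_name creates the registered model, or a
        # new version of it, as part of logging the artifacts.
        mlflow.tensorflow.log_model(
            model,
            "model",  # hypothetical artifact path within the run
            registered_model_name=registered_model_name,
        )
        return run.info.run_id
```

A URI built by `registered_model_uri` can later be passed to `mlflow.pyfunc.load_model` to load a specific registered version, which is one way to tie a deployed model back to the run that produced it.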

Key takeaways

- DLCs (and DLAMIs) balance customization with managed infrastructure for ML workflows on AWS.

- SageMaker AI managed MLflow adds robust governance features, including one-line logging, lineage tracking, and model registry integration.

- The TensorFlow abalone age-prediction workflow demonstrates end-to-end governance: from training runs to artifact storage, experiment comparison, and production deployment traceability.

- Centralized MLflow tracking in SageMaker AI simplifies auditing, provenance, and collaboration across data science and operations teams.

- The approach reduces operational overhead while preserving flexibility for specialized requirements.

FAQ

- What are AWS DLCs and DLAMIs?

  DLCs are preconfigured Docker containers with frameworks like TensorFlow and PyTorch, including NVIDIA CUDA drivers. DLAMIs are preconfigured Amazon Machine Images for EC2 instances that include CUDA, cuDNN, and other necessary tools, with AWS managing updates.

- How does SageMaker managed MLflow help governance?

  It provides comprehensive lifecycle management with one-line automatic logging, enhanced comparison capabilities, and complete lineage tracking, all within a fully managed SageMaker AI environment.

- What is the abalone example used in the walkthrough?

  It is a TensorFlow neural network model for abalone age prediction used to demonstrate end-to-end training, logging, and governance with MLflow and SageMaker.

- Where are artifacts and results stored in this workflow?

  Model artifacts are stored in Amazon S3, while experiment metrics and parameters are logged to MLflow for centralized tracking and governance.

- How can I start implementing this pattern?

  Refer to the accompanying GitHub repository README for detailed, step-by-step instructions on provisioning infrastructure, setting permissions, and running the first training job with MLflow governance.
