Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

Source: https://aws.amazon.com/blogs/machine-learning/build-agentic-workflows-with-openai-gpt-oss-on-amazon-sagemaker-ai-and-amazon-bedrock-agentcore (AWS Machine Learning Blog)

TL;DR

- OpenAI released two open-weight models, gpt-oss-120b (117B parameters) and gpt-oss-20b (21B parameters), both with a 128K context window and a sparse Mixture of Experts (MoE) design.

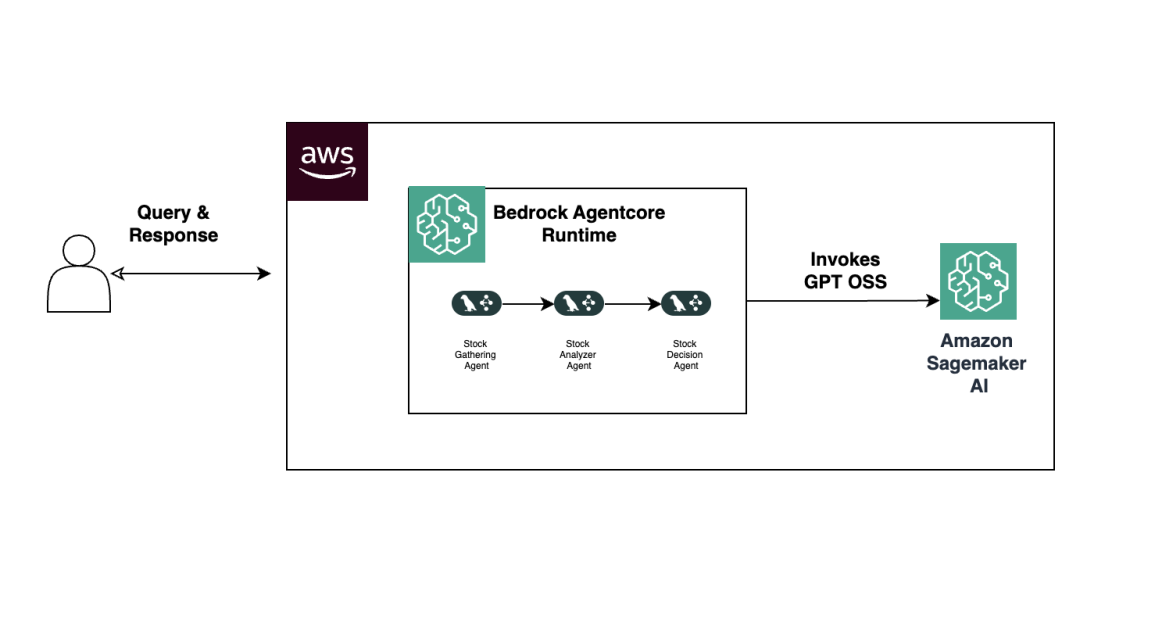

- The post deploys gpt-oss-20b to a SageMaker AI managed endpoint using a vLLM container, builds a multi-agent stock analyzer with LangGraph, and then deploys that agent to Amazon Bedrock AgentCore Runtime.

- A three-agent pipeline (Data Gathering Agent, Stock Performance Analyzer Agent, Stock Report Generation Agent) runs on Bedrock AgentCore Runtime, while gpt-oss, served from SageMaker AI, handles language understanding and generation.

- 4-bit MXFP4 quantization shrinks the model weights to 63 GB (gpt-oss-120b) or 14 GB (gpt-oss-20b), so either model can run on a single H100 GPU. Deployment options on SageMaker AI include bring-your-own-container (BYOC) and fully managed hosting.

- The solution emphasizes serverless, modular, and scalable agentic systems with persistent memory and workflow orchestration, and walks through deployment, invocation, and cleanup step by step. This summary is based on the approach AWS and OpenAI describe for building agentic workflows with gpt-oss on SageMaker AI and Bedrock AgentCore; see the AWS blog for details.
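Both models are described as using a sparse Mixture of Experts design. As a rough illustration of what "sparse" means, the toy Python sketch below routes a single token through only its top-k experts and mixes their outputs with softmax gates. It is not gpt-oss's actual implementation: the router vectors, the expert functions, and the choice to apply softmax over only the selected experts' scores are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x: Vector, router: Sequence[Vector], experts: Sequence[Expert],
              k: int) -> Tuple[Vector, List[int]]:
    """Route one token through its top-k experts and mix their outputs (toy sketch)."""
    # Router score for each expert: dot product of that expert's router vector with the token.
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router]
    # Keep only the k highest-scoring experts; the others are never evaluated (sparsity).
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Gate weights: softmax over the selected experts' scores only (an assumption here).
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top
```

Because only k experts run per token, compute per token scales with k rather than with the total number of experts, which is how an MoE model can hold far more parameters than it uses on any single forward pass.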
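Once the gpt-oss-20b endpoint is running, the agents call it like any SageMaker real-time endpoint. The sketch below assumes the vLLM container accepts and returns OpenAI-style chat-completions JSON (vLLM can serve that schema, but check what your container's serving code actually expects). The helper names and default sampling values are hypothetical; `invoke_endpoint` on the boto3 `sagemaker-runtime` client is the standard call for real-time inference.

```python
import json
from typing import Optional


def build_chat_request(user_prompt: str, system_prompt: Optional[str] = None,
                       max_tokens: int = 512, temperature: float = 0.2) -> bytes:
    """Serialize a chat request, ASSUMING an OpenAI-style chat-completions body."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    body = {"messages": messages, "max_tokens": max_tokens, "temperature": temperature}
    return json.dumps(body).encode("utf-8")


def extract_text(response: dict) -> str:
    """Pull the assistant reply out of an OpenAI-style chat-completions response."""
    return response["choices"][0]["message"]["content"]


def invoke_gpt_oss(endpoint_name: str, body: bytes, region: Optional[str] = None) -> dict:
    """Send the request to a SageMaker real-time endpoint and decode the JSON reply."""
    import boto3  # imported lazily so the helpers above work without the AWS SDK

    runtime = boto3.client("sagemaker-runtime", region_name=region)
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=body,
    )
    # The response body is a stream; read it fully and parse it as JSON.
    return json.loads(response["Body"].read())
```

A call would look like `extract_text(invoke_gpt_oss("my-gpt-oss-20b-endpoint", build_chat_request("Summarize AMZN's recent performance.")))`, where the endpoint name is a placeholder for whatever name you gave your deployment.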
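The three-agent stock analyzer is essentially a chain of steps that read from and write to shared state. The framework-free sketch below mimics that shape rather than using LangGraph itself: each node takes the current state and returns a partial update, which is the node contract LangGraph's `StateGraph` uses. The `llm` and `fetch_prices` callables are hypothetical stand-ins for the gpt-oss endpoint and a market-data source, and the analysis metric is deliberately simplistic.

```python
from typing import Callable, List, TypedDict


class StockState(TypedDict, total=False):
    ticker: str
    prices: List[float]  # recent closing prices, oldest first
    analysis: str
    report: str


def make_pipeline(llm: Callable[[str], str],
                  fetch_prices: Callable[[str], List[float]]) -> Callable[[str], StockState]:
    """Build a sequential gather -> analyze -> report pipeline (hypothetical helper)."""

    def gather_data(state: StockState) -> StockState:
        # Data Gathering Agent: fetch raw market data for the ticker.
        return {"prices": fetch_prices(state["ticker"])}

    def analyze_performance(state: StockState) -> StockState:
        # Stock Performance Analyzer Agent: compute a simple metric, ask the LLM to interpret it.
        prices = state["prices"]
        change_pct = (prices[-1] - prices[0]) / prices[0] * 100
        prompt = (f"{state['ticker']} moved {change_pct:.1f}% over "
                  f"{len(prices)} closes. Assess the trend.")
        return {"analysis": llm(prompt)}

    def generate_report(state: StockState) -> StockState:
        # Stock Report Generation Agent: turn the analysis into a readable report.
        return {"report": llm(f"Write a short report on {state['ticker']}: {state['analysis']}")}

    nodes = [gather_data, analyze_performance, generate_report]

    def run(ticker: str) -> StockState:
        state: StockState = {"ticker": ticker}
        for node in nodes:
            # Merge each node's partial update; a later value overwrites an earlier one per key.
            state.update(node(state))
        return state

    return run
```

In LangGraph you would register the same three functions with `StateGraph.add_node`, chain them with `add_edge` from `START` to `END`, and call `compile()`; the hand-written loop here only makes the sequential state merging explicit.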
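The quoted weight sizes follow from simple arithmetic. MXFP4, one of the OCP Microscaling (MX) formats, stores each weight as a 4-bit float and shares one 8-bit scale across each block of 32 weights, for about 4.25 bits per weight. The sketch below assumes every weight is stored in MXFP4 and counts 1 GB as 10^9 bytes, so it gives a lower bound for the weights alone (no KV cache or activations); the reported 63 GB and 14 GB are somewhat higher, consistent with some layers being kept at higher precision.

```python
# Approximate weight storage for the two gpt-oss models (illustrative arithmetic only).
MXFP4_BITS = 4 + 8 / 32  # 4-bit element + one 8-bit shared scale per 32-weight block = 4.25
BF16_BITS = 16

MODELS = {"gpt-oss-120b": 117e9, "gpt-oss-20b": 21e9}  # total parameter counts


def weight_gb(params: float, bits_per_param: float) -> float:
    """Storage for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name}: BF16 ~{weight_gb(params, BF16_BITS):.0f} GB, "
              f"all-MXFP4 ~{weight_gb(params, MXFP4_BITS):.0f} GB")
```

For gpt-oss-120b the all-MXFP4 estimate comes out near 62 GB, which fits in an 80 GB H100, whereas the same 117B parameters in BF16 would need about 234 GB.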
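On the SageMaker side, cleanup comes down to deleting the endpoint, its endpoint configuration, and the model(s) behind it, since a real-time endpoint is billed while it is in service. The sketch below uses standard boto3 `sagemaker` client calls; it assumes a single-endpoint deployment and covers only SageMaker resources, not the AgentCore Runtime or any container images. The function name is hypothetical, and the client is injectable so the logic can be exercised without AWS credentials.

```python
from typing import List


def cleanup_sagemaker_endpoint(endpoint_name: str, sm_client=None) -> List[str]:
    """Delete an endpoint plus its endpoint config and models; return the deleted names."""
    if sm_client is None:
        import boto3  # lazy import so a stub client can be passed in for testing
        sm_client = boto3.client("sagemaker")

    # Look up dependent resources first: their names come from the endpoint's description.
    config_name = sm_client.describe_endpoint(EndpointName=endpoint_name)["EndpointConfigName"]
    variants = sm_client.describe_endpoint_config(EndpointConfigName=config_name)["ProductionVariants"]
    model_names = [v["ModelName"] for v in variants if v.get("ModelName")]

    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for model_name in model_names:
        sm_client.delete_model(ModelName=model_name)
    return [endpoint_name, config_name, *model_names]
```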
