Unified multimodal access layer for Quora’s Poe using Amazon Bedrock

Sources: https://aws.amazon.com/blogs/machine-learning/unified-multimodal-access-layer-for-quoras-poe-using-amazon-bedrock/, AWS ML Blog

TL;DR

- A unified wrapper API enables a build-once, deploy-many approach for Amazon Bedrock models on Poe.

- The framework translates between Poe’s Server-Sent Events (SSE) protocol and Bedrock’s REST-based APIs, making hybrid use of the Converse API that Bedrock introduced in May 2024.

- A template-based configuration system dramatically reduces deployment time to about 15 minutes and scales to 30+ Bedrock models across text, image, and video modalities.

- The solution reduces code changes by up to 95% and supports high-volume production with robust error handling, token accounting, and secure AWS authentication.

Context and background

Organizations increasingly rely on Generative AI Gateway architectures to access multiple foundation models (FMs) through a single, normalized API. Building and maintaining a separate integration for every model creates substantial engineering effort, maintainability challenges, and onboarding friction for new models. To address these issues, the AWS Generative AI Innovation Center and Quora collaborated on a unified wrapper API framework that standardizes access to Bedrock FMs for Poe.

Poe is Quora’s multi-model AI system that lets users interact with a library of AI models and assistants from various providers. Its interface supports side-by-side conversations across models for tasks such as natural language understanding, content generation, and image creation.

The integration required reconciling Poe’s event-driven Server-Sent Events (SSE) architecture, built with FastAPI (fastapi_poe), with Bedrock’s REST-based API surface, AWS SDK usage patterns, SigV4 authentication, region-specific model availability, and streaming options. The effort also highlighted the need for maintainability and rapid onboarding as new Bedrock models become available.

In May 2024, Amazon Bedrock introduced the Converse API, whose standardized request and response format simplified integration. The solution uses the Converse API where appropriate while preserving compatibility with model-specific APIs for specialized capabilities. This hybrid approach combines flexibility and standardization in a single integration pathway.
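To make the Converse API’s standardization concrete, here is a minimal sketch that maps Poe-style chat history onto the request shape accepted by the Bedrock Runtime `converse` operation (`modelId`, `messages`, `system`, `inferenceConfig`). `PoeMessage` and `to_converse_request` are hypothetical names standing in for the fastapi_poe protocol message and the framework’s translation code, and merging consecutive same-role turns reflects an assumption that Converse expects alternating user and assistant turns.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class PoeMessage:
    """Hypothetical stand-in for a Poe protocol message."""
    role: str      # "system", "user", or "bot" in Poe's protocol
    content: str


def to_converse_request(
    messages: list[PoeMessage],
    model_id: str,
    max_tokens: int = 1024,
    temperature: float = 0.7,
) -> dict[str, Any]:
    """Build keyword arguments for a Bedrock Runtime Converse call."""
    system: list[dict[str, str]] = []
    converse_messages: list[dict[str, Any]] = []
    for msg in messages:
        if msg.role == "system":
            # Converse takes system prompts separately from the turn list.
            system.append({"text": msg.content})
            continue
        role = "assistant" if msg.role == "bot" else "user"
        if converse_messages and converse_messages[-1]["role"] == role:
            # Assumed alternation requirement: fold repeated roles into one turn.
            converse_messages[-1]["content"].append({"text": msg.content})
        else:
            converse_messages.append({"role": role, "content": [{"text": msg.content}]})
    request: dict[str, Any] = {
        "modelId": model_id,
        "messages": converse_messages,
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }
    if system:
        request["system"] = system
    return request
```

With boto3 and AWS credentials configured, the result could be passed as `boto3.client("bedrock-runtime").converse(**request)`. Because the same dictionary shape works across Converse-compatible models, one translation function can serve many text models.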

What’s new

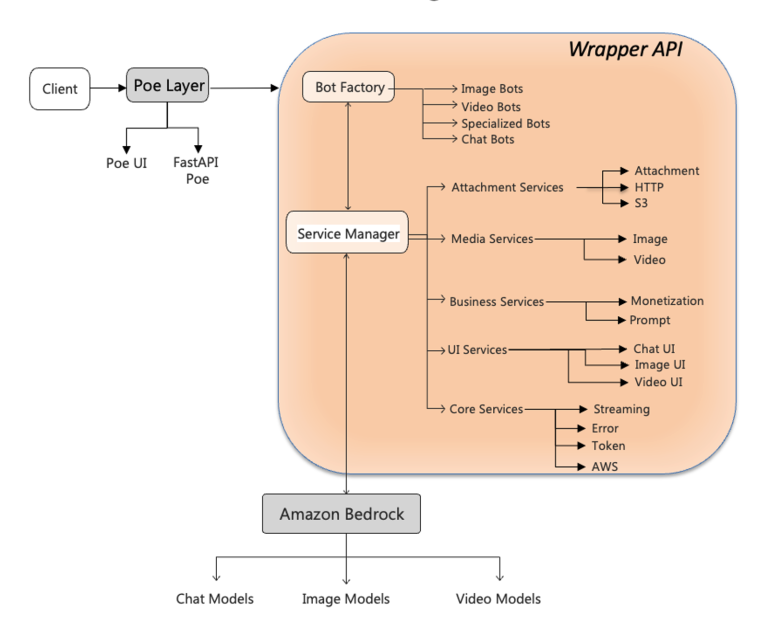

The core advancement is a modular wrapper API framework that sits between Poe and Amazon Bedrock, providing a unified interface and translation layer that normalizes model and protocol differences while preserving each model’s capabilities. Key architectural elements include:

- Bot Factory: Dynamically creates the appropriate model handler based on the requested model type (text, image, or video).

- Service Manager: Orchestrates specialized services needed to process requests, including token services, streaming services, and error handling services.

- Translation layer: Bridges Poe’s SSE-based requests and Bedrock REST endpoints, absorbing subtle differences across models so that every response conforms to Poe’s expected format.

- ErrorService: Provides consistent error handling and clear user messages across models.

- AwsClientService: Manages secure authentication and connection management for Bedrock API calls.

- Template-based configuration: A centralized configuration system with shared defaults and model-specific overrides, enabling rapid onboarding of new models with minimal code changes.

- Token counting and optimization: Tracks token usage for accurate cost estimation and efficient use of Bedrock models.

- Connection pooling with aiobotocore: Enables high-volume, concurrent Bedrock requests by maintaining a pool of connections.

- Converse API usage: Where applicable, the framework uses the Converse API to standardize interactions while preserving model-specific capabilities.

The wrapper API framework delivers a “build once, deploy multiple models” capability, enabling Poe to onboard dozens of Bedrock models across text, image, and video modalities in a fraction of the time prior approaches required. The architecture is modular and scalable, separating concerns to ease future expansion.
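The Bot Factory’s role can be illustrated with a minimal Python sketch, assuming a simple registry keyed by modality. The handler classes (`TextHandler`, `ImageHandler`, `VideoHandler`) and the `BotFactory` methods are hypothetical names, not the framework’s actual code; real handlers would wrap Bedrock calls.

```python
class BaseHandler:
    """Hypothetical base handler; a real one would call Amazon Bedrock."""

    def __init__(self, model_id: str) -> None:
        self.model_id = model_id

    def describe(self) -> str:
        return f"{type(self).__name__}({self.model_id})"


class TextHandler(BaseHandler):
    """Would wrap text generation (for example via the Converse API)."""


class ImageHandler(BaseHandler):
    """Would wrap an image model's model-specific API."""


class VideoHandler(BaseHandler):
    """Would wrap a video model's model-specific API."""


class BotFactory:
    """Creates the handler that matches a requested modality."""

    def __init__(self) -> None:
        self._handlers: dict[str, type[BaseHandler]] = {
            "text": TextHandler,
            "image": ImageHandler,
            "video": VideoHandler,
        }

    def register(self, modality: str, handler: type[BaseHandler]) -> None:
        """Add or replace a modality without touching existing handlers."""
        self._handlers[modality] = handler

    def create(self, modality: str, model_id: str) -> BaseHandler:
        try:
            handler_cls = self._handlers[modality]
        except KeyError:
            raise ValueError(f"unsupported modality: {modality!r}") from None
        return handler_cls(model_id)
```

Adding a modality touches only the registry, which mirrors the goal of expanding capabilities without rewriting existing integration logic.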

Why it matters (impact for developers/enterprises)

- Accelerated multi-model deployment: The template-based configuration and unified translation layer dramatically shorten onboarding times and reduce engineering effort.

- Expanded model diversity: Poe can rapidly expand its Bedrock model catalog, including dozens of models across modalities, without rewriting integration logic for each model.

- Operational control and reliability: Consistent error handling, token accounting, and secure AWS authentication provide reliable, predictable behavior across models and environments.

- Maintainability: A single abstraction layer reduces the maintenance burden associated with evolving model APIs and Bedrock features.

- Production readiness: The architecture is designed to handle high-volume workloads with robust scaling strategies and streamlined deployment processes.

Technical details and implementation

The most technically challenging aspect was bridging Poe’s SSE-based event model with Bedrock’s REST-based API surface. The team implemented a protocol translation layer that normalizes responses and keeps Poe’s expected format intact regardless of the underlying Bedrock model. Key components and practices include:

- BotConfig and template-based configuration: The BotConfig class provides structured, type-validated definitions for bot configurations, enabling consistent deployment patterns and predictable behavior across models.

- Error handling and normalization: The ErrorService ensures meaningful, consistent error messages across diverse model failures and API conditions.

- Token counting and optimization: Detailed token accounting supports accurate cost estimation and efficient model usage.

- AwsClientService for authentication: Securely handles AWS authentication, with error handling and connection management for Bedrock services.

- Hybrid API approach: Uses the Converse API for standardization while maintaining compatibility with model-specific APIs for specialized capabilities.

- Multimodal support: The framework demonstrates capabilities across text, image, and video modalities by enabling integrations with a broad set of Bedrock models.

- Production readiness: The wrapper is designed for high-throughput workloads and uses aiobotocore to maintain a pool of Bedrock connections.

A notable impact metric is deployment efficiency: the team onboarded more than 30 Bedrock models across modalities in weeks rather than months, with code changes reduced by as much as 95%. The approach also shows how a unified configuration system and protocol translation layer can accelerate innovation cycles while maintaining operational control.
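The protocol translation, token accounting, and error normalization described above can be sketched together, assuming the Bedrock Runtime `converse_stream` response, whose `stream` yields events such as `contentBlockDelta` and a final `metadata` event carrying token usage. The function emits SSE frames using event names modeled on Poe’s protocol (`text`, `error`, `done`). The helper names and the catch-all error mapping are illustrative assumptions, not the framework’s actual ErrorService or token service.

```python
import json
from typing import Any, Iterable, Iterator


def sse_event(event: str, data: dict[str, Any]) -> str:
    """Format one Server-Sent Events frame: event line, data line, blank line."""
    return f"event: {event}\ndata: {json.dumps(data)}\n\n"


def converse_stream_to_sse(
    stream: Iterable[dict[str, Any]], usage: dict[str, int]
) -> Iterator[str]:
    """Translate Bedrock ConverseStream events into Poe-style SSE frames.

    Token counts from the metadata event are copied into `usage` so the
    caller can record them for cost accounting (hypothetical hook).
    """
    try:
        for event in stream:
            if "contentBlockDelta" in event:
                text = event["contentBlockDelta"]["delta"].get("text")
                if text:
                    yield sse_event("text", {"text": text})
            elif "metadata" in event:
                usage.update(event["metadata"].get("usage", {}))
    except Exception as exc:
        # Illustrative normalization: any failure becomes one error frame.
        yield sse_event("error", {"text": f"Model error: {exc}", "allow_retry": True})
    # Always close the response so the client stops waiting.
    yield sse_event("done", {})
```

In a live server, `stream` would be `response["stream"]` from `client.converse_stream(**request)`, and the collected `usage` entries (`inputTokens`, `outputTokens`, `totalTokens`) would feed token accounting.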

Deployment and model catalog context

The Bedrock Model Catalog serves as a central hub for discovering and evaluating a diverse set of foundation models from multiple providers. On Poe’s side, the integration enabled a scalable model catalog experience where models are presented as individual chatbots in Poe’s user interface, enabling users to engage with multiple providers through a single platform.
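Because each Bedrock model surfaces as its own Poe bot, a template-based configuration could generate those bots from shared defaults plus per-model overrides. The sketch below assumes a frozen dataclass named `BotConfig`; the article names the class but not its fields, so the field names, default values, the 0 to 1 temperature check, and the example model IDs are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any

# Hypothetical shared defaults; every bot inherits these unless overridden.
DEFAULTS: dict[str, Any] = {
    "modality": "text",
    "region": "us-east-1",
    "use_converse": True,
    "max_tokens": 1024,
    "temperature": 0.7,
}


@dataclass(frozen=True)
class BotConfig:
    """Illustrative fields only (hypothetical)."""
    bot_name: str
    model_id: str
    modality: str
    region: str
    use_converse: bool
    max_tokens: int
    temperature: float

    def __post_init__(self) -> None:
        # Validate at load time so a bad template fails before serving traffic.
        if self.modality not in {"text", "image", "video"}:
            raise ValueError(f"unknown modality: {self.modality!r}")
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")
        if not 0.0 <= self.temperature <= 1.0:  # assumed range
            raise ValueError("temperature must be between 0 and 1")


def build_config(bot_name: str, model_id: str, **overrides: Any) -> BotConfig:
    """Merge shared defaults with model-specific overrides."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise ValueError(f"unknown config keys: {sorted(unknown)}")
    return BotConfig(bot_name=bot_name, model_id=model_id, **{**DEFAULTS, **overrides})


# Hypothetical catalog: adding a model is one more entry, not new integration code.
CATALOG = [
    build_config("ExampleTextBot", "example.text-model-v1"),
    build_config("ExampleImageBot", "example.image-model-v1",
                 modality="image", use_converse=False),
]
```

Rejecting unknown override keys keeps typos in a template from silently falling back to defaults, which is one way to limit per-model configuration drift.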

Key metrics and comparisons

| Metric | Before integration | After integration |
|---|---|---|
| Deployment time to onboard Bedrock models | Days to weeks | ~15 minutes |
| Number of Bedrock models onboarded | Not readily scalable | >30 across text, image, and video |
| Code changes required per model onboarding | Significant | Up to 95% reduction |
| Concurrency strategy | Manual, separate connections | aiobotocore connection pooling for Bedrock |

Key takeaways

- A well-designed abstraction layer can dramatically simplify multi-model deployments by normalizing protocols and interfaces.

- A template-driven configuration model accelerates onboarding and reduces the risk of per-model integration drift.

- Hybrid API strategies that combine standardized APIs (Converse) with model-specific APIs can balance consistency and capability.

- Strong operational primitives, such as error handling, token accounting, and secure authentication, are critical for production-scale multi-model AI systems.

- Modular architectures enable rapid expansion of model catalogs while maintaining predictable behavior and performance.

FAQ

What problem does the wrapper API solve?

It provides a unified interface to Poe that normalizes differences between Poe’s SSE-based architecture and Bedrock’s REST-based APIs, reducing integration complexity and maintenance burden.

How does the system bridge Poe SSE and Bedrock REST?

Through a protocol translation layer that ensures responses conform to Poe’s expected format, while leveraging Bedrock REST endpoints and, where appropriate, the Converse API for standardization.

What role does the Converse API play in this integration?

The wrapper uses the Converse API where appropriate for its standardization benefits and relies on model-specific APIs for specialized features.

How many Bedrock models were onboarded and across which modalities?

The integration enabled onboarding over 30 Bedrock models across text, image, and video modalities.
