Streamline access to ISO-rating content changes with Verisk Rating Insights and Amazon Bedrock

Sources: https://aws.amazon.com/blogs/machine-learning/streamline-access-to-iso-rating-content-changes-with-verisk-rating-insights-and-amazon-bedrock/, AWS ML Blog

TL;DR

- Verisk Rating Insights is a feature of ISO Electronic Rating Content (ERC) that provides summaries of ISO Rating changes between two releases.

- The solution uses generative AI and AWS services, including Amazon Bedrock, Claude Sonnet 3.5, LlamaIndex, and OpenSearch Serverless with Retrieval Augmented Generation (RAG) to enable a conversational interface.

- It eliminates the need to manually download entire content packages, reducing analysis time from 3–4 hours per test case to minutes and lowering the customer support burden.

- Guardrails, governance, and data access controls are built into the system to protect compliance, IP, and data privacy, supported by a governance council.

- Verisk plans to expand query capabilities and scale the platform to more users and content sets across product lines.

Context and background

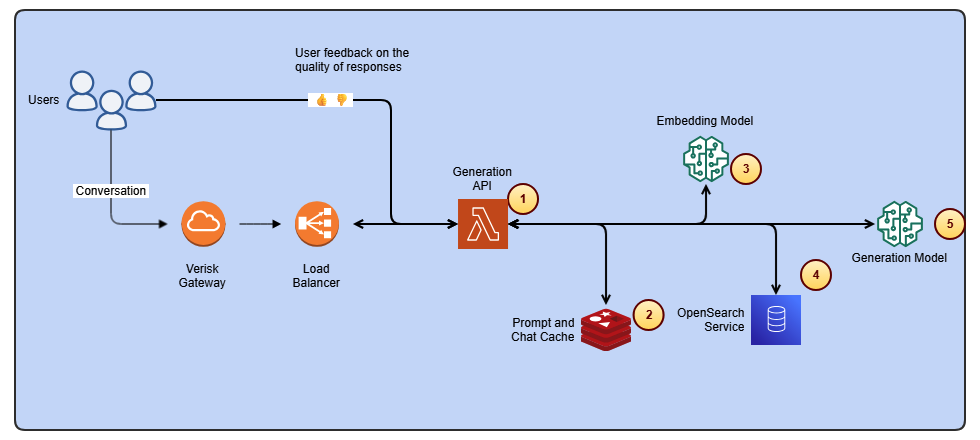

Customers have traditionally accessed ISO Electronic Rating Content (ERC) changes by downloading full content packages or by manually comparing releases. This process is time-consuming and can delay customer support. The AWS ML Blog describes how Verisk Rating Insights emerged from the need to improve accessibility and automate repetitive tasks. By combining generative AI with AWS services, Verisk created a conversational platform that helps users retrieve specific information, identify content differences, and work more efficiently.

The solution is powered by Amazon Bedrock, large language models (LLMs), and Retrieval Augmented Generation (RAG), with Claude Sonnet 3.5 handling user queries and generating grounded responses. LlamaIndex serves as the chain framework that connects and manages multiple data sources for dynamic content retrieval, while an OpenSearch Serverless vector database supports search and retrieval over the rating content changes. RAG lets the model pull relevant, up-to-date data without requiring users to scan or download massive packages.

Bedrock Guardrails and custom guardrails around the models help keep outputs within compliance and quality standards. A governance council oversees security, data usage, and IP protection, reinforcing the trustworthiness of the AI-enabled workflow. For more details, see the AWS blog post referenced in the sources.
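
To make the retrieval step concrete, here is a minimal, self-contained sketch of the RAG idea: rank stored change summaries against a question, then place the best matches into the prompt. It is an illustration under stated assumptions, not Verisk's implementation. The bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for the OpenSearch Serverless vector index, and the sample change summaries are invented for the example.

```python
import math
from collections import Counter

# Invented sample data: stand-ins for indexed ERC change summaries.
CHUNKS = [
    "Release 2024.2 revised loss cost factors for commercial auto in territory 12.",
    "Release 2024.2 added a new rule for homeowners wind deductible.",
    "Release 2024.1 updated general liability class codes.",
]


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble a grounded prompt: retrieved context first, then the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\nQuestion: {query}"
    )
```

Calling `build_prompt(q, retrieve(q, CHUNKS))` yields the text that would be sent to the LLM, so the model answers from the retrieved changes rather than from a full content package.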

What’s new

Verisk Rating Insights combines several technologies to give users conversational access to ISO ERC content changes. The platform uses Claude Sonnet 3.5 (model ID: anthropic.claude-3-5-sonnet-20240620-v1:0) to interpret user input and generate contextually relevant responses. LlamaIndex coordinates the data sources, while OpenSearch Serverless stores content changes as vectors and retrieves the most relevant ones for each query. RAG grounds responses in current, authoritative data, reducing the likelihood of outdated or irrelevant outputs.

Alongside the AI components, Verisk implemented governance and safety mechanisms, including Bedrock Guardrails and custom model guardrails that constrain outputs to meet security and compliance requirements. The company established a governance council to supervise AI usage and enforce data usage policies and IP protection, and it implemented strict access controls within the RAG pipeline so that sensitive information remains accessible only to authorized users. The result is a secure, flexible service suited to building AI applications and agents that connect to up-to-date data. The architecture and evaluation loop coordinate multiple LLM calls and check that responses are relevant and grounded in the underlying rating content.

The article also emphasizes the practical benefits for customers: a conversational interface gives self-service access to change summaries and differences, removes the need to download entire ERC packages, and provides grounded, timely insights that support faster decision-making. The solution shows how generative AI can reduce manual effort and improve accuracy in handling ISO ERC content changes, and it positions Amazon Bedrock as the infrastructure for scaling these capabilities across multiple content sets and product lines.

For more context, the AWS blog post provides the full technical narrative and diagrams describing the data ingestion and inference flow, as well as the evaluation and memory strategies used to maintain context across conversations.
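
As a rough illustration of how a grounded question might reach the model, the sketch below builds a request for the Amazon Bedrock Converse API using the model ID quoted above. The article does not say which Bedrock API Verisk calls (LlamaIndex may wrap it), so the use of `boto3` and `converse` here is an assumption, as are the system prompt, the inference settings, the region, and the guardrail identifier, which is a placeholder.

```python
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"  # model ID quoted in the article


def build_converse_request(question, context, guardrail_id=None, guardrail_version="1"):
    """Build keyword arguments for bedrock-runtime's converse() call.

    guardrail_id is a hypothetical placeholder; pass the ID of a guardrail
    created in your own account to have Bedrock Guardrails applied.
    """
    request = {
        "modelId": MODEL_ID,
        # Assumed system prompt, not taken from the article.
        "system": [{"text": "Answer only from the supplied rating-content context."}],
        "messages": [
            {
                "role": "user",
                "content": [{"text": f"Context:\n{context}\n\nQuestion: {question}"}],
            }
        ],
        # Assumed settings: short, deterministic answers.
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.0},
    }
    if guardrail_id:
        request["guardrailConfig"] = {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        }
    return request


def ask(request, region="us-east-1"):
    """Send the request to Bedrock; requires AWS credentials and model access."""
    import boto3  # imported lazily so the request builder works without it

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"]
```

Keeping request construction separate from the network call makes the prompt and guardrail wiring easy to inspect and unit test without touching AWS.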

Why it matters (impact for developers/enterprises)

- Time savings and productivity: By removing the need to download and compare large ERC packages, customers can obtain relevant insights in minutes rather than hours or days. This accelerates decision-making and reduces analyst workload. The article notes substantial time reductions per test case and improved throughput for customer analysis, which also reduces repetitive manual tasks for teams.

- Self-service and customer onboarding: An AI-powered conversational interface enables users to self-serve and obtain answers in real time, which reduces the burden on ERC customer support and speeds onboarding for new customers.

- Improved accuracy and consistency: Grounding responses via RAG reduces hallucinations and irrelevant details by tying outputs to current data sources, while governance and guardrails keep model behavior aligned with compliance standards.

- Scale and automation: The integration with Amazon Bedrock and OpenSearch Serverless, plus the governance framework, provides a scalable foundation to extend this approach to additional content sets and product lines, enabling faster expansion without compromising security or quality.

- Governance and security emphasis: A governance council and strict access controls help safeguard sensitive information and ensure IP protection and contract compliance, addressing the novelty and risk associated with generative AI in regulated domains. These controls are designed to support enterprise adoption while maintaining control over data usage and model behavior.
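
One way to picture access controls inside a RAG pipeline is to attach entitlement metadata to each indexed chunk and drop anything the caller is not entitled to before it reaches the prompt. The sketch below assumes this approach; the article does not describe Verisk's mechanism, and the `Chunk` type, the `allowed_groups` field, and the group names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A retrieved passage plus hypothetical entitlement metadata."""
    text: str
    allowed_groups: frozenset


def authorized_chunks(chunks, user_groups):
    """Keep only chunks that share at least one group with the user."""
    groups = set(user_groups)
    return [c for c in chunks if c.allowed_groups & groups]


# Invented sample data for illustration.
RETRIEVED = [
    Chunk("Commercial auto loss cost change summary.", frozenset({"commercial-auto"})),
    Chunk("Homeowners rule change summary.", frozenset({"homeowners"})),
    Chunk("General release notes.", frozenset({"commercial-auto", "homeowners"})),
]
```

For example, `authorized_chunks(RETRIEVED, ["homeowners"])` keeps the second and third chunks only. In a production system the same condition would more likely be applied as a filter on the vector search itself, so unauthorized content is never retrieved in the first place.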

Technical details and implementation

The Verisk solution described in the AWS blog combines multiple AWS services and AI capabilities to deliver a robust, scalable platform for ISO ERC content changes. The key components include:

- Generative AI layer: Claude Sonnet 3.5 (model ID: anthropic.claude-3-5-sonnet-20240620-v1:0) is used to understand user input and produce detailed, contextually grounded responses.

- Data orchestration: LlamaIndex serves as the chain framework to orchestrate connections to various data sources, enabling dynamic retrieval of content changes and insights.

- Retrieval augmented generation: An OpenSearch Serverless vector database stores content changes as vectors and retrieves them to ground model outputs.

- Guardrails and governance: Bedrock Guardrails along with custom guardrails are implemented to enforce compliance and quality constraints on model outputs. A governance council oversees the AI initiative, with strict controls to ensure data access is limited to authorized users and to protect IP.

- Data ingestion and inference loops: The solution architecture separates data ingestion from the inference loop, which coordinates multiple LLM calls to generate user responses. The post includes step-by-step diagrams that show how data is ingested, indexed, and queried, and how responses are generated and refined iteratively.

- Evaluation and memory: The system includes an evaluation framework and feedback loop to support continuous improvement and address potential issues such as accuracy and consistency. Chat history is captured as contextual memory to ground ongoing conversations and analyses.

These components work together to deliver a conversational interface that can retrieve and summarize ISO ERC changes, identify content differences across releases, and present precise, up-to-date insights without requiring manual downloads or exhaustive searches. The solution is built to be secure, auditable, and scalable, with explicit emphasis on data privacy and IP protection.
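
The chat-history memory mentioned above can be sketched as a bounded buffer of recent turns that is replayed ahead of each new question. This is a minimal illustration, assuming a fixed turn limit and the user/assistant message shape used by the Bedrock Converse API; the `ChatMemory` class and its parameters are hypothetical and not taken from the article.

```python
from collections import deque


class ChatMemory:
    """Keep the most recent question/answer turns as conversational context."""

    def __init__(self, max_turns=3):
        # A deque with maxlen silently drops the oldest turn when full.
        self._turns = deque(maxlen=max_turns)

    def add_turn(self, user_text, assistant_text):
        """Record one completed exchange."""
        self._turns.append((user_text, assistant_text))

    def as_messages(self, new_question):
        """Return prior turns followed by the new question, oldest first."""
        messages = []
        for user_text, assistant_text in self._turns:
            messages.append({"role": "user", "content": [{"text": user_text}]})
            messages.append({"role": "assistant", "content": [{"text": assistant_text}]})
        messages.append({"role": "user", "content": [{"text": new_question}]})
        return messages
```

Bounding the history keeps prompts within the model's context window while still letting a follow-up such as "and for the previous release?" be interpreted against the earlier exchange.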

Key takeaways

- Verisk Rating Insights leverages a modern AI-enabled data platform to streamline access to ISO ERC changes.

- The solution uses Claude Sonnet 3.5, LlamaIndex, OpenSearch Serverless, and RAG to deliver grounded, up-to-date answers.

- Guardrails, governance, and strict data access controls help ensure security, compliance, and IP protection.

- Time savings and improved onboarding lead to lower support costs and greater user satisfaction.

- The architecture is designed for scale, enabling expansion to more users and additional content sets across product lines.

FAQ

- Q: What is Verisk Rating Insights and what problem does it solve? A: It is a feature of ISO ERC that provides summaries of rating changes between releases, and it uses AI and AWS services to enable a conversational interface for retrieving specific content changes and differences.

- Q: Which technologies are used in the platform? A: The platform employs Amazon Bedrock, Claude Sonnet 3.5, LlamaIndex, and OpenSearch Serverless with RAG, supported by Bedrock Guardrails and custom model guardrails for compliance.

- Q: How much time does the solution save for users? A: The automated approach eliminates the need to download entire packages and perform manual searches, reducing analysis time from 3–4 hours per test case to minutes.

- Q: How is data security and IP protection addressed? A: A governance council oversees the solution, and the system uses strict access controls in the RAG pipeline, along with guardrails, to protect data usage, security, and IP.

- Q: What are the plans for the future? A: Verisk intends to expand the scope of queries to support more filing types and broader coverage, and to scale the platform to accommodate more users and additional content sets across product lines.
