Building Towards Age Prediction: OpenAI Tailors ChatGPT for Teens and Families

Sources: https://openai.com/index/building-towards-age-prediction, OpenAI

TL;DR

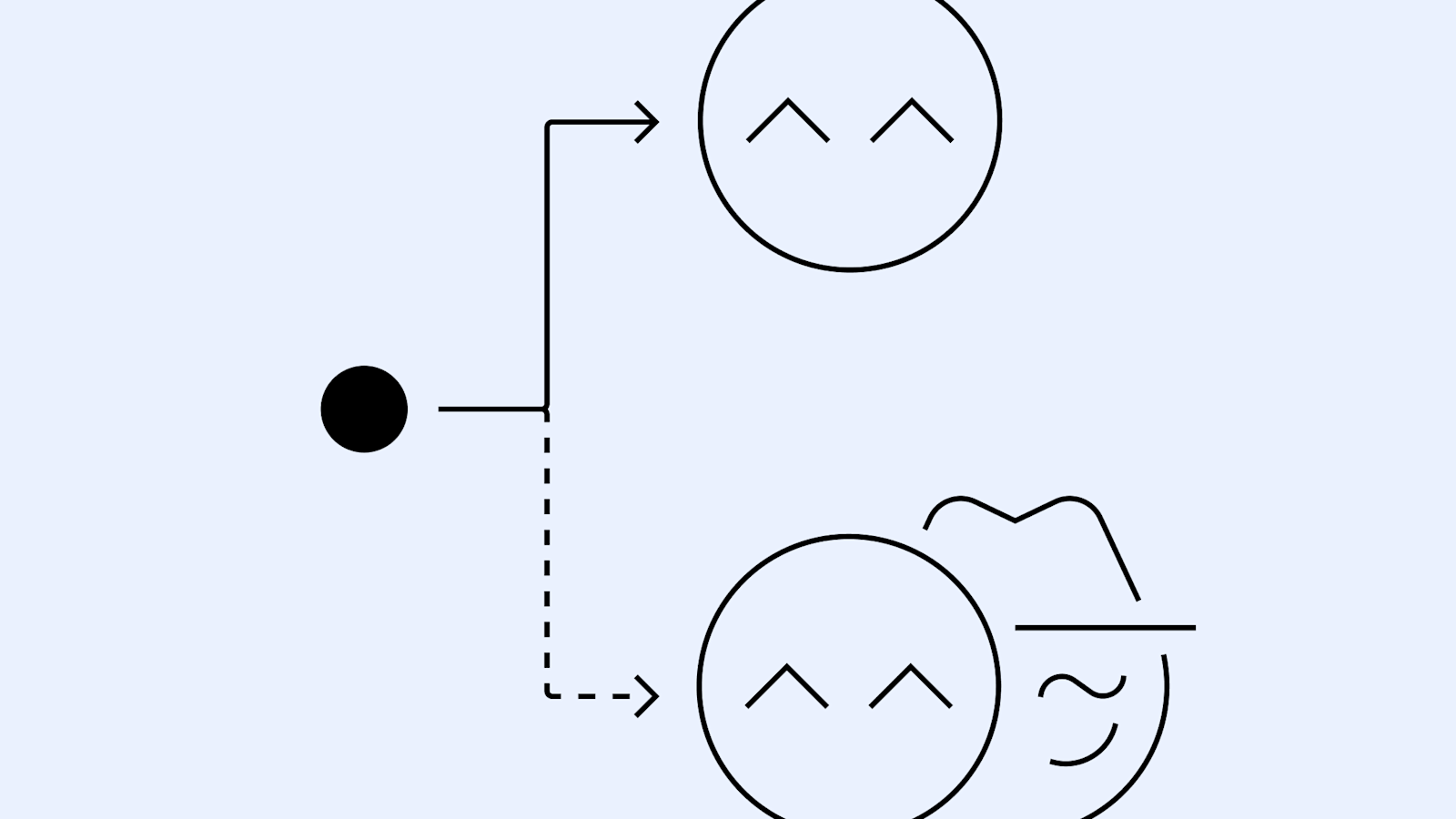

- OpenAI is building a long-term system to determine if a ChatGPT user is over or under 18 to tailor experiences.

- Under-18 users will be directed to age-appropriate policies, including blocking graphic sexual content and, in rare distress cases, possible involvement of law enforcement.

- If age is uncertain or information is incomplete, OpenAI will default to the under-18 experience, with adults able to prove age to unlock adult capabilities.

- Parental controls will be available by the end of the month to guide how ChatGPT shows up at home, alongside safety features like in-app reminders to take breaks.

- Safety considerations are prioritized when principles conflict, reflecting a focus on teen safety as AI becomes more present in young users’ lives.

Context and background

OpenAI has said it is expanding conversations with experts, advocacy groups, and policymakers to guide its efforts to make ChatGPT as helpful as possible while strengthening protections for teens. The company emphasizes that teen safety can take precedence over privacy and freedom when these principles conflict, and it sets out a vision for how the technology should respond to younger users. Teens are growing up with AI, and OpenAI frames its work around meeting them where they are. The goal is not simply to block content but to tailor the user experience to age: the way ChatGPT responds to a 15-year-old should differ from the way it responds to an adult. To that end, the post outlines a path toward a long-term age-detection system that informs policy and interaction design. It also acknowledges that even advanced systems can predict age imperfectly. When there is doubt, the system will opt for the safer under-18 behavior, and additional verification steps will let adults access more capable features.

What’s new

The post outlines a plan to build toward a long-term age-detection system that can determine whether a user is over or under 18, enabling age-aware interaction modes in ChatGPT. When a user is identified as under 18, the experience will follow age-appropriate policies, including content restrictions and safety safeguards. For rare cases of acute distress, the post mentions possible involvement of law enforcement as a safety measure. If OpenAI cannot confidently determine a user's age or has incomplete information, the system will default to the under-18 experience to minimize risk. Adults will have a pathway to prove their age and unlock adult capabilities within ChatGPT. Parental controls will be available by the end of the month to help families guide how ChatGPT shows up in their homes. Existing safety features, such as in-app reminders that encourage breaks during long sessions, will continue to be provided.

Why it matters (impact for developers/enterprises)

For developers and enterprises building with ChatGPT, the move highlights how user age can influence policy, content controls, and interaction design. The approach signals a shift toward configurable safety postures that adapt to different age groups while keeping a safety-first stance. It also raises governance considerations for compliance, risk management, and product development when serving younger audiences. With a formal age-aware path in place, organizations can plan for age verification requirements, parental control integrations, and clear safety policies that map to user segments. The emphasis on teen safety signals how AI products may need to navigate tradeoffs between safety, privacy, and freedom in real-world deployments.

Technical details or Implementation

- A long-term system to infer whether a user is over or under 18, enabling age-aware interaction modes in ChatGPT.

- If the system identifies a user as under 18, the ChatGPT experience will adopt age-appropriate policies, including blocking graphic sexual content and, in rare cases of acute distress, possible involvement of law enforcement.

- When age is uncertain or information is incomplete, the system will default to the under-18 experience to maximize safety.

- Adults will have a pathway to prove their age to unlock adult capabilities within ChatGPT.

- Parental controls will be available by the end of the month to help families guide how ChatGPT shows up in their homes.

- In-app reminders during long sessions will continue to encourage user breaks and promote safe usage.
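The decision rules in the list above can be sketched in a few lines of Python. This is only an illustration of the described behavior, not OpenAI's implementation: the `AgeSignal` fields, the `select_experience` function, and the `CONFIDENCE_THRESHOLD` value are all hypothetical names and numbers chosen for the example, since the source does not describe how the prediction is produced or what confidence level is required.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Experience(Enum):
    UNDER_18 = "under_18"
    ADULT = "adult"


@dataclass
class AgeSignal:
    """Hypothetical output of an age-prediction step (assumed structure)."""
    predicted_adult: Optional[bool]  # None when information is incomplete
    confidence: float                # 0.0 to 1.0
    verified_adult: bool = False     # True once the user has proven their age


# Illustrative value only; the source does not state a threshold.
CONFIDENCE_THRESHOLD = 0.9


def select_experience(signal: AgeSignal) -> Experience:
    """Pick the experience, defaulting to under-18 whenever age is in doubt."""
    # An adult who has proven their age unlocks the adult experience.
    if signal.verified_adult:
        return Experience.ADULT
    # Incomplete information falls back to the safer default.
    if signal.predicted_adult is None:
        return Experience.UNDER_18
    # Only a confident adult prediction unlocks the adult experience.
    if signal.predicted_adult and signal.confidence >= CONFIDENCE_THRESHOLD:
        return Experience.ADULT
    return Experience.UNDER_18
```

Under these assumptions, a user predicted to be an adult with low confidence still receives the under-18 experience until they verify their age, which mirrors the conservative default described in the post.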

Key takeaways

- OpenAI is pursuing an age-aware design for ChatGPT to protect teens while offering adult experiences through age verification tools.

- The safety-first approach prioritizes teen safety when principles conflict and includes potential law-enforcement involvement in rare distress cases.

- Parental controls are on the roadmap to help families oversee ChatGPT use, with safety reminders during long sessions.

- Age determination may be imperfect, so the system defaults to the under-18 experience whenever a user's age is unknown or uncertain.

- The work aims to balance safety with usability and lays groundwork for scalable governance around youth AI use.

FAQ

- What is being built around age prediction for ChatGPT? A long-term system to determine whether a user is over or under 18 and tailor the ChatGPT experience accordingly.

- What happens if a user is under 18? They will receive an experience governed by age-appropriate policies, including blocking graphic sexual content and, in rare cases of acute distress, possible involvement of law enforcement.

- What if the user's age is uncertain? The system defaults to the under-18 experience to maximize safety; adults can prove their age to access adult capabilities.

- When will parental controls be available? By the end of the month. They are meant to help families guide how ChatGPT shows up at home, alongside safety features such as in-app reminders to take breaks.
