Autodesk Research Brings Warp Speed to Computational Fluid Dynamics on NVIDIA GH200

Sources: https://developer.nvidia.com/blog/autodesk-research-brings-warp-speed-to-computational-fluid-dynamics-on-nvidia-gh200/, NVIDIA Dev Blog

TL;DR

- Autodesk Research developed Accelerated Lattice Boltzmann (XLB), a Python-native, open-source CFD solver based on the Lattice Boltzmann Method (LBM), designed to integrate with AI/ML workflows.

- XLB runs on NVIDIA Warp, a Python-based framework that just-in-time compiles GPU kernels written in Python to native CUDA code. In tests, the Warp backend achieved approximately an 8x speedup over XLB's GPU-accelerated JAX backend on a single NVIDIA A100 GPU.

- An out-of-core computation strategy, enabled by the GH200 Grace Hopper Superchip and its NVLink-C2C interconnect (900 GB/s CPU-GPU bandwidth), allowed scaling to about 50 billion lattice cells across a multi-node GH200 cluster.

- The Warp-backed XLB delivered throughput comparable to FluidX3D, an OpenCL-based C++ solver, on a 512^3 lid-driven cavity flow, while offering Python readability and rapid prototyping.

- This work highlights a turning point where Python-native CFD can match high-performance, low-level implementations, accelerating rapid experimentation and AI-augmented CAE research.

Context and background

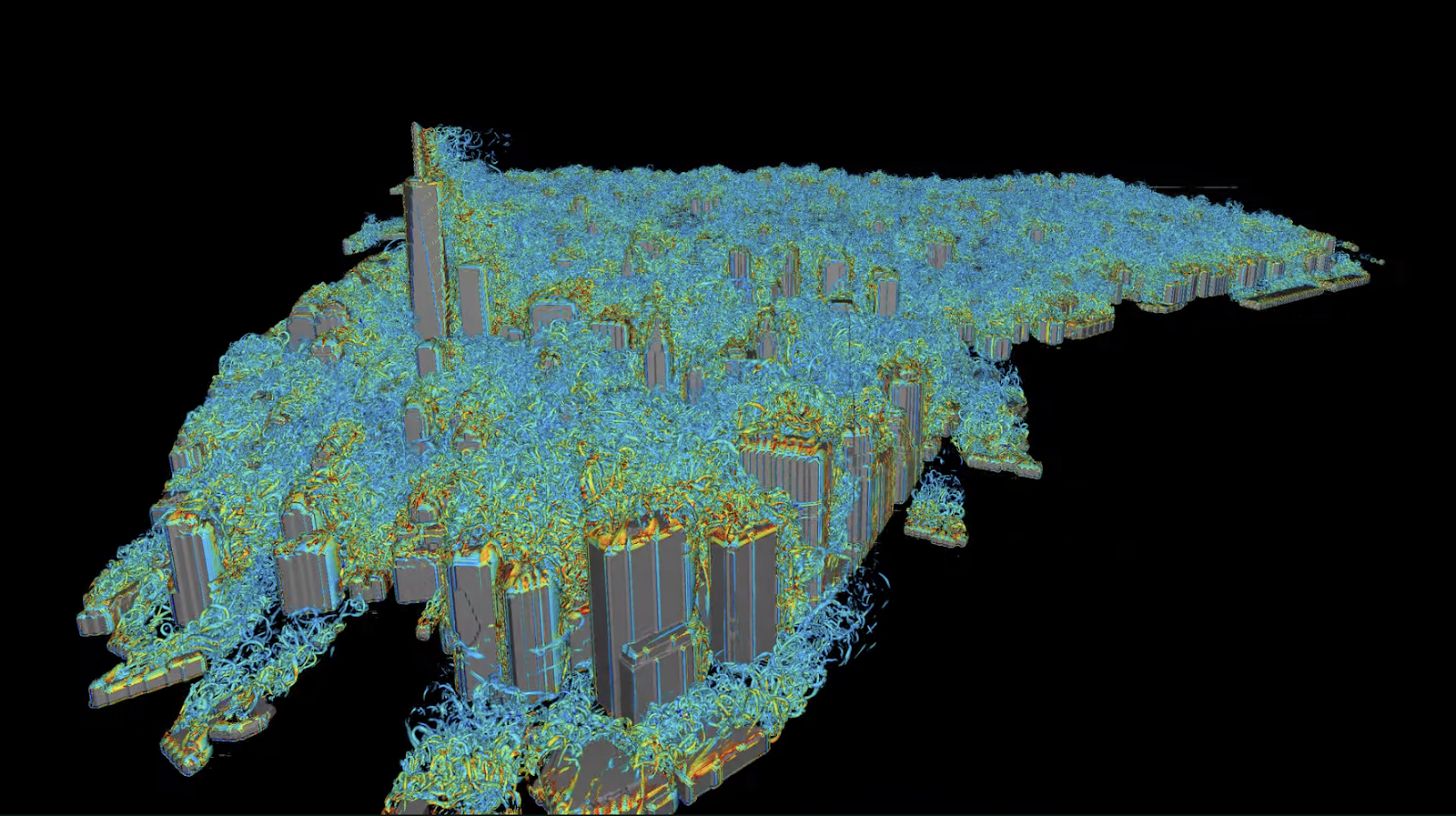

Computational Fluid Dynamics (CFD) sits at the heart of modern product development driven by computer-aided engineering (CAE), from safer aircraft design to optimized renewable energy systems. Simulation speed and accuracy underpin rapid prototyping and informed engineering decisions. Historically, CAE solvers have relied on low-level languages such as C++ and Fortran to meet throughput and scalability demands. Python, meanwhile, has dominated AI/ML development but has struggled with large-scale CFD performance because of its high-level, interpreted nature.

The rise of physics-based machine learning has created demand for Python-based CAE solvers that fit naturally into AI/ML workflows without sacrificing performance. In response, Autodesk Research developed XLB, an open-source CFD solver built on the Lattice Boltzmann Method (LBM). XLB is Python-native by design, which makes it accessible to developers, and differentiable by architecture, which enables integration with modern AI-physics modeling frameworks. This positions XLB as a bridge between CAE solvers and AI ecosystems, combining computational speed with Python's ecosystem of libraries and tools.

NVIDIA Warp is an open-source Python framework for high-performance simulation and spatial computing. It lets developers write GPU kernels directly in Python, which are just-in-time compiled into native CUDA code. Warp provides features such as warp.fem for finite element analysis and interoperates with NumPy, CuPy, and JAX, among others. Importantly, Warp kernels are differentiable by design, enabling integration with deep learning frameworks such as PyTorch and JAX.

The GH200 Grace Hopper Superchip targets a core CAE requirement: running high-fidelity simulations at maximum throughput and scale. Together, Warp and GH200 support a workflow in which Python-native CFD codes can achieve performance comparable to traditional high-performance implementations while preserving the accessibility and rapid development cycle of the Python ecosystem.
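The source does not reproduce XLB's numerics. As a generic illustration of what an LBM solver computes for each cell, the sketch below implements the textbook D2Q9 equilibrium distribution and BGK collision in plain Python. The D2Q9 lattice, the BGK operator, and every function name here are assumptions chosen for illustration. XLB's actual lattices, collision models, and kernels may differ.

```python
from typing import List, Tuple

# Standard D2Q9 lattice: 9 discrete velocities e_i and their weights w_i.
E: List[Tuple[int, int]] = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
                            (1, 1), (-1, 1), (-1, -1), (1, -1)]
W: List[float] = [4 / 9] + [1 / 9] * 4 + [1 / 36] * 4


def equilibrium(rho: float, ux: float, uy: float) -> List[float]:
    """Second-order equilibrium f_i^eq for lattice sound speed c_s^2 = 1/3."""
    usq = ux * ux + uy * uy
    feq = []
    for (ex, ey), w in zip(E, W):
        eu = ex * ux + ey * uy
        feq.append(w * rho * (1.0 + 3.0 * eu + 4.5 * eu * eu - 1.5 * usq))
    return feq


def moments(f: List[float]) -> Tuple[float, float, float]:
    """Density and velocity recovered from the 9 populations of one cell."""
    rho = sum(f)
    ux = sum(fi * ex for fi, (ex, _) in zip(f, E)) / rho
    uy = sum(fi * ey for fi, (_, ey) in zip(f, E)) / rho
    return rho, ux, uy


def bgk_collide(f: List[float], tau: float) -> List[float]:
    """BGK collision: relax each population toward equilibrium with time scale tau."""
    rho, ux, uy = moments(f)
    feq = equilibrium(rho, ux, uy)
    return [fi - (fi - fe) / tau for fi, fe in zip(f, feq)]
```

Calling `bgk_collide` on any 9-value cell state returns populations with the same density and momentum as the input. LBM relies on this conservation property, and it holds here because the equilibrium shares those moments.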

What’s new

The Autodesk Research and NVIDIA collaboration demonstrates several notable advances:

- Python-native CFD with XLB: XLB is a Python-first CFD solver that leverages LBM and is open source, designed to work hand-in-hand with AI/ML frameworks.

- Warp-enabled performance: Using NVIDIA Warp, XLB achieved approximately an 8x speedup over its JAX backend on a single A100 GPU in defined benchmarking scenarios. This highlights the efficiency gains from explicit Python-to-CUDA kernel programming and JIT compilation.

- Out-of-core scaling on GH200: An out-of-core computation strategy places the domain and flow variables primarily in CPU memory while transferring data to GPUs for processing. The GH200 NVLink-C2C interconnect (900 GB/s CPU-GPU bandwidth) enables rapid streaming of data and memory-coherent transfers, reducing CPU-GPU bottlenecks for large-scale simulations.

- Multi-node scalability: In joint testing, XLB's Warp backend scaled near-linearly as node count increased. An eight-node GH200 cluster ran simulations with roughly 50 billion lattice cells and achieved about an 8x speedup over a single-node GH200 system.

- Performance parity with traditional C++ CFD, plus memory efficiency gains: In a comparative study against FluidX3D, a traditional OpenCL-based solver implemented in C++, Warp-based XLB delivered comparable throughput for a 512^3 lid-driven cavity flow. Throughput was measured in million lattice updates per second (MLUPS). Compared with XLB's own JAX backend on the same GPU, the Warp backend also showed two- to three-times better memory efficiency.

| Comparison | Setup | Result |
|---|---|---|
| Warp (XLB) vs. JAX (XLB) | Single A100 GPU | ~8x speedup; two- to three-times better memory efficiency |
| Warp (XLB) vs. FluidX3D (OpenCL, C++) | 512^3 lid-driven cavity flow | Comparable throughput (MLUPS) |
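Throughput and scaling figures like these come down to simple arithmetic. The sketch below assumes the usual definition of MLUPS: total cell updates divided by wall-clock seconds, in millions. The 10-second timing is a made-up input, not a reported result. The function names are illustrative.

```python
def mlups(cells: int, steps: int, seconds: float) -> float:
    """Million lattice updates per second: every cell updated once per time step."""
    return cells * steps / (seconds * 1e6)


def parallel_efficiency(speedup: float, nodes: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    return speedup / nodes


# Hypothetical timing: 1,000 steps of a 512^3 lattice in 10 seconds.
print(f"{mlups(512 ** 3, 1000, 10.0):.1f} MLUPS")  # 13421.8 MLUPS
# The source reports ~8x speedup on eight GH200 nodes versus one node.
print(parallel_efficiency(8.0, 8))  # 1.0
```

A parallel efficiency close to 1.0 is what "near-linear scaling" means in practice.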

How Warp complements XLB

Warp provides a powerful bridge between CUDA and Python by letting developers author GPU kernels in Python that are JIT-compiled to CUDA. This explicit kernel programming model minimizes overhead and enables more predictable CFD performance. Warp’s design supports domain-specific kernels and device functions while maintaining interoperability with NumPy, CuPy, and JAX, enabling researchers to leverage familiar Python tooling alongside CUDA performance.
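In Warp, a kernel is a Python function decorated with `@wp.kernel`. The kernel reads its thread index with `wp.tid()` and is dispatched over a grid with `wp.launch(kernel, dim=..., inputs=[...])`. The sketch below emulates that programming model in plain Python so that it runs without Warp or a GPU. `launch` is a hypothetical loop standing in for a GPU launch. The kernel body, a periodic D2Q9 streaming step, is an illustrative assumption rather than XLB's code.

```python
from typing import Callable, List

# D2Q9 velocity set (assumed for illustration; index i matches population i).
E = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1), (1, 1), (-1, 1), (-1, -1), (1, -1)]


def launch(kernel: Callable[..., None], dim: int, *args) -> None:
    """Hypothetical CPU stand-in for a GPU launch: run the kernel once per thread id."""
    for tid in range(dim):
        kernel(tid, *args)


def stream_kernel(tid: int, f_in: List[List[float]], f_out: List[List[float]],
                  nx: int, ny: int) -> None:
    """Pull-scheme streaming for one cell of a periodic nx-by-ny grid.

    Each 'thread' writes only its own cell in f_out, so cells are independent
    and could run in parallel, as they would in a GPU kernel.
    """
    x, y = divmod(tid, ny)  # flat index tid = x * ny + y
    for i, (ex, ey) in enumerate(E):
        src = ((x - ex) % nx) * ny + (y - ey) % ny  # upstream neighbour
        f_out[i][tid] = f_in[i][src]


nx, ny = 4, 3
f_in = [[0.0] * (nx * ny) for _ in E]
f_out = [[0.0] * (nx * ny) for _ in E]  # output pre-allocated by the caller
f_in[1][0] = 1.0  # population moving in +x, sitting at cell (0, 0)
launch(stream_kernel, nx * ny, f_in, f_out, nx, ny)
print(f_out[1].index(1.0))  # 3, i.e. cell (1, 0)
```

Each thread writes only its own output cell, which keeps a kernel free of write conflicts. This is the property that makes the per-thread form map cleanly to CUDA.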

Benchmarking and observed tradeoffs

The XLB Warp backend's observed performance advantage over the JAX backend comes from its simulation-optimized design and explicit memory management. Warp requires developers to pre-allocate input and output arrays. This avoids hidden allocations and intermediate buffers and yields leaner memory footprints that scale more predictably with problem size. In tests with a 512^3 lid-driven cavity flow, Warp-based XLB reached throughput close to that of FluidX3D's OpenCL-based C++ backend, with the added benefits of Python readability and rapid prototyping.
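A common way to follow this pre-allocation discipline in LBM codes is ping-pong (double) buffering. The plain-Python sketch below assumes a simplified 1D lattice with one right-moving and one left-moving population. It shows only the memory pattern and is not taken from XLB.

```python
from typing import List, Tuple


def run_streaming(f_right: List[float], f_left: List[float],
                  steps: int) -> Tuple[List[float], List[float]]:
    """Stream two 1D populations on a periodic lattice using ping-pong buffers.

    All buffers are allocated once before the time loop; each step writes into
    the 'next' buffers and then swaps references, so no arrays are created
    inside the loop.
    """
    n = len(f_right)
    cur = [list(f_right), list(f_left)]
    nxt = [[0.0] * n, [0.0] * n]
    for _ in range(steps):
        for x in range(n):
            nxt[0][x] = cur[0][(x - 1) % n]  # right-moving population
            nxt[1][x] = cur[1][(x + 1) % n]  # left-moving population
        cur, nxt = nxt, cur  # swap roles, no new allocation
    return cur[0], cur[1]


right, left = run_streaming([1.0, 0.0, 0.0, 0.0, 0.0],
                            [0.0, 0.0, 0.0, 0.0, 1.0], steps=2)
print(right, left)  # [0.0, 0.0, 1.0, 0.0, 0.0] [0.0, 0.0, 1.0, 0.0, 0.0]
```

Memory use is fixed at two buffers per population no matter how many steps run. This is the predictable scaling the paragraph above describes.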

Why it matters (impact for developers/enterprises)

For developers and enterprises, the integration of Warp with XLB signals a new era in CAE toolchains, in which Python-native CFD codes can meet performance demands traditionally reserved for low-level languages. Writing GPU kernels directly in Python and JIT-compiling them to CUDA lowers the barrier to prototyping new physics models and AI-augmented simulations. The differentiable kernel model aligns CFD simulations with modern ML workflows, enabling tighter coupling between AI models and physical simulators.

The hardware context also matters. The GH200 Grace Hopper Superchip and its NVLink-C2C interconnect provide a high-bandwidth path for streaming data from CPU memory to the GPU. This enables out-of-core strategies that address memory constraints in very large simulations. The configuration supports scaling Python-native CFD to tens of billions of lattice cells, a scale that previously favored traditional, low-level implementations.

From an operations perspective, combining Python accessibility, JIT-compiled CUDA kernels, and scalable memory management can accelerate research workflows and enable rapid iteration on CAE prototypes. It also supports closer integration with AI/ML pipelines for design optimization, uncertainty quantification, and data-driven turbulence modeling.
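A back-of-the-envelope memory estimate shows why out-of-core strategies matter at this scale. The sketch assumes a D3Q19 lattice, float32 populations, and two buffer copies. The source does not state the configuration used in the GH200 runs, and the 8 GB device budget is hypothetical. Under these assumptions, a 512^3 lattice needs about 20.4 GB for populations alone. Fifty billion cells would need roughly 7.6 TB, far more than any single GPU holds.

```python
def population_bytes(cells: int, q: int = 19, bytes_per_value: int = 4,
                     copies: int = 2) -> int:
    """Memory for LBM populations alone: cells x directions x precision x buffers.

    Defaults are assumptions: a D3Q19 lattice, float32 values, and two copies
    (ping-pong buffers). Macroscopic fields and masks would add more.
    """
    return cells * q * bytes_per_value * copies


def chunks_needed(total_bytes: int, device_budget_bytes: int) -> int:
    """Minimum number of out-of-core chunks if each must fit the device budget."""
    return -(-total_bytes // device_budget_bytes)  # ceiling division


cube = population_bytes(512 ** 3)
print(cube)  # 20401094656
print(chunks_needed(cube, 8 * 10 ** 9))  # 3 chunks for a hypothetical 8 GB budget
```

The chunk count ignores halo cells and transfer overlap. A real out-of-core scheduler would account for both.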

Technical details or implementation

- XLB is built as a Python-native CFD solver based on the Lattice Boltzmann Method (LBM) and is open source. It is designed to be compatible with Python’s numerical and ML ecosystems, enabling researchers to leverage familiar libraries while executing high-performance simulations.

- NVIDIA Warp enables writing GPU kernels directly in Python, with just-in-time compilation to native CUDA code. Warp supports domain-specific features such as warp.fem for finite element analysis and ensures differentiability of kernels by design, enabling integration with PyTorch and JAX.

- Interoperability with existing frameworks: Warp kernels work with NumPy, CuPy, and JAX, allowing users to mix and match tools within the Python ecosystem.

- Performance characteristics: On defined hardware configurations, Warp-based XLB achieved approximately an 8x speedup over the JAX backend on a single A100 GPU. It also achieved two- to three-times better memory efficiency on the same GPU, due to Warp's explicit memory management and pre-allocated arrays.

- Out-of-core strategy and interconnects: The GH200’s NVLink-C2C interconnect provides 900 GB/s CPU-GPU bandwidth, enabling rapid data streaming for out-of-core computations where the domain and flow variables reside primarily in CPU memory and are swapped into GPU memory as needed. Memory coherency across CPU-GPU transfers reduces traditional bottlenecks in large-scale simulations.

- Scaling results: In a multi-node configuration, XLB’s Warp backend reached ~50 billion lattice cells with near-linear scaling as nodes increased. An eight-node GH200 cluster achieved this scale, illustrating the potential for Python-native CFD to scale to very large problems without sacrificing performance.

- Benchmarking context: The 512^3 lid-driven cavity flow was used as a benchmark. Warp’s performance was measured against an OpenCL-based FluidX3D backend, showing comparable throughput (MLUPS) while offering Python readability and faster development cycles.
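The out-of-core idea can be sketched with a domain that lives in host memory and is processed in chunks. Each chunk is copied to a device-sized buffer together with one halo cell per side, so nearest-neighbour updates can see their neighbours. In this plain-Python sketch, lists stand in for CPU and GPU memory. The 3-point `stencil` is a hypothetical stand-in for the LBM update, chosen because LBM streaming also reads only nearest neighbours. The sketch checks that the chunked result matches an in-core pass.

```python
from typing import List


def stencil(left: float, center: float, right: float) -> float:
    """Hypothetical nearest-neighbour update standing in for one LBM step."""
    return 0.25 * left + 0.5 * center + 0.25 * right


def step_in_core(host: List[float]) -> List[float]:
    """Reference: update the whole periodic domain at once."""
    n = len(host)
    return [stencil(host[(x - 1) % n], host[x], host[(x + 1) % n]) for x in range(n)]


def step_out_of_core(host: List[float], chunk: int) -> List[float]:
    """Update the periodic domain chunk by chunk, as if device memory were small."""
    n = len(host)
    out = [0.0] * n  # result stays in host memory
    for start in range(0, n, chunk):
        stop = min(start + chunk, n)
        # "Copy to device": the chunk plus one halo cell on each side (periodic wrap).
        device = [host[x % n] for x in range(start - 1, stop + 1)]
        # Update interior cells only; halos supply neighbour values.
        for local in range(1, len(device) - 1):
            out[start + local - 1] = stencil(device[local - 1], device[local],
                                             device[local + 1])
    return out


domain = [float(i * i % 7) for i in range(10)]
print(step_out_of_core(domain, chunk=3) == step_in_core(domain))  # True
```

On GH200, the coherent NVLink-C2C link is what keeps the per-chunk transfers cheap enough for this pattern to pay off.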

Key architectural benefits

- Python-to-CUDA path: Direct Python kernels reduce Python-C++ boundary overhead and enable predictable performance characteristics for CAE simulations.

- Differentiable simulations: Kernel-level differentiability enables smoother integration with AI-physics modeling frameworks, supporting end-to-end differentiable pipelines.

- Interoperability: Users can leverage NumPy, CuPy, and JAX alongside Warp, facilitating hybrid workflows that combine traditional numerical methods with modern ML tooling.

- Memory management philosophy: Pre-allocation of inputs and outputs simplifies memory budgeting and improves scalability for large-scale simulations.
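Warp records launched kernels on a `wp.Tape` and differentiates them in reverse mode. The sketch below illustrates the same idea of differentiating through a simulation step in a simpler, self-contained way. It swaps in forward-mode automatic differentiation with dual numbers, a different technique from Warp's, and computes the sensitivity of a repeated BGK-style relaxation to the relaxation time `tau`. The `Dual` class and `relax` function are hypothetical and unrelated to Warp's API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """Forward-mode AD number val + der*eps, where eps**2 == 0."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(x: float | Dual) -> Dual:
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other: float | Dual) -> Dual:
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, other: float | Dual) -> Dual:
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, other: float | Dual) -> Dual:
        return Dual.lift(other) - self

    def __mul__(self, other: float | Dual) -> Dual:
        o = Dual.lift(other)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, other: float | Dual) -> Dual:
        o = Dual.lift(other)
        return Dual(self.val / o.val,
                    (self.der * o.val - self.val * o.der) / (o.val * o.val))

    def __rtruediv__(self, other: float | Dual) -> Dual:
        return Dual.lift(other) / self


def relax(f, f_eq, tau, steps: int):
    """Relax one population toward equilibrium: f <- f - (f - f_eq) / tau."""
    for _ in range(steps):
        f = f - (f - f_eq) / tau
    return f


# Seed d(tau)/d(tau) = 1 to get df/dtau alongside the value.
out = relax(1.0, 0.5, Dual(2.0, 1.0), steps=3)
print(out.val, out.der)  # 0.5625 0.09375
```

The derivative matches the closed form (f0 - f_eq) * n * (1 - 1/tau)**(n - 1) / tau**2 for this update. Reverse mode, as in Warp, is preferred when there are many inputs and one scalar loss. Forward mode is simpler to show for a single parameter.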

Key takeaways

- XLB demonstrates that a Python-native CFD solver, accelerated with Warp on GH200, can approach the performance of traditional, optimized low-level implementations while preserving Python’s accessibility.

- A combination of JIT-compiled Python kernels, explicit memory management, and out-of-core strategies enables scaling to tens of billions of lattice cells.

- The integration of Warp with XLB enables AI-friendly CFD workflows, combining Python AI tooling with high-performance CFD capabilities.

- Benchmark results show Warp delivering substantial speedups and memory efficiency improvements over a JAX backend on the same hardware, and near parity with OpenCL/C++ solvers in representative cases.

- The collaboration underscores a broader shift toward Python-native CAE tooling that can meet the demands of modern AI-augmented research and development pipelines.

FAQ

- What is XLB?
  XLB stands for Accelerated Lattice Boltzmann, an open-source, Python-native CFD solver based on the Lattice Boltzmann Method.

- How does Warp improve CFD performance?
  Warp allows writing GPU kernels directly in Python that are just-in-time compiled to CUDA, reducing overhead and enabling optimized, differentiable simulations.

- What hardware enabled the scaling results?
  The GH200 Grace Hopper Superchip, with NVLink-C2C interconnect providing 900 GB/s CPU-GPU bandwidth, supported the out-of-core strategy and multi-node scaling.

- Is this CFD software open source?
  Yes, XLB is described as an open-source CFD solver.

- What is the significance for CAE workflows?
  It enables Python-native CFD development that integrates with AI/ML ecosystems while maintaining high-throughput performance and scalability.

References

- NVIDIA Dev Blog: Autodesk Research Brings Warp Speed to Computational Fluid Dynamics on NVIDIA GH200. https://developer.nvidia.com/blog/autodesk-research-brings-warp-speed-to-computational-fluid-dynamics-on-nvidia-gh200/
