Unlock model insights with log probability support for Amazon Bedrock Custom Model Import

Sources: https://aws.amazon.com/blogs/machine-learning/unlock-model-insights-with-log-probability-support-for-amazon-bedrock-custom-model-import/, AWS ML Blog

TL;DR

- Log probability support for Custom Model Import provides token-level confidence data for imported models such as Llama, Mistral, and Qwen on Bedrock.

- Enable by setting return_logprobs: true in the InvokeModel API request; the response includes log probabilities for both prompt and generated tokens.

- Use log probabilities to rank outputs, detect hallucinations, assess fine-tuned models, and optimize prompts and retrieval-augmented generation (RAG) systems.

- An example with a fine-tuned Llama 3.2 1B model illustrates decoding token IDs and converting log probabilities to probabilities for intuitive understanding of model confidence.

Context and background

Bedrock Custom Model Import lets you bring customized models that you have fine-tuned elsewhere, such as Llama, Mistral, and Qwen models, into Amazon Bedrock. The experience is serverless, minimizing infrastructure management while giving imported models the same unified API access as native Bedrock models. Your custom models benefit from automatic scaling, enterprise-grade security, and native integration with Bedrock features such as Bedrock Guardrails and Bedrock Knowledge Bases.

Understanding how confident a model is in its predictions is essential for building reliable AI applications, particularly when working with domain-specific queries. With log probability support now added to Custom Model Import, you can access information about your models’ confidence in their predictions at the token level. This enhancement provides greater visibility into model behavior and enables new capabilities for model evaluation, confidence scoring, and advanced filtering techniques.

What’s new

With this release, models imported using the Custom Model Import feature can return token-level log probabilities as part of the inference response. When invoking a model through the Amazon Bedrock InvokeModel API, you request them by setting "return_logprobs": true in the JSON request body. With this flag enabled, the response includes additional fields with log probabilities for both the prompt tokens and the generated tokens, so you can quantitatively assess how confident your custom model is when processing inputs and generating responses. These granular metrics support better evaluation of response quality, troubleshooting of unexpected outputs, and optimization of prompts or model configurations.

Suppose you have already imported a custom model (for instance, a fine-tuned Llama 3.2 1B model) into Amazon Bedrock and have its model ARN. You can invoke this model using the Amazon Bedrock Runtime SDK (Boto3 for Python). In the AWS example, the prompt “The quick brown fox jumps” is sent to the custom imported model with standard inference parameters: a maximum generation length of 50 tokens, a temperature of 0.5 for moderate randomness, and a stop condition of either a period or a newline. The "return_logprobs": True parameter tells Amazon Bedrock to return log probabilities in the response.

The InvokeModel API returns a JSON response containing three main components: the standard generated text output, metadata about the generation process, and the log probabilities for both prompt and generated tokens. These values reveal the model’s internal confidence for each token prediction, so you can see not just what text was produced, but how certain the model was at each step.
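The AWS post’s invocation code is not reproduced in this summary, so the following is a minimal sketch of the same call, assuming Boto3’s bedrock-runtime client. Only "return_logprobs" comes from the source; the request fields prompt, max_gen_len, temperature, and stop are assumptions modeled on a Llama-style request body and may differ for your imported model. The helper names build_logprob_request and invoke_with_logprobs are hypothetical.

```python
import json
from typing import Any, Dict, List, Optional


def build_logprob_request(
    prompt: str,
    max_gen_len: int = 50,
    temperature: float = 0.5,
    stop: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Build a request body that asks an imported model for log probabilities.

    Assumption: "prompt", "max_gen_len", "temperature", and "stop" follow a
    Llama-style schema; only "return_logprobs" is documented in the source.
    """
    return {
        "prompt": prompt,
        "max_gen_len": max_gen_len,  # maximum number of generated tokens
        "temperature": temperature,  # moderate randomness
        "stop": stop if stop is not None else [".", "\n"],  # period or newline
        "return_logprobs": True,  # request prompt and generation log probabilities
    }


def invoke_with_logprobs(client: Any, model_arn: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Call InvokeModel on a bedrock-runtime client and return the parsed JSON body."""
    response = client.invoke_model(
        modelId=model_arn,
        body=json.dumps(payload),
        contentType="application/json",
        accept="application/json",
    )
    # The body is a stream-like object; read the bytes and parse them as JSON.
    return json.loads(response["body"].read())


# Example usage (requires AWS credentials and a real imported-model ARN):
# import boto3
# client = boto3.client("bedrock-runtime")
# result = invoke_with_logprobs(
#     client, "<your-imported-model-arn>", build_logprob_request("The quick brown fox jumps")
# )
```

The client is passed in rather than created inside the helper, so the request-building logic can be exercised without AWS credentials and the same code works with any configured bedrock-runtime client.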
The raw API response provides token IDs paired with their log probabilities. To make this data interpretable, you first decode the token IDs using the appropriate tokenizer (in this case, the Llama 3.2 1B tokenizer), which maps each ID back to its text token. Then you convert log probabilities to probabilities by applying the exponential function, which turns them into more intuitive values between 0 and 1. The AWS post implements these transformations with custom code (not shown in the post) to produce a human-readable format in which each token appears alongside its probability, making the model’s confidence in its predictions immediately clear.

Token-level log probabilities from the Custom Model Import feature provide valuable insights into your model’s decision-making process by revealing its confidence level for each generated token. Here are impactful ways to use these insights:

- You can use log probabilities to quantitatively rank multiple generated outputs for the same prompt. When your application needs to choose between different possible completions, whether for summarization, translation, or creative writing, you can calculate each completion’s overall likelihood by summing the log probabilities of its tokens, or by averaging them so that longer completions are not penalized simply for having more tokens. In the AWS post’s example, the prompt asks the model to translate the French phrase “Battre le fer pendant qu’il est chaud”. Completion A receives a higher log probability score (closer to zero) than Completion B, indicating that the model found the idiomatic translation more natural than the more literal one. This numerical approach lets your application automatically select the most probable output or present multiple candidates ranked by the model’s confidence. The same ranking capability applies to many other scenarios where multiple valid outputs exist, including content generation, code completion, and creative writing, and gives you a quantitative signal based on the model’s confidence rather than relying solely on subjective human judgment.

- Models might produce hallucinations (plausible-sounding but factually incorrect statements) when handling ambiguous prompts, complex queries, or topics outside their expertise. Log probabilities offer a practical way to detect these instances by revealing the model’s internal uncertainty: by analyzing token-level log probabilities, you can identify which parts of a response the model was uncertain about, even when the text appears confident on the surface. This is especially valuable in retrieval-augmented generation (RAG) systems, where responses should be grounded in retrieved context. When a model has relevant information available, it typically generates answers with higher confidence; conversely, low confidence across multiple tokens suggests the model might be generating content without sufficient supporting information. In the AWS post’s example, the authors intentionally asked about a fictional metric, the Portfolio Synergy Quotient (PSQ). The model produced a professional-sounding definition of this non-existent financial concept, but the token-level confidence scores, obtained by applying the exponential function to the returned log probabilities, revealed low-confidence segments in the response. By identifying such segments, you can implement targeted safeguards in your applications, such as flagging content for verification, retrieving additional context, generating clarifying questions, or applying confidence thresholds for sensitive information. This approach helps create more reliable AI systems that can distinguish between high-confidence knowledge and uncertain responses.

- When engineering prompts for your application, log probabilities reveal how well the model understands your instructions. If the first few generated tokens show unusually low probabilities, it often signals that the model struggled to interpret what you are asking. By tracking the average log probability of the initial tokens (typically the first 5 to 10 generated tokens), you can quantitatively measure prompt clarity. Well-structured prompts with clear context typically produce higher probabilities because the model immediately knows what to do, while vague or underspecified prompts often yield lower initial token likelihoods as the model hesitates or searches for direction. In the AWS post’s comparison of prompts for customer service responses, the optimized prompt generated higher log probabilities, demonstrating that precise instructions and clear context reduce the model’s uncertainty. Rather than making absolute judgments about prompt quality, this approach lets you measure relative improvement between versions.

- You can use these insights to guide prompt design, model selection, and deployment decisions, helping you build more reliable, transparent AI applications with your custom Bedrock models.
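The ranking approach from the first use case can be sketched as follows, assuming you have already extracted a list of per-token log probabilities for each candidate completion. The helper names completion_score and rank_completions are hypothetical; this is an illustration of sum versus mean scoring, not code from the AWS post.

```python
from typing import Dict, List, Tuple


def completion_score(token_logprobs: List[float], normalize: bool = True) -> float:
    """Score a completion from its per-token log probabilities.

    normalize=True returns the mean, which avoids favoring shorter completions;
    normalize=False returns the plain sum (the completion's total log probability).
    """
    if not token_logprobs:
        raise ValueError("completion has no tokens")
    total = sum(token_logprobs)
    return total / len(token_logprobs) if normalize else total


def rank_completions(
    candidates: Dict[str, List[float]], normalize: bool = True
) -> List[Tuple[str, float]]:
    """Return (name, score) pairs, most likely first (scores closer to zero)."""
    scored = [(name, completion_score(lps, normalize)) for name, lps in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

Summing and averaging can disagree: ten tokens at -0.1 sum to -1.0 but average -0.1, so a single-token completion at -0.5 wins on the sum and loses on the mean. Which score fits depends on how much your candidates differ in length.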

Why it matters (impact for developers/enterprises)

For developers and enterprises building production AI, access to token-level log probabilities enables better evaluation of model behavior and confidence in responses. It supports more informed decisions in areas such as:

- ranking and selecting among multiple candidate outputs for a given prompt

- detecting and mitigating hallucinations by surfacing uncertain tokens

- improving retrieval-augmented generation (RAG) by spotting responses that appear poorly supported by the retrieved context

- diagnosing failures and refining prompts, configurations, or fine-tuning data

This capability aligns with Bedrock’s broader goals of scalable, secure, and observable AI, and it complements features like Bedrock Guardrails and Knowledge Bases by giving developers quantitative insight into model decisions. You can read the official announcement and example usage in the referenced AWS blog post.
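Surfacing uncertain tokens, as in the hallucination-detection use case, can be approximated with a simple probability threshold. The following is a minimal sketch of a hypothetical helper, flag_low_confidence, that takes (token_text, log_probability) pairs for the generated text. Its default thresholds are illustrative assumptions, not values from the AWS post.

```python
import math
from typing import List, Tuple


def flag_low_confidence(
    tokens: List[Tuple[str, float]],
    min_prob: float = 0.3,
    max_low_fraction: float = 0.2,
) -> Tuple[List[Tuple[int, str, float]], bool]:
    """Find low-confidence tokens and decide whether a response needs review.

    tokens holds (token_text, log_probability) pairs for the generated text.
    min_prob and max_low_fraction are illustrative thresholds (assumptions),
    not values from the AWS post; tune them on your own evaluation data.
    """
    low = []
    for index, (text, logprob) in enumerate(tokens):
        prob = math.exp(logprob)  # log probability -> probability in [0, 1]
        if prob < min_prob:
            low.append((index, text, prob))
    # Flag the whole response when too many of its tokens are uncertain.
    needs_review = bool(tokens) and len(low) / len(tokens) > max_low_fraction
    return low, needs_review
```

For instance, a token with a log probability of -2.0 has a probability of about 0.135 and would be flagged under the default threshold. A flagged response could then be routed to verification, additional retrieval, or a clarifying question.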

Technical details or Implementation

To use log probability support with custom model import in Amazon Bedrock, you need to:

- Invoke a model through the Amazon Bedrock InvokeModel API and set the JSON parameter "return_logprobs": true. This enables token-level log probabilities to be included in the response for both the prompt and generated tokens.

- Receive a JSON response that includes the standard generated text, generation metadata, and the new log probability fields. These values reveal the model’s internal confidence for each token as it processes the input and produces output.

- Decode the token IDs from the response using the appropriate tokenizer (e.g., the Llama 3.2 1B tokenizer) to map IDs back to text tokens.

- Convert log probabilities to probabilities by applying the exponential function, yielding values between 0 and 1. This makes the data easier to interpret and compare across candidates.

The following is an example scenario described in the AWS post:

- You imported a custom model such as a fine-tuned Llama 3.2 1B model and have its ARN.

- You invoke it with a prompt like “The quick brown fox jumps” and request a maximum generation length of 50 tokens, a temperature of 0.5, and a stop condition of either a period or a newline. The "return_logprobs": true flag returns log probabilities for both prompt and generated tokens in the API response.

- The response includes the standard text, plus per-token log probabilities. You can decode token IDs to text and transform log probabilities to probabilities to obtain a human-readable representation of the model’s confidence at each step.

What you do next depends on your use case. Here are representative patterns:

- Rank competing completions by their total (or length-normalized average) log probability and present the most likely option to users or downstream components.

- Use low-confidence tokens to trigger verification, fetch additional context, or ask clarifying questions in a dialog system.

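- In RAG scenarios, check whether answers appear grounded in the retrieved data: responses generated with consistently high token confidence are typically better supported by the retrieved context, while runs of low-confidence tokens suggest content the context does not support.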

- Monitor the first tokens of generated responses to gauge prompt clarity; higher average log probabilities for the initial tokens typically indicate clearer instructions and context.
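The prompt-clarity pattern in the last item can be sketched as averaging the first k generated log probabilities for two versions of a prompt. The helper names are hypothetical, and the default k=5 is one choice within the 5 to 10 token range mentioned earlier; the result is a relative comparison, not an absolute quality score.

```python
from typing import List


def initial_token_confidence(generated_logprobs: List[float], k: int = 5) -> float:
    """Average log probability of the first k generated tokens."""
    head = generated_logprobs[:k]
    if not head:
        raise ValueError("no generated tokens to score")
    return sum(head) / len(head)


def prompt_improvement(baseline: List[float], candidate: List[float], k: int = 5) -> float:
    """Positive when the candidate prompt's opening tokens are more confident than the baseline's."""
    return initial_token_confidence(candidate, k) - initial_token_confidence(baseline, k)
```

Because sampling introduces randomness, it is safer to average this measure over several generations per prompt version before concluding that one prompt is clearer.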

Key takeaways

- Token-level log probabilities provide a quantitative measure of model confidence for each token.

- Enable by including "return_logprobs": true in the InvokeModel API request; results include prompt and generated token log probabilities.

- Decode token IDs, then exponentiate log probabilities to obtain token probabilities for intuitive interpretation.

- Use log probabilities to rank outputs, detect hallucinations, evaluate prompts, and tune retrieval-augmented generation.

- The approach supports building more trustworthy AI systems with custom Bedrock models.
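The decode-then-exponentiate step from the takeaways can be sketched as follows. This assumes each entry in the response’s log probability list is either null or an object mapping a token-ID string to its log probability; verify that shape against your model’s actual response. The helper name readable_token_probs is hypothetical, and the tokenizer is passed in as a decode function.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple


def readable_token_probs(
    entries: List[Optional[Dict[str, float]]],
    decode: Callable[[int], str],
) -> List[Tuple[int, str, float]]:
    """Convert log probability entries into (position, token_text, probability) rows.

    Assumes each entry is either None or a dict mapping a token-ID string to its
    log probability; check this against your model's actual response.
    """
    rows = []
    for position, entry in enumerate(entries):
        if entry is None:  # e.g. a first prompt token with no preceding context
            continue
        for token_id, logprob in entry.items():
            # Map the ID back to text, then exponentiate to get a 0-1 probability.
            rows.append((position, decode(int(token_id)), math.exp(logprob)))
    return rows


# Example with Hugging Face transformers (assumed setup; the Llama repo is gated,
# and the "logprobs" response key is an assumption):
# from transformers import AutoTokenizer
# tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-3.2-1B")
# rows = readable_token_probs(result["logprobs"], lambda i: tokenizer.decode([i]))
```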

FAQ

- What are log probabilities in language models?

They are the natural logarithm of the probability assigned to a token. Values are zero or negative, and values closer to zero indicate higher confidence (e.g., a log probability of -0.1 corresponds to a probability of about 0.90, or roughly 90% confidence).

- How do I enable log probabilities for a custom Bedrock model?

By calling the InvokeModel API with "return_logprobs": true in the request body; the response then includes log probabilities for prompt and generated tokens.

- How should I interpret the log probabilities in practice?

Decode token IDs with the appropriate tokenizer, then convert log probabilities to probabilities using the exponential function to obtain values between 0 and 1. Use these to assess confidence per token and the overall likelihood of a completion.

- What are practical use cases for log probabilities?

Ranking multiple outputs, detecting hallucinations, checking whether answers are grounded in retrieved context (RAG), and diagnosing prompt or model configuration issues.

- Can this help with prompt engineering?

Yes. Tracking the average log probability of the initial generated tokens helps measure prompt clarity and how well the model understands your instructions.
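For reference, the conversions used in these answers follow from standard identities, assuming natural logarithms, a prompt x, and generated tokens y_1, ..., y_T: by the chain rule, a completion’s total log probability is the sum of its token log probabilities; the length-normalized score is their mean; and exponentiating a log probability recovers the probability.

```latex
\log P(y_{1:T} \mid x) = \sum_{t=1}^{T} \log p(y_t \mid x, y_{<t}),
\qquad
\bar{\ell} = \frac{1}{T} \sum_{t=1}^{T} \log p(y_t \mid x, y_{<t}),
\qquad
p = e^{\log p}, \quad e^{-0.1} \approx 0.905
```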

References

More news

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

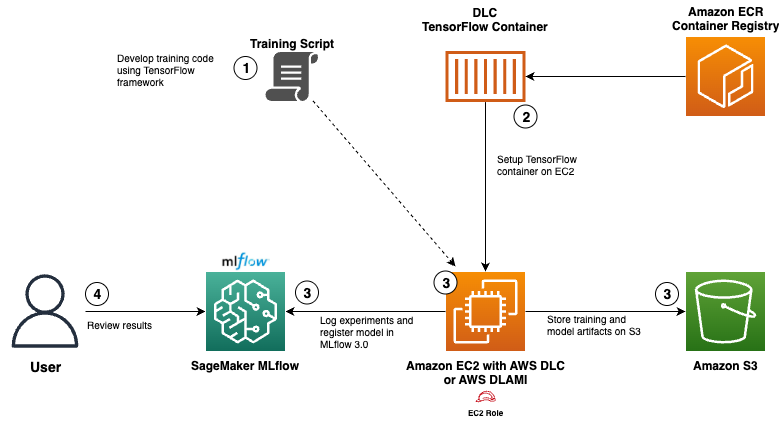

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

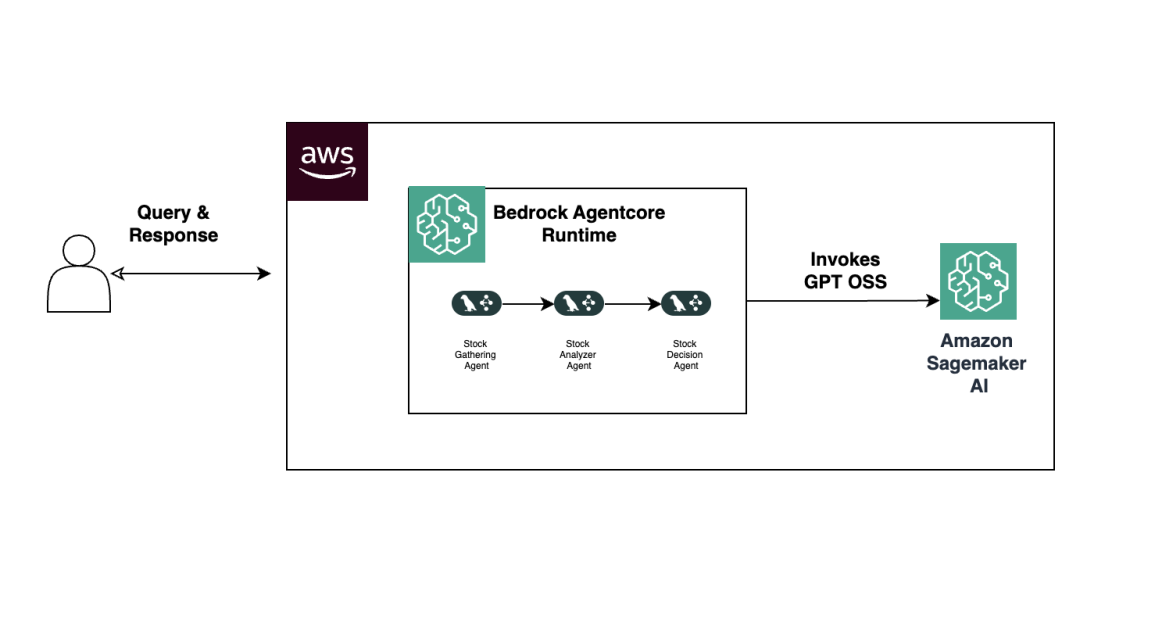

Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

An end-to-end look at deploying OpenAI GPT OSS models on SageMaker AI and Bedrock AgentCore to power a multi-agent stock analyzer with LangGraph, including 4-bit MXFP4 quantization, serverless orchestration, and scalable inference.