Automate advanced agentic RAG pipelines using Amazon SageMaker AI

Source: https://aws.amazon.com/blogs/machine-learning/automate-advanced-agentic-rag-pipeline-with-amazon-sagemaker-ai/ (AWS ML Blog)

TL;DR

- Retrieval Augmented Generation (RAG) connects large language models to enterprise knowledge to build advanced generative AI apps.

- A reliable RAG pipeline is rarely a one-shot effort; teams test many configurations across chunking, embeddings, retrieval, and prompts.

- Amazon SageMaker AI, integrated with SageMaker Pipelines and managed MLflow, enables end-to-end automation, versioning, and governance for RAG workflows.

- CI/CD practices with automated promotion from development to staging and production improve reproducibility and reduce operational risk.

- MLflow provides centralized experiment tracking, logging of parameters, metrics, and artifacts across all pipeline stages, supporting traceability and robust governance.

Context and background

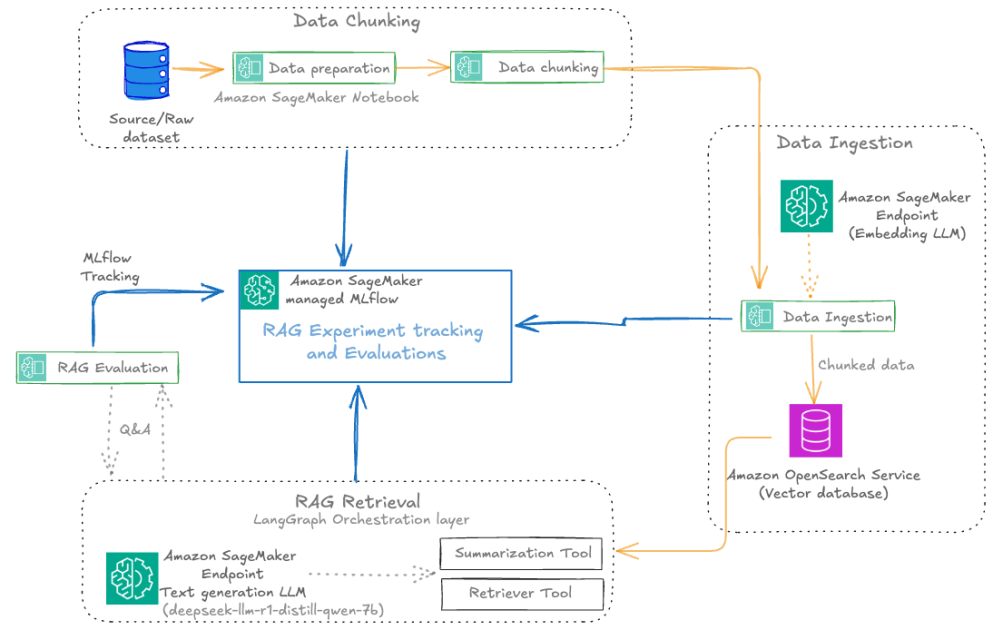

Retrieval Augmented Generation (RAG) is a fundamental approach for building generative AI applications that connect large language models (LLMs) to enterprise knowledge. However, crafting a reliable RAG pipeline is rarely a one-shot process. Teams typically evaluate dozens of configurations (varying chunk sizes, embedding models, retrieval techniques, and prompt designs) before identifying a solution that meets their use case. Manual RAG pipeline management leads to inconsistent results, lengthy troubleshooting, and difficulty reproducing successful configurations. In addition, documentation of parameter choices can be scattered, and there is limited visibility into component performance, which complicates cross-team collaboration and governance.

The post argues for streamlining the RAG development lifecycle from experimentation to automation, so RAG solutions can be operationalized for production deployments with Amazon SageMaker AI. By combining experimentation with automation, teams can verify that the entire pipeline is versioned, tested, and promoted as a cohesive unit, enabling traceability, reproducibility, and risk mitigation as RAG systems advance from development to production. Integrating SageMaker managed MLflow for experiment tracking with SageMaker Pipelines for orchestration creates a unified platform for logging configurations, metrics, and artifacts while enabling repeatable, version-controlled automation across data ingestion, chunking, embedding generation, retrieval, and generation (AWS ML Blog).

The architecture supports scalable, production-ready deployment by promoting validated RAG configurations from development to staging and production environments, accounting for data, configuration, and infrastructure differences across environments. The approach emphasizes monitoring at every stage of the pipeline, including chunking quality, retrieval relevance, answer correctness, and LLM evaluation scores, to ensure robust performance on production-like data.

What’s new

The article presents a cohesive methodology to automate an advanced agentic RAG pipeline on Amazon SageMaker AI. Key elements include:

- End-to-end automation of RAG workflows from data preparation and vector embedding generation to model inference and evaluation using SageMaker Pipelines, with repeatable, version-controlled code.

- Deep integration with SageMaker managed MLflow for centralized experiment tracking across all pipeline stages. Each RAG experiment is a top‑level MLflow run with nested runs for data preparation, data chunking, data ingestion, RAG retrieval, and RAG evaluation, enabling granular visibility and lineage.

- A production-ready lifecycle that couples experimentation in SageMaker Studio with automated promotion via CI/CD to staging or production, ensuring stage-specific metrics are validated on production-like data before deployment.

- Support for multiple chunking strategies (fixed-size and recursive) to compare how granularity affects embedding and retrieval quality.

- Emphasis on governance and reproducibility through centralized metadata, data lineage, PII-type tracking, and comprehensive metric capture at each stage.

- A structured, code-based reference implementation in a GitHub repository, illustrating how to evolve RAG experiments into automated pipelines.

The architecture diagram highlighted in the post demonstrates how SageMaker Pipelines orchestrate the entire workflow and how MLflow serves as the centralized tracking surface across the pipeline, enabling side-by-side comparisons of configurations and results across experiments. This ecosystem aims to reduce manual intervention, improve repeatability, and scale RAG deployments across environments.
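The hierarchical tracking described in the list above (one top-level MLflow run per experiment, with a nested run per stage) can be sketched roughly as follows. This is an illustrative sketch, not the post's reference implementation: the function name `log_rag_experiment`, the run name `rag-experiment`, and the stage identifiers are assumptions made here. The `tracker` argument is expected to be the `mlflow` module itself; it is passed in as a parameter only so the function can be exercised with a stub.

```python
# Stage names mirror the nested runs listed in the post; the exact
# identifiers are assumptions made for this sketch.
STAGES = (
    "data_preparation",
    "data_chunking",
    "data_ingestion",
    "rag_retrieval",
    "rag_evaluation",
)


def log_rag_experiment(tracker, experiment_name, config, stage_metrics):
    """Log one RAG experiment as a parent run with one nested run per stage.

    tracker:        the `mlflow` module (or any object exposing the same calls)
    config:         dict of experiment-level parameters, e.g. chunk size
    stage_metrics:  dict mapping a stage name to a dict of numeric metrics
    """
    tracker.set_experiment(experiment_name)
    # Parent run: holds the configuration shared by all stages.
    with tracker.start_run(run_name="rag-experiment"):
        tracker.log_params(config)
        for stage in STAGES:
            # nested=True attaches the child run to the active parent run.
            with tracker.start_run(run_name=stage, nested=True):
                metrics = stage_metrics.get(stage, {})
                if metrics:
                    tracker.log_metrics(metrics)
```

With the `mlflow` package installed, the module can be passed in directly, for example `log_rag_experiment(mlflow, "rag-experiments", {"chunk_size": 512}, {"rag_retrieval": {"relevance": 0.8}})`, where the experiment name and values are placeholders. Pointing the client at a SageMaker managed MLflow tracking server is not shown here.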

Why it matters (impact for developers/enterprises)

- Reproducibility and governance: By versioning the entire RAG pipeline and logging every run, team members can audit, compare, and reproduce results, which is critical in enterprise settings.

- Faster experimentation and collaboration: Centralized MLflow tracking and a unified CI/CD flow accelerate iterative experimentation, reduce configuration drift, and enable teams to collaborate more effectively.

- Scalable, production-ready deployments: Automating promotion of validated configurations from development to staging and production helps ensure that data, configurations, and infrastructure are appropriately tested before live use.

- Production-quality monitoring: Metrics at every stage—chunk quality, retrieval relevance, answer correctness, and LLM evaluation scores—support continuous improvement and risk mitigation in real-world scenarios.

- Clear data governance: Logging data source details, detected PII types, and data lineage supports compliance and trustworthy AI practices in enterprise environments.

Technical details or Implementation

The solution described in the post centers on a RAG pipeline built on SageMaker AI, with SageMaker managed MLflow for tracking and SageMaker Pipelines for orchestration. Core components include:

- SageMaker managed MLflow for centralized, hierarchical experiment tracking: Each RAG experiment is organized as a top-level MLflow run under a specific experiment name, with nested runs for stages such as data preparation, data chunking, data ingestion, RAG retrieval, and RAG evaluation. This hierarchical structure enables granular logging of parameters, metrics, and artifacts at every step and preserves clear lineage from raw data to final results.

- SageMaker Pipelines for end-to-end orchestration: Pipelines manage dependencies between critical stages—from data ingestion and chunking to embedding generation, retrieval, and generation—providing repeatable, version-controlled automation across environments. The platform supports promoting validated configurations from development to staging and production with IaC-driven traceability.

- CI/CD for automated promotion: The automated promotion phase uses CI/CD to trigger pipeline execution in target environments, validating stage-specific metrics against production data before deployment. Configurations are therefore exercised under production-like conditions and shown to be robust before going live.

- Production-focused lifecycle and data readiness: The workflow emphasizes data preparation quality—tracking metrics such as total question-answer pairs, unique questions, average context length, and initial evaluation predictions—and recording metadata like data source, detected PII types, and data lineage to support reproducibility and trust.

- RAG workflow stages and experimentation: The pipeline comprises data ingestion, chunking, retrieval, and evaluation. The architecture supports multiple chunking strategies (including fixed-size and recursive) to assess how chunking affects embedding quality and retrieval relevance. MLflow UI provides a side-by-side view of experiments to compare results across different configurations.

- Practical notes on the implementation: The article suggests using the provided code from a GitHub repository as a reference implementation to illustrate how RAG experiment evolution and automation can be achieved in practice.

The RAG pipeline is designed to be evaluated with production-like data at each stage, ensuring accuracy, relevance, and robustness before deployment. By capturing and visualizing metrics across data preparation, chunking, embedding, retrieval, and evaluation, teams can systematically refine configurations and governance, leading to more reliable operational AI systems. The approach also highlights the importance of treating the entire RAG pipeline as the unit of deployment, not just individual subsystems such as chunking or orchestration layers.
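To make the two chunking strategies named above concrete, here is a minimal standard-library sketch of fixed-size and recursive splitting. It is not taken from the post or its repository, which may well use a library text splitter. The function names, the character-based sizes, and the separator order (paragraph, line, sentence, word) are all assumptions chosen for illustration.

```python
def fixed_size_chunks(text: str, size: int, overlap: int = 0) -> list[str]:
    """Cut text into windows of `size` characters; consecutive windows
    share `overlap` characters."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def recursive_chunks(text: str, max_size: int,
                     separators=("\n\n", "\n", ". ", " ")) -> list[str]:
    """Split on the coarsest separator first, then recurse with finer
    separators on any piece that is still longer than `max_size`."""
    if len(text) <= max_size:
        return [text] if text.strip() else []
    if not separators:
        # Nothing left to split on: fall back to hard fixed-size cuts.
        return fixed_size_chunks(text, max_size)
    head, *rest = separators
    chunks, buffer = [], ""
    for piece in text.split(head):
        # Greedily merge neighbouring pieces while they still fit.
        candidate = f"{buffer}{head}{piece}" if buffer else piece
        if len(candidate) <= max_size:
            buffer = candidate
            continue
        if buffer:
            chunks.append(buffer)
        if len(piece) <= max_size:
            buffer = piece
        else:
            chunks.extend(recursive_chunks(piece, max_size, tuple(rest)))
            buffer = ""
    if buffer:
        chunks.append(buffer)
    return chunks
```

Running both functions over the same document with the same size budget gives two chunk sets whose counts and length distributions can be logged as chunking-stage metrics and compared side by side. The recursive variant tends to keep paragraphs and sentences intact, while the fixed-size variant gives uniform lengths.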

Key takeaways

- A robust RAG pipeline requires iterative experimentation and careful automation to reach production readiness.

- Integrating SageMaker Pipelines with SageMaker managed MLflow provides a cohesive platform for end-to-end automation, tracking, and governance.

- Automated promotion from development to staging and production, guided by CI/CD, helps maintain consistency across environments and meet enterprise compliance needs.

- Centralized experiment tracking with hierarchical MLflow runs enables granular visibility and reproducibility across data preparation, chunking, ingestion, retrieval, and evaluation.

- Production-like evaluation and metrics at every stage are essential to ensure quality and reliability in real-world deployments.
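The automated promotion described in the takeaways above comes down to a metric gate: a CI/CD job runs the pipeline in the target environment, then compares the resulting metrics against minimum thresholds before allowing deployment. The sketch below shows one way such a gate could look. The function name `promotion_decision`, the metric names, and the threshold values are hypothetical and do not come from the post.

```python
def promotion_decision(metrics: dict, thresholds: dict) -> tuple[bool, list[str]]:
    """Return (approved, failures).

    Every entry in `thresholds` is treated as a minimum the corresponding
    metric must reach; a missing metric counts as a failure.
    """
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif value < minimum:
            failures.append(f"{name}: {value:.2f} < {minimum:.2f}")
    return (not failures, failures)


# Illustrative values only: metrics as they might come back from a staging run.
staging_metrics = {"retrieval_relevance": 0.82, "answer_correctness": 0.74}
minimums = {"retrieval_relevance": 0.80, "answer_correctness": 0.75}

approved, failures = promotion_decision(staging_metrics, minimums)
print("promote" if approved else f"block: {failures}")
```

In a CI/CD job, a non-approved result would typically translate into a non-zero exit code so that the promotion step stops there.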

FAQ

- What is RAG and why is it challenging to productionize?

  RAG connects LLMs to enterprise knowledge to enable advanced AI applications, but producing reliable results requires testing many configurations across chunking, embeddings, retrieval, and prompts, along with robust governance and reproducibility.

- How do SageMaker AI, MLflow, and Pipelines work together in this approach?

  MLflow provides centralized, hierarchical experiment tracking across all pipeline stages; SageMaker Pipelines orchestrates end-to-end workflows; together they enable versioned, repeatable automation with CI/CD support.

- What does automated promotion involve, and why is it important?

  Automated promotion uses CI/CD to trigger pipeline runs in target environments and validates stage-specific metrics against production data, ensuring that configurations are safe and effective before deployment.

- What kinds of metrics are tracked in this workflow?

  Metrics include chunk quality, retrieval relevance, answer correctness, LLM evaluation scores, data quality indicators (e.g., total QA pairs, unique questions, average context length), and data lineage/PII metadata.

- Where can I find an implementation example?

  The article references a GitHub repository with a reference implementation to illustrate RAG experiment evolution and automation (as described in the AWS blog).
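The data quality indicators mentioned in the metrics answer above (total QA pairs, unique questions, average context length) are simple to compute during data preparation. The sketch below assumes a hypothetical record schema with `question`, `answer`, and `context` keys and measures context length in characters. The post does not specify either choice, and a token count would be an equally reasonable measure.

```python
def data_quality_metrics(records: list[dict]) -> dict:
    """Summarise a QA dataset before it enters the RAG pipeline.

    Each record is assumed to be a dict with 'question', 'answer' and
    'context' string fields (a schema invented for this sketch).
    """
    total = len(records)
    # Normalise case and surrounding whitespace so trivial duplicates collapse.
    unique_questions = len({r["question"].strip().lower() for r in records})
    avg_context = (
        sum(len(r["context"]) for r in records) / total if total else 0.0
    )
    return {
        "total_qa_pairs": total,
        "unique_questions": unique_questions,
        "avg_context_length": avg_context,
    }
```

The returned dictionary holds only numeric values, so it could be logged as the metrics of a data-preparation run in an experiment tracker.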
