How Quantization Aware Training Enables Low-Precision Accuracy Recovery

Sources: https://developer.nvidia.com/blog/how-quantization-aware-training-enables-low-precision-accuracy-recovery/, NVIDIA Dev Blog

TL;DR

- Quantization aware training (QAT) and quantization aware distillation (QAD) extend post-training quantization (PTQ) by training the model to adapt to low-precision representations, often recovering inference accuracy that PTQ alone loses.

- QAT uses fake quantization in the forward pass, so it does not require native hardware support for the target format. It can be integrated into existing high-precision training pipelines and uses the straight-through estimator (STE) to compute gradients.

- QAD pairs a quantized student with a full-precision teacher through a distillation loss, exposing quantization errors during training to improve final accuracy. PTQ, QAT, and QAD all work with TensorRT Model Optimizer formats such as NVFP4 and MXFP4.

- Real-world results show sizable gains for certain models and benchmarks (e.g., Llama Nemotron Super) when applying QAD, while many models still perform well with PTQ alone; data quality and hyperparameters remain critical.

- NVIDIA provides APIs in TensorRT Model Optimizer for QAT, plus experimental APIs for QAD, along with guidance and tutorials to help practitioners prepare and deploy models for low-precision inference on NVIDIA GPUs.

Context and background

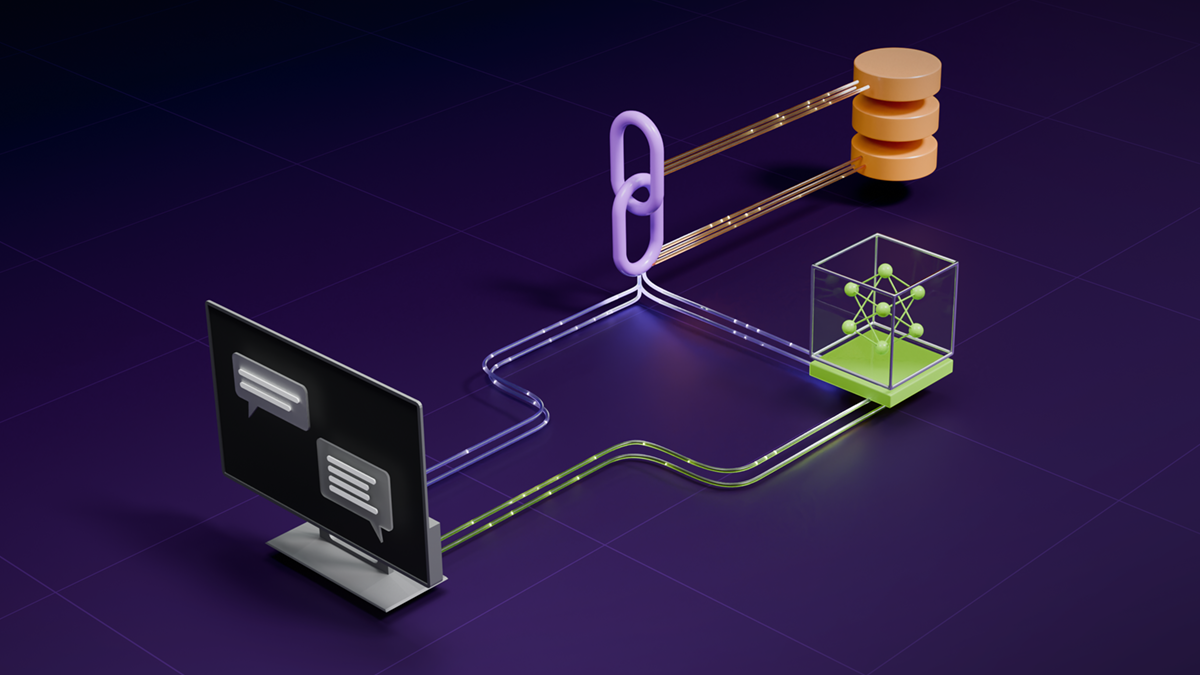

Post-training quantization (PTQ) is a common approach to compressing and deploying models: after full-precision training, it uses a calibration dataset to apply numerical scaling that maps weights to lower-precision data types. However, PTQ can fall short for some architectures or workloads, which motivates Quantization Aware Training (QAT) and Quantization Aware Distillation (QAD).

QAT aims to preserve accuracy by teaching the model to operate under low-precision arithmetic during an additional training phase after pre-training. Unlike PTQ, which quantizes an already trained model, QAT trains the model with quantized values in the forward path. In practice, the QAT workflow is almost identical to PTQ; the key difference is the training loop that follows the quantization recipe. QAT typically uses “fake quantized” weights and activations in the forward pass: the lower precision is represented within a higher-precision data type through a quantize/dequantize operator, so native hardware support is not strictly required. For example, QAT for NVFP4 can run on Hopper GPUs using simulated quantization, while backward gradients are computed in higher precision via the straight-through estimator (STE).

QAD, by contrast, adds a distillation component. Its goal is to recover accuracy lost to post-training quantization by aligning the quantized student’s outputs with those of a full-precision teacher model trained on the same data. The teacher stays at full precision, while the student runs fake-quantized computations during distillation. The distillation loss measures how far the quantized predictions deviate from the teacher’s outputs, guiding the student toward the teacher’s behavior even under quantization. For QAT, TensorRT Model Optimizer supports the same quantization formats as its PTQ workflow, including FP8, NVFP4, MXFP4, INT8, and INT4.
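A minimal sketch of the quantize/dequantize (“fake quantization”) operator and the straight-through estimator may make the mechanics concrete. It assumes a symmetric signed INT4 grid with a fixed, hand-picked scale and works on plain Python floats; toolkits such as Model Optimizer apply the same idea to tensors with calibrated scales, and the function names here are hypothetical.

```python
def fake_quantize(x: float, scale: float, num_bits: int = 4) -> float:
    """Quantize-dequantize (QDQ) on a symmetric signed integer grid.

    The result is still a float, but it can only take values the low-precision
    format can represent, which is what the forward pass of QAT sees.
    """
    qmax = 2 ** (num_bits - 1) - 1                # 7 for signed INT4
    q = max(-qmax, min(qmax, round(x / scale)))   # round to nearest (ties to even), then clip
    return q * scale                              # map back to the high-precision type


def ste_grad(x: float, scale: float, upstream_grad: float, num_bits: int = 4) -> float:
    """Straight-through estimator for the QDQ op above.

    Rounding has zero gradient almost everywhere, so STE treats it as the identity:
    the upstream gradient passes through unchanged for inputs inside the clipping
    range and is zeroed for inputs that were clipped (a common STE variant).
    """
    qmax = 2 ** (num_bits - 1) - 1
    inside = -qmax * scale <= x <= qmax * scale
    return upstream_grad if inside else 0.0


scale = 0.25  # hypothetical fixed scale; real recipes calibrate it from the data
for w in (0.3, -0.6, 2.0):
    print(w, "->", fake_quantize(w, scale), "grad:", ste_grad(w, scale, upstream_grad=1.0))
```

In PyTorch, the unclipped form of this trick is often written as `w + (fake_q(w) - w).detach()` (with `fake_q` standing in for any QDQ function): the forward value equals the quantized weight, while the gradient flows to `w` unchanged.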
The output of QAT in Model Optimizer is a new model in the original precision, with updated weights and the metadata needed to convert it to the target format. The QAT workflow mirrors PTQ up to the training loop, so practitioners can reuse familiar training components such as optimizers, schedulers, and learning rate strategies. For developers, this approach integrates cleanly into existing Hugging Face and PyTorch pipelines, enabling experimentation with low-precision formats without abandoning established workflows.

In the current Model Optimizer implementation, QAD is experimental. It begins with the same steps as QAT and PTQ for the student model; a distillation configuration then defines elements such as the teacher model, training arguments, and the distillation loss function. NVIDIA notes that ongoing work will simplify these steps and improve the QAD experience. For hands-on exploration, the NVIDIA blog points to a complete Jupyter notebook walkthrough and to the QAD section of the Model Optimizer repository for the latest code examples and documentation.

Not all models require QAT or QAD: many retain more than 99.5% of their original accuracy across key benchmarks with PTQ alone. In cases like Llama Nemotron Super, however, QAD yields notable gains. Across various benchmarks, QAD matched and in some cases exceeded the accuracy of PTQ alone; the report highlights improvements on GPQA Diamond, LiveCodeBench, Math-500, and AIME 2024, where QAD helped close the gap to the full-precision baselines. The precise impact varies by model and task, and at 4 bits, NVFP4’s finer-grained scaling helps preserve small signals and outliers. From a practical perspective, the success of QAT and QAD depends heavily on the quality of training data, hyperparameters, and model architecture.
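The “99.5% of original accuracy” comparison reduces to a per-benchmark ratio. The sketch below flags benchmarks where a PTQ checkpoint falls below an assumed retention threshold, as a hypothetical first check before investing in QAT or QAD. The function names, benchmark names, and scores are placeholders, not figures from the article.

```python
def accuracy_retention(quantized: dict, baseline: dict) -> dict:
    """Ratio of quantized accuracy to full-precision accuracy, per benchmark."""
    return {name: quantized[name] / baseline[name] for name in baseline}


def benchmarks_below_threshold(quantized: dict, baseline: dict, threshold: float = 0.995) -> list:
    """Benchmarks where PTQ keeps less than `threshold` of baseline accuracy.

    The 0.995 default mirrors the 99.5% figure in the text; it is an assumed
    cutoff for illustration, not a rule from NVIDIA.
    """
    ratios = accuracy_retention(quantized, baseline)
    return sorted(name for name, r in ratios.items() if r < threshold)


# Placeholder scores, invented for illustration only.
baseline_scores = {"benchmark_a": 80.0, "benchmark_b": 60.0}
ptq_scores = {"benchmark_a": 79.8, "benchmark_b": 57.0}
print(benchmarks_below_threshold(ptq_scores, baseline_scores))
```

A non-empty result would suggest trying QAT or QAD on those tasks, validated on representative data.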
For 4-bit quantization, formats like NVFP4 benefit from more granular, higher-precision scaling factors. Comparative results across VLM benchmarks show that NVFP4 can offer marginal but meaningful improvements over MXFP4 on certain tasks, underscoring the importance of choosing the right format for a given workload. For context, on the OpenVLM Hugging Face Leaderboard the gaps between top-ranked and lower-ranked models are small, so even marginal accuracy differences introduced by quantization choices can matter. Quantization aware techniques aim to combine the speed and memory advantages of FP4 execution with the accuracy of high-precision models.
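The difference between the two 4-bit formats can be illustrated with a simplified simulation. Both NVFP4 and MXFP4 store FP4 (E2M1) elements. MXFP4 shares one power-of-two (E8M0) scale per 32-element block, while NVFP4 uses 16-element blocks with an FP8 (E4M3) scale plus a per-tensor FP32 scale. The sketch models only block size and scale precision. It ignores the per-tensor scale and E4M3 subnormal and saturation cases, and it rounds ties toward the smaller magnitude rather than to even. The input is contrived to show the effect and is not taken from any benchmark.

```python
import math

# Non-negative magnitudes representable by FP4 E2M1 (the element type of both formats).
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def round_to_fp4(v: float) -> float:
    """Round to the nearest E2M1 value, saturating at +/-6 (ties go to the smaller magnitude)."""
    mag = min(FP4_GRID, key=lambda g: abs(abs(v) - g))
    return math.copysign(mag, v)


def floor_log2(x: float) -> int:
    """Exact floor(log2(x)) for x > 0, using frexp (x = m * 2**e with 0.5 <= m < 1)."""
    return math.frexp(x)[1] - 1


def mx_scale(amax: float) -> float:
    """MXFP4-style power-of-two scale: 2**(floor(log2(amax)) - 2); 2 is E2M1's max exponent."""
    return 2.0 ** (floor_log2(amax) - 2)


def nv_scale(amax: float) -> float:
    """NVFP4-style scale: amax / 6 rounded to 3 mantissa bits, approximating an E4M3 value."""
    s = amax / 6.0
    e = floor_log2(s)
    return round(s / 2.0 ** e * 8) / 8 * 2.0 ** e


def quantize_blocks(x, block_size, scale_fn):
    """Fake-quantize x block by block, with one shared scale per block."""
    out = []
    for start in range(0, len(x), block_size):
        block = x[start:start + block_size]
        amax = max(abs(v) for v in block)
        if amax == 0:
            out.extend(block)
            continue
        s = scale_fn(amax)
        out.extend(round_to_fp4(v / s) * s for v in block)
    return out


def total_abs_error(x, xq):
    return sum(abs(a - b) for a, b in zip(x, xq))


x = [3.5] * 16 + [0.35] * 16                                    # large and small values side by side
mx_err = total_abs_error(x, quantize_blocks(x, 32, mx_scale))  # one 32-value block
nv_err = total_abs_error(x, quantize_blocks(x, 16, nv_scale))  # two 16-value blocks
print(f"MXFP4-style error: {mx_err:.4f}  NVFP4-style error: {nv_err:.4f}")
```

Here the MXFP4-style path must use one power-of-two scale for all 32 values, which clips 3.5 down to 3.0 and rounds 0.35 coarsely. The NVFP4-style path gives each 16-value half its own finer scale, so its total error comes out several times lower on this input.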

What’s new

The NVIDIA article emphasizes that QAT and QAD extend PTQ by letting models adapt directly to low-precision environments, recovering accuracy where simple calibration falls short. TensorRT Model Optimizer now provides APIs that are natively compatible with Hugging Face and PyTorch, making it easier to prepare models for QAT and QAD while keeping familiar training workflows. Supported quantization formats include FP8, NVFP4, MXFP4, INT8, and INT4, and QAT produces a model that retains high inference accuracy once converted to the target format.

Applying QAT is straightforward: the code path mirrors PTQ up to the training loop, after which a standard training loop with tunable hyperparameters is required. NVIDIA suggests dedicating roughly 10% of the initial training epochs to QAT as a practical target; for large language models (LLMs), even smaller fractions of pre-training time, sometimes less than 1%, have been observed to suffice for restoring quality.

QAD adds a teacher-student paradigm. The student is quantized during distillation while the teacher remains in full precision, and the distillation loss aligns the student’s outputs with the teacher’s, exposing and compensating for quantization errors. After QAD, the resulting model has the same inference performance as a PTQ or QAT model but potentially higher accuracy. NVIDIA notes that TensorRT Model Optimizer’s QAD APIs are currently experimental and that the workflow is being simplified; the QAD section of the Model Optimizer repository and the accompanying Jupyter notebooks are the recommended sources of up-to-date guidance.

The article also notes that many models retain substantial accuracy with PTQ alone, so QAT and QAD are not universally required, and it highlights cases, such as Llama Nemotron Super, where QAD produced observable gains.
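The teacher-student objective can be sketched with a KL-divergence loss over softened output distributions, a common choice for logit distillation. This is an assumption for illustration: the loss that Model Optimizer’s experimental QAD API uses by default may differ. The toy linear “model”, its weights, and the quantization scale below are hypothetical. The teacher uses full-precision weights, and the student uses the same weights after fake quantization.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a distillation temperature."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL(teacher || student) on softened distributions, scaled by T**2 (a common convention)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


def fake_quantize(w, scale=0.25, qmax=7):
    """Symmetric INT4-style quantize/dequantize with a fixed, hypothetical scale."""
    return max(-qmax, min(qmax, round(w / scale))) * scale


def linear(weights, x):
    """Toy one-layer model: logits = weights @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


teacher_w = [[0.9, -0.3], [0.2, 0.55], [-0.7, 0.1]]                 # full precision
student_w = [[fake_quantize(w) for w in row] for row in teacher_w]   # fake-quantized copy
x = [1.0, 2.0]

loss = kl_distillation_loss(linear(teacher_w, x), linear(student_w, x), temperature=2.0)
print(f"distillation loss: {loss:.5f}")
```

During QAD, this loss would be minimized with respect to the student’s high-precision weights, with gradients passing through the fake-quantization step via STE, while the teacher stays frozen.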
When quantizing to 4-bit data types, NVFP4 generally benefits from more granular scaling factors, and comparative results across benchmarks show NVFP4 delivering slightly higher accuracy in some scenarios. Overall, QAT and QAD extend the benefits of PTQ with a targeted training phase that helps models adapt to low-precision environments, delivering the speed and efficiency advantages of FP4 execution while maintaining robust accuracy for production deployments.

Why it matters (impact for developers/enterprises)

For developers and enterprises deploying AI at scale, efficient inference in low-precision formats translates into higher throughput, lower memory usage, and reduced cost. PTQ provides a baseline level of compression and speed, but QAT and QAD offer pathways to recover or improve accuracy when simple calibration falls short. The NVIDIA analysis shows that, depending on the model and task, QAD can close the gap between low-precision inference and full-precision baselines by several percentage points, which can be meaningful for end-user applications. The practical takeaway is a complete quantization strategy: PTQ for broad, straightforward compression; QAT when higher FP4 inference accuracy is required and the extra training time is acceptable; and QAD when the additional distillation step yields meaningful gains without altering the underlying architecture. Because TensorRT Model Optimizer’s APIs are compatible with PyTorch and Hugging Face, teams can test formats such as NVFP4 and MXFP4 within familiar workflows. For real-world deployments, this means selecting the right quantization format for a given workload, applying the right amount of QAT or QAD, and validating performance with representative data.

Technical details or Implementation

- QAT overview: QAT trains with quantized values in the forward path to simulate deployment-time behavior, exposing rounding and clipping effects during training. It typically inserts quantize/dequantize (fake quantization) operators in the forward pass and uses the straight-through estimator (STE) in the backward pass, which treats the non-differentiable rounding step as an identity so gradients can be computed in higher precision. This makes QAT feasible without native hardware support and lets it integrate into existing high-precision pipelines.

- Training loop and duration: QAT requires a training loop that includes standard hyperparameters like optimizer choices and learning rate schedules. NVIDIA suggests running QAT for about 10% of the initial training epochs as a practical target. In LLM contexts, even smaller fractions of pre-training time have shown benefits in restoring model quality.

- Formats and metadata: QAT in Model Optimizer supports formats including FP8, NVFP4, MXFP4, INT8, and INT4. The output is a model in the original precision with updated weights and metadata to enable conversion to the target low-precision format.

- QAD details: QAD mirrors the initial steps of QAT and PTQ for the quantization recipe, then introduces a distillation configuration. The distillation process uses a full-precision teacher model and a quantized student, with a distillation loss guiding the student toward the teacher’s behavior. The student’s computations are fake quantized during distillation while the teacher remains full precision. After QAD, the resulting model maintains inference performance similar to PTQ/QAT but can exhibit higher accuracy because quantization errors are accounted for during training. NVIDIA notes that QAD APIs are experimental and will undergo improvements to simplify application.

- Practical guidance: the success of QAT and QAD depends on data quality, hyperparameters, and model architecture. When quantizing to 4-bit data types, NVFP4 can provide finer-grained scaling that helps preserve small signals and outliers. The formats NVFP4 and MXFP4 exhibit small yet meaningful differences across benchmarks, reinforcing the importance of format selection for a given workload.

- Integration and workflow: TensorRT Model Optimizer offers APIs compatible with Hugging Face and PyTorch to streamline preparation for QAT and QAD while leveraging familiar training workflows. The overall workflow enables researchers and engineers to compress, fine-tune, and deploy models on NVIDIA GPUs with a balance of speed, size, and accuracy.
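To illustrate what “metadata to enable conversion” can mean, the sketch below turns high-precision weights into INT4 codes plus one scale per block. Dequantizing the result reproduces each weight to within half a quantization step. The packed dictionary layout, block size, and function names are hypothetical and do not reflect Model Optimizer’s actual checkpoint format.

```python
def export_int4_blocks(weights, block_size=4):
    """Turn high-precision weights into INT4 codes plus one scale per block.

    The returned dict is a hypothetical layout: the codes are what a low-precision
    kernel would consume, and the scales are the metadata needed to interpret them.
    """
    codes, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        amax = max(abs(w) for w in block)
        scale = amax / 7 if amax > 0 else 1.0   # map the largest magnitude to code 7
        scales.append(scale)
        codes.extend(max(-7, min(7, round(w / scale))) for w in block)
    return {"codes": codes, "scales": scales, "block_size": block_size}


def dequantize(packed):
    """Recover approximate high-precision weights from codes and per-block scales."""
    bs = packed["block_size"]
    return [c * packed["scales"][i // bs] for i, c in enumerate(packed["codes"])]


weights = [0.7, -0.35, 0.1, 0.0, 1.4, 0.2, -1.4, 0.6]  # e.g. weights after QAT
packed = export_int4_blocks(weights)
restored = dequantize(packed)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(packed["codes"], packed["scales"], f"max error: {max_err:.4f}")
```

For QAT to pay off, the scales written at export would need to match those the fake quantizer used during training. The converted model then computes the same values the model learned to tolerate.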

Key takeaways

- QAT and QAD extend PTQ by explicitly teaching models to cope with low-precision arithmetic, often yielding higher inference accuracy.

- QAT uses fake quantization in the forward pass and does not require native hardware support; it integrates with existing training pipelines and supports FP8, NVFP4, MXFP4, INT8, and INT4.

- QAD adds a distillation objective, aligning a quantized student with a full-precision teacher to compensate for quantization error and potentially yield higher accuracy.

- PTQ remains effective for many models, but QAT/QAD can deliver notable gains for certain architectures and tasks, particularly at 4-bit precision.

- Training data quality, hyperparameters, and model design critically influence the effectiveness of quantization-aware methods; experiments are supported via TensorRT Model Optimizer with PyTorch/Hugging Face APIs.

FAQ

- What is QAT?

QAT trains the model with quantized values in the forward path to adapt to low-precision arithmetic, using a training phase after applying the quantization recipe.

- How does QAD differ from QAT?

QAD adds a distillation loss that aligns a quantized student model with a full-precision teacher, aiming to recover accuracy by accounting for quantization error during training.

- Do I need native hardware support to use QAT or QAD?

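No. QAT can be performed with simulated (fake) quantization and STE, so native hardware support for the target format is not required. QAD likewise runs the student with fake-quantized computations during distillation. Note that Model Optimizer’s QAD APIs are currently experimental.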

- How long should QAT be run during training?

NVIDIA suggests around 10% of the initial training epochs for QAT, with some LLM cases showing benefits even at less than 1% of pre-training time.

- Are there caveats to expect with QAT/QAD?

The effectiveness depends on data quality, hyperparameters, and model architecture; results vary across models and benchmarks, and careful experimentation is advised.
