Enhance video understanding with Amazon Bedrock Data Automation and open-set object detection

Source: https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/ (AWS ML Blog)

TL;DR

- Open-set object detection (OSOD) enables models to detect both known and previously unseen objects, using flexible prompts and adapting in real time without retraining.

- Amazon Bedrock Data Automation adds frame-level OSOD to video workflows via video blueprints, returning per-frame bounding boxes (XYWH), labels, and confidence scores.

- The service combines OSOD with Bedrock video capabilities such as chapter segmentation, frame-level text detection, and taxonomy classification to derive actionable insights.

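- Use cases span advertising optimization, security and safety monitoring, retail content search, and more, with output that can be filtered or customized to fit precision or recall goals. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)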

Context and background

In real-world video and image analysis, traditional closed-set object detection (CSOD) models, which are limited to a fixed list of predefined categories, struggle when objects appear that were not part of the training data. This challenge is magnified in dynamic environments where new, unknown, or user-defined objects frequently emerge. Examples include media publishers tracking emerging brands in user-generated content, advertisers analyzing product appearances in influencer videos despite visual variability, retailers enabling flexible, descriptive search, and self-driving or manufacturing contexts where novel debris or defects may appear. When unknown objects are encountered, CSOD models can misclassify or ignore them, reducing their usefulness for real-world workflows.

Open-set object detection (OSOD) addresses this gap by enabling models to detect both known objects and objects not seen during training. OSOD supports flexible input prompts, from specific object names to open-ended descriptions, and can adapt to user-defined targets in real time without retraining. By combining visual recognition with semantic understanding, often via vision-language models, OSOD lets users query the system broadly, even when the target is unfamiliar or ambiguous. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

Amazon Bedrock Data Automation is a cloud-based service that extracts insights from unstructured content, including documents, images, video, and audio. For video, it supports chapter segmentation, frame-level text detection, chapter-level classification using Interactive Advertising Bureau (IAB) taxonomies, and frame-level OSOD. The OSOD capability is available on Bedrock Data Automation video blueprints, enabling a video + text prompt workflow to detect desired objects at the frame level. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

What’s new

The OSOD capability is integrated into Amazon Bedrock Data Automation’s video analysis features, delivering powerful, flexible object detection without retraining.

- Frame-level OSOD in video blueprints: You provide a video and a text prompt specifying the objects to detect. For each frame, the model outputs a dictionary containing bounding boxes in XYWH format (the x and y coordinates of the top-left corner, followed by width and height), along with corresponding labels and confidence scores. This enables precise localization and ranking of detections across the timeline.

- Flexible prompt and dynamic fields: The input text can be highly flexible, enabling dynamic fields in the video blueprints powered by OSOD. You can define prompts that reflect current business questions, such as locating certain devices or objects, or more general scene-level targets.

- Output customization: The workflow supports filtering by high-confidence detections or other criteria to balance precision and recall according to needs.

- Broader Bedrock video capabilities: In addition to OSOD, the platform provides chapter segmentation, frame-level text detection, and chapter-level IAB taxonomy classification, all integrated with the OSOD capability to support end-to-end video insights. This combination helps streamline contextual advertising, security monitoring, and other analysis workflows.

- Practical use cases and examples: Advertisers can run A/B tests to compare ad placement effectiveness across locations; prompts like “Detect the locations of echo devices” demonstrate how the system can be steered toward practical, business-relevant targets. Other prompts illustrate identifying key elements in a video, or checking for potentially dangerous elements in home-security scenarios. The system can also locate specific objects with descriptive prompts like “Detect the white car with red wheels.”

- Outputs and sample blueprints: A sample blueprint schema and example outputs are provided to illustrate how objects and their bounding boxes appear in the per-frame results. While the article references a GitHub repository for full examples, the key takeaway is that the OSOD-enabled blueprint outputs detailed per-frame detections that can be consumed by downstream pipelines. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

The OSOD capability within Bedrock Data Automation significantly enhances the ability to extract actionable insights from video content. By combining flexible text-driven queries with frame-level object localization, OSOD helps users across industries implement intelligent video analysis workflows, ranging from targeted ad evaluation and security monitoring to custom object tracking. Integrated into the broader suite of video analysis tools in Bedrock Data Automation, OSOD reduces the need for manual intervention and rigid pre-defined schemas, supporting scalable, real-world applications. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)
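
The per-frame output described in this section (XYWH bounding boxes, labels, and confidence scores) can be modeled roughly as in the sketch below. The class name `Detection`, its field names, and the idea that coordinates may be normalized to the range [0, 1] are assumptions made for illustration; the source does not spell out the exact schema, so the sample blueprint output should be treated as authoritative.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    # Hypothetical field names; the real blueprint output may use different keys.
    box_xywh: Tuple[float, float, float, float]  # (x, y, width, height), top-left origin
    label: str
    confidence: float


def xywh_to_xyxy(box):
    """Convert (x, y, width, height) to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


def scale_to_pixels(box, frame_width, frame_height):
    """Scale an XYWH box to pixel units, ASSUMING normalized [0, 1] input."""
    x, y, w, h = box
    return (x * frame_width, y * frame_height, w * frame_width, h * frame_height)


# One frame's detections, with made-up values for illustration.
frame_detections: List[Detection] = [
    Detection((0.25, 0.5, 0.5, 0.25), "echo device", 0.91),
]

for det in frame_detections:
    print(det.label, xywh_to_xyxy(det.box_xywh))
    # prints: echo device (0.25, 0.5, 0.75, 0.75)
```

Corner coordinates are often what drawing and cropping utilities expect, which is why a conversion step like this tends to sit between the detection output and any downstream consumer.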

Why it matters (impact for developers/enterprises)

- Flexible query capability: OSOD enables searches that go beyond fixed category lists. Users can prompt the system with specific objects or open-ended descriptions, enabling broad and adaptive queries across video content.

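- No retraining required: The open-set approach allows models to detect unknown objects without the need to retrain on new labels, reducing time-to-insight and maintenance burden for enterprise pipelines. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)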

- Frame-accurate localization: By returning per-frame detections with XYWH bounding boxes, organizations can integrate precise object tracking into downstream workflows, including search, retrieval, and automated content moderation or safety checks.

- End-to-end video analysis: OSOD complements other Bedrock Data Automation video features, such as chapter segmentation and text detection, to provide a richer, context-aware view of video content across chapters and segments. This enables more nuanced analyses, better ad targeting, and safer deployments in consumer or enterprise environments.

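- Cross-industry applicability: Use cases span advertising and audience measurement, media moderation, retail-enabled search and indexing, security monitoring, and manufacturing quality checks, illustrating the broad value of combining OSOD with video analysis pipelines. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)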
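
To make the search and retrieval point above concrete, the following sketch builds a simple label-to-frames index from per-frame detections. The input layout (`frame_index`, `detections`, `label`, `bbox`, `confidence`) and the function name `build_label_index` are hypothetical, chosen only for this example; they are not the service's documented output format.

```python
from collections import defaultdict


def build_label_index(frames, min_confidence=0.0):
    """Map each label to the sorted frame indices in which it was detected."""
    index = defaultdict(list)
    for frame in frames:
        # Use a set so a label seen several times in one frame is counted once.
        labels = {
            d["label"]
            for d in frame["detections"]
            if d["confidence"] >= min_confidence
        }
        for label in labels:
            index[label].append(frame["frame_index"])
    return {label: sorted(indices) for label, indices in index.items()}


# Made-up per-frame results for illustration.
frames = [
    {"frame_index": 0, "detections": [
        {"label": "white car", "bbox": [10, 20, 100, 50], "confidence": 0.88},
    ]},
    {"frame_index": 1, "detections": []},
    {"frame_index": 2, "detections": [
        {"label": "white car", "bbox": [14, 22, 100, 50], "confidence": 0.93},
        {"label": "echo device", "bbox": [200, 80, 40, 40], "confidence": 0.61},
    ]},
]

index = build_label_index(frames)
print(index["white car"])    # prints: [0, 2]
print(index["echo device"])  # prints: [2]
```

An index like this lets a downstream application jump to the frames where a queried object appears, and raising `min_confidence` trades coverage for fewer false hits.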

Technical details and implementation

- Frame-level OSOD within Bedrock Data Automation: The core capability lets you input a video and a text prompt that specifies the desired objects to detect. For each frame, the system outputs a dictionary that includes:

  - Bounding boxes in XYWH format (x, y, width, height) for each detected object

  - Labels identifying the detected object

  - Confidence scores reflecting detection certainty

- How prompts work: The input text is highly flexible, enabling dynamic fields in the video blueprint, which allows teams to define prompts that reflect current business questions or target objects without retraining. This enables real-time adaptation to evolving needs.

- Output customization: Users can tailor the per-frame results, for example by filtering high-confidence detections to prioritize precision, or by adjusting thresholds to balance precision and recall in a given scenario.

- Integrated capabilities: Alongside OSOD, Bedrock Data Automation supports chapter segmentation (to divide video into meaningful sections), frame-level text detection (for on-frame textual content), and chapter-level IAB taxonomy classification (structured content tagging by taxonomy). The OSOD capability sits alongside these features to enable richer, frame-aware analyses.

- Example and schemas: The article references an example blueprint schema and an example of per-frame output, illustrating how objects and their bounding boxes appear within the video’s frame sequence. For more extensive examples, a GitHub repository is mentioned as the source of complete demonstrations. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

- Practical considerations: In practice, businesses can define prompts such as “Detect the locations of echo devices” or “Detect the white car with red wheels” to tailor detections to specific scenarios. Identifying the visually salient elements in a frame also helps inform resizing decisions for devices with different resolutions and aspect ratios. The per-frame localization enables precise ad evaluation, security reviews, and targeted content retrieval. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)
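
The output customization step listed above, filtering detections by confidence, might look like the minimal sketch below. The detection dictionaries, their keys, and the threshold values are illustrative assumptions rather than service defaults; a suitable threshold depends on the use case and should be chosen by inspecting real results.

```python
def filter_detections(detections, threshold):
    """Keep only detections whose confidence is at or above the threshold."""
    return [d for d in detections if d["confidence"] >= threshold]


# Made-up detections for a single frame (hypothetical keys and values).
detections = [
    {"label": "echo device", "bbox": [120, 80, 60, 60], "confidence": 0.92},
    {"label": "echo device", "bbox": [400, 210, 55, 58], "confidence": 0.55},
    {"label": "echo device", "bbox": [30, 300, 48, 50], "confidence": 0.31},
]

# A higher threshold favors precision; a lower one favors recall.
for threshold in (0.3, 0.5, 0.9):
    kept = filter_detections(detections, threshold)
    print(f"threshold={threshold}: kept {len(kept)} of {len(detections)}")
# prints:
# threshold=0.3: kept 3 of 3
# threshold=0.5: kept 2 of 3
# threshold=0.9: kept 1 of 3
```

Sweeping a few thresholds this way is a quick method for seeing how many detections survive at each level before fixing one value in a pipeline.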

Key table: OSOD in Bedrock Data Automation – features and benefits

| Feature | Benefit |
|---|---|
| Frame-level OSOD in video blueprints | Detect known and unseen objects per frame using flexible prompts |
| Output per frame | Bounding boxes (XYWH), labels, confidence scores for precise localization |
| Prompt flexibility | Define dynamic fields and targets without retraining |
| Output customization | Filter by confidence to balance precision and recall |
| Additional video tools | Chapter segmentation, frame-level text detection, IAB taxonomy classification for richer insights |

Key takeaways

- OSOD enables detecting both known and unseen objects in video, guided by flexible prompts and without retraining.

- Bedrock Data Automation provides frame-level OSOD within video blueprints, outputting per-frame object localization data (XYWH, labels, confidence).

- The platform integrates OSOD with other video analysis capabilities (chapter segmentation, text detection, taxonomy classification) to deliver end-to-end insights.

- Use cases span advertising optimization, security monitoring, retail search, and more, with outputs that can be customized to meet business requirements.

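- The approach reduces manual intervention and supports scalable, real-world video analysis workflows across industries. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)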

FAQ

- What is open-set object detection (OSOD)?

  OSOD enables models to detect both known and unseen objects, including those not encountered during training, using flexible prompts and often vision-language models. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

- How does Bedrock Data Automation implement OSOD on video?

  OSOD is applied at the frame level within video blueprints. You input a video and a text prompt; for each frame, the system outputs bounding boxes, labels, and confidence scores, with the ability to customize output. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

- Do I need to retrain models to use OSOD with Bedrock Data Automation?

  No. OSOD allows for real-time adaptation to new objects without retraining, using flexible prompts. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

- What kind of outputs are produced per frame?

  For each frame, the system provides a dictionary of detections that includes XYWH bounding boxes, object labels, and confidence scores. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

- What are some example use cases mentioned?

  Advertisers evaluating ad placements, home-security scenarios that check for dangerous elements, and searching for or locating specific objects such as a white car with red wheels, all through open-set prompts. [AWS ML Blog](https://aws.amazon.com/blogs/machine-learning/enhance-video-understanding-with-amazon-bedrock-data-automation-and-open-set-object-detection/)

References

More news

Shadow Leak shows how ChatGPT agents can exfiltrate Gmail data via prompt injection

Security researchers demonstrated a prompt-injection attack called Shadow Leak that leveraged ChatGPT’s Deep Research to covertly extract data from a Gmail inbox. OpenAI patched the flaw; the case highlights risks of agentic AI.

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

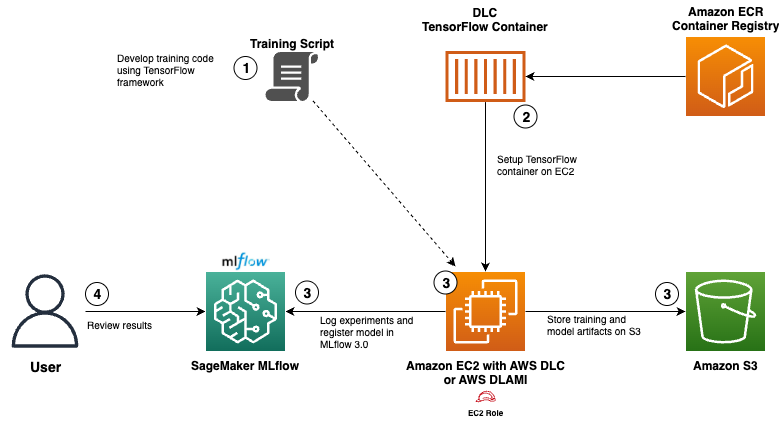

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.