London Stock Exchange Group Detects Market Abuse with AI-Powered Surveillance Guide on Amazon Bedrock

Sources: https://aws.amazon.com/blogs/machine-learning/how-london-stock-exchange-group-is-detecting-market-abuse-with-their-ai-powered-surveillance-guide-on-amazon-bedrock, AWS ML Blog

TL;DR

- London Stock Exchange Group (LSEG) partnered with AWS to enhance insider dealing detection using a generative AI prototype on Amazon Bedrock, leveraging Anthropic’s Claude Sonnet 3.5 model.

- The system analyzes articles from the Regulatory News Service (RNS) at scale, drawing on roughly 250,000 articles from six months of 2023 to predict price sensitivity and guide analyst review.

- A two-step classification framework with prompt optimization reduced false positives and helped analysts prioritize high-impact cases.

- Bedrock’s serverless, scalable model-inference and governance features support secure, auditable, enterprise-grade deployment for market surveillance.

- Early results show notable accuracy, and LSEG plans to expand the solution for internal use, improving efficiency and consistency in market abuse detection.

Context and background

London Stock Exchange Group (LSEG) is a global provider of financial markets data, infrastructure, and software. It operates the London Stock Exchange and supports international equity, fixed income, and derivative markets, while also offering real-time data products and post-trade services. Regulators expect market surveillance teams to keep pace with evolving risk profiles across MiFID asset classes, venues, and jurisdictions. The scale of activity is substantial: London Stock Exchange facilitates trading and reporting of over £1 trillion in securities by 400 members annually.

Given the complexity and dynamic nature of modern markets, surveillance systems must both detect sophisticated market abuse and manage vast volumes of data, relationships, and volatility. Traditionally, many surveillance systems were rule-based, manual, and slow to adapt to new tactics used by bad actors. Analysts faced high volumes of alerts and false positives, which strained resources and could delay investigations.

Against this backdrop, LSEG sought a solution that would automate parts of the review process, improve accuracy, and scale with market activity while maintaining regulatory compliance. In collaboration with AWS, LSEG developed an AI-powered Surveillance Guide to automate and augment analysts’ reviews of trades flagged for potential market abuse. The Guide analyzes news sensitivity and its potential market impact, helping analysts decide whether a highlighted activity warrants further investigation. The project combines a robust data pipeline, model-driven insights, and human-in-the-loop evaluation to balance automation with expert judgment.

What’s new

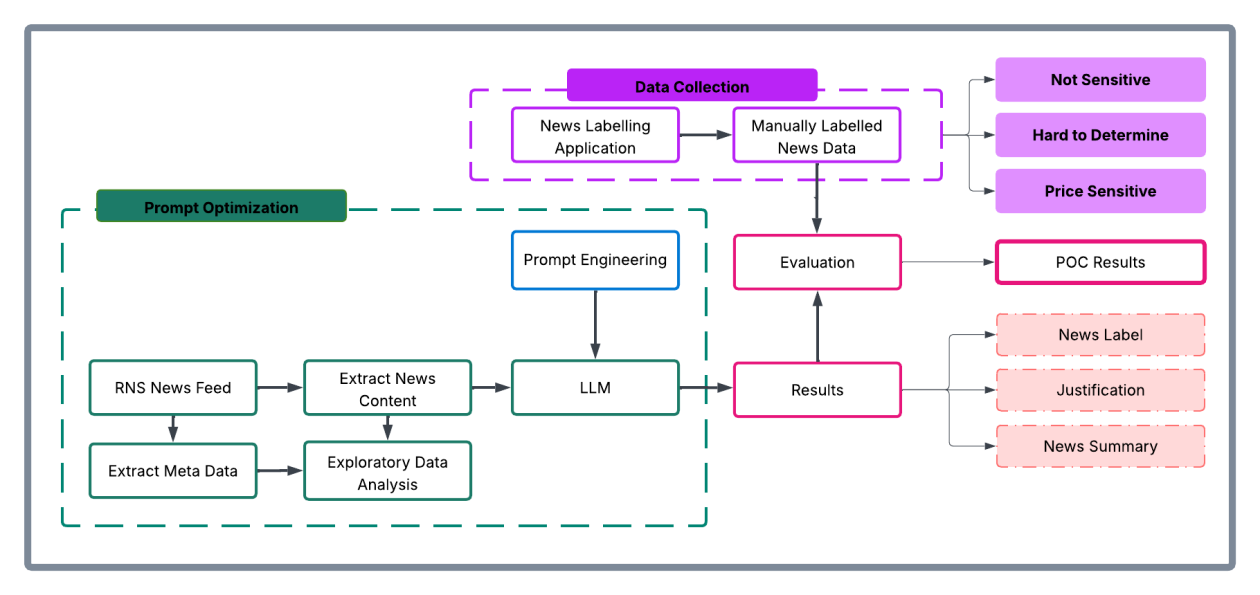

The core innovation is an AI-powered surveillance workflow built on Amazon Bedrock, using Anthropic’s Claude Sonnet 3.5 as the foundation model. Claude Sonnet 3.5 was selected for its performance characteristics relevant to processing financial news content. The system analyzes news from the London Stock Exchange’s Regulatory News Service (RNS) and classifies articles according to their potential market impact, specifically focusing on price sensitivity during the observed period.

A key aspect of the initiative is the data-driven evaluation conducted on a curated subset of articles. The team collected approximately 250,000 RNS articles spanning six consecutive months of 2023, pre-processed the raw HTML to extract clean textual content, and performed exploratory data analysis to understand distribution patterns within the RNS corpus. From this corpus, 110 articles covering major news categories were selected and evaluated by market surveillance analysts on a nine-point price-sensitivity scale. This expert-labeled evaluation served as the baseline for measuring the AI system’s performance.

Analytically, the project employed a two-step classification framework. The workflow processed news articles through stages that separated the task of identifying price-sensitive content from the task of assessing its likely impact on trading behavior. The outputs from both steps were merged according to predefined rules and then compared with the expert-labeled dataset to quantify accuracy. Throughout the process, prompts were iteratively refined to elicit three key components from the model and to maximize reliability, in particular by reducing false positives through favoring non-sensitive classifications when appropriate.

From a technical perspective, the implementation took place in Amazon SageMaker using Jupyter Notebooks for experimentation.
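The two-step flow described above can be sketched roughly as follows. This is a minimal illustration, not LSEG's implementation: the label names, the `Verdict` type, the keyword-based stub classifiers, and the merge rule (escalate only when both steps agree) are all assumptions, since the source does not publish the actual prompts or rules. In a real system each step would wrap a prompted foundation-model call.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical label names; the source does not publish the real ones.
SENSITIVE = "SENSITIVE"
NON_SENSITIVE = "NON_SENSITIVE"


@dataclass(frozen=True)
class Verdict:
    label: str
    justification: str


# Each step maps article text to a Verdict. Plain callables are used here
# so the merge logic can be exercised offline without any model access.
Classifier = Callable[[str], Verdict]


def merge(step1: Verdict, step2: Verdict) -> Verdict:
    """Illustrative merge rule (an assumption): escalate only if both steps agree."""
    if step1.label == SENSITIVE and step2.label == SENSITIVE:
        return Verdict(SENSITIVE, f"{step1.justification}; {step2.justification}")
    # Otherwise report the first step that judged the article non-sensitive.
    dissent = step1 if step1.label == NON_SENSITIVE else step2
    return Verdict(NON_SENSITIVE, dissent.justification)


def classify_article(text: str, detect: Classifier, assess: Classifier) -> Verdict:
    """Run both steps on the same article and merge their outputs."""
    return merge(detect(text), assess(text))


# Keyword-based stand-ins for the two model-backed steps (purely illustrative).
def stub_detect(text: str) -> Verdict:
    hit = "profit warning" in text.lower()
    return Verdict(
        SENSITIVE if hit else NON_SENSITIVE,
        "mentions a profit warning" if hit else "routine disclosure",
    )


def stub_assess(text: str) -> Verdict:
    hit = "material" in text.lower()
    return Verdict(
        SENSITIVE if hit else NON_SENSITIVE,
        "impact described as material" if hit else "no material impact stated",
    )
```

Requiring agreement between the steps biases the merged output toward the non-sensitive class, which is one plausible way to pursue the false-positive reduction the source describes; other merge rules would trade off differently.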
The experimentation stack supported traditional supervised learning methods, prompt engineering with foundation models, and fine-tuning scenarios, enabling systematic experimentation and comparison across approaches. The resulting AI-assisted workflow now provides automated alerts and detailed justifications for flagged activity, enabling analysts to focus on the most meaningful cases while maintaining comprehensive oversight.

Bedrock enables secure, scalable access to multiple foundation models through a unified API, minimizing direct model management and reducing operational complexity. The serverless architecture supports dynamic scaling of model inference capacity in response to news volume, helping keep performance consistent during market-critical periods. Built-in monitoring and governance features help track model performance and provide audit trails for regulatory compliance.
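To make the "unified API" point concrete, here is a hedged sketch of a single classification request through the Bedrock Runtime Converse API via boto3. The model identifier, system prompt, JSON reply format, and inference settings are assumptions for illustration; the source does not publish LSEG's prompts or configuration, and the model ID available to you depends on your account and Region.

```python
import json

# Assumed identifier for Claude 3.5 Sonnet on Bedrock; verify it in your Region.
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"

# Hypothetical system prompt; not taken from the source.
SYSTEM_PROMPT = (
    "You assess regulatory news for price sensitivity. "
    'Reply with JSON only: {"label": "SENSITIVE" or "NON_SENSITIVE", '
    '"justification": "<one sentence>"}'
)


def build_request(article_text: str) -> dict:
    """Build keyword arguments for the Converse API call."""
    return {
        "modelId": MODEL_ID,
        "system": [{"text": SYSTEM_PROMPT}],
        "messages": [{"role": "user", "content": [{"text": article_text}]}],
        "inferenceConfig": {"maxTokens": 300, "temperature": 0.0},
    }


def classify(client, article_text: str) -> dict:
    """Send one article to the model and parse its JSON reply."""
    response = client.converse(**build_request(article_text))
    reply = response["output"]["message"]["content"][0]["text"]
    return json.loads(reply)


def make_client():
    """Create a Bedrock Runtime client using the default AWS configuration."""
    import boto3  # imported lazily so the helpers above work without AWS access

    return boto3.client("bedrock-runtime")
```

With AWS credentials and model access configured, `classify(make_client(), article_text)` would send the request. Production code would also need to handle replies that are not valid JSON, throttling, and retries.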

Why it matters (impact for developers/enterprises)

For developers and enterprises, this initiative demonstrates how a scalable AI-powered surveillance solution can augment human expertise in highly regulated environments. The use of Bedrock allows organizations to experiment with leading foundation models without heavy upfront infrastructure management, while preserving security and governance controls. Key implications include:

- Scalable AI-enabled monitoring: Serverless model inference lets surveillance systems adapt to spikes in alert volume without sacrificing latency or reliability.

- Improved analyst productivity: By instantly assessing news sensitivity and market impact, the system helps triage alerts and prioritize cases that merit deeper investigation.

- Enhanced regulatory compliance: Built-in auditing and governance features support traceability and accountability for decisions made during surveillance operations.

- Data-driven iteration: A structured evaluation framework with human-in-the-loop checks enables rapid, evidence-based improvements to the detection workflow.

Technical details or Implementation

The architecture described by LSEG consists of three main components, as outlined in the accompanying diagrams in the original post. While the article does not enumerate the components in exhaustive detail, it emphasizes an end-to-end pipeline that integrates data ingestion, model inference, and analyst decision support.

Data and preprocessing:

- A dataset of ~250,000 RNS articles spanning six months of 2023 was collected and stored within the AWS environment.

- Raw HTML from RNS was pre-processed to remove extraneous elements and extract clean textual content suitable for NLP modeling.

- Exploratory data analysis characterized distribution patterns across the news corpus, informing sampling strategies for evaluation.

Evaluation dataset and labeling:

- A curated subset of 110 articles was selected to represent major news categories.

- Market surveillance analysts annotated each article’s price sensitivity on a nine-point scale, creating a human baseline for model evaluation.

Modeling and experimentation:

- Experiments were conducted in Amazon SageMaker using Jupyter Notebooks, enabling a controlled development environment for comparing approaches.

- The technical stack supported traditional supervised learning, prompt engineering with foundation models, and fine-tuning scenarios to identify the most effective method for this task.

- A two-step classification process was implemented to maximize accuracy by separating the detection of price sensitivity from the assessment of likely price impact, with results merged according to defined rules.

Prompt design and optimization:

- Prompts elicited three key components from the model and were iteratively refined to favor non-sensitive classifications where appropriate, reducing unnecessary escalations to human analysts (that is, lowering false positives).

- The prompts were optimized based on performance against the expert-annotated baseline data, aiming for higher confidence in low-risk classifications.

Deployment and governance:

- Amazon Bedrock provides secure access to Claude Sonnet 3.5 through a unified API, supporting scalable deployment with enterprise-grade security and auditability.

- The serverless architecture supports dynamic scaling during periods of elevated news flow and market activity, helping preserve performance.

- Built-in monitoring and governance features enable traceability of model decisions and compliance with regulatory requirements.

Performance and outcomes:

- Over a six-week evaluation period, the Surveillance Guide demonstrated notable accuracy on the representative sample dataset, validating the approach of combining automated analysis with analyst-driven labeling.

- The solution currently supports automated alerts to the Market Supervision team, followed by analyst triage to determine the necessity of a full investigation. This hybrid approach balances speed with thorough qualitative assessment.
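As a rough illustration of how model output might be scored against the expert-labeled baseline, the sketch below maps nine-point analyst scores to a binary label and computes precision and recall for the non-sensitive class. The 1 to 9 score range, the threshold of 5, and the label names are assumptions; the source does not state how the scale was mapped or which metrics were reported.

```python
from typing import Sequence, Tuple

# Hypothetical cut-off on an assumed 1-9 expert scale; not from the source.
SENSITIVE_THRESHOLD = 5


def expert_label(score: int) -> str:
    """Map a nine-point analyst score to a binary label (assumed mapping)."""
    if not 1 <= score <= 9:
        raise ValueError(f"score out of range: {score}")
    return "SENSITIVE" if score >= SENSITIVE_THRESHOLD else "NON_SENSITIVE"


def precision_recall(
    expert_scores: Sequence[int],
    predictions: Sequence[str],
    target: str = "NON_SENSITIVE",
) -> Tuple[float, float]:
    """Precision and recall of `target` predictions against expert labels."""
    if len(expert_scores) != len(predictions):
        raise ValueError("expert_scores and predictions must have equal length")
    truth = [expert_label(s) for s in expert_scores]
    pairs = list(zip(truth, predictions))
    tp = sum(t == target and p == target for t, p in pairs)
    fp = sum(t != target and p == target for t, p in pairs)
    fn = sum(t == target and p != target for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

High precision on the non-sensitive class means that articles the model dismisses are rarely ones analysts would have rated sensitive, which is the property that matters most if the model is used to filter alerts before human triage.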

Key takeaways

- AI-powered market surveillance can be implemented in a scalable, governance-friendly way using Amazon Bedrock and Claude Sonnet 3.5.

- A two-step classification framework combined with prompt optimization can reduce false positives while preserving the ability to identify meaningful market-abuse signals.

- A large, varied dataset of regulatory news articles provides a strong foundation for evaluating model performance in real-world conditions.

- Serverless model deployment and unified APIs help organizations iterate quickly and maintain regulatory compliance during fast-moving market environments.

- Human analysts remain essential for final investigations, but AI-guided triage improves efficiency and focus on high-impact cases.

FAQ

- What problem is LSEG addressing with Surveillance Guide?

  The need to modernize market surveillance to handle large volumes, evolving abuse tactics, and the high rate of false positives from traditional rules-based approaches.

- Which models and services are used?

  Anthropic’s Claude Sonnet 3.5 model via Amazon Bedrock, with experimentation in Amazon SageMaker.

- What data underpins the evaluation?

  Approximately 250,000 RNS articles from six consecutive months of 2023, with a curated 110-article evaluation subset.

- How is success measured?

  Analysts rated price sensitivity on a nine-point scale during evaluation; a two-step classification process and prompt optimization contributed to higher accuracy and reduced false positives.
