Deploy Scalable AI Inference with NVIDIA NIM Operator 3.0.0

Source: NVIDIA Dev Blog, https://developer.nvidia.com/blog/deploy-scalable-ai-inference-with-nvidia-nim-operator-3-0-0/

TL;DR

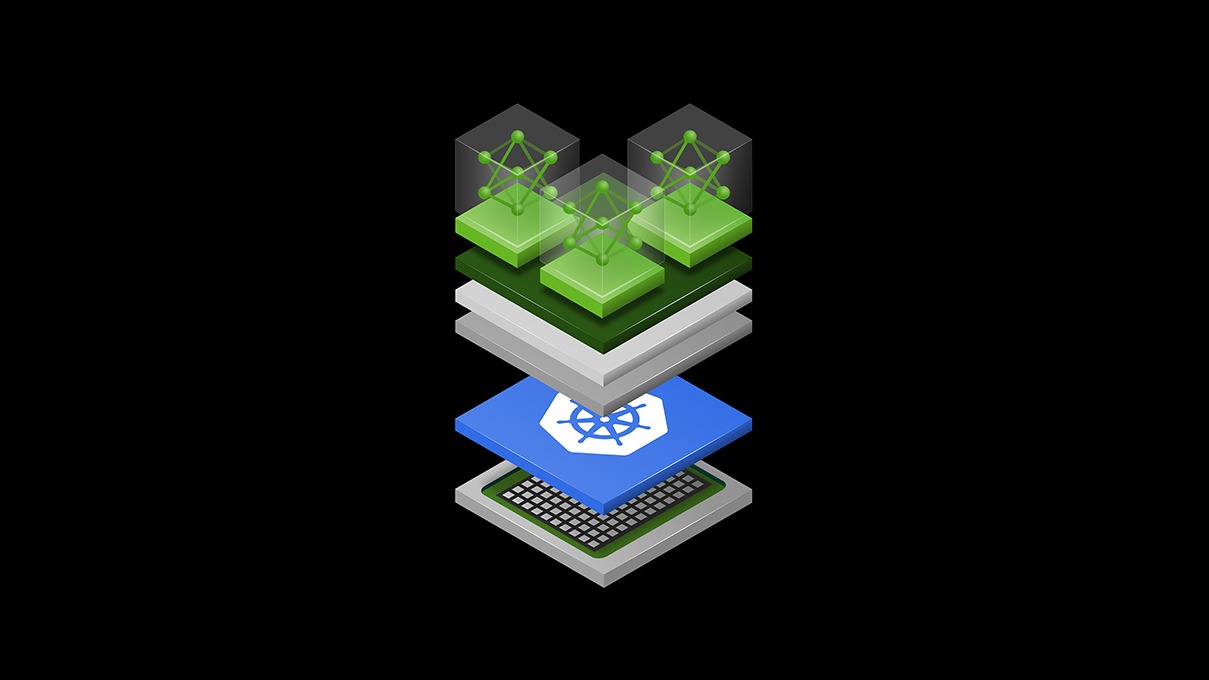

- NVIDIA NIM Operator 3.0.0 expands deployment options for NVIDIA NIM and NVIDIA NeMo microservices across Kubernetes.

- It supports domain-specific NIMs and multiple deployment modes, including multi-LLM and multi-node configurations.

- The release integrates with KServe for both raw and serverless deployments, with autoscaling and lifecycle management handled through InferenceService CRDs.

- Dynamic Resource Allocation (DRA) is available as a technology preview to manage GPU attributes via ResourceClaim and ResourceClaimTemplate.

- Red Hat contributed to NIM deployment on KServe, which brings KServe lifecycle management, cached models, and NeMo Guardrails for Trusted AI.

This release targets scalable, resilient AI inference pipelines for large models and multimodal workloads, including chatbots, agentic RAG, and virtual drug discovery, while aligning with NVIDIA AI Enterprise for enterprise support and security updates. For more details, see the official NVIDIA Dev Blog: https://developer.nvidia.com/blog/deploy-scalable-ai-inference-with-nvidia-nim-operator-3-0-0/

Context and background

The AI model landscape is evolving rapidly, with increasingly capable inference engines, backends, and distributed frameworks. Deploying these pipelines efficiently is a key operational challenge for organizations building large-scale AI solutions. NVIDIA NIM Operator is designed to help Kubernetes cluster administrators deploy and manage the NVIDIA NIM inference microservices needed to run modern LLMs and multimodal models, covering tasks such as reasoning, retrieval, vision, speech, and biology. The 3.0.0 release broadens these capabilities to simplify, accelerate, and stabilize deployments across diverse Kubernetes environments.

NVIDIA has also collaborated with Red Hat to enable NIM deployment on KServe. Red Hat’s contribution helps NIM deployments leverage KServe lifecycle management and model caching, while also enabling NeMo capabilities like Guardrails for building Trusted AI across KServe inference endpoints. This collaboration showcases the growing ecosystem around scalable AI inference, combining NVIDIA software with enterprise-grade Kubernetes platforms.

NIM deployments have been used for a range of applications, including chatbots, agentic retrieval-augmented generation (RAG) workflows, and domain-specific tasks in areas such as biology and retrieval. The new release continues to emphasize ease of use, reliability, and interoperability with existing cloud-native tooling.

What’s new

NIM Operator 3.0.0 introduces several enhancements designed to simplify and optimize the deployment of NVIDIA NIM microservices and NVIDIA NeMo microservices across Kubernetes environments:

- Easy, fast NIM deployment: The operator supports domain-specific NIMs (biology, speech, retrieval) and multiple deployment options, including multi-LLM-compatible configurations and multi-node deployments. The documentation notes that multi-node deployments without GPUDirect RDMA can incur model shard loading timeouts and restarts; fast networking (IPoIB or RoCE) is recommended and can be configured via the NVIDIA Network Operator.

- KServe integration: NIM Operator now supports both raw and serverless deployments on KServe by configuring the InferenceService custom resource to manage deployment, upgrades, and autoscaling of NIM. The operator automatically configures required environment variables and resources in the InferenceService CRDs, enabling streamlined, Kubernetes-native lifecycle management.

- DRA (Dynamic Resource Allocation) integration (tech preview): DRA provides a more flexible GPU management approach by allowing users to define GPU device classes, request GPUs based on those classes, and filter them according to workload and business needs. This feature is available as a technology preview, with full support coming soon. Deployment examples show NIM Pod resource claims and resource claim templates configured via the NIMService and NIMPipeline CRDs.

- NeMo and NIM ecosystem enhancements: The release continues to support NeMo capabilities such as NeMo Guardrails for building Trusted AI across KServe endpoints and model caching with NIM cache, enabling efficient inference workflows.

- Red Hat collaboration and KServe lifecycle management: The partnership enables running NIM microservices on KServe with lifecycle management and simplifies scalable deployments using NIM services. Native KServe support in the NIM Operator unlocks model caching and NeMo features within KServe inference endpoints.

- Real-world validation: The article includes a deployment example using the Hugging Face library to run Llama 3 8B Instruct as a NIM deployment on Kubernetes, including service and pod status checks and curl-based request testing.

These changes collectively make deploying scalable AI inference easier, whether targeting multi-LLM deployments or multi-node configurations, optimizing GPU usage with DRA, or deploying on KServe. The release also reinforces enterprise-readiness through NVIDIA AI Enterprise, ongoing security patching, API stability, and enterprise support.
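The curl-based request test mentioned above can be sketched in Python as well. This is a minimal sketch, not the article's own command: it assumes the deployed LLM NIM serves an OpenAI-style `/v1/chat/completions` route, and the host name, port 8000, and model identifier below are hypothetical placeholders to replace with the values reported by your own service.

```python
import json
import urllib.request

# Assumptions (not from the article): service host, port, and model id.
NIM_BASE_URL = "http://meta-llama3-8b-instruct.nim-service:8000"
MODEL_ID = "meta/llama3-8b-instruct"


def build_chat_request(prompt, base_url=NIM_BASE_URL, model=MODEL_ID, max_tokens=64):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30.0):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    req = build_chat_request("What is a Kubernetes operator?")
    print(req.get_method(), req.full_url)
    # reply = send(req)  # uncomment once the NIM endpoint is reachable
```

Building the request separately from sending it keeps the payload easy to inspect before any traffic reaches the cluster, for example from a port-forwarded session.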

Why it matters (impact for developers/enterprises)

For developers and enterprises building production-grade AI pipelines, the 3.0.0 release provides several practical benefits:

- Reduced deployment friction: By supporting domain-specific NIMs and multiple deployment modes, teams can select the most appropriate configuration for their workloads without bespoke infrastructure work.

- Kubernetes-native lifecycle and autoscaling: Integrating with KServe via InferenceService CRDs enables automated upgrades, scale-out, and health monitoring that align with existing Kubernetes operations.

- Efficient GPU utilization: DRA offers a flexible way to allocate GPU resources to NIM workloads, enabling more precise control over hardware attributes such as architecture and memory—critical for large, memory-bound LLMs and multimodal models (technology preview).

- Enhanced reliability and security: NeMo Guardrails provide a path toward Trusted AI for KServe endpoints, while NVIDIA AI Enterprise support reinforces API stability and proactive security patching.

- Ecosystem collaboration: The Red Hat collaboration extends the accessibility of NIM deployments on KServe, combining NIM’s inference capabilities with KServe lifecycle management and model caching.

In practical terms, operators can deploy, scale, and manage NVIDIA NIM and NeMo microservices more efficiently, enabling faster movement from development to production AI workflows. Organizations can leverage existing Kubernetes tooling while benefiting from NVIDIA’s optimization and enterprise support.

Technical details or Implementation

This section summarizes the technical changes and implementation considerations highlighted in the release:

- Deployment options and domain-specific NIMs: Users can deploy domain-specific NIMs (biology, speech, retrieval) or choose among several NIM deployment options, including multi-LLM-compatible configurations and multi-node deployments. Multi-node NIM deployments without GPUDirect RDMA may encounter model shard loading timeouts and restarts of the LeaderWorkerSet (LWS) leader and worker pods; high-speed networking (IPoIB or RoCE) is advised and can be configured via the NVIDIA Network Operator.

- Resource management with DRA (tech preview): DRA enables GPU management by configuring ResourceClaim and ResourceClaimTemplate objects on NIM Pods via the NIMService and NIMPipeline CRDs. Users can create and attach their own claims or let the NIM Operator manage them automatically. DRA supports selecting GPUs by attributes such as architecture and memory, so devices can be filtered according to workload and business needs. This is a technology preview feature, with full support planned soon.

- KServe integration and InferenceService CRDs: The NIM Operator supports both raw and serverless deployments on KServe by configuring the InferenceService custom resource to handle deployment, upgrades, and autoscaling. The operator automates the setup of environment variables and resources required by the InferenceService, simplifying the integration with KServe.

- Deployment methodologies with KServe: Two deployment approaches are demonstrated—RawDeployment and Serverless. Serverless deployments enable autoscaling through Kubernetes annotations, offering dynamic resource management for varying inference demand.

- Example deployment and testing: The article presents an example of deploying Llama 3 8B Instruct NIM on Kubernetes using the NIM Operator, including service and pod status verification and curl-based testing to exercise the deployed model.

Table: KServe deployment options

| Deployment option | Description |
|---|---|
| RawDeployment | Direct deployment managed by NIM Operator and InferenceService CRD. |
| Serverless | Autoscaled deployment via KServe annotations. |
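To make the two modes in the table concrete, here is a hedged sketch that builds a NIMService-style manifest as a Python dict and switches modes through annotations. The `serving.kserve.io/deploymentMode` key is a standard KServe annotation and the `autoscaling.knative.dev/*` keys are standard Knative ones; the `apps.nvidia.com/v1alpha1` API version, the `inferencePlatform`, `image`, and `resources` field names, and all names and image values are assumptions for illustration and should be checked against the operator's CRD reference before use.

```python
import json

# Standard KServe annotation key; the two values are the modes the article names.
DEPLOYMENT_MODE_ANNOTATION = "serving.kserve.io/deploymentMode"
SUPPORTED_MODES = ("RawDeployment", "Serverless")


def nim_service_manifest(name, namespace, mode, image_repository, image_tag,
                         gpus=1, min_scale=None, max_scale=None):
    """Return a NIMService-style manifest (as a dict) targeting KServe."""
    if mode not in SUPPORTED_MODES:
        raise ValueError(f"mode must be one of {SUPPORTED_MODES}, got {mode!r}")
    scale_bounds = {"min-scale": min_scale, "max-scale": max_scale}
    requested = {k: v for k, v in scale_bounds.items() if v is not None}
    if requested and mode != "Serverless":
        raise ValueError("scale bounds are only modeled for Serverless mode here")

    annotations = {DEPLOYMENT_MODE_ANNOTATION: mode}
    for key, value in requested.items():
        # Knative autoscaling annotations; annotation values must be strings.
        annotations[f"autoscaling.knative.dev/{key}"] = str(value)

    return {
        "apiVersion": "apps.nvidia.com/v1alpha1",  # assumed API group/version
        "kind": "NIMService",
        "metadata": {"name": name, "namespace": namespace,
                     "annotations": annotations},
        "spec": {
            "inferencePlatform": "kserve",  # assumed field name
            "image": {"repository": image_repository, "tag": image_tag},
            "resources": {"limits": {"nvidia.com/gpu": gpus}},
        },
    }


if __name__ == "__main__":
    manifest = nim_service_manifest(
        name="llama3-8b-instruct", namespace="nim-service", mode="Serverless",
        image_repository="nvcr.io/nim/meta/llama3-8b-instruct",  # placeholder
        image_tag="latest",                                      # placeholder
        min_scale=1, max_scale=3,
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied once the assumed fields have been verified.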

- Ecosystem and support: The NIM Operator is part of NVIDIA AI Enterprise, which provides enterprise support, API stability, and proactive security patching. To get started, the article points to NGC or the NVIDIA/k8s-nim-operator GitHub repository for installation, usage, and issue reporting.
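The DRA item in this section describes filtering GPUs by attributes such as architecture and memory through a ResourceClaimTemplate. The sketch below shows what a user-created template might look like, built as a Python dict. It rests on several assumptions: the `resource.k8s.io/v1beta1` API version (this varies by Kubernetes release), the `gpu.nvidia.com` device class and driver name, and the `architecture` attribute and `memory` capacity names in the CEL expression. None of these come from the article, so verify them against your cluster and DRA driver.

```python
import json


def gpu_claim_template(name, namespace, min_memory="40Gi", architecture=None,
                       device_class="gpu.nvidia.com", driver="gpu.nvidia.com"):
    """Return a ResourceClaimTemplate-style dict that filters GPUs with CEL."""
    # Assumed capacity name: require at least `min_memory` of device memory.
    clauses = [
        f'device.capacity["{driver}"].memory.compareTo(quantity("{min_memory}")) >= 0'
    ]
    if architecture is not None:
        # Assumed attribute name: restrict to one GPU architecture.
        clauses.append(
            f'device.attributes["{driver}"].architecture == "{architecture}"'
        )
    return {
        "apiVersion": "resource.k8s.io/v1beta1",  # assumed; depends on cluster version
        "kind": "ResourceClaimTemplate",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "spec": {
                "devices": {
                    "requests": [{
                        "name": "gpu",
                        "deviceClassName": device_class,
                        "selectors": [
                            {"cel": {"expression": " && ".join(clauses)}}
                        ],
                    }]
                }
            }
        },
    }


if __name__ == "__main__":
    template = gpu_claim_template("nim-gpu-claim", "nim-service",
                                  min_memory="80Gi", architecture="Hopper")
    print(json.dumps(template, indent=2))
```

Combining all conditions into a single CEL expression keeps the template to one selector; how such a template is then referenced from the NIMService or NIMPipeline resource is defined by the operator's CRDs and is not shown here.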

Key takeaways

- NIM Operator 3.0.0 broadens deployment modalities for NVIDIA NIM and NeMo microservices within Kubernetes, including multi-LLM and multi-node scenarios.

- Dynamic Resource Allocation (DRA), currently a technology preview, provides granular GPU resource control at the workload level.

- KServe integration, with RawDeployment and Serverless modes, simplifies lifecycle management, upgrades, and autoscaling of AI inference services.

- Red Hat collaboration enables deployment on KServe with model caching and NeMo Guardrails, strengthening Trusted AI capabilities.

- The release emphasizes enterprise-readiness, alignment with NVIDIA AI Enterprise, and a pathway from development to production using familiar cloud-native tools.

FAQ

- What is NVIDIA NIM Operator 3.0.0 designed to do?

  It expands deployment capabilities for NVIDIA NIM and NVIDIA NeMo microservices on Kubernetes, including domain-specific NIMs and multi-LLM and multi-node configurations, with KServe integration and DRA as a technology preview.

- What networking considerations exist for multi-node deployments?

  Multi-node deployments without GPUDirect RDMA may experience model shard loading timeouts and pod restarts; high-speed networking such as IPoIB or RoCE is recommended and can be configured via the NVIDIA Network Operator.

- What role does Red Hat play in this release?

  Red Hat contributed to enabling NIM deployment on KServe, facilitating lifecycle management, model caching, and NeMo Guardrails within KServe endpoints.

- How does KServe integration work with NIM Operator?

  The NIM Operator configures the KServe InferenceService custom resource to manage deployments, upgrades, and autoscaling of NIM microservices, and supports both RawDeployment and Serverless modes.

- Where can I start using these tools?

  Get started via NVIDIA NGC or the open source NVIDIA/k8s-nim-operator GitHub repository, as mentioned in the article.
