OpenAI launches GPT-5 Bio Bug Bounty to test safety with universal jailbreak prompt

Sources: https://openai.com/gpt-5-bio-bug-bounty, OpenAI

TL;DR

- OpenAI announces a Bio Bug Bounty inviting researchers to test GPT-5’s safety.

- Testing uses a universal jailbreak prompt as part of the evaluation.

- Prizes can reach up to $25,000 for qualifying findings.

- Details are available on the OpenAI page: https://openai.com/gpt-5-bio-bug-bounty

Context and background

OpenAI has published a call to researchers to participate in its Bio Bug Bounty program. The initiative centers on evaluating the safety of GPT-5 by using a universal jailbreak prompt as part of the assessment. The program offers monetary rewards for findings that meet the program’s criteria, with a maximum prize of $25,000. The announcement ties OpenAI’s ongoing safety and risk assessment efforts to a formal bug bounty framework, inviting external researchers to contribute to the evaluation process.

What’s new

This announcement marks the launch of a dedicated Bio Bug Bounty focused on GPT-5. It formalizes an external testing channel for safety evaluation and specifies both a method (the use of a universal jailbreak prompt) and a prize structure with awards of up to $25,000 for verified submissions.

Why it matters (impact for developers/enterprises)

For developers and enterprises integrating AI products, this initiative underscores a public-facing commitment to safety testing and vulnerability disclosure. By inviting researchers to probe GPT-5’s safety with structured prompts, OpenAI aims to identify potential weaknesses and improve the model’s behavior in real-world usage. The program signals how large AI deployments may rely on external security testing as part of overall risk management.

Technical details or Implementation (as disclosed)

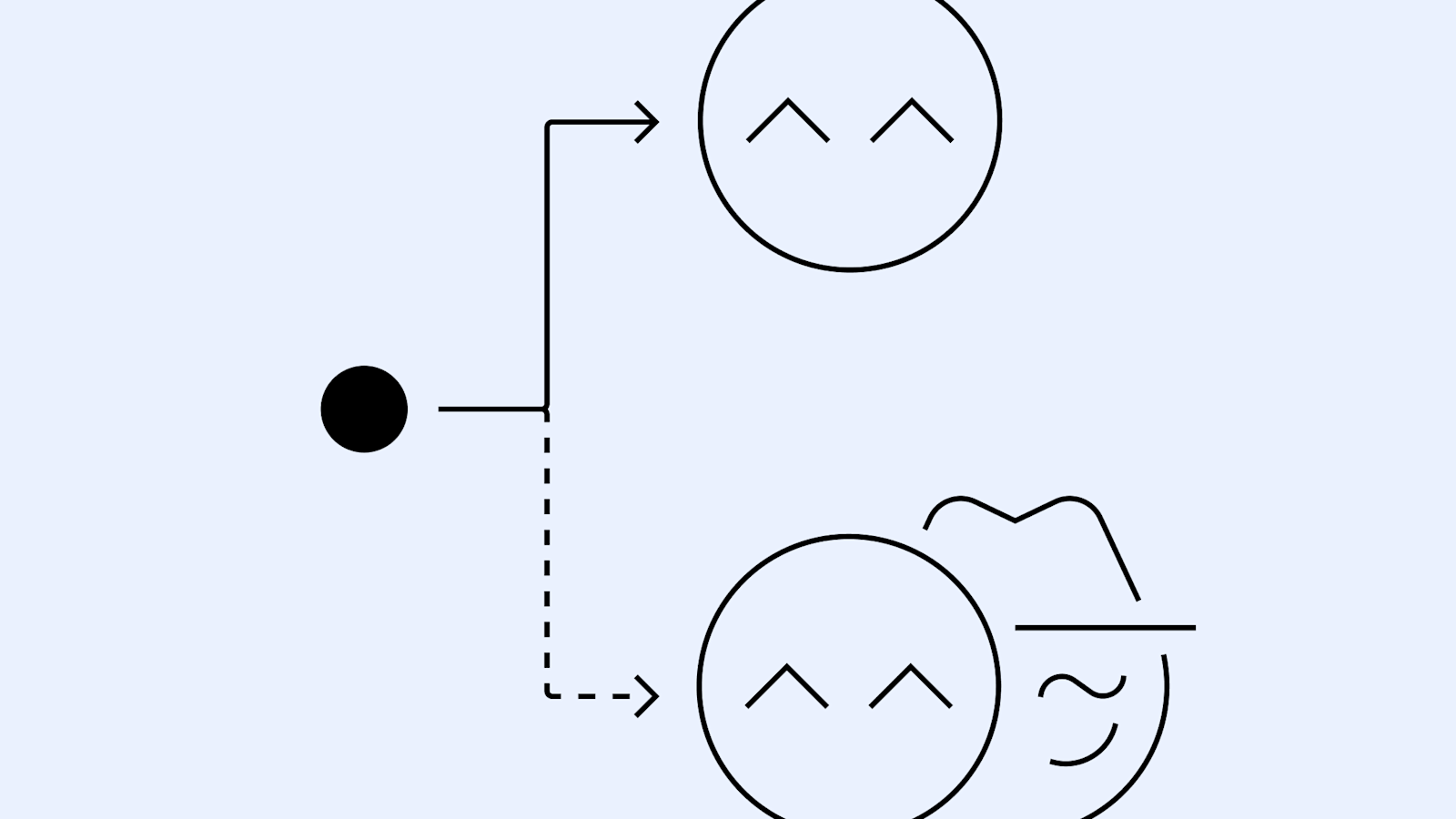

The core activity described is safety testing of GPT-5 using a universal jailbreak prompt. The post does not disclose step-by-step testing procedures, but it positions the prompt as a tool for evaluating how GPT-5 handles challenging or misleading instructions. The exact submission guidelines, evaluation criteria, and workflow are presumably outlined on the official page: https://openai.com/gpt-5-bio-bug-bounty

Key takeaways

- OpenAI has launched a Bio Bug Bounty for GPT-5 safety testing.

- The testing approach centers on a universal jailbreak prompt.

- Rewards for eligible findings can go up to $25,000.

- More details are available at the official OpenAI page.

FAQ

- What is the GPT-5 Bio Bug Bounty?

  OpenAI’s program inviting researchers to test GPT-5’s safety using a universal jailbreak prompt. Prizes can reach $25,000.

- Who can participate?

  The source describes a call to researchers to participate in the Bio Bug Bounty. It gives no further eligibility details; check the official page for those.

- How much can be won?

  Up to $25,000 for qualifying findings.

- Where can I find more information?

  The official page is https://openai.com/gpt-5-bio-bug-bounty.
