Deploy Amazon Bedrock Knowledge Bases using Terraform for RAG-based generative AI applications

Sources: https://aws.amazon.com/blogs/machine-learning/deploy-amazon-bedrock-knowledge-bases-using-terraform-for-rag-based-generative-ai-applications/, AWS ML Blog

TL;DR

- Automate deploying an Amazon Bedrock knowledge base and its data-source connections using Terraform IaC. AWS Blog

- The solution creates and configures the AWS services involved, enabling rapid startup of RAG workflows with minimal initial configuration. AWS Blog

- It supports customizable chunking strategies and OpenSearch vector dimensions, with Titan Text Embeddings V2 as the default embedding model. AWS Blog

- Terraform plan auditing (with -out) helps you review changes before applying, reducing risk to production environments. AWS Blog

- Post-deployment cleanup steps (terraform destroy and manual S3 cleanup) help avoid unnecessary costs. AWS Blog

Context and background

Retrieval Augmented Generation (RAG) combines foundation models with access to relevant external data to improve response quality, transparency, and cost control. Amazon Bedrock Knowledge Bases is a common choice for implementing RAG workflows, and a knowledge base can be deployed with a few clicks in the AWS Management Console for initial development. When moving from a console-based setup to production, infrastructure-as-code (IaC) templates capture the configuration in a reproducible, auditable form. CDK-based templates exist for Bedrock knowledge bases, but Terraform remains a widely used IaC framework in many organizations. The post provides a Terraform-based IaC solution that deploys an Amazon Bedrock knowledge base and establishes a data source connection to support RAG workflows. The solution is available in the AWS Samples GitHub repository. AWS Blog

The solution automates the creation and configuration of the AWS service components needed for a RAG workflow backed by a Bedrock knowledge base. An accompanying diagram shows how these services are integrated and notes that several IAM policies govern permissions for the resources involved. Managing the resources through IaC makes the infrastructure programmable: you can start querying your data almost immediately after setup, and ongoing maintenance of the RAG-based application is simpler. AWS Blog

Prerequisites include enabling access to a foundation model (FM) in Amazon Bedrock for generating embeddings. The solution uses Titan Text Embeddings V2 as the default model. The environment also needs Git installed and SSH access configured for the repository. Module inputs, including fine-grained settings for embedding size and chunking behavior, are exposed in modules/variables.tf. To review changes before deployment, run terraform init, then terraform plan -out to save the plan to a file, and pass that file to terraform apply so that exactly the reviewed plan is applied. AWS Blog

What’s new

The featured IaC solution automates the end-to-end deployment of a Bedrock knowledge base along with the data-source connection required for RAG workflows. It is designed as a production-ready starting point that teams can customize and extend through Terraform modules. The accompanying architecture diagram highlights the integrated services and the IAM permissions that govern them. The repository provides a Terraform module that encapsulates this integration and configuration, reducing manual setup effort and enabling repeatable deployments. AWS Blog

The solution is available in AWS Samples on GitHub and serves as a reproducible baseline for developing and testing Bedrock RAG workloads. The post also emphasizes reviewing the plan before applying it, using terraform init and terraform plan -out to capture the proposed changes for verification. AWS Blog

Why it matters (impact for developers/enterprises)

For developers and enterprises building RAG-based generative AI applications, this Terraform-based solution provides a reproducible, auditable path from development to production. By codifying the knowledge-base deployment and its data-source connections, teams get consistent environments, fewer manual configuration errors, and simpler maintenance as their RAG workloads evolve. The approach also supports faster iteration: once access to the embedding FM is enabled, the deployed knowledge base can be queried almost immediately. AWS Blog

The automation aligns with IaC best practices, including generating a plan up front that lists every resource to be created, modified, or destroyed, so operators can understand and approve infrastructure changes before they are applied. This is particularly valuable for teams managing large data sources and complex embedding configurations in production environments. AWS Blog

Technical details or Implementation

The Terraform module in the solution automates the creation and configuration of the AWS service components required for a Bedrock knowledge base and its data-source connection. It supports configurable chunking strategies and OpenSearch vector dimensions, which determine how content is split into chunks and how embeddings are indexed for retrieval. The default embedding model is Titan Text Embeddings V2, and the module exposes several parameters in modules/variables.tf to tailor chunk size, hierarchy, and semantic behavior to your data and use case. AWS Blog

The deployment process involves inspecting the Terraform plan before applying changes, typically by running:

- terraform init

- terraform plan -out

- terraform apply

This ensures that only intended resources are created, modified, or destroyed, helping prevent disruptions during deployment. You may also need to adjust the OpenSearch vector dimensions and chunking configuration in the variables.tf file to fit your data and performance objectives. AWS Blog

The Terraform module accommodates flexible configurations for three major chunking approaches:

- FIXED_SIZE: customize fixed-size chunking (optional)

- HIERARCHICAL: customize hierarchical chunking (optional)

- SEMANTIC: customize semantic chunking (optional)

A table summarizes these options and related tunable aspects:

| Chunking strategy | Notes |
|---|---|
| FIXED_SIZE | customize fixed-size chunking (optional) |
| HIERARCHICAL | customize hierarchical chunking (optional) |
| SEMANTIC | customize semantic chunking (optional) |

The solution also enables control over the embedding vector dimension, which affects retrieval precision and storage/performance trade-offs. The vector_dimension variable in variables.tf governs this setting, and operators may increase it for higher precision or decrease it to optimize storage and query performance. AWS Blog

From a lifecycle perspective, once testing is complete, cleaning up resources is straightforward: run terraform destroy to remove infrastructure and clean up S3 bucket contents as appropriate. The post emphasizes that removing unused resources reduces costs, and it suggests optional manual cleanup of local state files if desired. AWS Blog

For testing and validation, the article recommends using a sample document source such as content from the AWS Well-Architected Framework guide (downloadable as a PDF) to exercise the knowledge base and embedding workflow before moving to production. It also notes that access to a foundation model must be enabled in Bedrock to generate embeddings. AWS Blog
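As a rough illustration of the vector-dimension and chunking settings discussed in this section, the following shell sketch writes a variable-override file after checking the values. It rests on several assumptions that the post does not confirm: that the root configuration accepts vector_dimension as an input variable, that the chunking choice is exposed under a variable named chunking_strategy (a hypothetical name), and that the default Titan Text Embeddings V2 model is in use, which supports output dimensions of 256, 512, and 1024. The file name kb.auto.tfvars is also a placeholder; Terraform automatically loads files ending in .auto.tfvars from the working directory.

```shell
# Write a variable-override file for the knowledge base deployment.
# Assumed (not confirmed by the post): the variable name chunking_strategy
# and the availability of vector_dimension at the root module level.
write_kb_overrides() {
  local dimension="$1"
  local strategy="$2"
  local out_file="${3:-kb.auto.tfvars}"   # placeholder file name

  # Titan Text Embeddings V2 supports these output dimensions.
  case "$dimension" in
    256|512|1024) ;;
    *) echo "unsupported vector dimension: $dimension" >&2; return 1 ;;
  esac

  # The three chunking strategies named in the post.
  case "$strategy" in
    FIXED_SIZE|HIERARCHICAL|SEMANTIC) ;;
    *) echo "unknown chunking strategy: $strategy" >&2; return 1 ;;
  esac

  printf 'vector_dimension  = %s\nchunking_strategy = "%s"\n' \
    "$dimension" "$strategy" > "$out_file"
}
```

For example, write_kb_overrides 512 SEMANTIC would produce a two-line kb.auto.tfvars file. Check the actual variable names in modules/variables.tf before relying on a file like this.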

Key implementation notes

- Environment setup: Git installed; SSH keys configured for repository access. This is required to fetch the Terraform modules and related resources. AWS Blog

- Module inputs are documented in modules/variables.tf, including fine-grained settings for embedding sizes and chunking behavior. AWS Blog

- The architecture diagram in the post illustrates the integrated services and the IAM policies that govern permissions. AWS Blog

Key takeaways

- Terraform-based IaC can accelerate and standardize Bedrock knowledge-base deployments for RAG workflows.

- You can customize how content is chunked and how embeddings are indexed to balance retrieval quality and performance.

- Plan review and explicit cleanup steps help reduce risk and cost in development and production environments.

- The solution relies on Titan Text Embeddings V2 by default and requires enabling FM access in Bedrock.

- Documentation in the AWS blog and the repository provides the starting point for testing and production rollout. AWS Blog

FAQ

- What does the Terraform module automate?

It automates the creation and configuration of the AWS service components involved in deploying an Amazon Bedrock knowledge base and setting up a data source connection for RAG workflows. [AWS Blog](https://aws.amazon.com/blogs/machine-learning/deploy-amazon-bedrock-knowledge-bases-using-terraform-for-rag-based-generative-ai-applications/)

- What are the prerequisites for using this solution?

Access to a foundation model in Amazon Bedrock for generating embeddings, a Git-enabled environment, and SSH keys configured to access the repository. Module inputs for chunking and embedding settings are in modules/variables.tf. [AWS Blog](https://aws.amazon.com/blogs/machine-learning/deploy-amazon-bedrock-knowledge-bases-using-terraform-for-rag-based-generative-ai-applications/)

- How do you validate the deployment before applying changes?

Use terraform init and terraform plan -out to generate and review the execution plan, ensuring changes align with expectations before applying. [AWS Blog](https://aws.amazon.com/blogs/machine-learning/deploy-amazon-bedrock-knowledge-bases-using-terraform-for-rag-based-generative-ai-applications/)
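The review-then-apply flow can be sketched as two small shell functions. The plan file name tfplan is an arbitrary placeholder, and the terraform show step is an addition here for reading the saved plan; the post itself names only init, plan -out, and apply.

```shell
# Placeholder name for the saved plan file; override via the environment.
PLAN_FILE="${PLAN_FILE:-tfplan}"

# Initialize the working directory, save the proposed changes to a file,
# and print the saved plan in human-readable form for review.
plan_to_file() {
  terraform init &&
    terraform plan -out="$PLAN_FILE" &&
    terraform show "$PLAN_FILE"
}

# Apply the saved plan file rather than computing a new plan.
apply_saved_plan() {
  terraform apply "$PLAN_FILE"
}
```

Run plan_to_file, read the output, and then run apply_saved_plan. When terraform apply is given a saved plan file, it applies that plan without prompting for confirmation, so the review has to happen before this step.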

- How do you clean up resources after testing?

Run terraform destroy to remove infrastructure and optionally clean up S3 bucket contents to avoid ongoing costs. [AWS Blog](https://aws.amazon.com/blogs/machine-learning/deploy-amazon-bedrock-knowledge-bases-using-terraform-for-rag-based-generative-ai-applications/)
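A minimal shell sketch of the cleanup, assuming the AWS CLI is installed and configured. The bucket name is a placeholder you supply. Whether to empty the bucket before or after terraform destroy depends on how the bucket is managed in your deployment, which this summary does not specify.

```shell
# Delete every object in the given bucket (the bucket itself is kept).
empty_bucket() {
  local bucket="${1:?bucket name required}"
  aws s3 rm "s3://${bucket}" --recursive
}

# Tear down all resources tracked in the Terraform state.
# Terraform prompts for confirmation before destroying anything.
destroy_stack() {
  terraform destroy
}
```

For example, empty_bucket followed by the name of your source bucket removes the uploaded documents, and destroy_stack removes the deployed infrastructure.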


More news

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

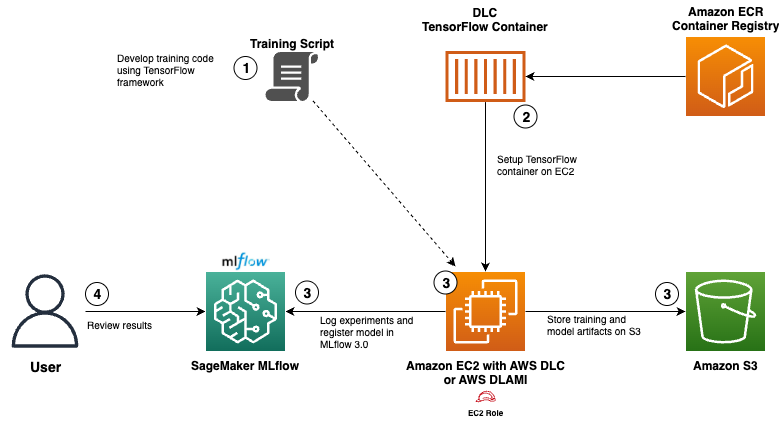

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

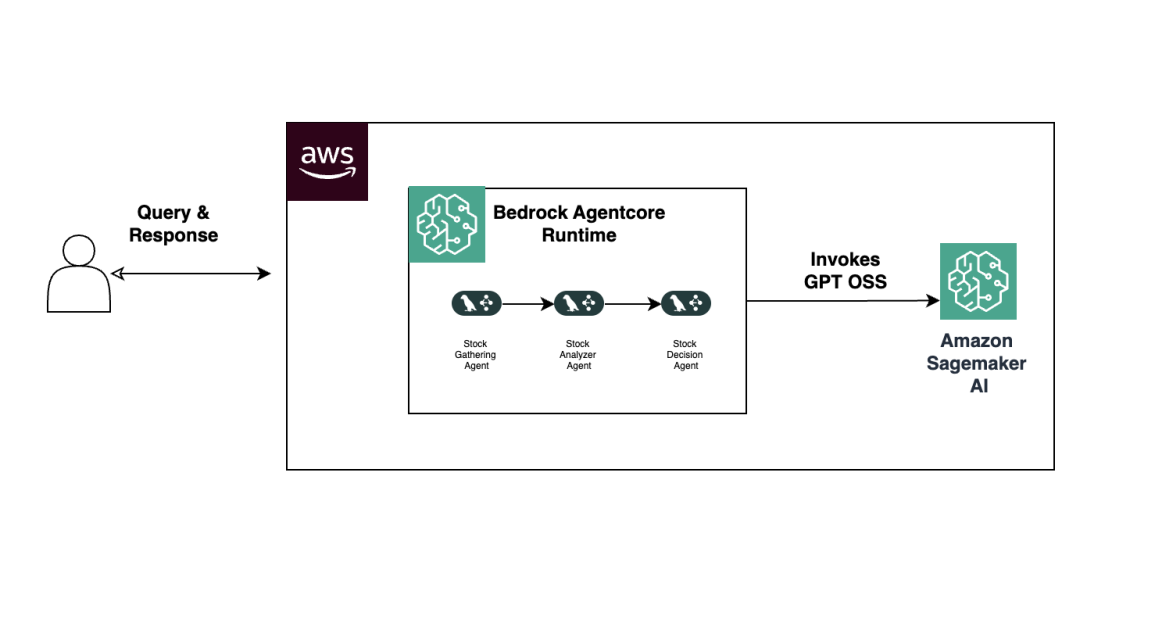

Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

An end-to-end look at deploying OpenAI GPT OSS models on SageMaker AI and Bedrock AgentCore to power a multi-agent stock analyzer with LangGraph, including 4-bit MXFP4 quantization, serverless orchestration, and scalable inference.