Amazon Lens Live AI: Real-time Shopping by Pointing Your Camera

Sources: https://www.theverge.com/news/769585/amazon-lens-live-ai-real-time-shopping, The Verge AI

TL;DR

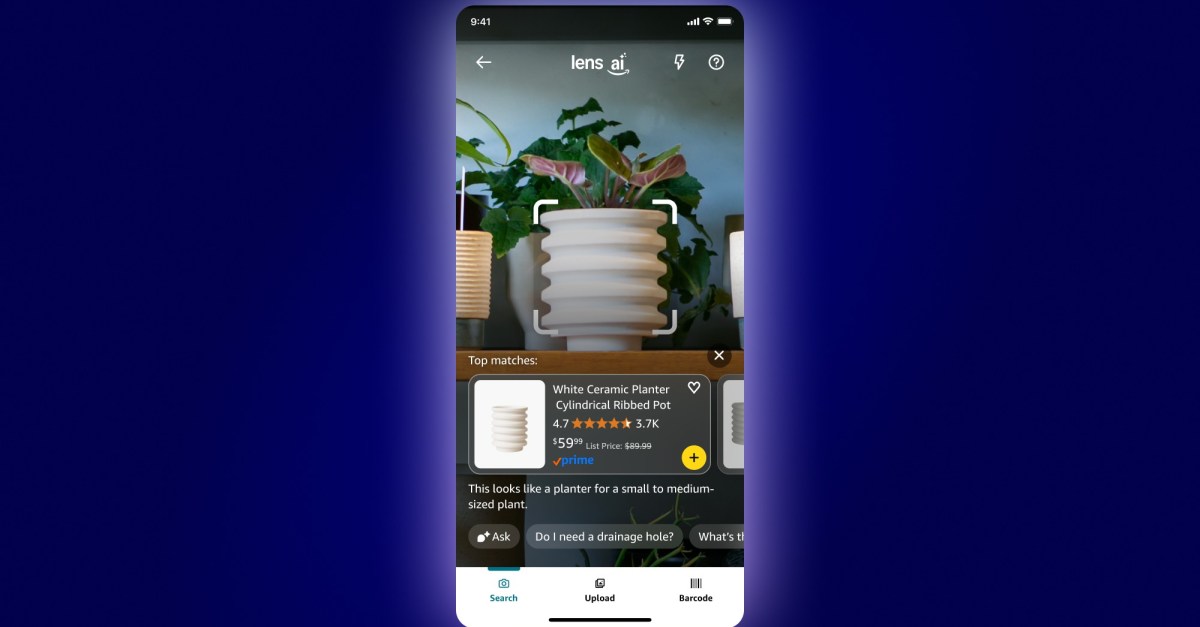

- Amazon Lens Live lets you shop by pointing your camera, scanning the environment to surface matching product listings in real time.

- It uses an object detection model to identify products and compares them against billions of Amazon listings, presenting results in a swipeable carousel.

- The feature includes options to add items to cart or wishlist and integrates Rufus to summarize descriptions and answer questions.

- Lens Live is rolling out to the Amazon Shopping app on iOS for now, with plans to reach more customers in the coming weeks. It is Amazon’s take on live-scanning tools, with an emphasis on buying.

Context and background

Amazon has long offered visual search within its Shopping app, including image uploads, barcode scans, and photo-based queries. Lens Live extends these features with real-time object recognition: it identifies items in the user’s surroundings as the user pans or focuses the camera. The Verge frames Lens Live as Amazon’s answer to live-scanning experiences like Google’s Gemini Live, but notes a key distinction: Lens Live puts heavy emphasis on purchasing, with a prominent buy option on the items it identifies. The feature draws on Amazon’s catalog, surfacing matches from the billions of products on its marketplace.

What’s new

Lens Live uses an object detection model to identify products shown to the camera in real time. When a product is recognized, Lens Live compares it against Amazon’s marketplace to surface similar or matching items. The user sees a swipeable carousel of matches, with direct actions to add an item to the cart or to a wishlist. Users can either scan a room broadly or focus on a specific product. In addition to product results, Lens Live integrates Amazon’s AI assistant Rufus to summarize product descriptions and answer questions about items. This functionality builds on Amazon’s existing visual search capabilities, which already allowed searching by image upload, barcode scan, or snapping a photo within the Shopping app. Amazon describes Lens Live as rolling out on iOS for now and indicates broader availability in the coming weeks.

Why it matters (impact for developers/enterprises)

Lens Live demonstrates a concrete, real-time AI-enabled shopping workflow within a consumer app. For developers and enterprises, it highlights how live object detection can be paired with a large product catalog to surface actionable recommendations directly in an app’s user interface. The integration with Rufus shows a path for combining product summarization and Q&A capabilities with live search results, potentially streamlining purchase journeys and reducing information gaps at the moment of decision. From an enterprise perspective, the approach underscores the growing value of multimodal AI in retail: real-time perception, catalog-wide comparison, and immediate shopping actions inside an app, all supported by an AI assistant for on-the-fly information.

Technical details or Implementation

Lens Live relies on an object detection model capable of identifying products shown in the user’s camera feed in real time. Once a product is recognized, the system compares it against billions of products listed on Amazon’s marketplace to surface matching or similar items. The results appear in a swipeable carousel, with quick actions to add items to a cart or a wishlist. The feature is currently rolling out to the Amazon Shopping app on iOS and is expected to reach more customers in the coming weeks. Lens Live also integrates Rufus, Amazon’s AI assistant, to summarize product descriptions and respond to questions about identified items. This integration aims to help users quickly understand product details without leaving the scanning flow. The service builds on Amazon’s existing visual search capabilities, which already supported image uploads, barcode scanning, and photo-based queries.
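The detect-then-match flow described above can be illustrated with a small sketch. This is not Amazon’s implementation, which the source does not detail. It assumes, purely for illustration, that a detected object and every catalog listing are represented as numeric embedding vectors and that matches are ranked by cosine similarity. The names `Listing`, `cosine_similarity`, `build_carousel`, and the toy catalog are all hypothetical.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Listing:
    """Hypothetical catalog entry; the embedding stands in for visual features."""
    listing_id: str
    title: str
    embedding: Tuple[float, ...]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_carousel(
    query_embedding: Sequence[float],
    catalog: Sequence[Listing],
    top_k: int = 3,
    min_score: float = 0.5,
) -> List[Listing]:
    """Return up to top_k listings, best match first, scoring at least min_score."""
    scored = [(cosine_similarity(query_embedding, item.embedding), item) for item in catalog]
    scored = [pair for pair in scored if pair[0] >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:top_k]]


# Toy catalog with made-up embeddings (assumption: 3-dimensional for readability).
CATALOG = [
    Listing("A1", "Ceramic table lamp", (0.9, 0.1, 0.0)),
    Listing("A2", "Brass floor lamp", (0.7, 0.3, 0.1)),
    Listing("A3", "Wool throw blanket", (0.0, 0.2, 0.9)),
]

# Pretend the object detector produced this embedding for a lamp in view.
detected = (1.0, 0.1, 0.0)
carousel = build_carousel(detected, CATALOG)
print([item.title for item in carousel])
```

With the toy data, the carousel contains the two lamp listings and omits the blanket, which falls below the similarity threshold. A linear scan like this is only workable for a tiny catalog; a catalog with billions of listings would need some form of indexed or approximate nearest-neighbor search, which this sketch does not attempt.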

Key takeaways

- Lens Live enables real-time product identification and shopping by camera in the Amazon Shopping app (iOS, rolling out).

- It uses an object detection model and compares results against billions of Amazon listings to surface matches.

- A swipeable carousel presents matches with direct add-to-cart and wishlist options.

- Rufus AI is integrated to summarize descriptions and answer questions about identified items.

- The rollout is planned to expand in the coming weeks, signaling Amazon’s continued push toward in-app AI-powered shopping experiences.

FAQ

- What is Lens Live?

  Lens Live is an AI-powered feature that uses your camera to scan the environment in real time and surface matching product listings from Amazon’s catalog.

- Where is Lens Live available now?

  It is rolling out to the Amazon Shopping app on iOS for now, with plans to reach more customers in the coming weeks.

- How does Lens Live identify items?

  It uses an object detection model to identify products shown on camera in real time and then compares them against billions of Amazon listings.

- What happens after items are identified?

  A swipeable carousel displays matching items, with options to add them to the cart or to a wishlist, and Rufus can summarize descriptions and answer questions.
