Mercury foundation models from Inception Labs available on Amazon Bedrock Marketplace and SageMaker JumpStart

Source: AWS ML Blog, https://aws.amazon.com/blogs/machine-learning/mercury-foundation-models-from-inception-labs-are-now-available-in-amazon-bedrock-marketplace-and-amazon-sagemaker-jumpstart/

TL;DR

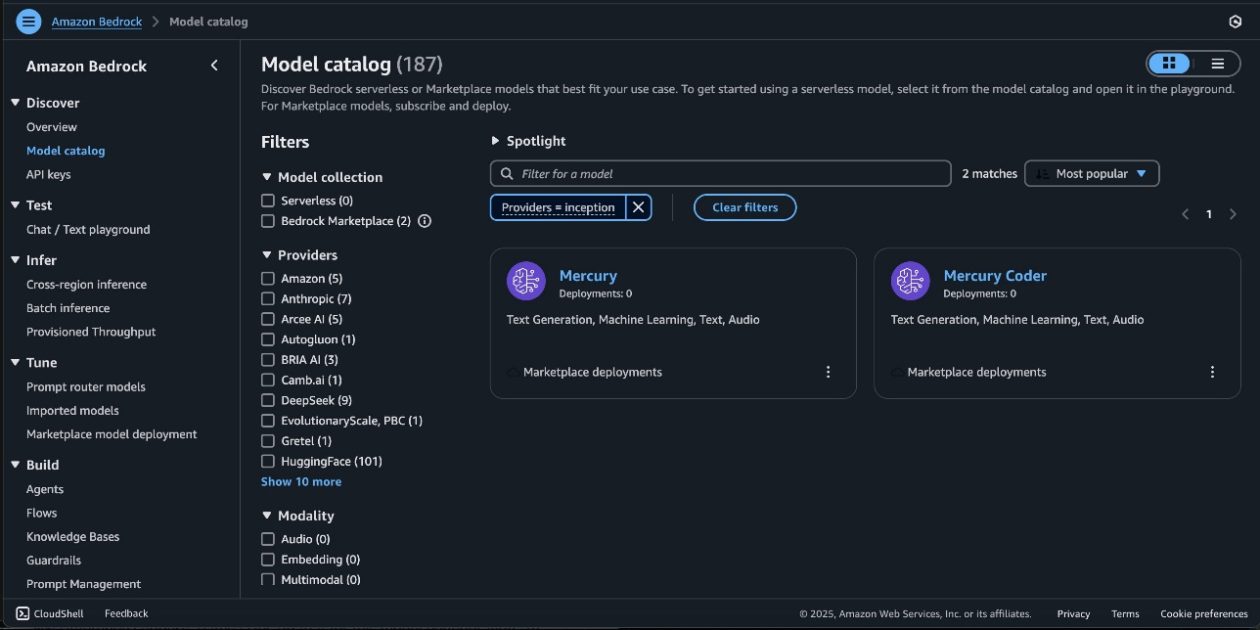

- Mercury and Mercury Coder foundation models from Inception Labs are now available through Amazon Bedrock Marketplace and Amazon SageMaker JumpStart.

- The Mercury family uses diffusion-based generation to produce multiple tokens in parallel, delivering faster inference than traditional autoregressive models.

- Access via Bedrock Marketplace and JumpStart includes testing in the Bedrock playground and deployment through the SageMaker Studio interface or the SageMaker Python SDK.

- Demonstrations include a complete tic-tac-toe game with minimax AI generated in a single response at 528 tokens per second, illustrating strong code-generation capabilities.

- Mercury models support advanced tool use: they can call external functions based on user queries, which makes it possible to build AI agents that interact with APIs and databases. For more details, see the original AWS blog post describing the availability and capabilities.

Context and background

Mercury represents the first family of commercial-scale diffusion-based language models from Inception Labs. Diffusion-based generation differs from conventional autoregressive models by producing multiple tokens in parallel through a coarse-to-fine process, enabling dramatically faster inference while maintaining high-quality outputs. Mercury Coder extends these capabilities to code generation tasks. The models are designed to support enterprise needs for speed, reliability, and security when building generative AI workloads on AWS.

Amazon Bedrock Marketplace provides access to a broad catalog of foundation models, including Mercury and Mercury Coder, while SageMaker JumpStart offers ready-to-deploy, pre-trained models and a streamlined workflow for model deployment and MLOps. This combination allows developers to explore, deploy, and test Mercury models in a secure AWS environment, with options to run in your own VPC and to leverage Bedrock tooling via the Converse API for model invocation.

This launch aligns with AWS guidance on integrating foundation models through Bedrock and JumpStart, and highlights the role of model hubs in JumpStart (for example, agencies and partners surface pre-trained models such as Mistral for various tasks). The goal is to accelerate experimentation and responsible adoption of generative AI at scale on AWS.

What’s new

- Mercury and Mercury Coder are now accessible via Amazon Bedrock Marketplace and Amazon SageMaker JumpStart. You can deploy these diffusion-based FMs to build, experiment, and responsibly scale generative AI applications on AWS.

- The models are offered in Mini and Small sizes, so you can choose the model footprint that best fits your latency, cost, and throughput requirements.

- Access is via two integrated pathways:

  - Bedrock Marketplace: Subscribe to the Mercury model package and provision endpoints from the model detail page; you can also invoke the model with the Converse API and test capabilities in the Bedrock playground before integrating it into production applications.

  - SageMaker JumpStart: Discover and deploy Mercury and Mercury Coder in SageMaker Studio or programmatically via the SageMaker Python SDK. JumpStart model hubs provide a catalog of pre-trained models (including Mercury) for a variety of tasks.

- Deployment creates endpoints inside a secure AWS environment and in your VPC, supporting enterprise data security requirements. You’ll be guided through permissions and deployment configuration, including the necessary IAM role permissions to create Marketplace subscriptions.

- After deployment, you can test the endpoint by sending a sample payload or using the SDK testing options. The post provides a concrete example deploying Mercury via the SageMaker SDK and verifying its capabilities with a simple code-generation task.

- Mercury models include advanced tool-use capabilities, enabling them to determine when and how to call external functions based on user queries. This enables building AI agents and assistants that can interact with external systems, APIs, and databases.

- The article also provides deployment cleanup guidance to avoid charges, including instructions to delete endpoints when no longer needed.
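The tool-use flow mentioned above can be illustrated without any network call. The following is a minimal sketch, assuming the deployed endpoint accepts an OpenAI-style `tools` list and returns tool calls with a `function.name` and a JSON-encoded `function.arguments` string. That request and response shape is an assumption, not something confirmed by this summary, and `get_weather`, `TOOL_REGISTRY`, `build_request`, and `dispatch_tool_call` are hypothetical names used only for illustration.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical tool; a real one would query an API or database."""
    return {"city": city, "forecast": "sunny", "temp_c": 21}


# Maps tool names the model may request to local Python callables.
TOOL_REGISTRY = {"get_weather": get_weather}

# Assumed OpenAI-style tool schema sent alongside the chat messages.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]


def build_request(user_message: str) -> dict:
    """Build a chat payload that advertises the available tools."""
    return {
        "messages": [{"role": "user", "content": user_message}],
        "tools": TOOLS,
        "tool_choice": "auto",
    }


def dispatch_tool_call(tool_call: dict) -> dict:
    """Run the function named in a tool call and wrap the result as a tool message."""
    name = tool_call["function"]["name"]
    if name not in TOOL_REGISTRY:
        raise KeyError(f"Model requested unknown tool: {name}")
    arguments = json.loads(tool_call["function"]["arguments"])
    result = TOOL_REGISTRY[name](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call.get("id"),
        "content": json.dumps(result),
    }


if __name__ == "__main__":
    # Simulated model output; a real tool call would come from the endpoint response.
    simulated_call = {
        "id": "call_1",
        "function": {"name": "get_weather", "arguments": json.dumps({"city": "Paris"})},
    }
    print(dispatch_tool_call(simulated_call))
```

In an agent loop, the tool message returned by `dispatch_tool_call` would be appended to the conversation and sent back to the model so it can produce a final answer. Rejecting unknown tool names keeps the model from triggering arbitrary code.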

How to access and deploy

You can access Mercury models through both Bedrock Marketplace and SageMaker JumpStart. Bedrock Marketplace exposes model packages and provides a testing playground for quick exploration, while JumpStart offers a curated, fully managed deployment workflow inside SageMaker Studio or via the SageMaker Python SDK. To deploy, you typically verify your resources and IAM permissions, subscribe to the model package, obtain the model package ARN, and then create a deployable model using the provided ARN in your code. The article walks through a concrete end-to-end deployment using the SageMaker SDK, including how to reference the model package ARN and how to test an endpoint after deployment. A tic-tac-toe example demonstrates the model’s generation speed and code-generation quality, producing a complete, functional game with HTML, CSS, and JavaScript in a single response and achieving 528 tokens per second in that scenario.
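For the Bedrock Marketplace path, a single Converse API call and a rough throughput estimate might look like the sketch below. It assumes a `bedrock-runtime` client from Boto3 and that the deployed Marketplace endpoint's ARN is passed as `modelId`. The helper names `converse_once` and `output_tokens_per_second` are hypothetical. The throughput figure divides output tokens by the total latency reported in the response, so it is only an approximation and will not necessarily match how the 528 tokens per second figure was measured.

```python
def output_tokens_per_second(output_tokens: int, latency_ms: float) -> float:
    """Approximate generation speed from a token count and a latency in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return output_tokens / (latency_ms / 1000.0)


def converse_once(client, endpoint_arn: str, prompt: str, max_tokens: int = 2500) -> dict:
    """Send one user message through the Converse API and summarize the reply.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    """
    response = client.converse(
        modelId=endpoint_arn,  # assumption: ARN of the deployed Marketplace endpoint
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    text = response["output"]["message"]["content"][0]["text"]
    rate = output_tokens_per_second(
        response["usage"]["outputTokens"],
        response["metrics"]["latencyMs"],
    )
    return {"text": text, "tokens_per_second": rate}
```

With real credentials, `client` would be created with `boto3.client("bedrock-runtime")` in the Region where the endpoint was deployed. Passing the client in as a parameter keeps the function easy to exercise with a stub before any billable endpoint exists.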

Why it matters (impact for developers/enterprises)

- Diffusion-based Mercury models offer high-speed inference by generating multiple tokens in parallel, which is especially valuable for latency-sensitive AI workloads such as chat, coding assistants, and content generation pipelines.

- Availability through Bedrock Marketplace and JumpStart lowers the barrier to access for developers and enterprises, enabling rapid experimentation and iteration without extensive in-house model training or infrastructure setup.

- The ability to run in a secure AWS environment and inside a customer’s VPC supports regulatory and security requirements common to enterprise deployments. This is important for industries that require data isolation and controlled networking while leveraging advanced generative capabilities.

- Mercury’s tool-use capabilities expand the potential for AI agents to automate and orchestrate external tasks, APIs, and databases, enabling more capable assistants and autonomous workflows.

- The integration with SageMaker features such as Pipelines, Debugger, and container log visibility helps teams monitor performance, enforce MLOps practices, and manage the lifecycle of production-grade models.

Technical details and implementation

Mercury and Mercury Coder are offered in multiple sizes (Mini and Small) to accommodate latency and cost considerations. Access paths include Bedrock Marketplace and SageMaker JumpStart, each with its own deployment flow:

- Bedrock Marketplace: Model detail pages provide capabilities, pricing, and implementation guidelines. You can subscribe, test in the Bedrock playground, and then deploy endpoints. The Converse API can be used to invoke the model from Bedrock tooling.

- SageMaker JumpStart: Mercury models appear in the JumpStart model hubs and can be deployed through SageMaker Studio or programmatically via the SageMaker Python SDK. After deployment, you can evaluate performance and MLOps controls with AWS features such as SageMaker Pipelines, SageMaker Debugger, and container logs.

Deployment steps (high level):

- Ensure you have the appropriate SageMaker IAM role permissions to deploy the model, including AWS Marketplace subscription permissions if needed.

- Subscribe to the model package or use an existing subscription in your account.

- Obtain the model package ARN and specify it when creating a deployable model via Boto3 (or via the JumpStart interface).

- Deploy the endpoint and test with sample payloads to validate behavior and latency.

- Monitor performance and costs; use the provided guidance to clean up resources when finished to avoid charges.

A small example in the post demonstrates Mercury’s capabilities by asking the model to generate a tic-tac-toe game. The response is a complete, functional game with minimax AI, including HTML, CSS, and JavaScript, delivered in a single response at 528 tokens per second for that specific run. This example illustrates both the code-generation strengths and the speed advantages of diffusion-based generation.
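The high-level deployment steps listed above (create a deployable model from the model package ARN, deploy, test, clean up) can be sketched with Boto3 as follows. This is a minimal sketch under stated assumptions, not the code from the AWS post: the function names are hypothetical, the instance type and ARNs must come from your own subscription, the payload format depends on the model container, and `EnableNetworkIsolation=True` reflects a common requirement for Marketplace model packages rather than something this summary confirms.

```python
import json


def deploy_from_model_package(sm, name: str, model_package_arn: str,
                              role_arn: str, instance_type: str) -> None:
    """Create a model, endpoint config, and endpoint, then wait until it is in service.

    `sm` is expected to behave like boto3.client("sagemaker").
    """
    sm.create_model(
        ModelName=name,
        ExecutionRoleArn=role_arn,
        PrimaryContainer={"ModelPackageName": model_package_arn},
        EnableNetworkIsolation=True,  # assumption: typical for Marketplace packages
    )
    sm.create_endpoint_config(
        EndpointConfigName=name,
        ProductionVariants=[
            {
                "VariantName": "AllTraffic",
                "ModelName": name,
                "InitialInstanceCount": 1,
                "InstanceType": instance_type,
            }
        ],
    )
    sm.create_endpoint(EndpointName=name, EndpointConfigName=name)
    sm.get_waiter("endpoint_in_service").wait(EndpointName=name)


def invoke(runtime, name: str, payload: dict) -> dict:
    """Send a JSON payload to the endpoint and decode the JSON response.

    `runtime` is expected to behave like boto3.client("sagemaker-runtime").
    """
    response = runtime.invoke_endpoint(
        EndpointName=name,
        ContentType="application/json",
        Body=json.dumps(payload),
    )
    return json.loads(response["Body"].read())


def cleanup(sm, name: str) -> None:
    """Delete the endpoint, its config, and the model to stop incurring charges."""
    sm.delete_endpoint(EndpointName=name)
    sm.delete_endpoint_config(EndpointConfigName=name)
    sm.delete_model(ModelName=name)
```

The same name is reused for the model, endpoint config, and endpoint only to keep the sketch short; separate names work equally well. Calling `cleanup` after testing removes all three resources, which is the billing-relevant step.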

Tables: quick facts

| Model family | Access paths | Sizes supported |
|---|---|---|
| Mercury | Bedrock Marketplace, SageMaker JumpStart | Mini, Small |
| Mercury Coder | Bedrock Marketplace, SageMaker JumpStart | Mini, Small |

Key takeaways

- Mercury brings diffusion-based speed to production-grade language models on AWS via Bedrock Marketplace and JumpStart.

- Access is designed for rapid experimentation, testing, and scale within secure AWS environments and customer VPCs.

- Tool-use capabilities enable the creation of AI agents capable of invoking external functions, APIs, and databases.

- Lifecycle and monitoring support through SageMaker features helps operators manage production workloads.

FAQ

- Q: What are Mercury and Mercury Coder models? A: They are diffusion-based foundation models from Inception Labs, available through Bedrock Marketplace and SageMaker JumpStart on AWS.

- Q: How can I access them on AWS? A: You can subscribe to the model package via Bedrock Marketplace or deploy them from SageMaker JumpStart, then test in the Bedrock playground or through SageMaker Studio or the SageMaker Python SDK.

- Q: What kind of speed and capabilities were demonstrated? A: A tic-tac-toe game was generated with complete code (HTML/CSS/JS) and minimax AI at 528 tokens per second in the example, illustrating fast diffusion-based generation.

- Q: What security considerations exist? A: Endpoints are deployed in a secure AWS environment and within your VPC to support enterprise security needs, and cleanup steps are provided to avoid ongoing charges.

- Q: How do I manage model deployment and monitoring? A: Use SageMaker Pipelines, SageMaker Debugger, and container logs to manage MLOps, performance, and observability alongside JumpStart-provided tooling.
