Google adds AI-powered language lessons and live translate in Translate app

Sources: https://www.theverge.com/news/765872/google-translate-ai-language-learning-duolingo, The Verge AI

TL;DR

- Google Translate adds AI-powered language-learning features in beta.

- Lessons are customized by your skill level and goal using Gemini AI models.

- Live translation handles real-time, back-and-forth conversations in more than 70 languages.

- Initial practice options: English speakers can practice Spanish and French; Spanish, French, and Portuguese speakers can practice English.

- Practice mode includes speaking, listening, and scenario-based exercises with progress tracking.

Context and background

The Verge reports that Google is adding AI-powered language-learning tools to its Translate app, rolling them out in beta. The initiative is part of a broader push to combine translation with personalized education, using Google’s Gemini AI models to tailor lessons to individual users. The approach mirrors some aspects of language-learning platforms by offering structured scenarios and targeted practice, but it stays inside a translation app rather than a standalone learning service. The Verge notes that the beta starts with a subset of language pairs, reflecting a measured rollout while the company collects feedback and data from users. Google product manager Matt Sheets described the exercises as tracking daily progress to help users build confidence in real-world communication. (The Verge)

The new live translation feature complements the learning tools by enabling back-and-forth conversations with someone who speaks a different language. The app translates speech in real time, producing AI-generated transcriptions and audio translations for both speakers. Live translation in Translate is presented as distinct from previous mobile-native live translation experiences, signaling a broader strategy to combine education and real-time communication in a single app. (The Verge)

The learning features are in beta and currently cover a few core languages, while live translation is already available in the United States, India, and Mexico. Live translation supports more than 70 languages, including Arabic, French, Hindi, Korean, Spanish, and Tamil. Google notes that the Pixel 10’s live-translation experience is not identical to the one in the Translate app, and that it is still experimenting with how AI-generated audio should sound. (The Verge)

What’s new

- A new Practice button in Google Translate lets users tailor language learning from within the app. Users can select their current skill level and describe their learning goal, or choose from preset scenarios like professional conversations, everyday interactions, or talking with friends and family.

- Gemini AI models generate a customized lesson based on user input. For example, an intermediate Spanish learner studying abroad and planning to communicate with a host family might receive a lesson around meal times and related phrases.

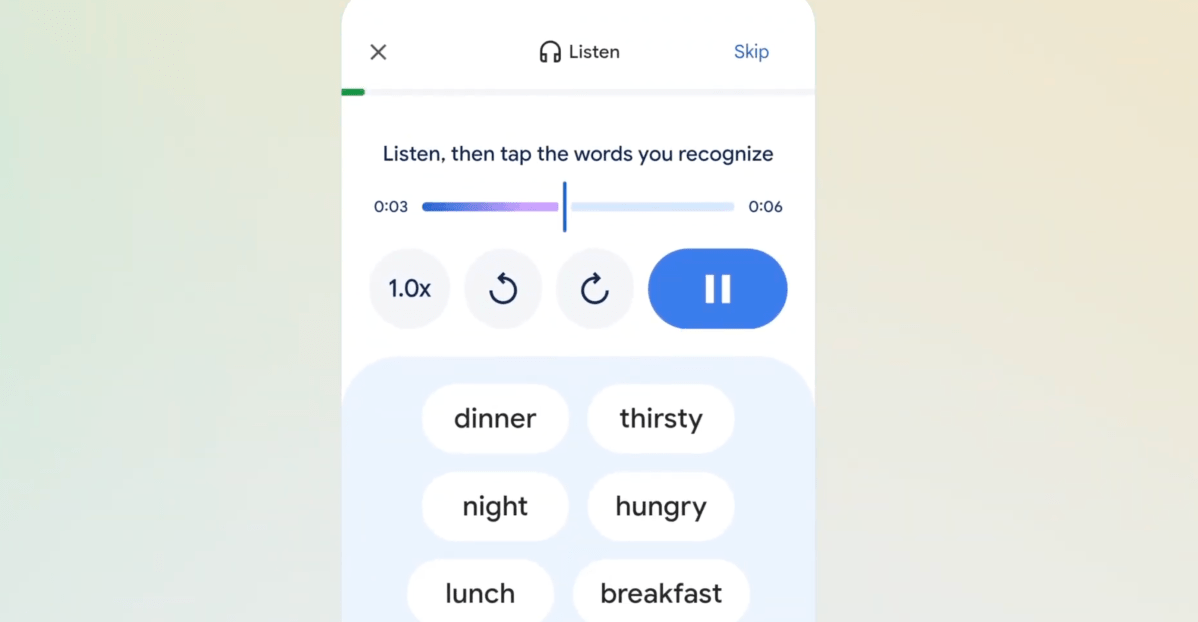

- Learners can choose to practice speaking on a topic with Translate or listen to conversational exchanges and tap the words they recognize. The system tracks daily progress and assists users in building practical language abilities. (The Verge)

- A live-translation feature supports back-and-forth conversations by translating each speaker’s words into the other’s language, with AI-generated transcription and audio translation. The app does not, at least in the current iteration, attempt to mimic the user’s voice in generated audio, with Google reportedly experimenting with different options. (The Verge)

Why it matters (impact for developers/enterprises)

This development shows how an existing translation tool can be extended into a learning platform by embedding AI-driven personalization. By generating customized lessons based on skill level and stated goals, Google shows a path for integrating education-centric features directly into language services, potentially reducing friction for users who want both translation and learning in a single app. The live-translation capability supports real-time, bidirectional conversations, which can be valuable for multinational teams, travel, and customer support scenarios where language barriers exist. The beta rollout and the focus on real-time, AI-generated content illustrate how AI can be used to create adaptive, context-aware learning experiences within mainstream apps. (The Verge)

Technical details or Implementation

- User flow and customization: In Translate, tapping the new Practice button lets users set their current skill level and describe their goal. Users can also select from preset scenarios (e.g., professional conversations, everyday interactions, talking with friends and family). Google uses its Gemini AI models to generate a lesson tailored to the user’s responses. A practical example: an intermediate Spanish learner who wants to converse with a host family while studying abroad might receive a scenario focused on meal times and related vocabulary.

- Modes of practice: After a lesson is generated, users can practice speaking about the topic or listen to conversations and tap the words they recognize. The exercises are designed to track daily progress and help users build confidence in real-world communication.

- Live translation: The app’s live translation feature enables back-and-forth conversations between speakers who do not share a language. It translates each speaker’s words into the other’s language and produces AI-generated transcriptions and audio translations in both directions. Google notes that live translation in the Translate app differs from the Pixel 10 experience, and the company is experimenting with voice options. Live translation works in more than 70 languages, including Arabic, French, Hindi, Korean, Spanish, and Tamil, and is currently available to users in the US, India, and Mexico.
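The Practice flow described above (skill level plus a free-text goal or preset scenario, turned into a generated lesson) can be pictured with a minimal sketch. Everything here is an assumption for illustration: the `PracticeRequest` type, the `build_lesson_prompt` function, the skill-level names other than "intermediate", and the prompt wording are hypothetical, and none of it reflects Google’s actual API or how Translate talks to Gemini.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical names throughout: this is not Google's API or prompt format.
# Only "intermediate" appears in the article; the other levels are assumed.
SKILL_LEVELS = ("beginner", "intermediate", "advanced")

# Preset scenarios as listed in the article.
PRESET_SCENARIOS = (
    "professional conversations",
    "everyday interactions",
    "talking with friends and family",
)


@dataclass
class PracticeRequest:
    """What a learner supplies before a lesson is generated (illustrative)."""
    native_language: str
    target_language: str
    skill_level: str
    goal: Optional[str] = None      # free-text goal, if the learner wrote one
    scenario: Optional[str] = None  # preset scenario, if the learner picked one

    def validate(self) -> None:
        if self.skill_level not in SKILL_LEVELS:
            raise ValueError(f"unknown skill level: {self.skill_level!r}")
        if self.scenario is not None and self.scenario not in PRESET_SCENARIOS:
            raise ValueError(f"unknown scenario: {self.scenario!r}")
        if not self.goal and not self.scenario:
            raise ValueError("provide a goal or choose a preset scenario")


def build_lesson_prompt(request: PracticeRequest) -> str:
    """Turn the learner's answers into a text prompt for a language model."""
    request.validate()
    # Prefer the learner's own words; fall back to the preset scenario.
    focus = request.goal or request.scenario
    return (
        f"Create a {request.target_language} practice lesson for a "
        f"{request.skill_level} learner whose native language is "
        f"{request.native_language}. Focus: {focus}. "
        "Include a speaking exercise and a listening exercise."
    )


if __name__ == "__main__":
    example = PracticeRequest(
        native_language="English",
        target_language="Spanish",
        skill_level="intermediate",
        goal="talking with a host family at meal times while studying abroad",
    )
    print(build_lesson_prompt(example))
```

The sketch validates the input before building the prompt so that an empty or unrecognized selection fails early instead of producing a vague lesson request. How the real app structures this step is not described in the source.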

Key takeaways

- AI-driven language learning is being integrated directly into a translation app, with personalized lessons generated by Gemini models.

- Live translation supports real-time, two-way conversations across more than 70 languages.

- Initial practice support lets English speakers practice Spanish and French, and Spanish, French, and Portuguese speakers practice English; live translation covers a much broader set of languages.

- The feature emphasizes progress tracking and scenario-based learning to build practical communication skills.

- Availability is in beta with a limited geographic rollout, signaling an iterative update process.

FAQ

- Which languages are supported for practice right now?

  English speakers can practice Spanish and French, and Spanish, French, and Portuguese speakers can practice English.

- How does the lesson generation work?

  The Practice feature uses Gemini AI models to generate a lesson based on your stated skill level and goal, including preset scenarios.

- How can I access live translation?

  Live translation is available in the Translate app for conversations in more than 70 languages, with current availability in the US, India, and Mexico.

- Will the AI-generated audio mimic my voice?

  The current approach does not try to make the AI-generated audio sound like your voice, and Google is experimenting with different options there.

- Where can I find the official information about this feature?

  The Verge reported the beta rollout and features described here, with details provided by Google and product managers. [The Verge](https://www.theverge.com/news/765872/google-translate-ai-language-learning-duolingo)

References

- Google is putting AI-powered language learning tools into its Translate app. The Verge. https://www.theverge.com/news/765872/google-translate-ai-language-learning-duolingo
