How to Spot (and Fix) 5 Common Performance Bottlenecks in Pandas Workflows

Source: https://developer.nvidia.com/blog/how-to-spot-and-fix-5-common-performance-bottlenecks-in-pandas-workflows/

TL;DR

- The article identifies five common pandas bottlenecks: slow CSV parsing, large joins, wide object/string columns, heavy groupby operations, and datasets that exceed CPU memory.

- CPU fixes include faster parsing with PyArrow, pre-filtering data, downcasting numerics, and using categorical encodings where appropriate.

- GPU fixes rely on NVIDIA cuDF’s pandas accelerator (cudf.pandas), which runs pandas operations across thousands of GPU threads with no code changes, enabling near-instant results for many workflows.

- You can test these boosts in Google Colab, where GPUs are available and cudf.pandas is pre-installed. The accelerator can also handle reads/writes for Parquet/CSV.

- The approach complements other tools (e.g., Polars’ GPU engine) and emphasizes a drop-in path to speed up pandas workflows without rewriting existing code.

According to NVIDIA, these techniques and the cudf.pandas drop-in accelerator offer significant speedups across typical pandas workloads. See the original article for the full discussion of each bottleneck and practical steps.

Context and background

Pandas users frequently encounter performance bottlenecks that slow experimentation and iteration. The NVIDIA blog discusses five recurring pain points in pandas workflows and outlines practical workarounds you can apply on CPU with code tweaks. It also introduces a GPU-powered drop-in accelerator, cudf.pandas, which delivers order-of-magnitude speedups with no code changes. For those without a GPU, Colab provides a free environment where cudf.pandas and related libraries are available pre-installed. The broader message is that GPU acceleration can be introduced with minimal friction, while CPU-side optimizations remain a key first step.

What’s new

The central new capability highlighted is cudf.pandas, a drop-in GPU accelerator that enables pandas-like workloads to run on NVIDIA GPUs with parallel processing across thousands of GPU threads. Importantly, many operations—especially joins, string processing, and aggregations—can see dramatic improvements without rewriting existing pandas code. The post also emphasizes practical, CPU-first optimizations (like PyArrow parsing, column selection, and downcasting) and points to example notebooks and Colab references to illustrate the performance differentials.
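As a minimal sketch of the drop-in idea, the snippet below tries to enable cudf.pandas and falls back to plain CPU pandas when cuDF is not available. The article itself shows the `%load_ext cudf.pandas` notebook form; the `cudf.pandas.install()` call and the `python -m cudf.pandas` launcher are the script-side equivalents from the cuDF documentation. The `gpu_accelerated` flag and the toy DataFrame are illustrative assumptions, not part of the original post.

```python
# Notebook alternative (per the article): run `%load_ext cudf.pandas` in a cell
# before importing pandas. Script alternative: `python -m cudf.pandas script.py`.
try:
    import cudf.pandas  # only importable where RAPIDS cuDF is installed

    cudf.pandas.install()  # must run before pandas is imported
    gpu_accelerated = True
except Exception:  # cuDF missing or no usable GPU: stay on CPU pandas
    gpu_accelerated = False

import pandas as pd

# The pandas code itself is unchanged whether or not the accelerator is active.
df = pd.DataFrame({"key": ["a", "b", "a"], "value": [1, 2, 3]})
totals = df.groupby("key")["value"].sum()

print("GPU accelerated:", gpu_accelerated)
print(totals)
```

The point of the sketch is that only the first few lines differ between the CPU and GPU paths; everything after `import pandas as pd` is ordinary pandas.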

Why it matters (impact for developers/enterprises)

- Speed and scale: GPU acceleration can cut data reads, joins, and aggregations on large datasets from multiple seconds to milliseconds.

- Lower iteration cost: Faster data operations enable more interactive exploration, model development, and feature engineering cycles.

- Accessibility: Free, cloud-based options (e.g., Google Colab) allow teams to experiment with cudf.pandas without local GPU hardware.

- Compatibility: cudf.pandas provides a drop-in path—your existing pandas code can benefit from GPU speedups with minimal or no rewrites.

- Ecosystem compatibility: The approach is positioned as complementary to other accelerators (e.g., Polars with GPU backends) that offer similar drop-in acceleration for common DataFrame operations.

For further context, NVIDIA frames the content as a practical guide for developers and enterprises aiming to accelerate data science workflows with minimal disruption to existing codebases. See the main NVIDIA post for details and examples.

Technical details or Implementation

The article frames five canonical bottlenecks and offers concrete CPU and GPU strategies. Below is a consolidated view of the suggestions and how they map to practical actions.

Pain point 1: Slow CSV parsing in pandas

- What it looks like: Large CSVs load slowly; CPU usage spikes; I/O-bound bottleneck prevents downstream work.

- CPU fix: Use a faster parsing engine like PyArrow; consider converting to Parquet/Feather for faster reads, loading only needed columns, or reading in chunks.

- GPU fix: With cuDF’s pandas accelerator, CSVs load in parallel across thousands of GPU threads, delivering near-instant loads and accelerating CSV/Parquet I/O.
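The CPU-side suggestions above can be sketched roughly as follows. The file name, column names, and chunk size are hypothetical stand-ins; the snippet writes a tiny CSV first so it runs anywhere, and it only selects the PyArrow engine when `pyarrow` happens to be installed.

```python
import importlib.util
import os
import tempfile

import pandas as pd

# Tiny stand-in file so the sketch runs anywhere; a real workload would be far larger.
csv_path = os.path.join(tempfile.mkdtemp(), "trips.csv")
pd.DataFrame({
    "trip_id": range(1000),
    "fare": [i * 0.5 for i in range(1000)],
    "notes": ["n/a"] * 1000,
}).to_csv(csv_path, index=False)

# Use the PyArrow engine when pyarrow is installed, else pandas' default C parser.
engine = "pyarrow" if importlib.util.find_spec("pyarrow") else "c"

# Load only the columns the analysis needs.
fares = pd.read_csv(csv_path, engine=engine, usecols=["trip_id", "fare"])

# Chunked reading (default C engine) keeps peak memory bounded for very large files.
total_fare = 0.0
for chunk in pd.read_csv(csv_path, usecols=["fare"], chunksize=250):
    total_fare += chunk["fare"].sum()

print(fares.shape, total_fare)
```

For files that are read repeatedly, a one-time conversion with `DataFrame.to_parquet` followed by `pd.read_parquet` is the columnar-format route the article mentions; it is left out of the sketch because it needs a Parquet engine installed.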

Pain point 2: Large joins or merges in pandas

- What it looks like: High memory usage and CPU pressure during merges on tens of millions of rows.

- CPU fix: Use indexed joins where possible and drop unneeded columns before merging to reduce data movement.

- GPU fix: Load the cudf.pandas extension before importing pandas to run joins in parallel across thousands of GPU threads with minimal or no code changes required.
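A small sketch of the CPU fix for joins, assuming two hypothetical tables (`orders` and `customers`) with made-up columns: trim each side to the columns the result needs, index the lookup table on the key, and join against that index.

```python
import pandas as pd

orders = pd.DataFrame({
    "customer_id": [1, 2, 2, 3],
    "amount": [10.0, 25.0, 5.0, 40.0],
    "internal_note": ["a", "b", "c", "d"],  # not needed downstream
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "region": ["east", "west", "east"],
    "long_bio": ["...", "...", "..."],  # not needed downstream
})

# Keep only the columns the result needs before merging, so less data moves.
orders_slim = orders[["customer_id", "amount"]]
customers_slim = customers[["customer_id", "region"]]

# Indexed join: index the lookup table on the key, then join against that index.
customers_indexed = customers_slim.set_index("customer_id")
joined = orders_slim.join(customers_indexed, on="customer_id", how="left")

print(joined)
```

The same code runs unmodified under cudf.pandas once the extension has been loaded before `import pandas`.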

Pain point 3: Wide object/string columns and high-cardinality data

- What it looks like: Large object/string columns inflate memory; string operations and joins on strings slow down pipelines.

- CPU fix: Target low-cardinality string columns and convert them to category; keep truly high-cardinality columns as strings.

- GPU fix: cuDF accelerates string operations with GPU-optimized kernels, speeding up .str methods like len() and contains(), and joins on string keys even with high cardinality. The article highlights using %load_ext cudf.pandas to enable GPU acceleration.
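To illustrate the category conversion, here is a rough sketch with two invented columns: `state` repeats four values (low cardinality) and `plate_id` is unique per row (high cardinality). Only the first is converted; exact byte counts depend on the pandas version and string backend, so the snippet prints them rather than assuming specific numbers.

```python
import pandas as pd

n = 100_000
df = pd.DataFrame({
    "state": ["NY", "CA", "TX", "WA"] * (n // 4),     # low cardinality
    "plate_id": [f"PLATE{i:07d}" for i in range(n)],  # high cardinality
})

# Fraction of distinct values: near 0 suggests category, near 1 suggests keeping strings.
state_ratio = df["state"].nunique() / len(df)
plate_ratio = df["plate_id"].nunique() / len(df)

before = df["state"].memory_usage(deep=True)
df["state"] = df["state"].astype("category")
after = df["state"].memory_usage(deep=True)

print(f"state: {state_ratio:.5f} distinct, {before:,} -> {after:,} bytes")
print(f"plate_id: {plate_ratio:.5f} distinct, left as strings")
```

Converting `plate_id` would gain nothing, since a categorical still has to store every distinct string plus an integer code per row.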

Pain point 4: Groupby operations on large datasets

- What it looks like: Groupby with multiple keys or expensive aggregations consumes CPU cycles and memory.

- CPU fix: Reduce data size before aggregation (drop unused columns, filter rows, pre-compute simpler features); consider observed=True for categorical keys to skip unused category combinations.

- GPU fix: cuDF’s pandas accelerator distributes groupby work across thousands of GPU threads, enabling massive speedups for large groupings and aggregations.
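The effect of shrinking the input and passing `observed=True` can be sketched on a toy frame. The column names, categories, and the `fine >= 40` filter are assumptions made up for illustration.

```python
import pandas as pd

df = pd.DataFrame({
    "borough": pd.Categorical(
        ["BX", "BK", "BK", "Q"], categories=["BX", "BK", "Q", "SI", "M"]
    ),
    "vehicle": pd.Categorical(
        ["SUV", "SUV", "VAN", "SUV"], categories=["SUV", "VAN", "BUS"]
    ),
    "fine": [50, 65, 115, 35],
    "comment": ["", "", "", ""],  # unused by the aggregation
})

# Shrink the input first: filter rows and keep only the columns being aggregated.
slim = df.loc[df["fine"] >= 40, ["borough", "vehicle", "fine"]]

# observed=True returns only key combinations that actually occur in the data.
observed = slim.groupby(["borough", "vehicle"], observed=True)["fine"].sum()

# observed=False materializes every category combination, including empty ones.
full = slim.groupby(["borough", "vehicle"], observed=False)["fine"].sum()

print(len(observed), "groups with observed=True")
print(len(full), "groups with observed=False")
```

With five borough categories and three vehicle categories, the unobserved variant builds all fifteen combinations even though only three occur, which is the overhead the CPU fix avoids.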

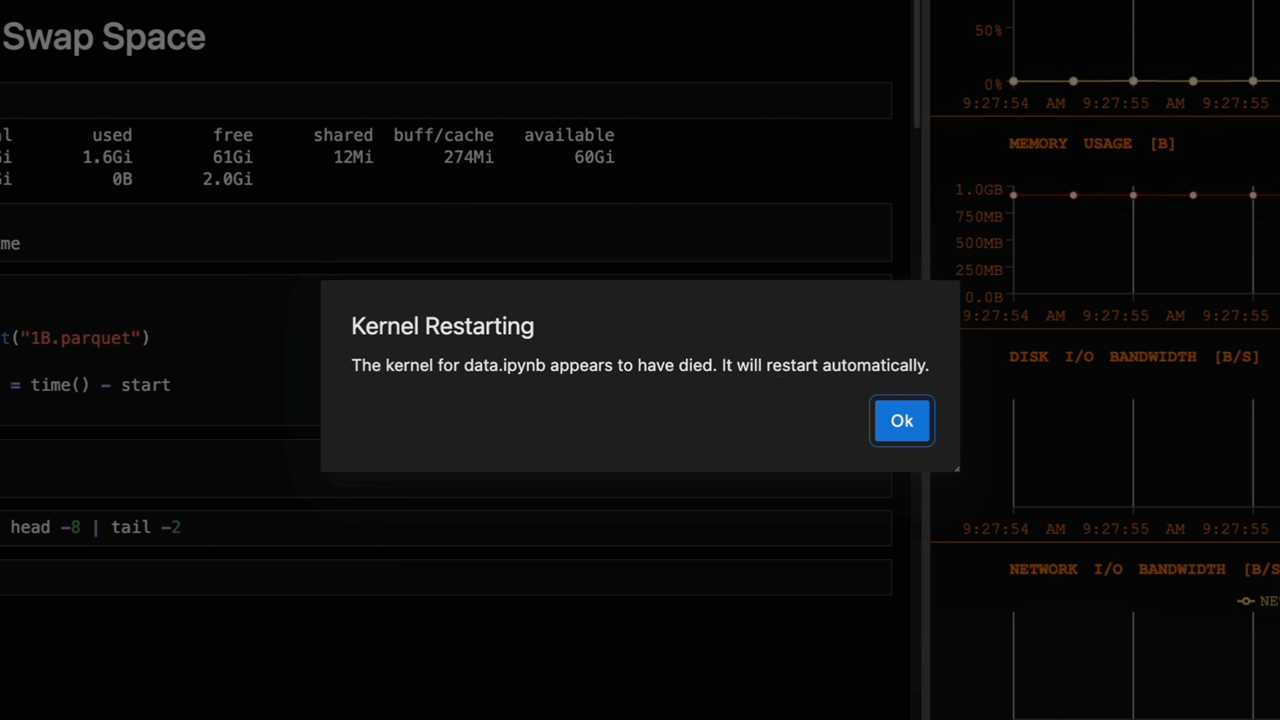

Pain point 5: Data too large for CPU RAM

- What it looks like: MemoryErrors, kernel restarts, or the need to sample data due to RAM limits.

- CPU fix: Downcast numeric types and convert low-cardinality string columns to category; optionally load a subset with nrows for quick inspection.

- GPU fix: The cudf.pandas extension uses Unified Virtual Memory (UVM) to combine GPU VRAM and CPU RAM into a single pool, paging data between GPU and system memory to process datasets larger than GPU memory while retaining GPU speed.

NVIDIA recommends applying the quick CPU fixes first and then moving to drop-in GPU acceleration as the next step. The article highlights free GPU access via Google Colab for testing.
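A minimal sketch of the CPU-side memory fixes for this pain point, using invented column names and an in-memory CSV as a stand-in for a large file: downcast numeric columns with `pd.to_numeric`, and peek at a file with `nrows` before committing to a full load.

```python
import io

import pandas as pd

df = pd.DataFrame({
    "count": pd.Series(range(1000), dtype="int64"),
    "price": pd.Series([i / 4 for i in range(1000)], dtype="float64"),
})
before = int(df.memory_usage(deep=True).sum())

# Downcast to the smallest numeric type that still holds every value.
df["count"] = pd.to_numeric(df["count"], downcast="integer")
df["price"] = pd.to_numeric(df["price"], downcast="float")
after = int(df.memory_usage(deep=True).sum())

print(df.dtypes)
print(f"{before:,} -> {after:,} bytes")

# Quick inspection: read only the first rows of a (here, in-memory) CSV.
csv_text = "id,value\n" + "\n".join(f"{i},{i * 2}" for i in range(100))
preview = pd.read_csv(io.StringIO(csv_text), nrows=5)
print(preview)
```

Downcasting floats trades precision for space, so it is worth checking that float32 is accurate enough for the column before applying it broadly.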

Quick reference table: CPU vs GPU fixes by bottleneck

| Bottleneck | CPU fix | GPU fix |
|---|---|---|
| Slow CSV parsing | PyArrow parser, read only needed columns, read in chunks, Parquet/Feather | cudf.pandas loads CSVs in parallel on GPU, faster I/O, reads/writes Parquet too |
| Large joins | Indexed joins, drop unneeded columns | cudf.pandas extension enables parallel joins on GPU without code changes |
| Wide object/string columns | Convert low-cardinality strings to category; keep high-cardinality as string | GPU-accelerated string kernels; fast .str methods and string-key joins |
| Groupby on large datasets | Filter first, drop columns, pre-compute features; observed=True for categoricals | GPU-accelerated groupby across thousands of threads; large-scale aggregations in milliseconds |
| Datasets exceeding RAM | Downcast numerics, convert low-cardinality strings to category, sample with nrows | Unified Virtual Memory to handle datasets larger than GPU memory; data paged between GPU and CPU |

The article also points to practical examples and notebooks that demonstrate these accelerations, including a notebook showing 25M NYC parking violations processed in milliseconds with GPU acceleration.

Reference notebooks and practical notes

- The piece mentions reference notebooks and Colab demonstrations to illustrate typical pandas workflows accelerated by cuDF, including reading, joining, and string processing. It emphasizes a no-code-change path to faster execution via cudf.pandas.

- It also notes that Polars’ GPU engine, powered by NVIDIA cuDF, offers similar drop-in acceleration for joins, groupbys, aggregations, and I/O, reinforcing the ecosystem-wide benefits of GPU acceleration for DataFrame workloads.

Key takeaways

- GPU acceleration can deliver order-of-magnitude speedups for common pandas operations with minimal or no code changes.

- CPU-side optimizations remain important: use PyArrow, limit data moved in merges, downcast numerics, and convert suitable string columns to categoricals.

- Unified memory technology (UVM) enables processing datasets larger than GPU memory, broadening feasible data sizes for GPU-accelerated workflows.

- Colab provides accessible, free GPU environments to prototype and test cudf.pandas without local hardware investment.

- The GPU acceleration approach complements other tools and can fit into existing workflows with minimal disruption.

FAQ

- What is cudf.pandas and how does it relate to pandas?

  cudf.pandas is a drop-in GPU accelerator that enables pandas-like workflows to run on NVIDIA GPUs with parallel processing across thousands of GPU threads; it requires no code changes to your existing pandas code.

- Can I try this without owning a GPU?

  Yes. Google Colab offers free GPU access with cudf.pandas pre-installed, letting you experiment with accelerated pandas workloads.

- How does memory management work when data exceeds GPU memory?

  The cudf.pandas extension uses Unified Virtual Memory (UVM) to combine GPU VRAM and CPU RAM into one memory pool. Data is automatically paged between GPU and system memory, enabling processing of datasets larger than GPU memory while maintaining the performance advantages of GPU execution.

- Do these changes require rewriting existing code?

  No. The cudf.pandas GPU accelerator provides a drop-in path; you can enable GPU acceleration with minimal or no changes to your current pandas code and libraries.
