Enhance Geospatial Analysis with Amazon Bedrock: LLMs, RAG, and GIS Workflows

TL;DR

- Amazon Bedrock provides a secure, flexible platform to host and invoke AI models and to integrate them with geographic information systems (GIS) and related workflows.

- Retrieval Augmented Generation (RAG) and agentic workflows enable geospatial use cases by combining knowledge bases with live data and with task-based actions against external providers.

- Knowledge bases connected to data sources such as Amazon S3 and SharePoint support unstructured documents; tools can retrieve live information or control external processes via AWS Lambda, all orchestrated by Bedrock Agents.

- The article demonstrates an earthquake analysis agent that connects Amazon Redshift data with geospatial queries to illustrate end-to-end capabilities and governance.

- A practical, end-to-end pipeline is described, including IAM permissions, AWS CLI setup, data preparation, Redshift configuration, a knowledge base, and an agent, to show how to operationalize Bedrock in GIS contexts.

Context and background

Geospatial data is defined by position on the Earth (latitude, longitude, altitude), and geographic information systems (GIS) provide a way to store, analyze, and visualize this information. In GIS applications, geospatial data is frequently presented on maps showing streets, buildings, and vegetation. As data volumes grow and information systems become more complex, stakeholders need solutions that reveal quality insights and enable intuitive workstreams.

LLMs, a subset of foundation models, transform inputs such as text or images into outputs and support many generalized natural language tasks. In geospatial contexts they can augment GIS workflows, support data exploration, and help with decision-making. Amazon Bedrock is a comprehensive, secure, and flexible service for building generative AI applications and agents. It provides hosting and invocation for AI models and enables integration with surrounding infrastructure.

To tailor LLM performance for specific use cases, approaches such as RAG and agentic workflows have been developed. RAG retrieves policies and general knowledge for geospatial needs from a knowledge base and injects that contextual information into the model’s prompt during invocation, while agentic workflows handle more complex data analysis and orchestration tasks. Bedrock offers managed knowledge bases connected to data sources such as Amazon S3 and SharePoint, enabling supplemental information like city development plans, intelligence reports, or regulatory policies to accompany AI-generated responses. When the AI model responds using RAG-sourced material, it can provide references and citations to its sources. In geospatial contexts, however, many datasets are structured and reside in GIS, so tools and agents can connect the GIS directly to the LLM instead of relying solely on knowledge bases.

Many LLMs (for example, Claude on Amazon Bedrock) support tool descriptions that allow the model to generate text to invoke external processes. Common geospatial functionality you might integrate with an LLM via tools includes invoking data queries against a spatial database, starting workflows, drawing on a map or adding layers to it, and querying live information such as weather. Tools are typically implemented in AWS Lambda, which runs code without the overhead of managing servers. Bedrock also provides Bedrock Agents to simplify orchestration and integration with geospatial tools. Agents follow instructions for LLM reasoning to break down a user prompt into smaller tasks and to perform actions against identified task providers. The integration supports a range of GIS solutions by enabling natural-language interactions that drive geospatial analysis, data discovery, and decision support.

The article includes a concrete demonstration: an earthquake analysis agent built on a knowledge base connected to Amazon Redshift. The Redshift instance contains two tables: one for earthquakes (date, magnitude, latitude, longitude) and another for California counties described as polygons. The geospatial capabilities of Redshift relate these datasets to answer questions such as which county experienced the most recent earthquake or which county has had the most earthquakes in the last 20 years. The Bedrock agent can generate geospatial queries from natural-language prompts.

To implement the approach, you need an AWS account with the appropriate IAM permissions for Amazon Bedrock, Amazon Redshift, and Amazon S3. The article lists steps to set up the AWS CLI, validate the environment, configure Redshift and Bedrock variables, prepare data for storage in S3, transform geospatial data formats, set up the Redshift cluster, create the database schema, establish a knowledge base, and create and configure an agent. While the article provides code blocks and commands to illustrate these steps, the key takeaway is the end-to-end approach: connect LLM-powered reasoning with live geospatial data and organizational knowledge to enable dynamic, context-aware GIS workflows.

The integration of LLMs with GIS creates intuitive systems for users across technical levels to perform complex spatial analysis through natural-language interactions. By employing both RAG and agent-based workflows, organizations can maintain data accuracy while seamlessly connecting AI models to knowledge bases and structured data systems. Amazon Bedrock provides the platform to host models, retrieve knowledge, and orchestrate these workflows in a geospatial context. For additional details and visual diagrams, refer to the original AWS blog post.

What’s new

| Aspect | Description |
|---|---|
| RAG | Dynamically inject contextual information from a knowledge base during model invocation to support geospatial inquiries. |
| Agentic workflows | Break down a user prompt into smaller tasks and perform actions against identified task providers to complete geospatial analyses. |
| Knowledge bases | Managed connections to data sources like Amazon S3 and SharePoint enable augmentation with unstructured documents and policy references. |
| Tools and orchestration | Tools (often AWS Lambda) enable live data retrieval, map interactions, or workflow control, coordinated by Bedrock Agents. |
| End-to-end example | Earthquake analysis agent demonstrates querying Redshift data to produce spatially aware insights and maps. |

Why it matters (impact for developers/enterprises)

- Simplified analysis: AI-assisted GIS reduces manual steps and enables faster exploration of spatial data.

- Enhanced decision-making: Contextual AI responses support informed planning and policy interpretation with traceable sources.

- Flexible integration: Bedrock provides a unified way to host models, manage knowledge bases, and connect to existing data stores and workflows.

- Role-inclusive value: The solution benefits technical staff, business users, and leadership by delivering accessible, data-driven insights.

- Live, auditable outputs: When using RAG, the system can cite sources; agents execute tasks that connect to live data and tools, reducing the risk of stale results.

Technical details or Implementation (high-level)

This section summarizes the implementation approach as described in the post. The overall pattern combines Bedrock-hosted models, RAG-enabled knowledge retrieval, and Bedrock Agents that orchestrate tasks against external data sources and tools.

Architecture overview

- Data sources: GIS data stored in a structured GIS database (e.g., Redshift polygons for counties) and live geospatial datasets.

- AI models: Bedrock-hosted LLMs act as the reasoning and natural-language interface.

- Knowledge layer: Managed knowledge bases connected to data sources such as S3 or SharePoint provide unstructured documents and policy references.

- Orchestration: Bedrock Agents translate user prompts into a set of tasks and call external processes or data stores (often implemented as Lambda functions).

- Tools: External processes can retrieve live information, query spatial data stores, or modify GIS layers; these tools are invoked by the LLM through tool descriptions.
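To make the idea of a tool description more concrete, here is a minimal sketch of how a map-drawing tool might be described and dispatched. The `toolSpec` layout mirrors the shape used in the Amazon Bedrock Converse API `toolConfig`; the tool name `draw_markers`, its parameters, and the `handle_tool_use` dispatcher are hypothetical and are not taken from the original post.

```python
from typing import Any, Callable, Dict, List

# Hypothetical tool description. The name, fields, and schema are illustrative only.
DRAW_MARKERS_TOOL: Dict[str, Any] = {
    "toolSpec": {
        "name": "draw_markers",
        "description": "Draw point markers on the map for the given coordinates.",
        "inputSchema": {
            "json": {
                "type": "object",
                "properties": {
                    "points": {
                        "type": "array",
                        "items": {
                            "type": "object",
                            "properties": {
                                "lat": {"type": "number"},
                                "lon": {"type": "number"},
                                "label": {"type": "string"},
                            },
                            "required": ["lat", "lon"],
                        },
                    }
                },
                "required": ["points"],
            }
        },
    }
}


def draw_markers(points: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Stand-in for a Lambda-backed tool: returns GeoJSON a frontend could render."""
    features = [
        {
            "type": "Feature",
            # GeoJSON orders coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [p["lon"], p["lat"]]},
            "properties": {"label": p.get("label", "")},
        }
        for p in points
    ]
    return {"type": "FeatureCollection", "features": features}


# Registry mapping tool names to the functions that implement them.
TOOL_HANDLERS: Dict[str, Callable[..., Dict[str, Any]]] = {"draw_markers": draw_markers}


def handle_tool_use(tool_use: Dict[str, Any]) -> Dict[str, Any]:
    """Route a model-generated tool request ({"name": ..., "input": {...}}) to its handler."""
    handler = TOOL_HANDLERS.get(tool_use["name"])
    if handler is None:
        raise ValueError(f"Unknown tool: {tool_use['name']}")
    return handler(**tool_use["input"])
```

In this sketch the model never touches the map itself. It only emits a structured request, and the application decides how to execute it, which keeps the tool logic testable without a model in the loop.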

Data sources and storage (example)

- The earthquake analysis example uses an Amazon Redshift instance with two tables: earthquakes (date, magnitude, latitude, longitude) and California counties (polygon shapes). Redshift’s geospatial capabilities relate these datasets to answer questions like which county had the most recent earthquake or which county had the most earthquakes in the last 20 years. The Bedrock agent can generate geospatial queries from natural-language prompts, relying on the Redshift data and the agent’s workflow to execute the analysis.

- Knowledge bases in Bedrock can connect to data sources such as S3 and SharePoint to provide supplementary information, including development plans, policy documents, or regulations that inform AI-generated outputs.
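The two example questions can be sketched as spatial joins between the earthquake points and the county polygons. The snippet below only builds the SQL text. The table names (`earthquakes`, `counties`), column names (`event_date`, `name`, `geom`), and the SRID are assumptions for illustration, since the summary does not give the actual schema; `ST_Contains`, `ST_Point`, and `ST_SetSRID` are Redshift spatial functions.

```python
# Hypothetical schema: earthquakes(event_date, magnitude, latitude, longitude)
# and counties(name, geom). Adjust names and SRID to match your own tables.
SRID = 4326  # assumption: county polygons are stored in WGS 84


def point_expr(lon_col: str = "e.longitude", lat_col: str = "e.latitude", srid: int = SRID) -> str:
    """SQL expression that turns longitude/latitude columns into a point geometry."""
    return f"ST_SetSRID(ST_Point({lon_col}, {lat_col}), {srid})"


def most_recent_quake_county_sql() -> str:
    """Which county contains the most recent earthquake?"""
    return (
        "SELECT c.name, e.event_date, e.magnitude\n"
        "FROM earthquakes e\n"
        f"JOIN counties c ON ST_Contains(c.geom, {point_expr()})\n"
        "ORDER BY e.event_date DESC\n"
        "LIMIT 1;"
    )


def quake_counts_sql(years: int = 20) -> str:
    """Earthquake counts per county over the last `years` years, largest first."""
    if years <= 0:
        raise ValueError("years must be positive")
    return (
        "SELECT c.name, COUNT(*) AS quake_count\n"
        "FROM earthquakes e\n"
        f"JOIN counties c ON ST_Contains(c.geom, {point_expr()})\n"
        f"WHERE e.event_date >= DATEADD(year, -{int(years)}, CURRENT_DATE)\n"
        "GROUP BY c.name\n"
        "ORDER BY quake_count DESC;"
    )


if __name__ == "__main__":
    print(most_recent_quake_county_sql())
    print(quake_counts_sql(20))
```

An agent would be expected to produce queries of roughly this shape on its own; writing them by hand first is a useful way to check the generated SQL against a known-good baseline.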

End-to-end pipeline (high level)

- Prepare AWS IAM permissions for Bedrock, Redshift, and S3 access.

- Configure the AWS CLI environment and validate the setup.

- Create and initialize Bedrock and Redshift variables, including roles that enable data access.

- Prepare geospatial data and store it in S3 for use by the workflow.

- Transform geospatial data formats as needed to align with Redshift capabilities.

- Set up the Redshift cluster and create the relevant database schema.

- Create and load the knowledge base with documents needed for RAG-enabled responses.

- Create and configure an Amazon Bedrock Agent to handle the end-to-end workflow: interpret user intent, retrieve supporting information, query data stores, and modify GIS layers when appropriate.
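As one illustration of the data transformation step, the sketch below converts GeoJSON polygon features into well-known text (WKT) rows in a CSV file. This is an assumption about formats rather than the method from the original post: it presumes the county boundaries start as GeoJSON with a `name` property and that the WKT will later be parsed in the database (for example with Redshift's `ST_GeomFromText`).

```python
import csv
import io
from typing import Any, Dict, Iterable, Sequence


def ring_to_wkt(ring: Sequence[Sequence[float]]) -> str:
    """Format one linear ring as '(x y, x y, ...)', ignoring any altitude value."""
    return "(" + ", ".join(f"{x} {y}" for x, y, *_ in ring) + ")"


def geometry_to_wkt(geometry: Dict[str, Any]) -> str:
    """Convert a GeoJSON Polygon or MultiPolygon geometry to WKT."""
    gtype = geometry["type"]
    coords = geometry["coordinates"]
    if gtype == "Polygon":
        return "POLYGON (" + ", ".join(ring_to_wkt(r) for r in coords) + ")"
    if gtype == "MultiPolygon":
        polygons = ("(" + ", ".join(ring_to_wkt(r) for r in poly) + ")" for poly in coords)
        return "MULTIPOLYGON (" + ", ".join(polygons) + ")"
    raise ValueError(f"Unsupported geometry type: {gtype}")


def features_to_csv(features: Iterable[Dict[str, Any]]) -> str:
    """Write (name, wkt) rows; the 'name' property is a hypothetical attribute."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["name", "wkt"])
    for feature in features:
        writer.writerow([feature["properties"]["name"], geometry_to_wkt(feature["geometry"])])
    return buffer.getvalue()


if __name__ == "__main__":
    demo = {
        "type": "Feature",
        "properties": {"name": "Demo County"},
        "geometry": {"type": "Polygon", "coordinates": [[[0, 0], [1, 0], [1, 1], [0, 0]]]},
    }
    print(features_to_csv([demo]))
```

The `csv` module quotes the WKT field automatically because it contains commas, so the output stays parseable as long as the loader honors standard CSV quoting.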

Example prompts and capabilities

- The article demonstrates prompts such as "Summarize which zones allow for building of an apartment." Here, the LLM performs retrieval with a RAG approach, using the retrieved zoning documents as context to answer in natural language. Another example asks for a report on how housing data can be used to plan development, where the LLM retrieves planning code documents and produces a standardized report. A third example asks to show low-density properties on a map by location; the LLM creates markers on the map by invoking a drawing tool. Finally, a prompt asks whether a specific site is suitable for building an apartment; the system uses map state, UI context, and policy data to respond with a reasoned answer.

- The examples illustrate prompt templates, RAG, summarization, chain-of-thought reasoning, tool use, and UI control. They also show how a state engine can relay frontend context to the LLM input, enabling more accurate, context-aware responses.
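A prompt template that combines retrieved documents with frontend state might look like the following sketch. The `RetrievedDoc` type, the tag names, the instruction wording, and the shape of `map_state` are all hypothetical; the post's actual template is not reproduced in this summary.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class RetrievedDoc:
    """One passage returned by a knowledge base lookup (hypothetical shape)."""
    source: str
    text: str


def build_prompt(question: str, docs: List[RetrievedDoc], map_state: Dict[str, Any]) -> str:
    """Assemble a RAG prompt: numbered context passages, UI state, then the question."""
    context = "\n\n".join(
        f"[{i}] ({doc.source})\n{doc.text}" for i, doc in enumerate(docs, start=1)
    )
    return (
        "You are a GIS planning assistant. Answer using only the context below "
        "and cite sources by their bracketed number.\n\n"
        f"<context>\n{context}\n</context>\n\n"
        # Relay what the user currently sees so the model can resolve "this site".
        f"<map_state>\n{json.dumps(map_state, sort_keys=True)}\n</map_state>\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    docs = [RetrievedDoc("zoning.pdf", "Zone R3 permits multi-family dwellings.")]
    state = {"selected_parcel": "example-parcel", "zoom": 14}
    print(build_prompt("Is this site suitable for an apartment building?", docs, state))
```

Numbering the passages gives the model something stable to cite, and serializing the map state with sorted keys keeps prompts reproducible across sessions.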

Implementing and testing (high level)

- After creating the agent, you can test it via natural-language inputs and observe how the LLM uses RAG to retrieve sources and how the agent triggers tools to produce maps, queries, or reports.

- The article emphasizes end-to-end testing, including the ability to clean up resources to avoid charges.
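For programmatic testing, a small helper around the `invoke_agent` call of the `bedrock-agent-runtime` client can collect the streamed answer into a single string. This is a sketch under assumptions: the agent ID and alias ID are placeholders you would supply, and the client is passed in so the helper can be exercised with a stub before any AWS resources exist.

```python
import uuid
from typing import Any, Optional


def ask_agent(
    client: Any,
    agent_id: str,
    agent_alias_id: str,
    prompt: str,
    session_id: Optional[str] = None,
) -> str:
    """Send one natural-language prompt to a Bedrock agent and return its text reply.

    `client` is expected to behave like boto3.client("bedrock-agent-runtime").
    """
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        # Reuse a session ID across calls to keep conversational context.
        sessionId=session_id or str(uuid.uuid4()),
        inputText=prompt,
    )
    parts = []
    # The completion is an event stream; text arrives in "chunk" events.
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


# Example usage (requires AWS credentials and an existing agent):
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   print(ask_agent(client, "<agent-id>", "<alias-id>",
#                   "Which county had the most recent earthquake?"))
```

Events other than `chunk` (such as trace events, when tracing is enabled) are skipped here; a fuller test harness would likely log them to see which tools and queries the agent chose.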

Key considerations for practitioners

- IAM, data governance, and cost management are important when deploying Bedrock-based GIS workflows.

- RAG improves contextual accuracy by referencing knowledge bases, while agentic workflows handle multi-step tasks and orchestration across data stores and tools.

- The combination of Bedrock, Redshift, S3, and Lambda enables end-to-end geospatial AI pipelines that integrate with existing GIS systems and organizational policies.

Key takeaways

- Bedrock provides a comprehensive platform to host models, manage knowledge bases, and orchestrate AI-powered GIS workflows.

- RAG and agentic workflows enable context-aware geospatial analysis and multi-step automation across data sources and tools.

- Knowledge bases connected to S3 and SharePoint augment AI responses with relevant documents and policies, while GIS data stored in Redshift supports precise spatial reasoning.

- Bedrock Agents simplify the orchestration of LLM reasoning with real-time tool invocations, enabling interactive GIS experiences through natural language.

- The earthquake analysis example demonstrates an end-to-end pipeline that connects LLM-driven reasoning with geospatial data and live data stores, preserving accuracy and traceability.

FAQ

- What is an Amazon Bedrock Agent in this GIS context?

  Bedrock Agents are designed to simplify orchestration and integration by breaking down a user prompt into smaller tasks and performing actions against identified task providers.

- How does RAG support geospatial use cases?

  RAG dynamically injects contextual information from a knowledge base during model invocation, allowing the AI to reference sources and provide citations when answering spatial questions.

- What data sources are used in the earthquake analysis example?

  The example uses an Amazon Redshift dataset with an earthquakes table (date, magnitude, latitude, longitude) and a California counties polygon table. Redshift geospatial capabilities tie the datasets together to answer location-based questions.

- What setup is required to implement this approach?

  An AWS account with IAM permissions for Bedrock, Redshift, and S3; setup of the AWS CLI; configuration of Redshift and Bedrock variables; data preparation and storage in S3; data transformation; creation of a knowledge base; and creation and configuration of an agent.

More news

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

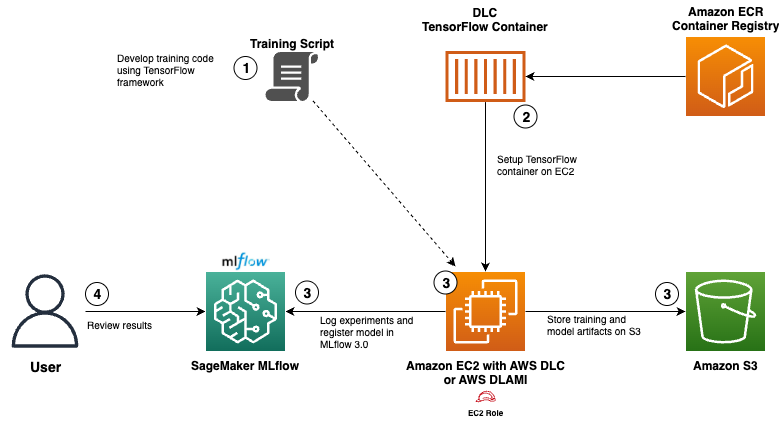

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.