Beyond the basics: A comprehensive framework for foundation model selection in generative AI

TL;DR

- Enterprises should extend evaluation beyond accuracy, latency, and cost to capture real-world performance.

- Use Amazon Bedrock Evaluations and the model information API to filter candidates down to 3–7 models for detailed assessment.

- Consider agentic AI capabilities and multi-agent collaboration; test prompts, edge cases, and domain-specific vulnerabilities.

- The framework is iterative and forward-compatible with evolving foundation-model landscapes, helping balance performance, cost, and business goals.

Context and background

Foundation models have transformed how enterprises build generative AI applications, enabling human-like understanding and content creation. Amazon Bedrock provides a fully managed service offering a broad set of high-performing foundation models from leading providers—AI21 Labs, Anthropic, Cohere, DeepSeek, Luma, Meta, Mistral AI, poolside (coming soon), Stability AI, TwelveLabs (coming soon), Writer, and Amazon—accessible through a single API. This API-driven approach enables seamless model interchangeability but also poses the challenge: which model will deliver the best performance for a given application while meeting operational constraints? Our work with enterprise customers reveals a common pitfall: many early projects select models based on limited manual testing or reputation, rather than a structured evaluation aligned to business requirements. This post presents a comprehensive evaluation methodology optimized for Amazon Bedrock implementations, aiming to provide a principled path from requirements to model selection using Bedrock Evaluations. For broader context on assessing large language model (LLM) performance, see the referenced guidance on LLM-as-a-judge in the Amazon Bedrock Model Evaluation ecosystem. Foundation models vary across multiple dimensions and interact in complex ways. To help navigate these trade-offs, we present a capability matrix with four core dimensions to consider when evaluating models on Bedrock: Task performance, Architectural characteristics, Operational considerations, and Responsible AI attributes. While these dimensions are listed in no particular order, they collectively shape business outcomes, ROI, user adoption, trust, and competitive advantage. Importantly, for agentic AI applications, evaluation must also cover agent-specific capabilities such as multi-agent collaboration testing. The overarching message is clear: model selection should be treated as an ongoing process that evolves with changing needs and advances in technology, not a one-off decision.

What’s new

The article introduces a structured evaluation methodology tailored for Amazon Bedrock implementations, combining theoretical frameworks with practical steps:

- Begin with a precise specification of application requirements and assign weights to each requirement to build an evaluation scorecard.

- Use the Amazon Bedrock model information API to filter models based on hard requirements, reducing candidates from dozens to a manageable 3–7 for detailed evaluation.

- When filtering, leverage the Bedrock model catalog to obtain additional information about models when the API filters are not sufficient.

- Implement structured evaluation with Amazon Bedrock Evaluations to transform evaluation data into actionable insights.

- Go beyond traditional testing with comparative testing using Bedrock’s routing capabilities to gather real-world performance data from actual users, and test vulnerabilities through prompt injections, edge-case handling, and domain-specific factual challenges.

- Assess combinations such as sequential pipelines, voting ensembles, and cost-efficient routing depending on task complexity.

- Design production systems to monitor performance across deployments and be mindful of sector-specific requirements.

- The framework supports forward compatibility as the landscape evolves, ensuring the selection process remains relevant over time. A central element of the approach is a four-dimension capability matrix that guides model comparisons and decision-making. In addition to the core dimensions, the methodology emphasizes agentic AI considerations, including reasoning, planning, and collaboration for autonomous agent scenarios.

Why it matters (impact for developers/enterprises)

For developers and enterprises, this framework helps translate business objectives into measurable evaluation criteria that can be systematically applied to Bedrock models. The approach seeks to prevent common pitfalls such as over-provisioning, misalignment with use-case needs, excessive operational costs, and late discovery of performance issues. By explicitly weighting requirements and validating models through structured evaluations and real-world routing data, organizations can optimize costs, improve performance, and deliver better user experiences. Moreover, as foundation models evolve, the methodology is designed to adapt. The framework supports ongoing reassessment and updates as new models and capabilities emerge, enabling organizations to maintain alignment with evolving business goals and technology capabilities. For agentic AI workflows, rigorous evaluation of reasoning, planning, and collaboration becomes essential to success, reinforcing the business value of a disciplined model-selection process.

Technical details or Implementation

The core of the framework rests on four critical dimensions used to evaluate foundation models in Bedrock:

| Dimension | Description |

|---|---|

| Task performance | Direct impact on business outcomes, ROI, user adoption, and trust. |

| Architectural characteristics | Influence model performance, efficiency, and task suitability. |

| Operational considerations | Feasibility, cost, and sustainability of deployments. |

| Responsible AI attributes | Governance and alignment with responsible-AI practices as a business imperative. |

| Implementation steps in practice: |

- Precisely specify application requirements and assign weights to create a formal evaluation-score foundation for model comparison.

- Apply hard requirements through the Bedrock model information API to filter candidates, typically narrowing the field to 3–7 models for deep evaluation.

- If the API filters are insufficient, supplement with information from the Bedrock model catalog to obtain richer model facts.

- Use Amazon Bedrock Evaluations to conduct structured, systematic assessments and transform data into actionable insights.

- Extend evaluation with comparative testing via Bedrock routing to collect real-user performance data. Test model vulnerabilities with prompt-injection attempts, challenging syntax, edge cases, and domain-specific factual challenges.

- Explore architectural and orchestration patterns such as sequential pipelines, voting ensembles, and cost-efficient routing tailored to task complexity.

- Design production-monitoring systems to track performance across deployments and sectors, enabling ongoing optimization.

- Remain mindful of agentic AI considerations: for autonomous agents, evaluate reasoning, planning, and collaboration capabilities, including multi-agent collaboration testing.

- Treat model selection as an evolving process that adapts to changing needs and capabilities, not a single event. Beyond the procedural steps, the approach emphasizes forward-looking planning to accommodate a moving landscape. The Bedrock Evaluations framework provides a practical, scalable path for enterprise teams to move from abstract requirements to data-driven, actionable model selections that align with business objectives. For practitioners seeking deeper guidance on LLМ evaluation, the linked Bedrock resources offer broader context on evaluating large language models in real-world settings.

Key takeaways

- Move beyond basic metrics to a comprehensive, requirement-driven evaluation framework for foundation models on Bedrock.

- Use Bedrock’s information API and catalog to filter models before in-depth testing, reducing candidate models to a manageable subset.

- Employ Bedrock Evaluations for structured assessments and leverage routing to gather real-world performance data.

- Test robustness and security through prompt-injection challenges and domain-specific scenarios; consider multi-model and ensemble strategies where appropriate.

- Treat model selection as an ongoing, adaptable process aligned to business goals and evolving technology.

FAQ

-

What is Amazon Bedrock Evaluations?

It is a structured evaluation approach mentioned in the Bedrock framework for assessing foundation models and transforming evaluation data into actionable insights.

-

How do you filter models for evaluation in Bedrock?

Start with the Amazon Bedrock model information API to filter models based on hard requirements, then optionally consult the Bedrock model catalog for additional details.

-

What additional considerations are there for agentic AI?

When evaluating agentic AI applications, assess capabilities related to reasoning, planning, and collaboration, including multi-agent collaboration testing.

-

Is the evaluation framework a one-time activity?

No, the methodology is designed to be iterative and forward-looking, adapting as the foundation-model landscape evolves.

References

More news

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

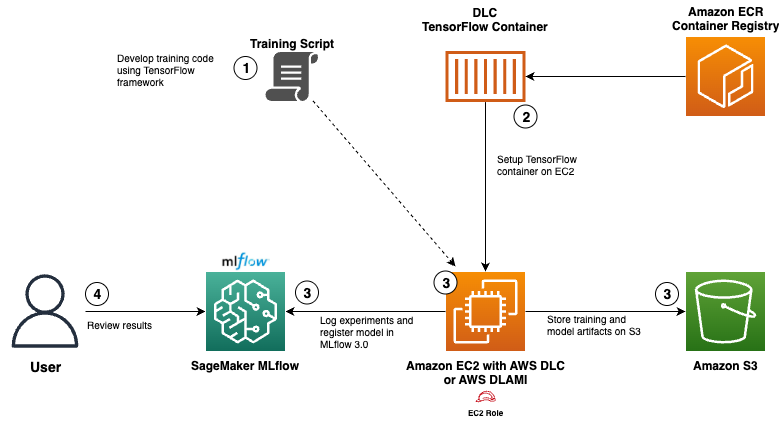

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

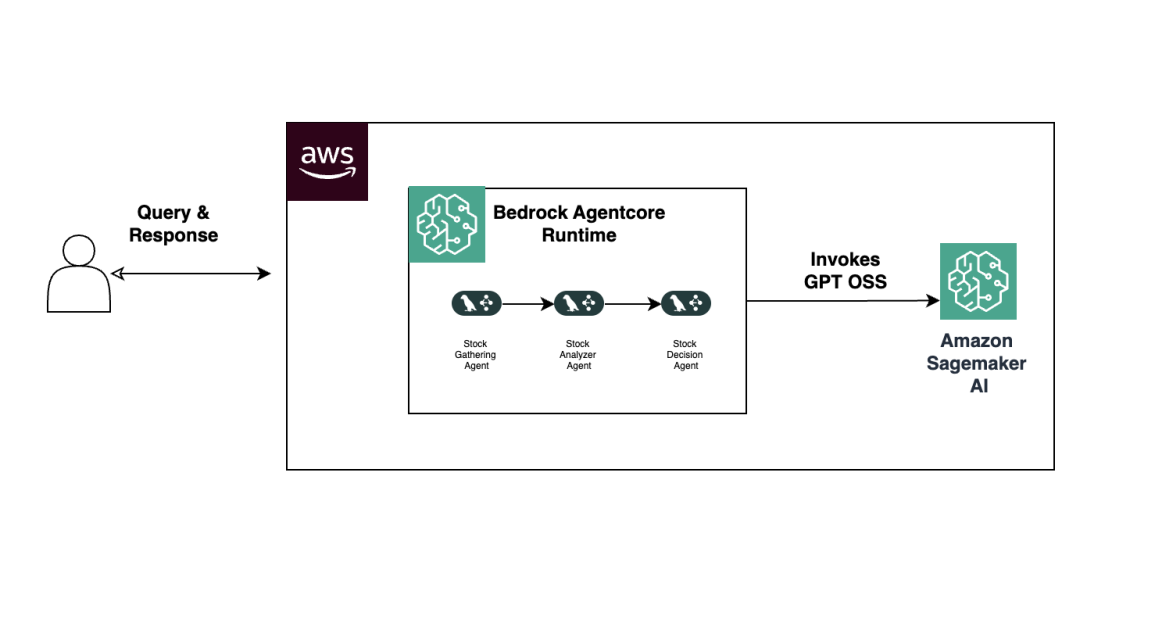

Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

An end-to-end look at deploying OpenAI GPT OSS models on SageMaker AI and Bedrock AgentCore to power a multi-agent stock analyzer with LangGraph, including 4-bit MXFP4 quantization, serverless orchestration, and scalable inference.