A Comprehensive Bedrock Foundation Model Selection Framework for GenAI

Sources: https://aws.amazon.com/blogs/machine-learning/beyond-the-basics-a-comprehensive-foundation-model-selection-framework-for-generative-ai, aws.amazon.com

TL;DR

- A methodical evaluation framework for Bedrock foundation models considers four core dimensions: task performance, architectural characteristics, operational considerations, and responsible AI attributes.

- Start by precisely specifying requirements, assign weights, and use the Amazon Bedrock model information API to filter candidates, typically reducing the pool from dozens to 3–7 models for in‑depth evaluation.

- Leverage Amazon Bedrock Evaluations to transform evaluation data into actionable insights, conduct real‑world comparative testing, test vulnerabilities, and explore configurations like sequential pipelines or voting ensembles.

- Treat model evaluation as an evolving process that adapts to changing needs and advancing technology, with special attention to agentic AI capabilities such as reasoning, planning, and collaboration.

Context and background

Amazon Bedrock is a fully managed service that provides a single API to access a curated set of high-performing foundation models from AI21 Labs, Anthropic, Cohere, DeepSeek, Luma, Meta, Mistral AI, poolside (coming soon), Stability AI, TwelveLabs (coming soon), Writer, and Amazon. This API-driven model interchangeability offers flexibility for building generative AI applications with built-in security, privacy, and responsible AI features. It also raises a critical question for data scientists and ML engineers: which model will deliver optimal performance for a given application while meeting operational constraints?

The framework discussed here merges theoretical evaluation models with practical Bedrock-specific implementation strategies to help answer that question. The authors' research with enterprise customers indicates that many early GenAI projects rely on limited manual testing or reputational signals rather than systematic evaluation aligned to business requirements, which can lead to suboptimal choices. The framework is designed for Bedrock implementations and uses two key capabilities: Amazon Bedrock Evaluations and the Amazon Bedrock model information API.

Models vary along multiple dimensions that interact in complex ways, so a structured capability matrix helps teams navigate these tradeoffs and select the models that best fit their use case. For teams exploring agentic AI applications, additional evaluation considerations cover reasoning, planning, and collaboration, including multi-agent collaboration tests in which multiple specialized agents work together.

For readers seeking broader material on LLM evaluation, the post also points to related material such as LLM-as-a-judge on Amazon Bedrock Model Evaluation, which offers complementary evaluation insights. The details of that external material are outside this post's scope, but the framework is designed to work alongside such approaches.

What’s new

This post presents a comprehensive evaluation methodology optimized for Amazon Bedrock implementations. The core idea is to move beyond traditional metrics like accuracy, latency, and cost, and to incorporate four core dimensions that capture how models perform in real enterprise contexts: Task performance, Architectural characteristics, Operational considerations, and Responsible AI attributes. The approach includes forward‑compatible patterns as the foundation model landscape evolves, ensuring that evaluation remains relevant as new providers and models appear. A key emphasis is on agentic AI applications, where evaluating reasoning, planning, and collaboration capabilities becomes essential for success. The framework combines theoretical structures with practical Bedrock tools to help data scientists and ML engineers make informed, business‑aligned decisions. The Bedrock model information API serves as the primary mechanism to filter candidates by hard requirements, while the Bedrock model catalog provides supplementary data when API filters are not sufficient. The framework also stresses the importance of transforming raw evaluation data into actionable insights via Bedrock Evaluations and leveraging real‑world data collected through routing and other comparative testing techniques to guide model selection.
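The hard-requirement filtering described above might look roughly like the following sketch. It assumes the model information API is reached through the boto3 `bedrock` client's `list_foundation_models` call and that each entry of `modelSummaries` carries fields such as `inputModalities`, `outputModalities`, `responseStreamingSupported`, and `modelLifecycle`. The region, the helper names, and the model IDs in `SAMPLE_SUMMARIES` are hypothetical and used only for illustration.

```python
from __future__ import annotations

from typing import Iterable, Optional


def fetch_model_summaries(region_name: str = "us-east-1") -> list[dict]:
    """Fetch model metadata from Amazon Bedrock (requires AWS credentials)."""
    import boto3  # imported lazily so filter_candidates works without boto3

    client = boto3.client("bedrock", region_name=region_name)
    return client.list_foundation_models()["modelSummaries"]


def filter_candidates(
    summaries: Iterable[dict],
    required_input: set[str],
    required_output: set[str],
    need_streaming: bool = False,
    allowed_providers: Optional[set[str]] = None,
) -> list[str]:
    """Return the IDs of models that satisfy every hard requirement."""
    selected = []
    for model in summaries:
        # Every required modality must be supported by the model.
        if not required_input <= set(model.get("inputModalities", [])):
            continue
        if not required_output <= set(model.get("outputModalities", [])):
            continue
        if need_streaming and not model.get("responseStreamingSupported", False):
            continue
        if allowed_providers is not None and model.get("providerName") not in allowed_providers:
            continue
        # Skip models that are flagged as legacy.
        if model.get("modelLifecycle", {}).get("status") == "LEGACY":
            continue
        selected.append(model["modelId"])
    return selected


# Hypothetical entries shaped like items of "modelSummaries" (not real models).
SAMPLE_SUMMARIES = [
    {"modelId": "example.text-a-v1", "providerName": "ExampleCo",
     "inputModalities": ["TEXT"], "outputModalities": ["TEXT"],
     "responseStreamingSupported": True, "modelLifecycle": {"status": "ACTIVE"}},
    {"modelId": "example.image-b-v1", "providerName": "ExampleCo",
     "inputModalities": ["TEXT"], "outputModalities": ["IMAGE"],
     "responseStreamingSupported": False, "modelLifecycle": {"status": "ACTIVE"}},
    {"modelId": "other.text-old-v1", "providerName": "OtherCo",
     "inputModalities": ["TEXT"], "outputModalities": ["TEXT"],
     "responseStreamingSupported": True, "modelLifecycle": {"status": "LEGACY"}},
    {"modelId": "other.text-batch-v1", "providerName": "OtherCo",
     "inputModalities": ["TEXT"], "outputModalities": ["TEXT"],
     "responseStreamingSupported": False, "modelLifecycle": {"status": "ACTIVE"}},
]

if __name__ == "__main__":
    # Offline demo; replace SAMPLE_SUMMARIES with fetch_model_summaries() to query Bedrock.
    print(filter_candidates(SAMPLE_SUMMARIES, {"TEXT"}, {"TEXT"}, need_streaming=True))
```

Keeping the filter a pure function over the summaries makes it easy to test offline and to add further hard requirements that the API does not expose, using data taken from the model catalog.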

Why it matters (impact for developers/enterprises)

For developers and enterprises, selecting the right foundation model directly influences outcomes such as ROI, user adoption, trust, and long‑term sustainability of GenAI deployments. A thoughtful evaluation framework helps avoid common pitfalls like over‑provisioning, misalignment with use case needs, and excessive operational costs. By explicitly weighting requirements and systematically narrowing the candidate set, organizations can balance performance with cost and operational feasibility, while staying aligned with business objectives and user expectations. The methodology also supports ongoing adaptation as models evolve, reducing the risk of late discovery of performance issues and enabling continuous improvement in production AI systems.

Technical details or Implementation

The framework follows a practical, stepwise approach that teams can apply within Bedrock.

- Precisely specify application requirements: Define what success looks like for the use case and assign weights to requirements to form a scoring foundation for evaluation. This helps translate business priorities into measurable criteria.

- Filter candidates with Bedrock information: Use the Amazon Bedrock model information API to exclude models that fail to meet hard requirements. This initial filtering typically reduces candidates from dozens to a manageable 3–7 models for deeper analysis. If API filters are insufficient for a given scenario, consult the Bedrock model catalog to obtain additional information about models.

- Implement structured evaluation with Bedrock Evaluations: Collect evaluation data and transform it into actionable insights. Bedrock Evaluations provides a framework to organize and compare model performance across the defined dimensions.

- Go beyond standard procedures with comparative testing: Use Bedrock’s routing capabilities to gather real‑world performance data from actual users. This helps capture how models behave under realistic workloads and traffic patterns.

- Test vulnerabilities and edge cases: Assess model robustness by attempting prompt injections, challenging syntax, edge cases, and domain‑specific factual challenges. Such testing informs resilience and reliability considerations.

- Explore combinations and configurations: Evaluate sequential pipelines, voting ensembles, and cost‑efficient routing strategies that match task complexity. This helps identify the most effective composition of models and routing patterns for a given workflow.

- Design production monitoring: Establish monitoring of production performance that reflects the requirements of your sector. Ongoing monitoring is essential to detect drift, regressions, or changing business needs and to adjust model selections accordingly.

- Recognize the evolving landscape: Different sectors have unique requirements that influence model selection, and the foundation model landscape continues to evolve. The framework is designed to stay relevant as models improve and new providers enter Bedrock. The approach highlights that evaluation is not a one‑time exercise but an ongoing process that adapts to changing needs and capabilities. For agentic AI applications, the framework calls for thorough evaluation of reasoning, planning, and collaboration capabilities, including cross‑agent coordination where applicable.
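The first step in the list above, weighting requirements to form a scoring foundation, could be sketched as a simple weighted average over the four core dimensions. The dimension names come from the framework. The weights, the candidate names, and the per-dimension scores (assumed here to be normalized to the range 0 to 1) are hypothetical placeholders, and the source does not prescribe this particular formula.

```python
from __future__ import annotations

# Hypothetical weights reflecting one team's priorities.
WEIGHTS = {
    "task_performance": 0.40,
    "architecture": 0.20,
    "operations": 0.25,
    "responsible_ai": 0.15,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-dimension scores, normalized by the total weight."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[dim] * scores[dim] for dim in weights) / total_weight


def rank_models(
    candidates: dict[str, dict[str, float]], weights: dict[str, float]
) -> list[tuple[str, float]]:
    """Return (model, score) pairs sorted from best to worst."""
    scored = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical evaluation results for two shortlisted candidates.
CANDIDATES = {
    "model-a": {"task_performance": 0.9, "architecture": 0.6,
                "operations": 0.5, "responsible_ai": 0.8},
    "model-b": {"task_performance": 0.7, "architecture": 0.8,
                "operations": 0.9, "responsible_ai": 0.9},
}

if __name__ == "__main__":
    for name, score in rank_models(CANDIDATES, WEIGHTS):
        print(f"{name}: {score:.3f}")
```

With these placeholder numbers, model-b ranks ahead of model-a (about 0.800 versus 0.725) even though model-a has the higher task performance score, which illustrates how explicit weights surface tradeoffs between dimensions.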

Key takeaways

- Four core dimensions anchor Bedrock model evaluation: task performance, architectural characteristics, operational considerations, and responsible AI attributes.

- Begin with a precise requirements specification and use the Bedrock information API to narrow candidates before in‑depth testing.

- Bedrock Evaluations helps translate evaluation data into actionable insights and guides data-driven decisions.

- Real‑world testing, vulnerability assessment, and configuration strategies (e.g., routing and ensembles) improve reliability and cost efficiency.

- Evaluation must evolve as the model landscape changes, with special attention to agentic AI capabilities when relevant.
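One of the configuration strategies mentioned in the takeaways, a voting ensemble, might be sketched as a majority vote over the answers of several models. This is a minimal illustration, not the source's implementation: each model is represented by a plain callable (in practice it would wrap a model invocation), and the function names, the normalization rule, and the stub models are assumptions made for the example.

```python
from __future__ import annotations

from collections import Counter
from typing import Callable, Sequence


def _normalize(answer: str) -> str:
    """Make answers comparable by trimming whitespace and lowercasing."""
    return answer.strip().lower()


def majority_vote(
    prompt: str,
    models: Sequence[Callable[[str], str]],
    normalize: Callable[[str], str] = _normalize,
) -> tuple[str, float]:
    """Ask every model and return (winning answer, share of models that agreed).

    Ties are resolved in favor of the answer that appeared first in model order.
    """
    if not models:
        raise ValueError("at least one model is required")
    answers = [normalize(model(prompt)) for model in models]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


if __name__ == "__main__":
    # Stub models standing in for real model calls (hypothetical).
    stubs = [
        lambda p: "Positive",
        lambda p: " positive ",
        lambda p: "negative",
    ]
    label, agreement = majority_vote("Classify the sentiment: great service!", stubs)
    print(label, round(agreement, 2))
```

A scheme like this suits tasks with a small set of discrete answers, such as classification. The agreement share can also serve as a rough confidence signal, for example to route low-agreement cases to a stronger model or to human review.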

FAQ

- What is Bedrock Evaluations and how does it help?

  Bedrock Evaluations provides a framework to collect, organize, and transform evaluation data into actionable insights for Bedrock models, supporting structured, comparative analysis and decision making.

- How many models should be considered before deep evaluation?

  The methodology uses filtering to reduce candidates, typically from dozens to 3–7 models worth detailed evaluation.

- Should agentic AI capabilities be part of the evaluation?

  Yes. For agentic AI applications, it is essential to evaluate reasoning, planning, and collaboration capabilities, including multi-agent collaboration testing when multiple specialized agents are involved.

- Is this framework specific to Bedrock, or applicable to any LLM evaluation?

  The framework is optimized for Bedrock implementations and leverages Bedrock Evaluations and the Bedrock model information API as core tools.

References

More news

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

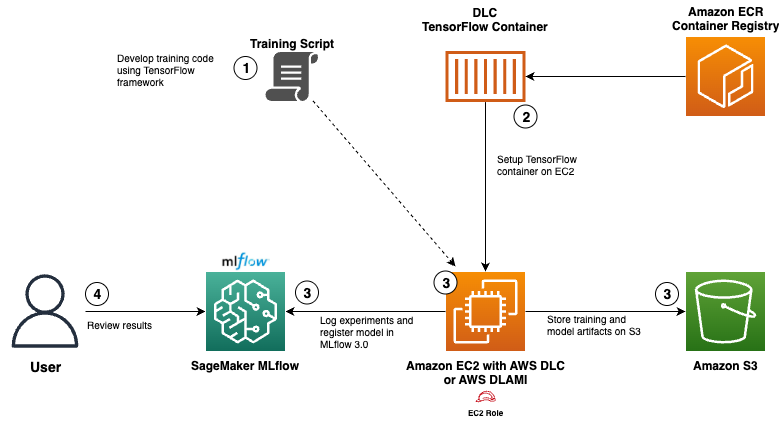

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

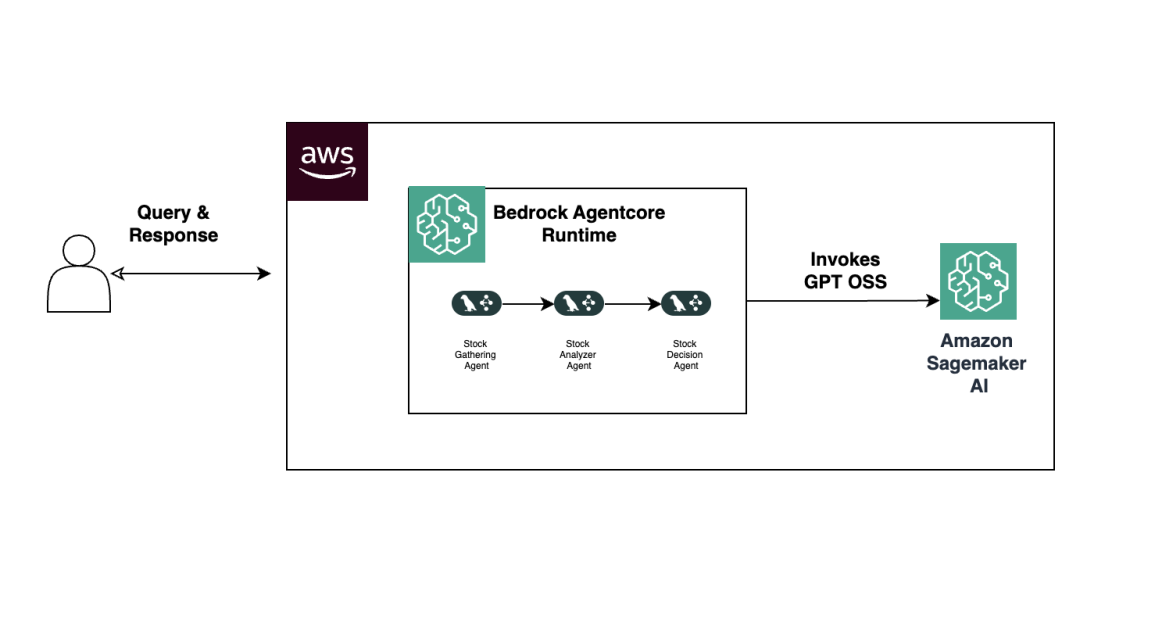

Build Agentic Workflows with OpenAI GPT OSS on SageMaker AI and Bedrock AgentCore

An end-to-end look at deploying OpenAI GPT OSS models on SageMaker AI and Bedrock AgentCore to power a multi-agent stock analyzer with LangGraph, including 4-bit MXFP4 quantization, serverless orchestration, and scalable inference.