Enhance AI Agents with Predictive ML Models via SageMaker AI and MCP

TL;DR

- AI agents can leverage predictive ML models hosted on Amazon SageMaker AI as tools to inform data-driven decisions.

- The Model Context Protocol (MCP) enables dynamic discovery of tools and decouples agent execution from tool execution for scalability and security.

- The Strands Agents SDK provides a model-driven approach to build AI agents quickly, with two access paths to SageMaker endpoints: direct endpoint calls or MCP-based tool invocation.

- For production-ready deployments, MCP is recommended due to its decoupled architecture and centralized permission handling; direct endpoint access remains a valid option for simpler scenarios.

- The workflow demonstrated trains a time-series forecasting model, deploys it to SageMaker AI, and exposes it to an AI agent for real-time predictions, illustrating end-to-end capability from data to decision.

Context and background

Machine learning (ML) has evolved from an experimental discipline into an integral part of business operations. Organizations deploy ML models for sales forecasting, customer segmentation, and churn prediction. While generative AI has reshaped customer experiences, traditional ML remains essential for data-driven predictions, particularly in tasks like forecasting and segmentation where established algorithms (e.g., random forests, gradient boosting, ARIMA, LSTM, linear models) excel. Generative AI shines in creative and interactive tasks, but predictive accuracy for data-driven decisions often benefits from traditional ML methods.

To enable AI agents to make data-driven decisions, this approach combines predictive ML models hosted on Amazon SageMaker AI with the Model Context Protocol (MCP), an open protocol that standardizes how applications provide context to large language models (LLMs). The result is a workflow in which AI agents can access ML models hosted on SageMaker AI as tools, driving more informed actions while balancing cost and performance. The Strands Agents SDK, an open source toolkit, supports a model-driven agent design: developers define an agent with a prompt and a set of tools, which allows rapid development from simple assistants to complex autonomous workflows.

Two primary paths exist for connecting agents to SageMaker endpoints: invoking endpoints directly from the agent code, or routing through MCP to enable dynamic tool discovery and decoupled execution. Direct endpoint access embeds tool calls in the agent, whereas MCP introduces an MCP server that discovers tools, enforces interfaces, and manages the flow between the agent and model endpoints. For scalable and secure implementations, the MCP approach is recommended, though the direct path is discussed to illustrate the alternatives.

SageMaker AI can host multiple models behind a single endpoint via inference components and multi-model endpoints. Although the demonstration uses a single model, the post highlights these methods for consolidating resources and optimizing response times when deploying multiple predictive models.

The post presents a complete workflow: a user interacts with a chat-based interface powered by an LLM, the Strands agent decides when a prediction is needed, and the agent calls the SageMaker AI endpoint to obtain a forecast. The response feeds back into the conversation or downstream processes, enabling real-time, intelligent responses. The project’s complete code is available on GitHub, and readers are encouraged to explore real implementations and related SageMaker AI documentation for deeper details.

What’s new

This approach showcases how AI agents can be enhanced with predictive ML by exposing ML models as tools that agents can call at runtime. The key innovations include:

- Integrating predictive ML models hosted on SageMaker AI with AI agents via Strands Agents SDK.

- Providing two invocation modalities: direct SageMaker endpoint calls embedded in agent code, and MCP-based tool invocation that enables discovery and decoupled execution.

- Recommending MCP for scalable and secure deployments, while also presenting the direct endpoint path for flexibility.

- Noting that SageMaker AI can host multiple models behind a single endpoint using inference components or multi-model endpoints, which consolidates resources and can improve response times (the demonstration itself deploys a single model).

- Walking through a practical workflow that trains a time-series forecasting model (XGBoost), deploys it to SageMaker AI, and exposes it to an AI agent for end-to-end decision-making.

- Showing how to expose the endpoint as a Strands Agent tool (via @tool) and how to invoke it from an agent, including docstrings to aid model-driven usage.

- Pointing to the potential to integrate predictions into BI tools (e.g., Amazon QuickSight) or enterprise systems (ERP/CRM) to operationalize insights.

Why it matters (impact for developers/enterprises)

By combining predictive ML with conversational AI, organizations can empower AI agents to support data-driven decision-making in real time. This approach lowers the barrier to ML adoption by letting agents leverage predictive insights without requiring deep ML expertise in every development team. The MCP-driven workflow enables scalable and secure tool usage, allowing enterprises to add more predictive capabilities over time without tightly coupling agents to model endpoints. For developers, the approach provides a template for building intelligent agents that can reason about data, forecast outcomes, and integrate with business systems. For enterprises, it enables faster analytics-to-action cycles, better alignment between operational systems and forecasting models, and improved governance through a centralized MCP server layer that handles tool permissions. The combination of SageMaker AI’s hosting capabilities and the Strands Agents SDK’s model-driven design supports rapid experimentation and production-grade deployments alike.

Technical details and implementation

The solution follows a clear lifecycle and architecture:

- Data and model preparation: Create synthetic time-series data to simulate demand with trend, seasonality, and noise. Perform feature engineering to extract temporal features such as day of week, month, and quarter to capture seasonality effects.

- Model training and deployment: Train an XGBoost regression model using the SageMaker AI first-party (built-in) container to predict future demand. Package and deploy the model to a SageMaker AI endpoint to enable real-time inference.

- Endpoint invocation: Implement a function like invoke_endpoint() with a descriptive docstring so that it can be used as a tool by LLMs. This function can be turned into a Strands Agent tool with the @tool decorator and then invoked from a Strands agent.

- Two integration paths:

  - Direct endpoint access: The agent calls the SageMaker endpoint directly from its code, enabling straightforward access to model inference without extra infrastructure.

  - MCP-based access: The client discovers tools exposed by the MCP server, formats requests using the tool interface, and calls the SageMaker inference endpoint through the MCP layer. The MCP approach decouples agent logic from tool execution and centralizes permission controls, improving security and scalability.

- MCP server and tool schemas: To use MCP at scale, implement an MCP server (e.g., with the FastMCP framework) that wraps the SageMaker endpoint and exposes it as a tool with a defined interface. A tool schema clearly defines input parameters and return values to facilitate straightforward use by AI agents.

- Resource considerations: SageMaker AI offers two methods to host multiple models behind a single endpoint—inference components and multi-model endpoints. While the post demonstrates a single-model setup for clarity, the guidance points toward efficient resource utilization when handling several models.

- End-to-end workflow: A user interacts via a chat interface or app, the Strands agent processes the request and decides whether to invoke the ML model, and the SageMaker AI prediction is returned to the user interface. The system can then generate plots, feed BI tools, or update ERP/CRM systems as part of the business process.

- Practical notes: The article emphasizes that this pattern enables agents to access ML capabilities without deep ML expertise, illustrating a practical path to add predictive intelligence to AI agents.
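The data-preparation step in the list above can be sketched with the standard library alone. This is a minimal illustration, not the post's code: the trend slope, the weekly seasonality, the noise level, and the function names `synthetic_demand` and `temporal_features` are all assumptions chosen for the example.

```python
import datetime as dt
import math
import random


def synthetic_demand(start: dt.date, days: int, seed: int = 42) -> list[tuple[dt.date, float]]:
    """Generate daily demand as trend + weekly seasonality + Gaussian noise."""
    rng = random.Random(seed)  # fixed seed keeps the series reproducible
    series = []
    for i in range(days):
        day = start + dt.timedelta(days=i)
        trend = 100.0 + 0.5 * i  # assumed slow upward drift
        seasonal = 10.0 * math.sin(2 * math.pi * day.weekday() / 7)  # assumed weekly cycle
        noise = rng.gauss(0.0, 5.0)  # assumed noise level
        series.append((day, trend + seasonal + noise))
    return series


def temporal_features(day: dt.date) -> list[int]:
    """Return [day_of_week, month, quarter]; Monday is 0, quarters run 1 to 4."""
    return [day.weekday(), day.month, (day.month - 1) // 3 + 1]


# Pair each feature row with its target value, as a training table would.
rows = [
    (temporal_features(day), demand)
    for day, demand in synthetic_demand(dt.date(2024, 1, 1), days=365)
]
```

Each element of `rows` holds a feature list and the demand value for that day, which is the shape a tabular regressor such as XGBoost expects after conversion to CSV or a data frame.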

Tool invocation options at a glance

| Aspect | Direct endpoint access | MCP-based tool invocation |
|---|---|---|
| Discovery mechanism | Tool calls embedded in agent code | Dynamic discovery via an MCP server |
| Architectural component | No MCP server required | Introduces an MCP server to mediate calls |
| Security pattern | Permissions and calls managed at the agent/tool level | Centralized permissions and governance via MCP server |
| Deployment considerations | Simpler and faster to prototype | Scalable and secure for production; supports multiple tools |
| Recommendation | Useful for quick experiments and smaller setups | Recommended for scalable deployments and security controls |
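The direct-endpoint path might look roughly like the sketch below. It assumes a hypothetical endpoint name (`demand-forecast-endpoint`), a model that accepts one CSV row of `[day_of_week, month, quarter]` in the same order used at training time, and a response body containing a single number. The helper names are illustrative, and `boto3` and `strands` are imported lazily so the parsing logic can be exercised without AWS access.

```python
import datetime as dt

ENDPOINT_NAME = "demand-forecast-endpoint"  # hypothetical endpoint name


def temporal_features(day: dt.date) -> list[int]:
    """Return [day_of_week, month, quarter] for a calendar date."""
    return [day.weekday(), day.month, (day.month - 1) // 3 + 1]


def invoke_endpoint(features: list[int], client=None) -> float:
    """Send one CSV feature row to the SageMaker endpoint and return the forecast."""
    if client is None:
        import boto3  # lazy import: needed only when calling a real endpoint
        client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="text/csv",
        Body=",".join(str(value) for value in features),
    )
    # Assumes the model replies with a single numeric value as text.
    return float(response["Body"].read().decode("utf-8").strip())


def forecast_demand(date_iso: str) -> float:
    """Forecast product demand for a given date.

    Args:
        date_iso: Target date in ISO format, for example "2025-07-01".
    """
    return invoke_endpoint(temporal_features(dt.date.fromisoformat(date_iso)))


def build_agent():
    """Wrap forecast_demand as a Strands tool and attach it to an agent."""
    from strands import Agent, tool  # assumes the strands-agents package is installed
    return Agent(tools=[tool(forecast_demand)])
```

With credentials and a deployed endpoint in place, `build_agent()("What is the demand forecast for 2025-07-01?")` would let the LLM decide to call the tool; the docstring on `forecast_demand` is what the model sees when choosing it.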

- How to choose: For rapid prototyping or small-scale experimentation, direct endpoint calls can be sufficient. For production environments requiring dynamic tool discovery, centralized control, and robust security, MCP is the preferred path.

- Code and references: The complete code for this solution is available on GitHub, and readers are encouraged to consult the SageMaker AI documentation for details on inference components and multi-model endpoints.
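For comparison, the MCP-based path could be sketched as a small server that wraps the same endpoint call. This is an illustration under assumptions rather than the post's implementation: the endpoint name, server name, and function names are hypothetical, the input is assumed to be the three temporal features, and the `mcp` Python package (which provides `FastMCP`) is assumed to be installed. FastMCP derives the tool's input schema from the function's type hints and docstring.

```python
ENDPOINT_NAME = "demand-forecast-endpoint"  # hypothetical endpoint name


def call_endpoint(features, client) -> float:
    """Send one CSV feature row through `client` and parse the numeric reply."""
    response = client.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="text/csv",
        Body=",".join(str(value) for value in features),
    )
    # Assumes the model replies with a single numeric value as text.
    return float(response["Body"].read().decode("utf-8").strip())


def predict_demand(day_of_week: int, month: int, quarter: int) -> float:
    """Forecast demand from temporal features.

    Args:
        day_of_week: 0 (Monday) through 6 (Sunday).
        month: 1 through 12.
        quarter: 1 through 4.
    """
    import boto3  # lazy import: only the server process needs AWS credentials
    client = boto3.client("sagemaker-runtime")
    return call_endpoint([day_of_week, month, quarter], client)


def build_server():
    """Register predict_demand as an MCP tool on a FastMCP server."""
    from mcp.server.fastmcp import FastMCP  # assumes the mcp package is installed
    server = FastMCP("sagemaker-forecast")  # hypothetical server name
    server.tool()(predict_demand)
    return server
```

Running `build_server().run(transport="stdio")` would start the server over standard input and output. The agent then holds no AWS credentials itself: it discovers `predict_demand` through the MCP client, and permissions for the endpoint live with the server process.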

Key takeaways

- Predictive ML models hosted on SageMaker AI can function as tools within AI agents to improve decision-making.

- MCP provides dynamic tool discovery and a decoupled architecture between agents and tools, with a dedicated server handling tool execution and permissions.

- The Strands Agents SDK enables model-driven agent development, letting developers define agents with prompts and tools rather than bespoke integration code.

- SageMaker AI supports hosting multiple models behind a single endpoint via inference components or multi-model endpoints, enabling efficient resource use.

- The demonstrated workflow combines synthetic time-series data, feature engineering, XGBoost modeling, endpoint deployment, and agent-based invocation to illustrate end-to-end predictive decision-making.

FAQ

- What problem does MCP solve for AI agents?

  MCP enables dynamic discovery of tools and decouples agent execution from tool execution by introducing an MCP server, improving scalability and security.

- How can an AI agent access SageMaker AI models?

  The agent can either invoke SageMaker endpoints directly or use MCP to discover and call the tools exposed by the MCP server.

- What options exist for hosting multiple models behind a single endpoint?

  SageMaker AI offers inference components and multi-model endpoints to consolidate models behind one endpoint, reducing resource use and latency when needed.

- What is the end-to-end workflow in the example?

  Train a time-series model, deploy it to SageMaker AI, expose it as a Strands Agent tool, invoke from the agent, and present predictions to users or downstream systems.

References

More news

Shadow Leak shows how ChatGPT agents can exfiltrate Gmail data via prompt injection

Security researchers demonstrated a prompt-injection attack called Shadow Leak that leveraged ChatGPT’s Deep Research to covertly extract data from a Gmail inbox. OpenAI patched the flaw; the case highlights risks of agentic AI.

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

Prompting for precision with Stability AI Image Services in Amazon Bedrock

Amazon Bedrock now offers Stability AI Image Services, extending Stable Diffusion and Stable Image with nine tools for precise image creation and editing. Learn prompting best practices for enterprise use.

Monitor Amazon Bedrock batch inference using Amazon CloudWatch metrics

Learn how to monitor and optimize Amazon Bedrock batch inference jobs with CloudWatch metrics, alarms, and dashboards to improve performance, cost efficiency, and operational oversight.

Scale visual production using Stability AI Image Services in Amazon Bedrock

Stability AI Image Services are now available in Amazon Bedrock, delivering ready-to-use media editing via the Bedrock API and expanding on Stable Diffusion models already in Bedrock.

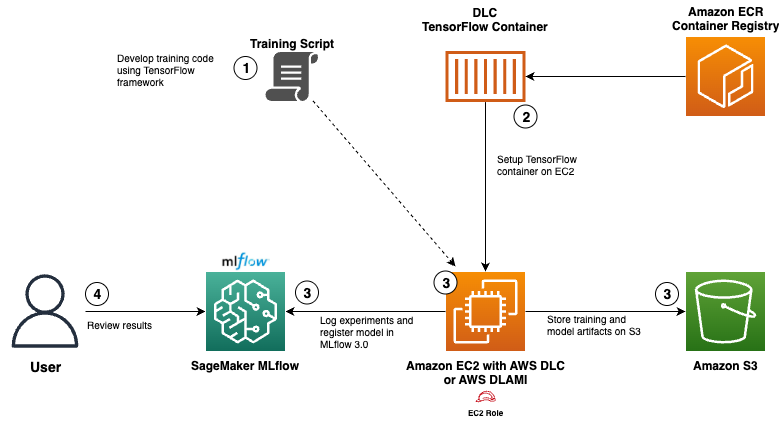

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.