Build a cost-effective travel planning agentic workflow with Amazon Nova

TL;DR

- AWS demonstrates a travel planning agent built with Amazon Nova models and LangGraph orchestration to simplify multi-step travel workflows.

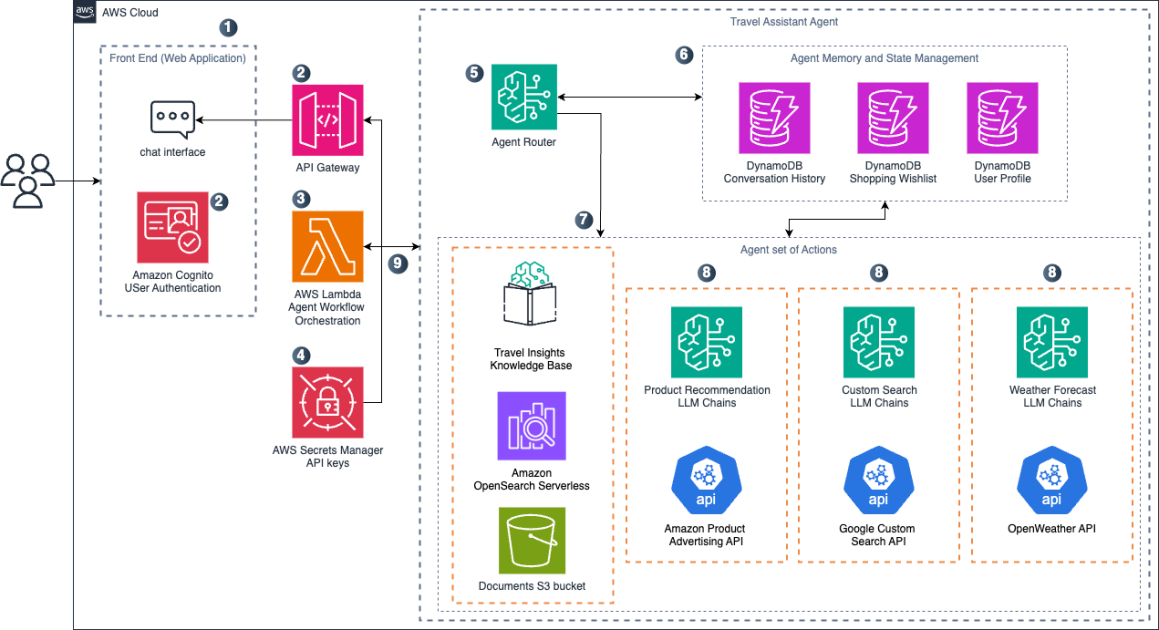

- The solution uses a three-layer, serverless architecture (frontend, core processing, integration) deployed with AWS CDK and CloudFormation.

- Amazon Nova Lite and Pro models are used selectively across 14 action nodes and a routing node; models support a 300,000-token context window and more than 200 languages.

Context and background

Travel planning requires coordinating multiple decisions (accommodations, activities, local transport, weather, and shopping), which can be time-consuming. Agentic workflows, in which large language models (LLMs) access external tools, offer a way to automate these multi-step, stateful processes. In a recent AWS Machine Learning blog post, the AWS Generative AI Innovation Center team describes a practical travel planning assistant that combines Amazon Nova foundation models and LangGraph orchestration to manage complex travel planning tasks while controlling operational cost. The project demonstrates how to apply LLMs not just for single-turn generation but as part of a stateful, multi-node system that calls external services and keeps session context. The reference implementation emphasizes serverless deployment patterns and modular components so teams can adapt the architecture to their own APIs and data sources.

What’s new

- A fully described travel planning agent that runs on a serverless Lambda architecture using Docker containers and is deployable with the AWS Cloud Development Kit (AWS CDK).

- A LangGraph-based graph architecture with a router node and 14 action nodes, where the router analyzes queries and dispatches the appropriate action nodes.

- Selective use of Amazon Nova Lite for the router and simpler tasks and Amazon Nova Pro for five complex nodes that require advanced instruction following and multi-step operations.

- Demonstrated features including web research, personalized recommendations, weather lookups, product searches, and shopping cart integration with live Amazon.com product links.
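The router-and-action-node pattern described above can be illustrated with a minimal, library-free sketch. It does not use the LangGraph API or an LLM: keyword rules stand in for the Nova Lite router, and the three node names, the keyword table, and the fallback choice are all hypothetical, chosen only to show how a router can dispatch one query to one or more action nodes.

```python
import re
from typing import Callable, Dict, List, Set

# Hypothetical action nodes. In the described solution each node runs an
# LLM chain; here each one just returns a labeled placeholder string.
def weather_node(query: str) -> str:
    return f"[weather] forecast lookup for: {query}"

def itinerary_node(query: str) -> str:
    return f"[itinerary] plan drafted for: {query}"

def shopping_node(query: str) -> str:
    return f"[shopping] product search for: {query}"

ACTION_NODES: Dict[str, Callable[[str], str]] = {
    "weather": weather_node,
    "itinerary": itinerary_node,
    "shopping": shopping_node,
}

# Assumption: keyword rules replace the LLM-based router for illustration.
ROUTING_KEYWORDS: Dict[str, Set[str]] = {
    "weather": {"weather", "forecast", "rain"},
    "itinerary": {"itinerary", "plan", "schedule"},
    "shopping": {"buy", "pack", "shop"},
}

def route(query: str) -> List[str]:
    """Return the names of the action nodes that should handle the query."""
    tokens = set(re.findall(r"[a-z]+", query.lower()))
    selected = [name for name, words in ROUTING_KEYWORDS.items() if tokens & words]
    # Fall back to a default node when no rule matches (an arbitrary choice).
    return selected or ["itinerary"]

def run_turn(query: str) -> List[str]:
    """Dispatch the query to every selected node and collect the results."""
    return [ACTION_NODES[name](query) for name in route(query)]

if __name__ == "__main__":
    for line in run_turn("Plan my trip and tell me what to pack"):
        print(line)
```

In a real graph, the routing decision would come from the model's analysis of the query, and the nodes would share a state object rather than exchange plain strings.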

Why it matters (impact for developers and enterprises)

- Practical cost-performance balance: By combining Amazon Nova Lite and Pro models where appropriate, teams can optimize latency, accuracy, and cost for production deployments.

- Extensible orchestration: The LangGraph-based core shows how to structure stateful agentic workflows with specialized nodes, making it easier to add new capabilities or integrate proprietary APIs and data stores.

- Serverless scalability: Implementing the solution with AWS Lambda, DynamoDB, and CDK enables horizontal scaling and a path to production without maintaining dedicated servers.

- Personalization and commerce integration: Sample profiles in DynamoDB and live product links demonstrate how conversational assistants can connect recommendations and commerce flows, useful for travel platforms and retail integrations.

Technical details and implementation

Architecture overview

The solution is implemented as a three-layer architecture:

- Frontend interaction layer: Handles user authentication and the web app interaction. The deployment outputs an API endpoint and a web application domain for the frontend.

- Core processing layer: A LangGraph-based graph where a router node orchestrates 14 action nodes. Nodes perform distinct functions such as itinerary planning, weather checks, and shopping cart actions. Each node runs an LLM chain.

- Integration layer: Interfaces to external data sources and APIs. The architecture is intentionally extensible so teams can plug in their own services.

Model selection and capabilities

- Amazon Nova Lite is used for the router and simpler action nodes. It provides fast processing with strong accuracy at lower cost.

- Amazon Nova Pro is used for five complex nodes that require advanced instruction following and multi-step function calls.

- Both Nova Lite and Nova Pro models support a 300,000-token context window and can process text, image, and video inputs. They also support text in more than 200 languages.

State management and data storage

- Conversation state is kept in an AgentState TypedDict (a typed Python dictionary) that stores conversation history, user profile data, processing status, and final outputs. Declaring the field types lets static type checkers catch mismatched keys and values, which reduces errors when nodes read and update session state.

- Sample user profiles and upcoming trips are stored in DynamoDB for personalization. In production deployments, these profiles can be linked to customer databases and reservation systems.

Deployment and operational notes

- The solution repository is available on GitHub (link provided in the blog post). Deployment uses AWS CDK to generate a CloudFormation template that provisions Lambda functions, DynamoDB tables, and API configurations.

- Before deploying, the solution requires API keys from three external services. These secrets must be stored in AWS Secrets Manager with a specified JSON structure.

- The deployment workflow includes creating a .env file in the project root, optionally bootstrapping the CDK environment if it is the first use in the account/region, and running the provided deployment script. After deployment, CloudFormation stack outputs provide values such as the API endpoint URL and web app domain.

- For testing, the blog authors created a business traveler persona and evaluated routing accuracy, function calling, response quality, and latency. The system preserved conversational context across multiple steps, integrating previous decisions into subsequent recommendations.

Cleanup

- After experimentation, the CloudFormation stack can be deleted from the AWS CloudFormation console to remove the created resources.

Key takeaways

- Combining Amazon Nova Lite and Pro models enables a cost-conscious balance between performance and complex instruction-following.

- LangGraph provides a stateful orchestration framework to build multi-node agentic workflows that coordinate many specialized functions.

- A three-layer, serverless architecture supports extensibility and production-ready deployment with AWS CDK and CloudFormation.

- Typed session state (AgentState TypedDict) and DynamoDB-based profiles make personalization and reliable multi-step coordination feasible.

FAQ

- Which models are used in the travel planning agent?

The solution uses Amazon Nova Lite for the router and simpler nodes, and Amazon Nova Pro for five complex action nodes.
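As a rough illustration of this selective model use, the sketch below maps node names to a model tier. The five node names are hypothetical, since the summary does not say which nodes use Nova Pro, and the model identifiers are assumed to match the IDs Amazon Bedrock publishes for Nova Lite and Nova Pro; verify them against the Bedrock model catalog before use.

```python
# Assumed Amazon Bedrock model identifiers; verify against the Bedrock
# model catalog for your Region before relying on them.
NOVA_LITE = "amazon.nova-lite-v1:0"
NOVA_PRO = "amazon.nova-pro-v1:0"

# Hypothetical names for the five complex nodes. The source only states
# that five nodes use Nova Pro, not which ones.
PRO_NODES = frozenset({
    "itinerary_planner",
    "web_researcher",
    "product_search",
    "cart_manager",
    "recommendation_builder",
})

def model_for(node_name: str) -> str:
    """Pick Nova Pro for the complex nodes and Nova Lite for everything else."""
    return NOVA_PRO if node_name in PRO_NODES else NOVA_LITE

if __name__ == "__main__":
    for node in ("router", "weather_lookup", "itinerary_planner"):
        print(f"{node}: {model_for(node)}")
```

Keeping the choice in one lookup function makes it easy to move a node between tiers when cost or quality measurements change.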

- How does the system keep track of a conversation?

The agent uses an AgentState TypedDict (a typed Python dictionary) to store conversation history, profile data, processing status, and outputs so nodes can safely read and update session state.
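A minimal sketch of what such a typed state could look like follows. The field names, the message format, and the helper functions are assumptions made for illustration; the source names only the four kinds of data the state holds, not its actual schema.

```python
from typing import Any, Dict, List, TypedDict

class AgentState(TypedDict):
    """Hypothetical session state covering the four kinds of data named above."""
    messages: List[Dict[str, str]]   # conversation history
    user_profile: Dict[str, Any]     # profile data, e.g. loaded from DynamoDB
    status: str                      # processing status
    final_output: str                # final response text

def new_state(profile: Dict[str, Any]) -> AgentState:
    """Create an empty session state for a given user profile."""
    return AgentState(messages=[], user_profile=profile, status="pending", final_output="")

def record_reply(state: AgentState, user_text: str, reply: str) -> AgentState:
    """Return a new state with the exchange appended, leaving the input unchanged."""
    history = state["messages"] + [
        {"role": "user", "content": user_text},
        {"role": "assistant", "content": reply},
    ]
    return AgentState(
        messages=history,
        user_profile=state["user_profile"],
        status="done",
        final_output=reply,
    )

if __name__ == "__main__":
    state = new_state({"name": "Alex", "home_city": "Seattle"})
    state = record_reply(state, "Any rain in Paris next week?", "Light showers are expected.")
    print(state["status"], len(state["messages"]))
```

Note that a TypedDict is checked by static tools such as mypy rather than at runtime, so the type annotations catch mistakes during development instead of rejecting bad values while the agent runs.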

- What deployment tools are used?

Deployment is implemented with the AWS Cloud Development Kit (AWS CDK), which generates a CloudFormation template to provision Lambda functions, DynamoDB tables, and API configurations.

- Can the assistant handle non-English users?

Yes. The Amazon Nova models used in the solution support text processing across more than 200 languages.

- Are the product recommendations live?

The demonstration uses live product links to Amazon.com and includes an "Add to Amazon Cart" interaction in the sample flows.

References

- AWS blog: Create a travel planning agentic workflow with Amazon Nova — https://aws.amazon.com/blogs/machine-learning/create-a-travel-planning-agentic-workflow-with-amazon-nova/
