UICoder: Finetuning LLMs to Generate UI Code with Automated Feedback

Source: https://machinelearning.apple.com/research/uicoder

TL;DR

- UICoder investigates finetuning LLMs to generate UI code using automated feedback from compilers and multimodal models.

- The workflow starts with an existing LLM, which self-generates a large synthetic dataset; automated tools then filter, score, and deduplicate it into a refined, higher-quality dataset.

- The original LLM is then finetuned on this refined dataset to produce improved models.

- The approach was applied to several open-source LLMs and compared against baseline models using automated metrics and human preferences.

- Evaluations show the finetuned models outperform all other downloadable baselines and approach the performance of larger proprietary models.

Context and background

LLMs still struggle to consistently produce UI code that compiles and yields visually relevant designs. Existing approaches to improving generation rely on expensive human feedback or on distilling proprietary models. To address this, the work explores automated feedback from compilers and multimodal models to guide LLMs toward high-quality UI code.

The method starts with an existing LLM and iteratively produces improved models. The original model self-generates a large synthetic dataset, automated tools aggressively filter, score, and deduplicate that data into a refined, higher-quality dataset, and the original LLM is then finetuned on the result. The authors applied the approach to several open-source LLMs and compared the resulting models to baselines using both automated metrics and human preferences. The evaluation shows that the resulting models outperform all other downloadable baselines and approach the performance of larger proprietary models.

The work sits alongside contemporary research on LLM evaluation via pairwise preferences over model responses, a data signal used to guide model improvement. It is positioned within a broader research program that includes contributions to Speech and Natural Language Processing and Human-Computer Interaction, and it was accepted at the IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC) 2024.

What’s new

- The method starts with an existing LLM and iteratively produces improved models: the original model self-generates a large synthetic dataset, and automated tools aggressively filter, score, and deduplicate it into a refined, higher-quality dataset.

- The original LLM is improved by finetuning on this refined dataset.

- This approach was applied to several open-source LLMs and evaluated against baseline models using both automated metrics and human preferences.

- The evaluations showed that the resulting models outperform all other downloadable baselines and approach the performance of larger proprietary models.
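
To make the two evaluation signals mentioned above more concrete, here is a minimal Python sketch of an automated metric (the share of outputs that compile) and a human-preference metric (a pairwise win rate). The source does not say how either number was computed, so the function names, the `"tie"` label, and the choice to count a tie as half a win are all illustrative assumptions, and the brace-balance check is only a toy stand-in for a real compiler.

```python
from collections import Counter
from typing import Callable, Iterable


def compile_rate(outputs: Iterable[str], compiles: Callable[[str], bool]) -> float:
    """Fraction of generated programs accepted by the `compiles` check."""
    outputs = list(outputs)
    if not outputs:
        return 0.0
    return sum(1 for code in outputs if compiles(code)) / len(outputs)


def win_rate(judgments: Iterable[str], model: str = "A") -> float:
    """Fraction of pairwise comparisons won by `model`.

    Each judgment is the label of the preferred model, or "tie".
    Counting a tie as half a win is an assumption, not the source's rule.
    """
    counts = Counter(judgments)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return (counts[model] + 0.5 * counts["tie"]) / total


def toy_compiles(code: str) -> bool:
    """Toy stand-in for a compiler: accept code with balanced braces."""
    return code.count("{") == code.count("}")


# Hypothetical data: three generated snippets and four rater judgments.
rate = compile_rate(["{}", "{", "{ }"], toy_compiles)
preference = win_rate(["A", "A", "B", "tie"], model="A")
```

In practice `compiles` would wrap a real compiler invocation and the judgments would come from human raters comparing two models' outputs for the same prompt.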

Why it matters (impact for developers/enterprises)

For developers and organizations building UI-intensive applications, UICoder represents a pathway to more reliable UI code generation without relying on costly human feedback. By leveraging automated feedback signals from compilers and multimodal systems, teams may achieve higher quality UI code that compiles and aligns more closely with visual designs, potentially reducing development time and iteration cycles. The approach also demonstrates how open-source LLMs can be improved through self-generated data and automated quality controls, making state-of-the-art UI code generation more accessible to a broader base of developers and teams.

Technical details and implementation

- Starting point: an existing LLM serves as the baseline model.

- Synthetic data generation: the baseline model self-generates a large synthetic dataset reflecting UI code tasks.

- Automated filtering, scoring, and deduplication: the generated data is aggressively filtered, scored, and deduplicated using automated tools (including compilers and multimodal models) to form a refined, higher-quality dataset.

- Finetuning: the original LLM is finetuned on the refined dataset to produce improved models.

- Evaluation: the improved models are evaluated against baseline models using automated metrics and human preferences to assess UI code quality, compilation success, and visual relevance.

- Scope: the approach was applied to several open-source LLMs, illustrating that automated feedback-driven finetuning can yield meaningful gains across different architectures.
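
The filter, score, and deduplicate steps listed above can be sketched as a single pass over the generated samples. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Sample` type, the `refine` function, the `min_score` threshold, and exact-match deduplication on whitespace-normalized code are all hypothetical, and the `toy_compiles` and `toy_relevance` helpers merely stand in for a real compiler and a real multimodal relevance model.

```python
import hashlib
import re
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Sample:
    prompt: str  # natural-language description of the desired UI
    code: str    # UI code the model generated for that description


def normalize(code: str) -> str:
    """Collapse whitespace so trivially different copies hash identically."""
    return re.sub(r"\s+", " ", code).strip()


def refine(
    samples: Iterable[Sample],
    compiles: Callable[[str], bool],
    relevance: Callable[[str, str], float],
    min_score: float = 0.5,  # assumed threshold, not taken from the source
) -> List[Sample]:
    """Keep samples that compile, score well, and are not duplicates."""
    seen = set()
    kept = []
    for sample in samples:
        if not compiles(sample.code):
            continue  # filter: compiler feedback
        if relevance(sample.prompt, sample.code) < min_score:
            continue  # score: relevance feedback
        key = hashlib.sha256(normalize(sample.code).encode("utf-8")).hexdigest()
        if key in seen:
            continue  # deduplicate: exact match after normalization
        seen.add(key)
        kept.append(sample)
    return kept


def toy_compiles(code: str) -> bool:
    """Toy stand-in for a compiler: require balanced, non-empty braces."""
    return "{" in code and code.count("{") == code.count("}")


def toy_relevance(prompt: str, code: str) -> float:
    """Toy stand-in for a multimodal scorer: prompt-word overlap with code."""
    words = set(prompt.lower().split())
    if not words:
        return 0.0
    return sum(1 for w in words if w in code.lower()) / len(words)


samples = [
    Sample("login button", "Button { login }"),
    Sample("login button", "Button  {  login }"),  # whitespace-only duplicate
    Sample("login button", "Button { login"),      # fails the toy compile check
    Sample("settings toggle", "Text { hello }"),   # irrelevant to its prompt
]
refined = refine(samples, toy_compiles, toy_relevance)
```

Only the first sample survives here. The refined list would then be used as the finetuning set, and the whole generate-and-refine cycle could be repeated with the improved model.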

Key takeaways

- Automated feedback can guide LLMs toward higher-quality UI code without heavy reliance on human annotations.

- A self-generated synthetic data pipeline, combined with automated filtering and deduplication, can produce richer training material for finetuning.

- Finetuning the original LLM on refined data can yield models that outperform downloadable baselines and approach large proprietary models.

- The methodology integrates compilers and multimodal evaluation tools as feedback signals to improve code quality and visual fidelity.

- The work contributes to the broader understanding of how automatic signals and self-generated data can accelerate progress in UI code generation.

FAQ

- How is automated feedback used in UICoder?

Automated feedback comes from compilers and multimodal models, which are used to filter, score, and deduplicate the self-generated synthetic dataset on which the base LLM is finetuned.

- Which models were evaluated?

The approach was applied to several open-source LLMs and compared against baseline models using automated metrics and human preferences.

- What were the key outcomes?

The finetuned models outperformed all other downloadable baselines and approached the performance of larger proprietary models.

- Where can I read more about UICoder?

The detailed work is published by Apple’s machine learning research program at https://machinelearning.apple.com/research/uicoder.

References

More news

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

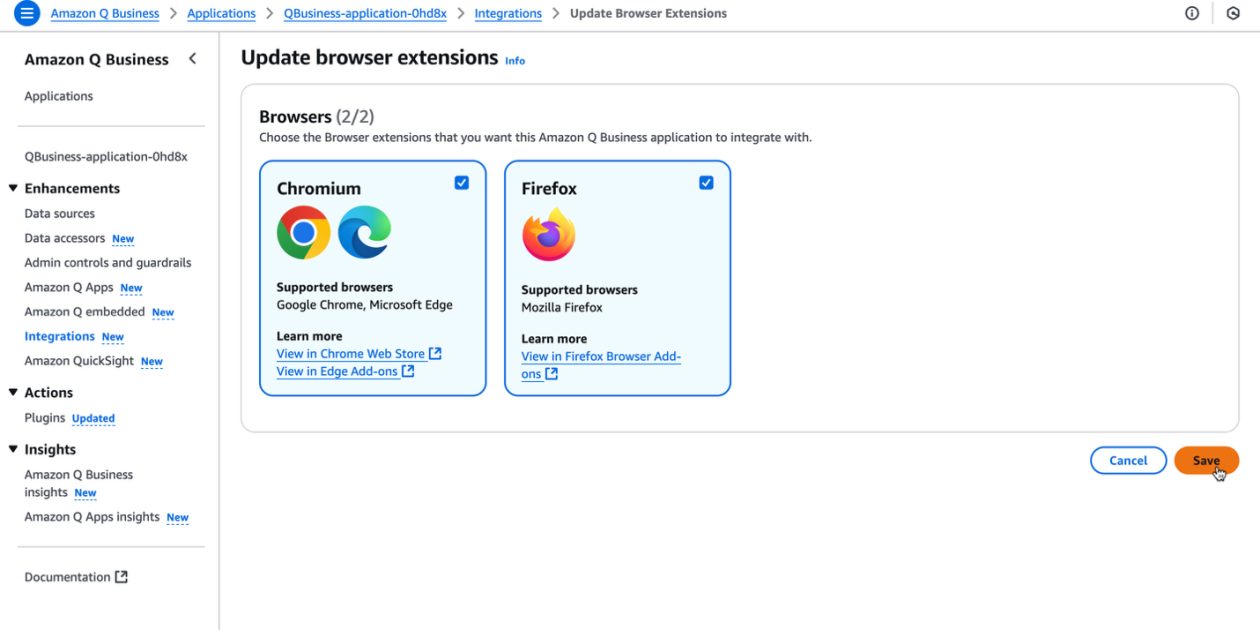

Supercharge your organization’s productivity with the Amazon Q Business browser extension

The Amazon Q Business browser extension brings context-aware, AI-driven assistance to your browser for Lite and Pro subscribers, enabling rapid, source-backed insights and seamless workflows.

Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

A detailed look at how NVIDIA Run:ai Model Streamer lowers cold-start times for LLM inference by streaming weights into GPU memory, with benchmarks across GP3, IO2, and S3 storage.

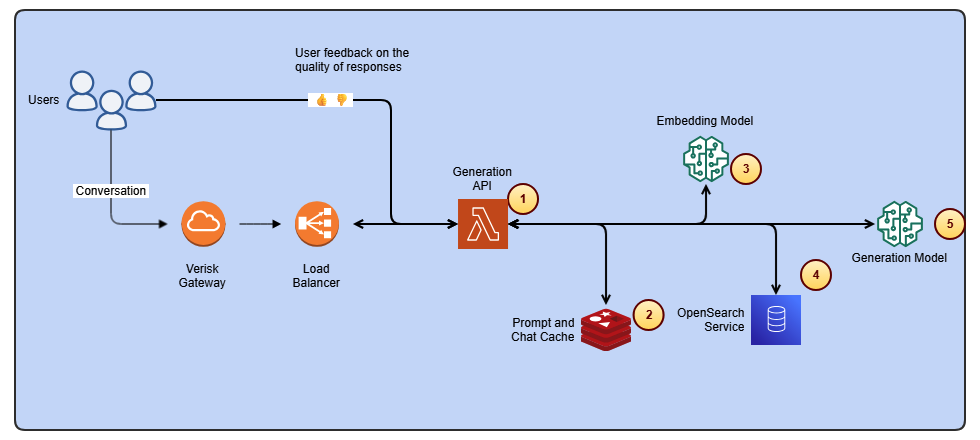

Streamline ISO-rating content changes with Verisk Rating Insights and Amazon Bedrock

Verisk Rating Insights, powered by Amazon Bedrock, LLMs, and RAG, enables a conversational interface to access ISO ERC changes, reducing manual downloads and enabling faster, accurate insights.

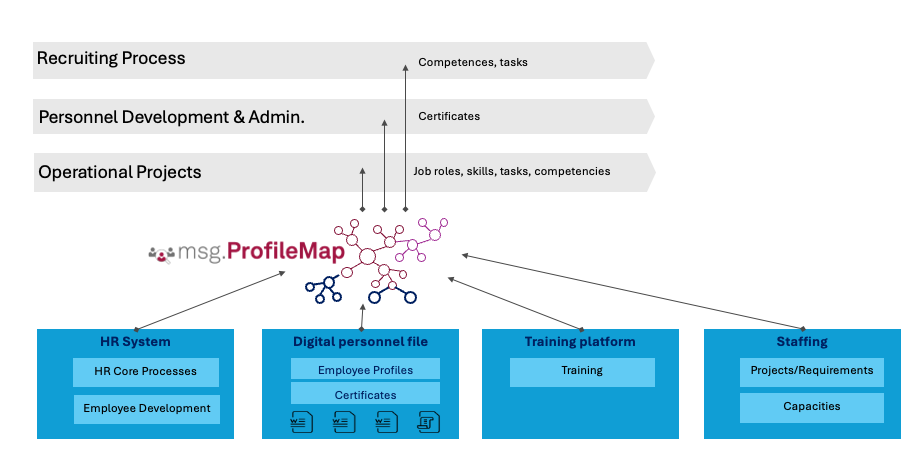

How msg enhanced HR workforce transformation with Amazon Bedrock and msg.ProfileMap

This post explains how msg automated data harmonization for msg.ProfileMap using Amazon Bedrock to power LLM-driven data enrichment, boosting HR concept matching accuracy, reducing manual workload, and aligning with EU AI Act and GDPR.

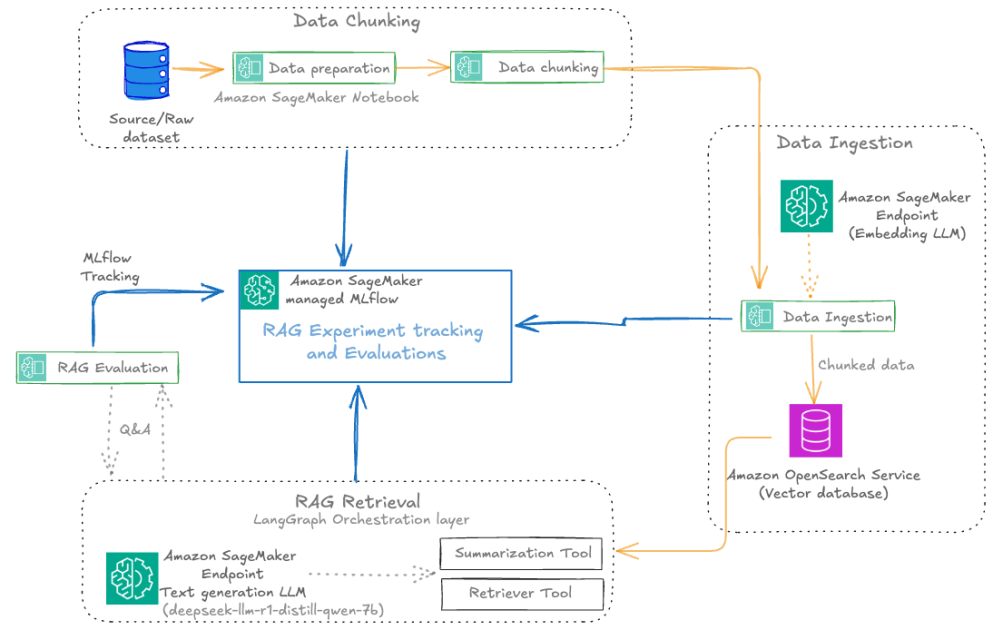

Automate advanced agentic RAG pipelines using Amazon SageMaker AI

Streamline experimentation to production for Retrieval Augmented Generation (RAG) with SageMaker AI, MLflow, and Pipelines, enabling reproducible, scalable, and governance-ready workflows.