Optimal Corpus Aware Training for Neural Machine Translation (OCAT) Boosts NMT Accuracy

Sources: https://machinelearning.apple.com/research/optimal-corpus, machinelearning.apple.com

TL;DR

- Corpus Aware Training (CAT) injects corpus metadata into each training example using a tagging approach, enabling models to learn quality and domain nuances directly from data.

- Optimal Corpus Aware Training (OCAT) fine-tunes a CAT pre-trained model by freezing most parameters and only adjusting a small set of corpus-related parameters.

- In WMT23 English→Chinese and English→German tests, OCAT achieved +3.6 and +1.8 chrF improvements over vanilla training, respectively.

- OCAT is lightweight, resilient to overfitting, and competitive with other state-of-the-art fine-tuning methods while being less sensitive to hyperparameters.

- The work highlights practical gains for neural machine translation with corpus-aware strategies and targeted, efficient fine-tuning.

Context and background

Corpus Aware Training (CAT) leverages valuable corpus metadata during training by injecting corpus information into each training example, commonly known as the “tagging” approach. Models trained with CAT inherently learn the quality, domain, and nuance between corpora directly from data, and can easily switch to different inference behavior. A key challenge with CAT, however, is how to identify and pre-define a high-quality data group before training starts, which can be error-prone and inefficient. The OCAT work proposes a practical alternative: instead of pre-selecting quality data in advance, fine-tune a CAT pre-trained model by targeting a small, corpus-related parameter set.

What’s new

The core idea of Optimal Corpus Aware Training (OCAT) is to start from a CAT pre-trained model and perform a lightweight fine-tuning process that freezes most of the model parameters. Only a small set of corpus-related parameters is updated during OCAT, making the process more parameter-efficient and potentially more robust to overfitting. The authors demonstrate the approach on widely used machine translation benchmarks and show that OCAT yields meaningful gains without requiring extensive hyperparameter search.

Why it matters (impact for developers/enterprises)

For developers and organizations building production MT systems, OCAT offers a pathway to improve translation quality with limited compute and data engineering overhead. By freezing the bulk of the model and only updating a compact set of corpus-related parameters, teams can deploy models that better reflect corpus quality and domain nuances without retraining large portions of the network. The reported results on standard benchmarks suggest that OCAT can be competitive with other fine-tuning techniques while reducing sensitivity to hyperparameters, which translates to more predictable deployments and faster experimentation cycles.

Technical details or Implementation

OCAT builds on the premise of Corpus Aware Training (CAT), where training data is augmented with corpus identifiers or metadata that signal data origin, quality, or domain. In OCAT, a CAT pre-trained model is fine-tuned by freezing most of its parameters and updating only a restricted set of corpus-related parameters. This approach is described as lightweight, resilient to overfitting, and effective in boosting translation accuracy. The authors test OCAT on two translation tasks drawn from the WMT23 suite: English→Chinese and English→German. In both settings, OCAT delivers notable chrF improvements over vanilla training: +3.6 chrF for EN→ZH and +1.8 chrF for EN→DE. These gains are presented as being on par with or slightly better than other state-of-the-art fine-tuning techniques while exhibiting reduced sensitivity to hyperparameter choices. For context, the research is conducted within the Speech and Natural Language Processing domain and contributes to the broader effort to improve MT systems by explicitly leveraging corpus characteristics during training. The work also notes the practical advantages of a lightweight fine-tuning protocol in real-world deployment scenarios where computational budgets and data curation time are limited.

Key takeaways

- OCAT extends CAT by focusing fine-tuning on a small, corpus-related parameter subset.

- The method is lightweight and designed to resist overfitting compared with broader fine-tuning regimes.

- On WMT23 EN→ZH and EN→DE tasks, OCAT achieves +3.6 and +1.8 chrF improvements over vanilla training.

- OCAT matches or slightly exceeds other fine-tuning approaches while being less sensitive to hyperparameters.

- The approach offers a practical pathway to enhance MT quality without extensive data pre-selection or exhaustive hyperparameter tuning.

FAQ

-

What is OCAT?

OCAT stands for Optimal Corpus Aware Training. It fine-tunes a CAT pre-trained model by freezing most of the model parameters and updating a small set of corpus-related parameters.

-

What improvements were observed with OCAT?

On WMT23 EN→ZH and EN→DE, OCAT showed +3.6 chrF and +1.8 chrF improvements over vanilla training, respectively.

-

On which translation tasks was OCAT evaluated?

The approach was evaluated on English→Chinese and English→German translation tasks in the WMT23 benchmark.

-

How does OCAT compare to other fine-tuning methods?

OCAT is on-par or slightly better than other state-of-the-art fine-tuning techniques and is less sensitive to hyperparameter settings.

References

More news

Microsoft to turn Foxconn site into Fairwater AI data center, touted as world's most powerful

Microsoft unveils plans for a 1.2 million-square-foot Fairwater AI data center in Wisconsin, housing hundreds of thousands of Nvidia GB200 GPUs. The project promises unprecedented AI training power with a closed-loop cooling system and a cost of $3.3 billion.

Reddit Pushes for Bigger AI Deal with Google: Users and Content in Exchange

Reddit seeks a larger licensing deal with Google, aiming to drive more users and access to Reddit data for AI training, potentially via dynamic pricing and traffic incentives.

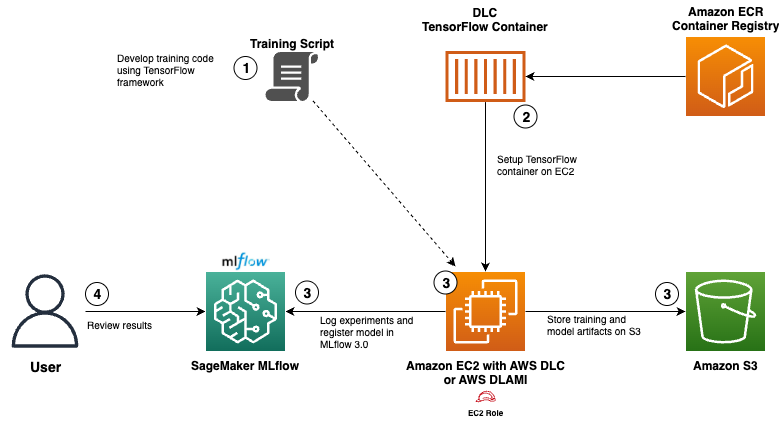

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

GPT-5-Codex Addendum: Agentic Coding Optimized GPT-5 with Safety Measures

An addendum detailing GPT-5-Codex, a GPT-5 variant optimized for agentic coding within Codex, with safety mitigations and multi-platform availability.

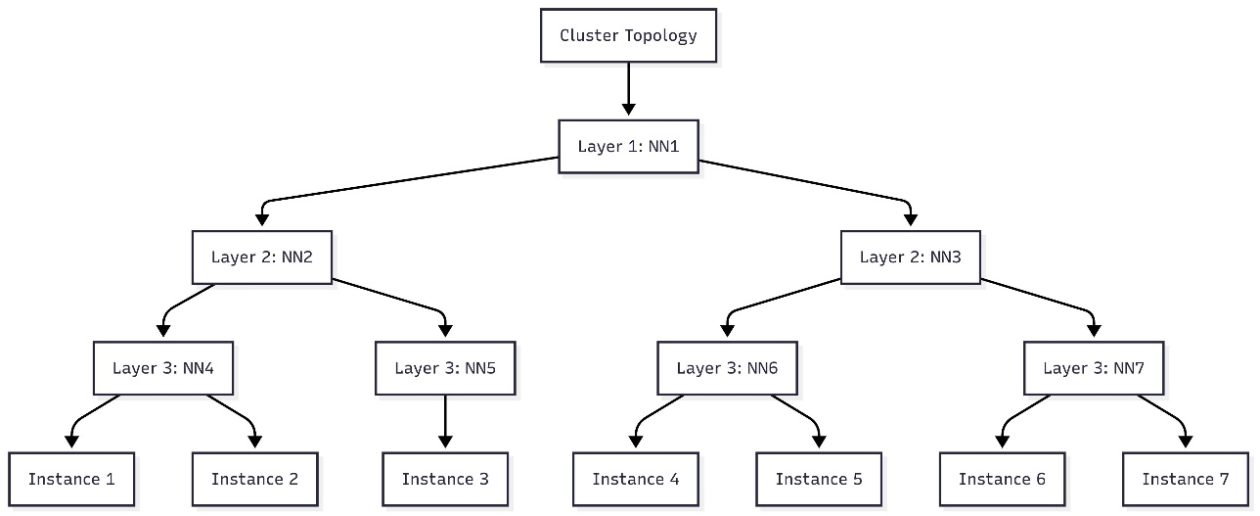

Schedule topology-aware workloads using Amazon SageMaker HyperPod task governance

AWS introduces topology-aware scheduling with SageMaker HyperPod task governance to optimize training efficiency and network latency on EKS clusters, using EC2 topology data to guide job placement.

How Quantization Aware Training Enables Low-Precision Accuracy Recovery

Explores quantization aware training (QAT) and distillation (QAD) as methods to recover accuracy in low-precision models, leveraging NVIDIA's TensorRT Model Optimizer and FP8/NVFP4/MXFP4 formats.