Dynamo 0.4 Delivers 4x Faster Performance, SLO-Based Autoscaling, and Real-Time Observability

Sources: https://developer.nvidia.com/blog/dynamo-0-4-delivers-4x-faster-performance-slo-based-autoscaling-and-real-time-observability, developer.nvidia.com

TL;DR

- Dynamo 0.4 adds disaggregated-serving enhancements, SLO-based autoscaling, and real-time observability for large-scale AI inference.

- OpenAI gpt-oss-120b on Dynamo + TensorRT-LLM on B200 delivers up to 4x faster interactivity for long input sequences.

- DeepSeek-R1 671B on GB200 NVL72 with Dynamo + TensorRT-LLM achieves 2.5x higher throughput per GPU without extra inference cost.

- AIConfigurator helps pick optimal prefill/decode (PD) disaggregation and model-parallel strategies within a GPU budget and SLOs.

- Planner now supports SLO-based autoscaling and native Kubernetes integration for scalable deployments.

Context and background

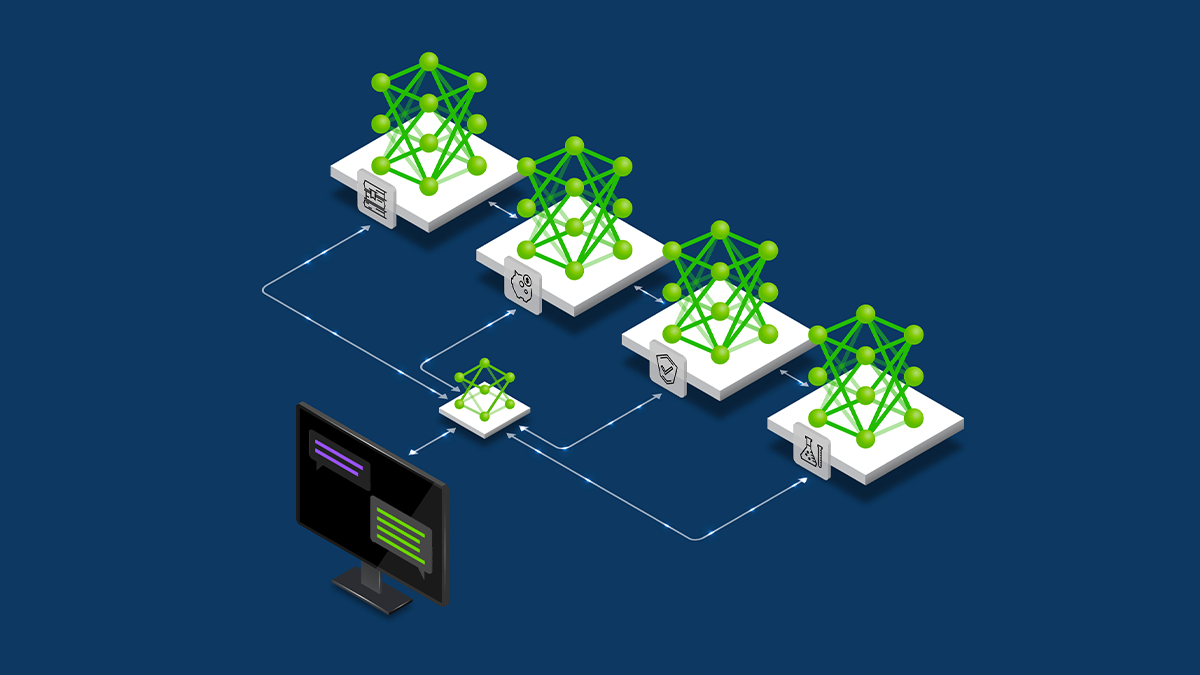

Dynamo 0.4 continues NVIDIA’s effort to enable efficient, scalable inference for frontier models in disaggregated serving architectures. The update focuses on performance, observability, and autoscaling for large language models (LLMs) operating at scale. The release also covers open-source models and guided deployment, and references frameworks such as TensorRT-LLM and platforms such as Kubernetes.

Dynamo’s disaggregated serving approach splits inference across multiple GPUs by decoupling the prefill phase (processing the input prompt) from the decode phase (generating output tokens), so each phase can be given resources suited to it. This enables more flexible model parallelism and improved efficiency, especially for the lengthy input sequences common in agentic workflows, code generation, and summarization. The release also aims to reduce latency and cost while maintaining throughput.

What’s new

Dynamo 0.4 adds several capabilities designed to optimize large-model serving at scale. Key highlights include:

- Disaggregated serving enhancements that decouple prefill and decode across separate GPUs, enabling flexible GPU allocation and improved efficiency.

- AIConfigurator, a new tool that recommends optimal PD disaggregation configurations and model-parallel strategies based on a model and GPU budget to meet SLOs. It uses pre-measured performance data and supports various scheduling techniques.

- SLO-based Planner, which forecasts the impact of input/output sequence length changes and scales resources proactively to meet Time to First Token (TTFT) and Inter-Token Latency (ITL) targets.

- Native Kubernetes integration for deploying Dynamo and Planner, simplifying containerized AI workloads in production.

- Observability enhancements, with Dynamo components emitting Prometheus-based metrics that integrate with Grafana and other open-source tools. An API is provided to define and emit custom metrics.

- Fault tolerance and resilience improvements, including inflight request re-routing to online GPUs and faster failure detection that bypasses etcd in the control plane.

- MoE-focused deployment guidance, with deployment guides for MoE model serving with disaggregated serving to support researchers and enterprises exploring advanced architectures.

- Performance highlights: for the OpenAI gpt-oss-120b model running on B200 with Dynamo and TensorRT-LLM, interactivity increased up to 4x for long sequences without throughput loss; for DeepSeek-R1 671B on GB200 NVL72 with TensorRT-LLM and Dynamo, throughput rose by about 2.5x per GPU without higher costs. See the release notes for details.

- Planner improvements in the 0.4 release extend the earlier 0.2 version by adding SLO-based autoscaling that aims to maximize GPU utilization while minimizing inference costs.

| Model/Setup | Observed Benefit | Notes |
|--- |--- |--- |
| OpenAI gpt-oss-120b on B200 with Dynamo + TensorRT-LLM | Up to 4x faster interactivity (tokens/second/user) for long inputs | No throughput tradeoffs reported |
| DeepSeek-R1 671B on GB200 NVL72 with Dynamo + TensorRT-LLM | 2.5x higher throughput (tokens/second/GPU) | Inference costs unchanged |
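The SLO targets named above, Time to First Token (TTFT) and Inter-Token Latency (ITL), can be computed from per-token arrival times. The following is a minimal sketch, not Dynamo code: the function names are hypothetical, and treating per-user interactivity (tokens/second/user) as roughly the inverse of mean ITL is a simplifying assumption.

```python
from statistics import mean


def latency_metrics(request_start, token_times):
    """Return TTFT, mean ITL, and an approximate per-user decode rate.

    request_start: time the request was submitted, in seconds.
    token_times: arrival time of each output token, in ascending order.
    """
    if not token_times:
        raise ValueError("need at least one output token")
    # TTFT: delay between submitting the request and receiving the first token.
    ttft = token_times[0] - request_start
    # ITL: average gap between consecutive output tokens.
    gaps = [b - a for a, b in zip(token_times, token_times[1:])]
    itl = mean(gaps) if gaps else 0.0
    # Assumption: interactivity (tokens/second/user) is about 1 / ITL.
    rate = 1.0 / itl if itl > 0 else float("inf")
    return {"ttft_s": ttft, "itl_s": itl, "tokens_per_s_user": rate}


def meets_slo(metrics, ttft_target_s, itl_target_s):
    """True when both latency targets are satisfied."""
    return metrics["ttft_s"] <= ttft_target_s and metrics["itl_s"] <= itl_target_s
```

Under this approximation, a 4x gain in tokens/second/user corresponds to cutting mean ITL to about a quarter.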

Why it matters (impact for developers/enterprises)

For teams building and operating large LLM workloads, Dynamo 0.4 offers several practical benefits. The disaggregated serving approach enables more efficient use of GPUs by tailoring resource allocation to prefill and decode phases, potentially lowering costs while sustaining throughput on long inputs. SLO-based autoscaling helps inference teams meet strict performance targets, reducing the risk of latency spikes that can affect user experience. The AIConfigurator tool provides guidance to configure PD disaggregation and model parallelism in a way that aligns with a given budget and SLOs, helping teams plan capacity in advance rather than relying solely on reactive scaling.

Kubernetes-native deployment simplifies integration into existing containerized environments, while Prometheus-based observability and Grafana compatibility support real-time monitoring and faster issue diagnosis. The new fault-tolerance features reduce the impact of node or GPU failures on end users by rerouting inflight requests and accelerating failure detection. Businesses aiming to deploy frontier models at scale can leverage these capabilities to pursue performance gains, predictable latency, and cost-efficient operation across multi-node GPU clusters.

Technical details or Implementation (how it works)

Dynamo’s core concept remains disaggregated serving, which splits inference across multiple GPUs. The 0.4 release adds:

- Decoupled prefill and decode: the prefill phase can use one set of GPUs while the decode phase uses another, enabling more flexible model parallelism.

- PD disaggregation strategy and model-parallel planning via AIConfigurator: AIConfigurator analyzes model layers (attention, FFN, communication, memory) and scheduling methods (static batching, inflight batching, disaggregated serving) to propose PD configurations that satisfy user-defined SLOs within a GPU budget. It can generate backend configurations compatible with Dynamo.

- Planner with SLO-based autoscaling: monitors prefill queue and decode memory to scale inference workers up or down to maximize GPU utilization and minimize cost, now with forward-looking scaling that anticipates input/output sequence-length changes. Planner integrates with Kubernetes for straightforward deployment.

- Observability framework: Dynamo workers and the components in each plane emit Prometheus metrics that Grafana and similar tools can consume; an API allows custom metrics to be defined for specialized workloads.

- Fault tolerance: inflight request re-routing preserves intermediate computations and forwards requests to online GPUs; faster failure detection reduces the recovery window by bypassing etcd for critical health signals.

- MoE-focused deployment guidance: the release includes guides for MoE model serving with disaggregated serving to support researchers and enterprises exploring advanced architectures.

These capabilities build on the 0.2 release’s Planner to deliver a more proactive, SLO-aware autoscaling experience while expanding into broader inference frameworks and hardware support. The team notes ongoing expansion of tooling, with initial support for TensorRT-LLM on NVIDIA Hopper and plans for additional frameworks and hardware in future updates.
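The forward-looking scaling described for Planner can be illustrated with a small sketch. This is not Planner's algorithm. It assumes a naive linear extrapolation for the forecast and a hypothetical `WorkerProfile` holding pre-measured per-worker capacities at which the TTFT and ITL targets are still met. All names and parameters are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class WorkerProfile:
    # Hypothetical pre-measured capacity of one worker while it still meets SLOs.
    prefill_tokens_per_s: float
    decode_tokens_per_s: float


def forecast_next(history):
    """Naive forecast: last value plus the most recent change.

    Assumes a non-empty history; a real planner would use a better predictor.
    """
    if len(history) < 2:
        return history[-1]
    return max(0.0, history[-1] + (history[-1] - history[-2]))


def plan_workers(req_rate_hist, isl_hist, osl_hist, profile, headroom=1.2):
    """Return (prefill_workers, decode_workers) for the next interval."""
    rate = forecast_next(req_rate_hist)  # requests per second
    isl = forecast_next(isl_hist)        # input sequence length, tokens
    osl = forecast_next(osl_hist)        # output sequence length, tokens
    prefill_load = rate * isl            # prompt tokens to process per second
    decode_load = rate * osl             # output tokens to generate per second
    prefill = max(1, math.ceil(headroom * prefill_load / profile.prefill_tokens_per_s))
    decode = max(1, math.ceil(headroom * decode_load / profile.decode_tokens_per_s))
    return prefill, decode
```

Because prefill and decode worker counts are sized separately, a rise in input length grows only the prefill pool, which is the flexibility disaggregation is meant to provide.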

Key takeaways

- Dynamo 0.4 introduces SLO-based autoscaling and enhanced observability to support large-model inference at scale.

- Disaggregated serving decouples prefill and decode phases across GPUs to improve efficiency and flexibility.

- AIConfigurator automates the selection of PD configurations and model parallel strategies within GPU budgets and SLOs.

- Planner now forecasts and scales resources ahead of bottlenecks and integrates with Kubernetes for easy deployment.

- Observability metrics via Prometheus and Grafana enable real-time monitoring, while fault-tolerance features reduce disruption from node failures.

FAQ

- What is new in Dynamo 0.4?

  It adds SLO-based autoscaling, disaggregated serving enhancements, AIConfigurator, Planner enhancements, Kubernetes integration, and improved observability and fault tolerance. It also adds deployment guides for MoE model serving.

- What performance gains were demonstrated?

  For OpenAI gpt-oss-120b on B200 with Dynamo + TensorRT-LLM, interactivity increased up to 4x for long inputs; for DeepSeek-R1 671B on GB200 NVL72 with TensorRT-LLM and Dynamo, throughput rose 2.5x per GPU without increased costs.

- What is AIConfigurator?

  A tool that recommends optimal PD disaggregation configurations and model-parallel strategies based on a model and GPU budget to meet SLOs, using performance data across layers and scheduling options. It generates backend Dynamo configurations and offers CLI and web interfaces.
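The kind of search this answer describes can be sketched as a filter over candidate configurations followed by picking the best throughput per GPU. This is an illustrative sketch only, not AIConfigurator's interface or method. The `Candidate` type, the assumption that prefill and decode workers share one tensor-parallel size, and every number in the example table are hypothetical placeholders rather than measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    prefill_workers: int
    decode_workers: int
    tp: int              # tensor-parallel GPUs per worker (assumed equal for both pools)
    ttft_s: float        # estimated Time to First Token
    itl_s: float         # estimated Inter-Token Latency
    tokens_per_s: float  # estimated total throughput

    @property
    def gpus(self):
        return (self.prefill_workers + self.decode_workers) * self.tp


def pick_config(candidates, gpu_budget, ttft_slo_s, itl_slo_s):
    """Return the SLO-compliant candidate with the best throughput per GPU, or None."""
    feasible = [
        c for c in candidates
        if c.gpus <= gpu_budget and c.ttft_s <= ttft_slo_s and c.itl_s <= itl_slo_s
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c.tokens_per_s / c.gpus)


# Placeholder estimates for illustration only; these are not benchmark results.
EXAMPLE_CANDIDATES = [
    Candidate(1, 1, 4, ttft_s=0.9, itl_s=0.030, tokens_per_s=8000),
    Candidate(2, 2, 2, ttft_s=0.4, itl_s=0.045, tokens_per_s=9600),
    Candidate(2, 2, 4, ttft_s=0.3, itl_s=0.020, tokens_per_s=20000),
    Candidate(1, 3, 2, ttft_s=0.45, itl_s=0.060, tokens_per_s=12000),
]
```

With an 8-GPU budget, a 0.5 s TTFT target, and a 0.05 s ITL target, only the second placeholder row passes all three filters, so `pick_config` returns it.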

- How does Dynamo handle failures?

  It uses inflight request re-routing to online GPUs to preserve work and employs faster failure detection within the Dynamo smart router to bypass etcd and reduce recovery time.
