ICR2: Benchmarking In-Context Retrieval and Reasoning for Long-Context Language Models

Sources: https://machinelearning.apple.com/research/eliciting-in-context, machinelearning.apple.com

Long-context language models (LCLMs) are reshaping how researchers and practitioners approach Retrieval-Augmented Generation (RAG). By extending their context windows, LCLMs can ingest broader knowledge bases and, in principle, perform retrieval and reasoning directly within the prompt, a capability the authors call In-Context Retrieval and Reasoning (ICR2) [Source]. This article distills the core ideas, the new evaluation benchmark ICR2, and the practical methods proposed to boost LCLM performance in realistic settings. The discussion draws on work presented at ICML and related venues and highlights how these developments position LCLMs to rival or exceed traditional RAG pipelines under certain conditions.

TL;DR

- Long-context LLMs can handle retrieval and reasoning inside their extended prompts, defined as In-Context Retrieval and Reasoning (ICR2) [Source].

- The LOFT benchmark alone may overestimate LCLM performance; ICR2 introduces more challenging, realistic contexts with confounding documents [Source].

- Proposed methods to improve ICR2 performance include retrieve-then-generate fine-tuning, a retriever-trained retrieval head coupled with the generation head, and retrieval-attention-probing decoding [Source].

- Benchmarking across four well-known LCLMs on LOFT and ICR2 shows substantial gains for Mistral-7B: +17 and +15 on LOFT and +13 and +2 on ICR2, where the first figure in each pair is relative to zero-shot RAG and the second to in-domain supervised fine-tuned baselines. In many tasks, this approach even surpasses GPT-4 despite its smaller model size [Source].

- The work articulates pathways to more cost-effective long-context reasoning and has implications for enterprise deployments relying on large knowledge bases [Source].

Context and background

Large language models continue to face two intertwined challenges when operating over long textual horizons. First, inference costs tend to scale quadratically with sequence length, because self-attention compares every token with every other token; this can hinder deployment in real-world text processing tasks such as retrieval-augmented generation (RAG), where long prompts are commonplace [Source]. Second, there is the so-called “distraction phenomenon,” in which irrelevant context in the prompt degrades model performance, especially as the context grows beyond what the model can use efficiently.

In this landscape, long-context language models (LCLMs) offer a compelling vision: by extending context windows, they could absorb entire knowledge bases and perform retrieval and reasoning inside the model, reducing pipeline complexity and latency compared with traditional multi-component RAG stacks [Source]. The work on ICR2 explicitly frames this potential and asks how to evaluate and train LCLMs to realize it in practice.

The research introduces ICR2 as a benchmark crafted to reflect realistic information-seeking tasks. Unlike prior benchmarks such as LOFT, ICR2 incorporates confounding documents retrieved by strong retrievers, both to simulate real retrieval noise and to avoid overly optimistic estimates of LCLM capabilities. In doing so, ICR2 aims to provide a more faithful evaluation and training environment for long-context models engaged in retrieval and reasoning tasks [Source]. These ideas were presented at venues including ICML, with related discussion arising from the UncertaiNLP workshop at EACL 2024, highlighting ongoing interest in robust, uncertainty-aware evaluation of LLMs in practical settings [Source].
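
To make the idea of confounding documents concrete, here is a minimal Python sketch of how an ICR2-style context might be assembled. It assumes the gold (supporting) passages are known and that a retriever's ranked results are available; the `Passage` type, the function names, and the choice to take the highest-ranked non-gold passages as confounders are illustrative assumptions, not the benchmark's published construction procedure.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    pid: str   # passage identifier (hypothetical format)
    text: str


def build_confounded_context(
    gold: list[Passage],
    retrieved: list[Passage],
    num_confounders: int,
    seed: int = 0,
) -> list[Passage]:
    """Mix gold passages with the highest-ranked non-gold retrieved passages.

    `retrieved` is assumed to be sorted best-first by a strong retriever, so the
    passages kept as confounders are the most convincing distractors it found.
    """
    gold_ids = {p.pid for p in gold}
    confounders = [p for p in retrieved if p.pid not in gold_ids][:num_confounders]
    context = list(gold) + confounders
    # Shuffle with a fixed seed: gold position carries no signal, runs stay reproducible.
    random.Random(seed).shuffle(context)
    return context


def render_prompt(context: list[Passage], question: str) -> str:
    """Lay out passages with bracketed IDs, followed by the question."""
    blocks = [f"[{p.pid}] {p.text}" for p in context]
    return "\n\n".join(blocks) + f"\n\nQuestion: {question}\nAnswer:"


# Toy data (placeholder text, not taken from the benchmark).
gold = [Passage("d7", "Gold passage that answers the question.")]
retrieved = [
    Passage("d7", "Gold passage that answers the question."),
    Passage("d2", "Same-topic passage that mentions a different date."),
    Passage("d9", "Same-topic passage with a plausible but wrong answer."),
    Passage("d4", "Loosely related passage."),
]
context = build_confounded_context(gold, retrieved, num_confounders=2)
print(render_prompt(context, "When did the event happen?"))
```

Shuffling removes the shortcut of always finding the answer in the first slot, and because the confounders are the passages a strong retriever ranked highest, the model cannot rely on surface overlap with the question alone.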

What’s new

The core contributions in the ICR2 line of work can be summarized as follows:

- A new benchmark, ICR2, designed to evaluate in-context retrieval and reasoning under more challenging and realistic contexts, including confounding documents from strong retrievers. This complements LOFT by tightening the evaluation of long-context capabilities [Source].

- A trio of methods to bolster LCLM performance on ICR2:

  1) retrieve-then-generate fine-tuning, which aligns retrieval behavior with generation objectives;
  2) explicit modeling of a retrieval head that is trained jointly with the generation head;
  3) retrieval-attention-probing decoding, which leverages attention heads to filter and refine long-context inputs during decoding [Source].

- Extensive benchmarking across four well-known LCLMs on both LOFT and ICR2 to assess gains and limitations. A notable finding is that combining these techniques and applying them to Mistral-7B yields substantial improvements: +17 and +15 on LOFT, and +13 and +2 on ICR2, compared with zero-shot RAG and in-domain supervised fine-tuned models, respectively. Importantly, these gains come from a much smaller model than some large-scale baselines, and the resulting model can exceed GPT-4 on many tasks in this setting [Source].

- The work emphasizes practical deployment considerations, including more cost-effective handling of long contexts and the potential for simplified pipelines that still deliver strong retrieval and reasoning capabilities [Source]. A key takeaway is that, with the right training and decoding strategies, LCLMs can recover much of the performance that traditionally depended on separate, multi-stage retrieval pipelines, while potentially reducing system complexity and latency in enterprise deployments [Source].
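
The first method, retrieve-then-generate fine-tuning, can be pictured as a change in the supervision target. The sketch below assumes (as an illustration, not the authors' exact format) that the model is trained to name the identifiers of the supporting passages before producing the answer; the `Retrieved:`/`Answer:` markers and both helper functions are hypothetical.

```python
import re


def make_rtg_example(
    passages: dict[str, str], question: str, gold_ids: list[str], answer: str
) -> tuple[str, str]:
    """Build a (prompt, target) pair for retrieve-then-generate fine-tuning.

    Hypothetical format: the target first lists supporting passage IDs, then the answer.
    """
    context = "\n\n".join(f"[{pid}] {text}" for pid, text in passages.items())
    prompt = f"{context}\n\nQuestion: {question}\n"
    target = "Retrieved: " + ", ".join(gold_ids) + "\nAnswer: " + answer
    return prompt, target


def parse_rtg_output(output: str) -> tuple[list[str], str]:
    """Split a generated string into (cited passage IDs, answer text)."""
    # [ \t]* (not \s*) so a marker never swallows the following line.
    ids_match = re.search(r"Retrieved:[ \t]*(.*)", output)
    ans_match = re.search(r"Answer:[ \t]*(.*)", output)
    ids = []
    if ids_match:
        ids = [s.strip() for s in ids_match.group(1).split(",") if s.strip()]
    answer = ans_match.group(1).strip() if ans_match else ""
    return ids, answer


prompt, target = make_rtg_example(
    {"p1": "Toy passage one.", "p2": "Toy passage two, which holds the answer."},
    "Which passage holds the answer?",
    gold_ids=["p2"],
    answer="passage two",
)
print(target)
# Retrieved: p2
# Answer: passage two
print(parse_rtg_output("Retrieved: p2, p5\nAnswer: passage two"))
# (['p2', 'p5'], 'passage two')
```

In a typical supervised fine-tuning setup the loss would be computed on the target tokens only. At inference time, a parser like `parse_rtg_output` separates the cited evidence from the final answer, which also makes retrieval quality measurable on its own.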

Why it matters (impact for developers/enterprises)

For developers and enterprises, the ICR2 framework signals a shift toward more autonomous, self-contained reasoning over knowledge bases. If long-context models can effectively perform retrieval and reasoning within the model, without resorting to separate retrievers and post-hoc reranking stages, a simpler and potentially more compact deployment becomes feasible. The reported gains on LOFT and ICR2 indicate that carefully designed fine-tuning and decoding strategies can unlock strong performance even with smaller models. This matters for cost-sensitive deployments, where inference budgets, latency, and operational complexity are critical considerations. Additionally, the proposed retrieval-attention-probing approach provides a concrete method to filter long contexts during decoding, potentially reducing distraction and improving robustness to noisy or confounded retrieval results [Source].

From an AI governance and reliability perspective, benchmarks that incorporate confounding documents help illuminate how systems behave under realistic, noisy retrieval conditions. The ICR2 design thus contributes to safer and more transparent evaluation practices for long-context reasoning in practical settings [Source].
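
The retrieval-attention-probing idea can be sketched as scoring each passage by how much attention it receives and keeping only the top-scoring ones. The NumPy sketch below is one plausible reading, not the authors' implementation: it assumes access to attention weights from the question tokens to the context tokens, known token spans for each passage, and a hand-picked set of heads that behave like retrieval heads; the averaging rule and the `top_k` cut-off are hypothetical choices.

```python
import numpy as np


def passage_attention_scores(
    attn: np.ndarray, spans: list[tuple[int, int]], heads: list[int]
) -> np.ndarray:
    """Score passages by the attention they receive from the query tokens.

    attn:  [num_heads, num_query_tokens, num_context_tokens] attention weights
           (assumed: taken from one layer, query = question tokens).
    spans: [start, end) token offsets of each passage within the context.
    heads: indices of heads assumed to act as retrieval heads (hypothetical).
    """
    # Average over the selected heads and over query tokens -> one weight per context token.
    probe = attn[heads].mean(axis=(0, 1))
    # Total attention mass landing on each passage.
    return np.array([probe[start:end].sum() for start, end in spans])


def select_passages(
    attn: np.ndarray, spans: list[tuple[int, int]], heads: list[int], top_k: int
) -> list[int]:
    """Return indices of the top_k highest-scoring passages, in original order."""
    scores = passage_attention_scores(attn, spans, heads)
    keep = np.argsort(-scores)[:top_k]
    return sorted(int(i) for i in keep)


# Toy example: 4 heads, 2 query tokens, 3 passages of 3 tokens each.
attn = np.full((4, 2, 9), 1 / 9)   # default: uniform attention
attn[1] = 0.0
attn[1, :, 3:6] = 1 / 3            # head 1 focuses on passage 1
attn[2] = 0.0
attn[2, :, 3:6] = 0.2              # head 2 splits its attention
attn[2, :, 6:9] = 2 / 15           # between passages 1 and 2
spans = [(0, 3), (3, 6), (6, 9)]
print(select_passages(attn, spans, heads=[1, 2], top_k=2))  # [1, 2]
```

The retained passage indices would then be used to rebuild a shorter prompt for a second decoding pass, so confounders that attract little attention from the probed heads never reach the final generation step.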

Technical details or Implementation

The ICR2 program centers on three methodological pillars:

- Retrieve-then-generate fine-tuning: this approach aligns the retrieval process with the downstream generation objective, teaching the model to use retrieved content in a way that supports the final answer rather than simply echoing retrieved text [Source].

- Explicit retrieval head co-trained with the generation head: by jointly training a dedicated retrieval component alongside the generation module, the model can develop a more coherent retrieval strategy that supports reasoning over long contexts [Source].

- Retrieval-attention-probing decoding: during decoding, attention heads are leveraged to filter and refine the long context, effectively pruning irrelevant or misleading information before it influences the output [Source].

The benchmarking study covered four well-known LCLMs and compared their performance on LOFT and ICR2. The best-performing configuration, applied to the Mistral-7B model, achieved notable improvements: +17 and +15 on LOFT, and +13 and +2 on ICR2, relative to zero-shot RAG and in-domain supervised fine-tuned baselines, respectively. The authors emphasize that these gains are achieved with a model smaller than some of the largest baselines, underscoring efficiency gains alongside accuracy improvements. In some tasks, the approach even surpassed GPT-4, illustrating the potential of carefully engineered long-context reasoning pipelines [Source].

The LOFT benchmark, while valuable, is not without limitations. The authors argue that LOFT often overestimates LCLM performance because its contexts may not be sufficiently challenging. By introducing confounding documents, ICR2 more realistically probes how models handle retrieval noise and complex reasoning tasks, which is crucial for real-world deployments where signals are imperfect and contexts are long [Source].

The technical takeaway for practitioners is clear: to make LCLMs perform well on ICR2-style tasks, consider combining fine-tuning strategies, joint modeling of retrieval and generation components, and decoding-time filtering of long contexts. Together, these elements help long-context models navigate large knowledge bases and produce grounded, relevant responses.
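
The second pillar, a retrieval head trained alongside the generation head, amounts to optimizing two objectives at once. The article does not specify the architecture, so the NumPy sketch below is hypothetical throughout: a linear head scores pooled per-passage hidden states for relevance with binary cross-entropy, the generation head uses the usual next-token cross-entropy, and a weight `alpha` (an assumed hyperparameter) balances the two.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable log-softmax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def generation_loss(token_logits: np.ndarray, target_ids: np.ndarray) -> float:
    """Mean next-token cross-entropy. token_logits: [seq, vocab]; target_ids: [seq]."""
    log_probs = log_softmax(token_logits)
    return float(-log_probs[np.arange(len(target_ids)), target_ids].mean())


def retrieval_loss(passage_states, w, b, relevance) -> float:
    """Binary cross-entropy of a hypothetical linear retrieval head.

    passage_states: [num_passages, hidden] pooled hidden states (assumed pooling).
    relevance:      [num_passages] 1.0 for supporting passages, 0.0 otherwise.
    """
    logits = passage_states @ w + b
    # Stable BCE-with-logits: max(z, 0) - z*y + log(1 + exp(-|z|)).
    per_passage = np.maximum(logits, 0) - logits * relevance + np.log1p(np.exp(-np.abs(logits)))
    return float(per_passage.mean())


def joint_loss(token_logits, target_ids, passage_states, w, b, relevance, alpha=0.5):
    """Total = generation loss + alpha * retrieval loss (alpha is an assumed weight)."""
    gen = generation_loss(token_logits, target_ids)
    ret = retrieval_loss(passage_states, w, b, relevance)
    return gen + alpha * ret, gen, ret


# Toy check: uninformative logits give log(vocab) and log(2) for the two terms.
total, gen, ret = joint_loss(
    token_logits=np.zeros((3, 5)),
    target_ids=np.array([0, 1, 2]),
    passage_states=np.zeros((4, 8)),
    w=np.zeros(8),
    b=0.0,
    relevance=np.array([1.0, 0.0, 0.0, 1.0]),
)
print(round(gen, 4), round(ret, 4), round(total, 4))  # 1.6094 0.6931 1.956
```

In a real model both losses would backpropagate into the same backbone, pushing the shared representations to encode which passages matter; that shared pressure is the intuition behind co-training the two heads.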

Key takeaways

- ICR2 formalizes in-context retrieval and reasoning as a core capability for LCLMs and provides a more realistic benchmark than LOFT by including confounding documents [Source].

- A three-pronged approach—retrieve-then-generate fine-tuning, a jointly trained retrieval head, and retrieval-attention-probing decoding—yields meaningful gains on long-context tasks [Source].

- In evaluations with Mistral-7B, the best approach achieved +17 and +15 on LOFT and +13 and +2 on ICR2, where the first figure in each pair is relative to zero-shot RAG and the second to in-domain supervised fine-tuning; the method even outperformed GPT-4 on most tasks in this setting, despite a smaller model size [Source].

- The work highlights the potential for streamlined RAG pipelines and more cost-effective deployments in scenarios requiring long-context reasoning over broad knowledge bases [Source].

FAQ

What is In-Context Retrieval and Reasoning (ICR2)?

ICR2 is a benchmark and framework focused on evaluating and training long-context language models to perform retrieval and reasoning directly within extended context windows, as defined by the authors [Source].

More news

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

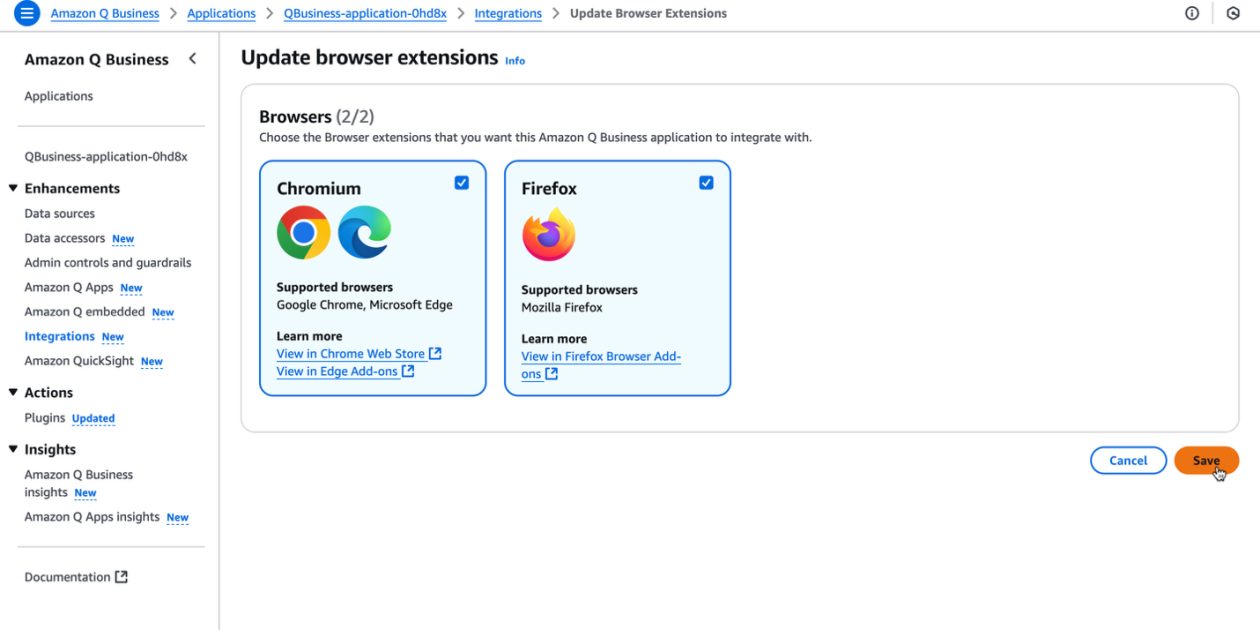

Supercharge your organization’s productivity with the Amazon Q Business browser extension

The Amazon Q Business browser extension brings context-aware, AI-driven assistance to your browser for Lite and Pro subscribers, enabling rapid, source-backed insights and seamless workflows.

Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

A detailed look at how NVIDIA Run:ai Model Streamer lowers cold-start times for LLM inference by streaming weights into GPU memory, with benchmarks across GP3, IO2, and S3 storage.

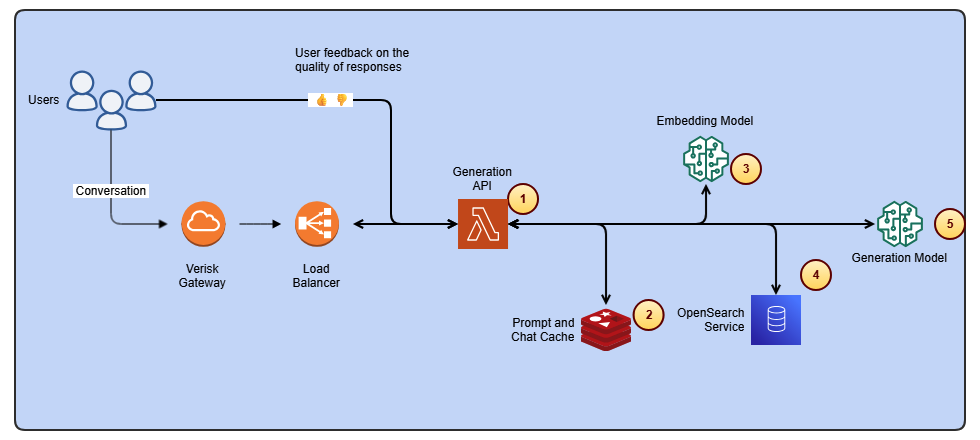

Streamline ISO-rating content changes with Verisk Rating Insights and Amazon Bedrock

Verisk Rating Insights, powered by Amazon Bedrock, LLMs, and RAG, enables a conversational interface to access ISO ERC changes, reducing manual downloads and enabling faster, accurate insights.

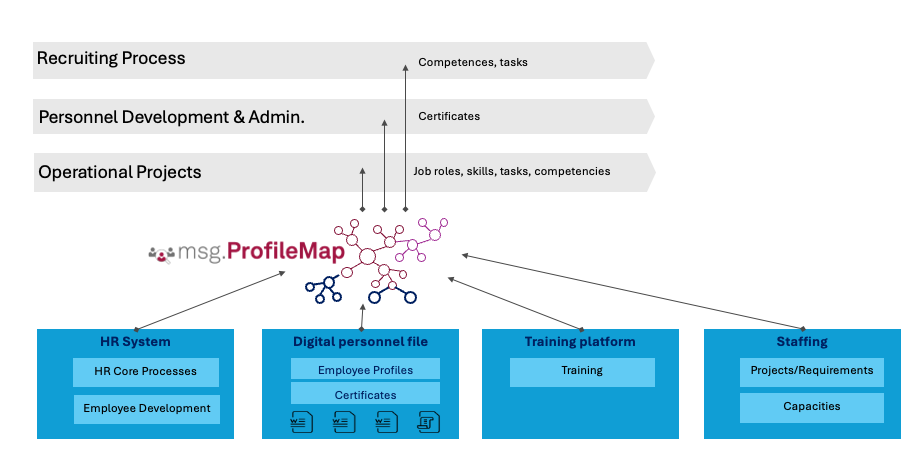

How msg enhanced HR workforce transformation with Amazon Bedrock and msg.ProfileMap

This post explains how msg automated data harmonization for msg.ProfileMap using Amazon Bedrock to power LLM-driven data enrichment, boosting HR concept matching accuracy, reducing manual workload, and aligning with EU AI Act and GDPR.

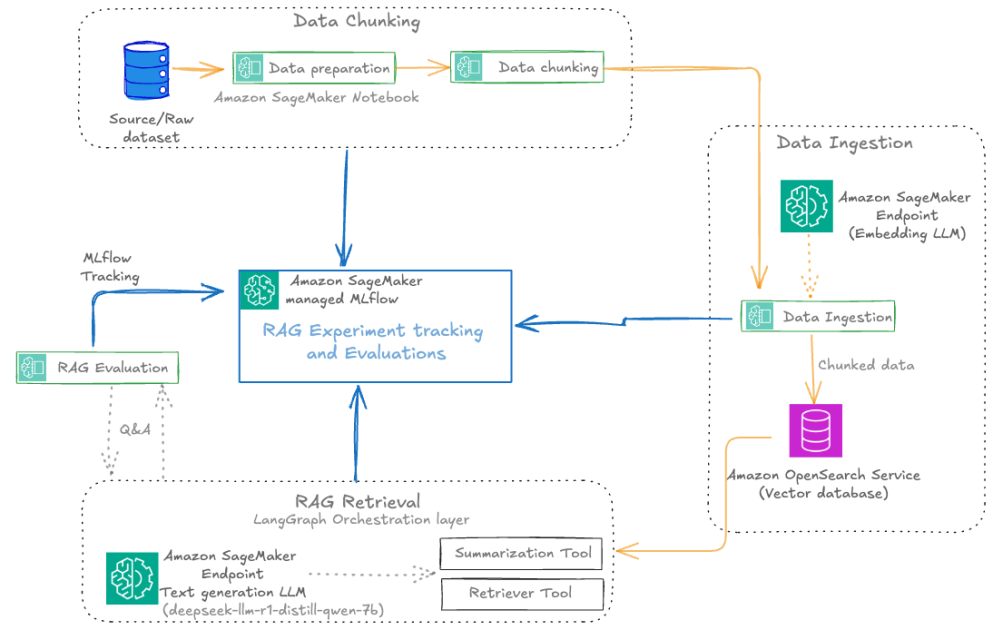

Automate advanced agentic RAG pipelines using Amazon SageMaker AI

Streamline experimentation to production for Retrieval Augmented Generation (RAG) with SageMaker AI, MLflow, and Pipelines, enabling reproducible, scalable, and governance-ready workflows.