Apple Workshop on Privacy-Preserving Machine Learning (PPML) 2025 — Recap and Resources

Source: https://machinelearning.apple.com/updates/ppml-2025

TL;DR

- Apple hosted a two-day hybrid Workshop on Privacy-Preserving Machine Learning (PPML) earlier this year to discuss privacy, security, and AI.

- The workshop focused on four areas: Private Learning and Statistics; Attacks and Security; Differential Privacy Foundations; and Foundation Models and Privacy.

- Selected talks and publications, plus recordings of invited presentations, are available via Apple’s Machine Learning Research page.

Context and background

Apple states that privacy is a fundamental human right and emphasizes creating privacy-preserving techniques in parallel with advancing AI capabilities. The company noted its long-standing research in applying differential privacy to machine learning and hosted the Workshop on Privacy-Preserving Machine Learning (PPML) as a two-day hybrid event bringing together Apple researchers and the broader research community. The meeting concentrated on the intersection of privacy, security, and rapidly evolving artificial intelligence systems. Presenters and participants discussed both theoretical underpinnings and practical challenges in building AI systems that protect user privacy. A collection of selected talk recordings and a recap of the publications discussed at the workshop were shared on Apple’s Machine Learning Research site.

What’s new

The PPML workshop presented a wide range of recent work spanning theory, algorithms, empirical studies, and systems for privacy-preserving machine learning. Key themes included:

- Private learning and statistics, including mechanisms for private estimation and aggregation.

- Attacks and security, with analyses of inference and extraction risks related to personalized models and large language models.

- Differential privacy foundations, exploring amplification, composition, streaming privacy, and mechanism design.

- Foundation models and privacy, including approaches to generate differentially private synthetic data via foundation model APIs for images and text.

Apple shared recordings of selected invited talks and provided a curated list of publications discussed during the workshop. Presentations included invited and contributed talks from academic and industry researchers across multiple institutions.
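To make the private estimation theme concrete, here is a minimal Python sketch of the textbook Laplace mechanism for a bounded mean. It is not taken from any workshop paper (talks such as the Tukey depth and instance-optimal mechanisms aim for stronger guarantees). The clipping bounds `lo`/`hi`, the assumption that the record count is public, and the function names are illustrative choices. Naive floating-point Laplace sampling like this is known to leak information, so treat it as a teaching sketch, not a deployable implementation.

```python
import random
from typing import Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    # If X, Y ~ Exponential(mean = scale), then X - Y ~ Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(values: Sequence[float], lo: float, hi: float,
                 epsilon: float, rng: random.Random) -> float:
    """epsilon-DP mean of values clipped to [lo, hi].

    Assumes replace-one neighboring datasets and a public record count n.
    """
    if not values:
        raise ValueError("need at least one value")
    if epsilon <= 0 or hi <= lo:
        raise ValueError("epsilon must be positive and hi must exceed lo")
    n = len(values)
    clipped = [min(max(v, lo), hi) for v in values]
    # Replacing one record moves the clipped mean by at most (hi - lo) / n.
    sensitivity = (hi - lo) / n
    return sum(clipped) / n + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    ages = [23, 35, 41, 29, 52, 38, 61, 19, 44, 30]  # hypothetical data
    print(private_mean(ages, lo=0, hi=100, epsilon=1.0, rng=rng))
```

Clipping is what bounds the sensitivity: without known bounds a single outlier could shift the mean arbitrarily, and no finite amount of noise would hide it.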

Why it matters (impact for developers/enterprises)

- Product design: As AI features become more personal and integrated into daily experiences, approaches discussed at PPML can inform how designers and engineers build privacy-preserving components that respect users’ expectations.

- Research to practice: The mix of theoretical advances and system-focused talks helps bridge gaps between academic privacy research and deployable machine learning systems.

- Risk assessment: Talks on attacks and security emphasize the importance of auditing and threat modeling for models and APIs, relevant to teams deploying foundation models and personalized services.

- Compliance and trust: Differential privacy foundations and practical mechanisms presented at the workshop provide techniques that organizations can consider when aiming to meet privacy commitments and to build trust with users.
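Auditing, mentioned under risk assessment, often turns a membership-inference attack's error rates into an empirical lower bound on ε. The sketch below relies on the standard hypothesis-testing view of (ε, δ)-DP: any test that separates neighboring datasets must satisfy TPR ≤ e^ε·FPR + δ, and symmetrically for the true- and false-negative rates. It returns only a point estimate. Rigorous audits typically replace the raw rates with confidence bounds (for example Clopper–Pearson intervals). The function names and the example counts are hypothetical.

```python
import math


def _ratio_bound(hit_rate: float, miss_rate: float, delta: float) -> float:
    """Smallest eps consistent with hit_rate <= e^eps * miss_rate + delta."""
    if hit_rate - delta <= 0:
        return 0.0  # the inequality holds for every eps >= 0
    if miss_rate == 0:
        return math.inf  # no finite eps is consistent with these rates
    return max(0.0, math.log((hit_rate - delta) / miss_rate))


def empirical_epsilon_lower_bound(tpr: float, fpr: float, delta: float = 0.0) -> float:
    """Point-estimate lower bound on eps implied by a membership test's TPR and FPR.

    (eps, delta)-DP requires both
        TPR <= e^eps * FPR + delta   and   TNR <= e^eps * FNR + delta,
    so each inequality yields a lower bound; return the larger one.
    """
    if not (0.0 <= tpr <= 1.0 and 0.0 <= fpr <= 1.0):
        raise ValueError("rates must lie in [0, 1]")
    return max(_ratio_bound(tpr, fpr, delta),
               _ratio_bound(1.0 - fpr, 1.0 - tpr, delta))


if __name__ == "__main__":
    # Hypothetical audit: the attack flags 450 of 1,000 members and 50 of 1,000 non-members.
    print(round(empirical_epsilon_lower_bound(tpr=0.45, fpr=0.05), 3))  # 2.197
```

If a model was trained with a claimed ε below the number this returns (after confidence adjustments), either the privacy analysis or its implementation is wrong.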

Technical details

The workshop covered diverse technical topics. The list below samples invited talks and contributed papers presented during PPML; titles and authors are reproduced from the workshop materials shared by Apple.

Focus areas covered at the workshop

- Private Learning and Statistics

- Attacks and Security

- Differential Privacy Foundations

- Foundation Models and Privacy

Selected talks and contributions (partial list)

- Local Pan-Privacy — Guy Rothblum (Apple)

- Differentially Private Continual Histograms — Monika Henzinger (ISTA)

- PREAMBLE: Private and Efficient Aggregation of Block Sparse… — Hannah Keller (Aarhus University)

- Tukey Depth Mechanisms for Practical Private Mean Estimation — Gavin Brown (University of Washington)

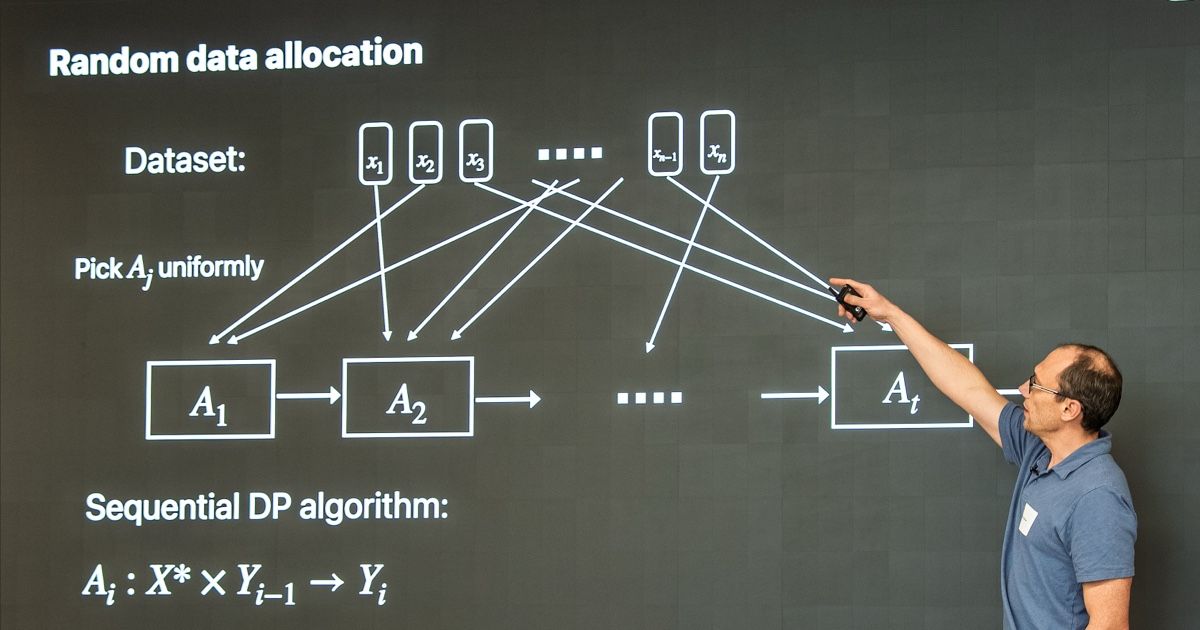

- Privacy Amplification by Random Allocation — Vitaly Feldman (Apple)

- Differentially Private Synthetic Data via Foundation Model APIs — Sivakanth Gopi (Microsoft Research)

- Scalable Private Search with Wally — Rehan Rishi and Haris Mughees (Apple)

- AirGapAgent: Protecting Privacy-Conscious Conversational Agents — Eugene Bagdasarian et al. (Google Research / DeepMind)

- A Generalized Binary Tree Mechanism for Differentially Private Approximation of All-Pair Distances — Michael Dinitz et al.

- Differentially Private Synthetic Data via Foundation Model APIs 1: Images — Zinan Lin et al. (Microsoft Research)

- Differentially Private Synthetic Data via Foundation Model APIs 2: Text — Chulin Xie et al.

- Efficient and Near-Optimal Noise Generation for Streaming Differential Privacy — Krishnamurthy (Dj) Dvijotham et al.

- Elephants Do Not Forget: Differential Privacy with State Continuity for Privacy Budget — Jiankai Jin et al.

- Improved Differentially Private Continual Observation Using Group Algebra — Monika Henzinger and Jalaj Upadhyay

- Instance-Optimal Private Density Estimation in the Wasserstein Distance — Vitaly Feldman et al.

- Leveraging Model Guidance to Extract Training Data from Personalized Diffusion Models — Xiaoyu Wu et al.

- Local Pan-privacy for Federated Analytics — Vitaly Feldman et al.

- Nearly Tight Black-Box Auditing of Differentially Private Machine Learning — Meenatchi Sundaram Muthu Selva Annamalai and Emiliano De Cristofaro

- On the Price of Differential Privacy for Hierarchical Clustering — Chengyuan Deng et al.

- Operationalizing Contextual Integrity in Privacy-Conscious Assistants — Sahra Ghalebikesabi et al. (Google DeepMind)

- PREAMBLE: Private and Efficient Aggregation via Block Sparse Vectors — Hilal Asi, Vitaly Feldman, Hannah Keller, Guy N. Rothblum, Kunal Talwar

- Privacy of Noisy Stochastic Gradient Descent: More Iterations without More Privacy Loss — Jason Altschuler and Kunal Talwar

- Privately Estimating a Single Parameter — John Duchi, Hilal Asi, and Kunal Talwar

- Scalable Private Search with Wally — Hilal Asi et al.

- Shifted Composition I: Harnack and Reverse Transport Inequalities — Jason Altschuler and Sinho Chewi

- Shifted Interpolation for Differential Privacy — Jinho Bok, Weijie Su, and Jason Altschuler

- Tractable Agreement Protocols — Natalie Collina et al.

- Tukey Depth Mechanisms for Practical Private Mean Estimation — Gavin Brown and Lydia Zakynthinou

- User Inference Attacks on Large Language Models — Nikhil Kandpal et al.

- Universally Instance-Optimal Mechanisms for Private Statistical Estimation — Hilal Asi et al.

- “What do you want from theory alone?” Experimenting with Tight Auditing of Differentially Private Synthetic Data Generation — Meenatchi Sundaram Muthu Selva Annamalai et al.

The workshop also credited many contributors, including Hilal Asi, Anthony Chivetta, Vitaly Feldman, Haris Mughees, Martin Pelikan, Rehan Rishi, Guy Rothblum, and Kunal Talwar.
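Several titles in the list (continual histograms, continual observation, noise generation for streaming differential privacy) concern releasing a running statistic after every update. A minimal sketch of the classical binary (tree) mechanism for a running count follows. It is the textbook baseline that such work improves on, not the mechanism from any listed paper. It assumes stream values in [0, 1], a horizon known in advance, event-level privacy, and hypothetical class and method names.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


class BinaryTreeCounter:
    """Binary (tree) mechanism: eps-DP running counts over a stream of values in [0, 1]."""

    def __init__(self, horizon: int, epsilon: float, rng: random.Random):
        if horizon < 1 or epsilon <= 0:
            raise ValueError("horizon must be >= 1 and epsilon > 0")
        self.horizon = horizon
        self.levels = horizon.bit_length()  # one dyadic level per bit of t <= horizon
        # Each item falls in at most one released block per level, so the L1
        # sensitivity of all released partial sums together is `levels`.
        self.scale = self.levels / epsilon
        self.rng = rng
        self.exact = [0.0] * self.levels  # exact partial sums, one per level
        self.noisy = [0.0] * self.levels  # their noisy releases
        self.t = 0

    def update(self, x: float) -> float:
        """Add x (in [0, 1]) and return the noisy sum of all values seen so far."""
        if not 0.0 <= x <= 1.0:
            raise ValueError("stream values must lie in [0, 1]")
        if self.t >= self.horizon:
            raise RuntimeError("stream is longer than the declared horizon")
        self.t += 1
        i = (self.t & -self.t).bit_length() - 1  # index of the lowest set bit of t
        # The new level-i block merges every lower-level block plus the new item.
        self.exact[i] = sum(self.exact[:i]) + x
        for j in range(i):
            self.exact[j] = 0.0
            self.noisy[j] = 0.0
        self.noisy[i] = self.exact[i] + laplace_noise(self.scale, self.rng)
        # The set bits of t select disjoint dyadic blocks that exactly cover steps 1..t.
        return sum(self.noisy[j] for j in range(self.levels) if (self.t >> j) & 1)


if __name__ == "__main__":
    counter = BinaryTreeCounter(horizon=8, epsilon=1.0, rng=random.Random(0))
    for bit in [1, 0, 1, 1, 0, 1, 1, 1]:
        print(round(counter.update(bit), 2))
```

Each released count sums at most `levels` noisy blocks, so the error grows only polylogarithmically in the horizon, whereas adding fresh noise to every prefix sum would force the noise scale to grow linearly with it.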

Key takeaways

- Privacy-preserving ML remains an active area bridging theory and systems work.

- Differential privacy foundations and amplification techniques continue to be important for practical deployments.

- Attacks and auditing remain central: understanding extraction and inference risks is necessary when deploying personalized or foundation-model–based services.

- Foundation-model APIs are being explored as tools to produce differentially private synthetic data for images and text.

- Community events like PPML help surface both theoretical advances and practical system-level research.
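The amplification and composition points can be illustrated with two classical bounds. Running an (ε, δ)-DP step on a Poisson subsample, where each record is included independently with probability q, is (ln(1 + q(e^ε − 1)), qδ)-DP under add/remove neighbors. Basic composition then adds the per-step costs. This is the well-known subsampling result, not the random-allocation scheme from Vitaly Feldman's talk. Basic composition is used only because it is the simplest accountant, and the function names are assumptions.

```python
import math


def amplify_by_poisson_subsampling(epsilon: float, delta: float, q: float) -> tuple[float, float]:
    """Privacy of an (epsilon, delta)-DP step run on a Poisson subsample with rate q.

    Classical bound for add/remove neighbors:
        eps' = ln(1 + q * (e^eps - 1)),   delta' = q * delta.
    """
    if not 0.0 < q <= 1.0:
        raise ValueError("sampling rate q must be in (0, 1]")
    if epsilon < 0 or delta < 0:
        raise ValueError("epsilon and delta must be non-negative")
    # log1p/expm1 keep the result accurate when q * (e^eps - 1) is tiny.
    return math.log1p(q * math.expm1(epsilon)), q * delta


def basic_composition(per_step: tuple[float, float], steps: int) -> tuple[float, float]:
    """Basic composition: running `steps` mechanisms adds their epsilons and deltas."""
    eps, delta = per_step
    return steps * eps, steps * delta


if __name__ == "__main__":
    step = amplify_by_poisson_subsampling(epsilon=1.0, delta=1e-7, q=0.01)
    print(step)
    print(basic_composition(step, steps=1000))
```

With ε = 1 per step and q = 0.01, each step costs about 0.017, and 1,000 steps under basic composition total about 17. Advanced composition theorems give a considerably smaller total for this many steps, which is why practical deployments pair amplification with tighter accountants.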

FAQ

What was PPML 2025?

PPML 2025 was a two-day hybrid Workshop on Privacy-Preserving Machine Learning hosted by Apple to discuss the state of the art in privacy-preserving ML.

What were the main focus areas?

The workshop focused on Private Learning and Statistics; Attacks and Security; Differential Privacy Foundations; and Foundation Models and Privacy.

Are recordings or materials available?

Apple shared recordings of selected talks and a recap of publications on its Machine Learning Research site; see References for the link.

Who contributed to the workshop?

Presenters included researchers from Apple, academic institutions, and other industry labs. Many contributors are listed on the workshop page.

How does this relate to product development?

The workshop discussed practical challenges and theoretical foundations that are relevant to designing privacy-conscious AI systems and assessing privacy risks.

References

- Workshop page and recordings: https://machinelearning.apple.com/updates/ppml-2025
