Maximize Robotics Performance with Post-Training NVIDIA Cosmos Reason

Sources: https://developer.nvidia.com/blog/maximize-robotics-performance-by-post-training-nvidia-cosmos-reason, developer.nvidia.com

TL;DR

- NVIDIA introduced Cosmos Reason at GTC 2025 as an open, fully customizable reasoning vision-language model (VLM) for physical AI and robotics (NVIDIA blog).

- The system converts video into tokens with a vision encoder and a projector, combines those tokens with a text prompt, and passes the result to a core model that draws on a mix of LLM modules to reason step by step.

- Fine-tuning on physical AI tasks boosts the base model by over 10%; reinforcement learning adds another ~5% gain, reaching a 65.7 average score across robotics and autonomous-vehicle benchmarks.

- Developers can download checkpoints from Hugging Face and obtain inference scripts and post-training resources from GitHub; Cosmos Reason is optimized for NVIDIA GPUs and can run from edge to cloud via Docker or native environments.

Context and background

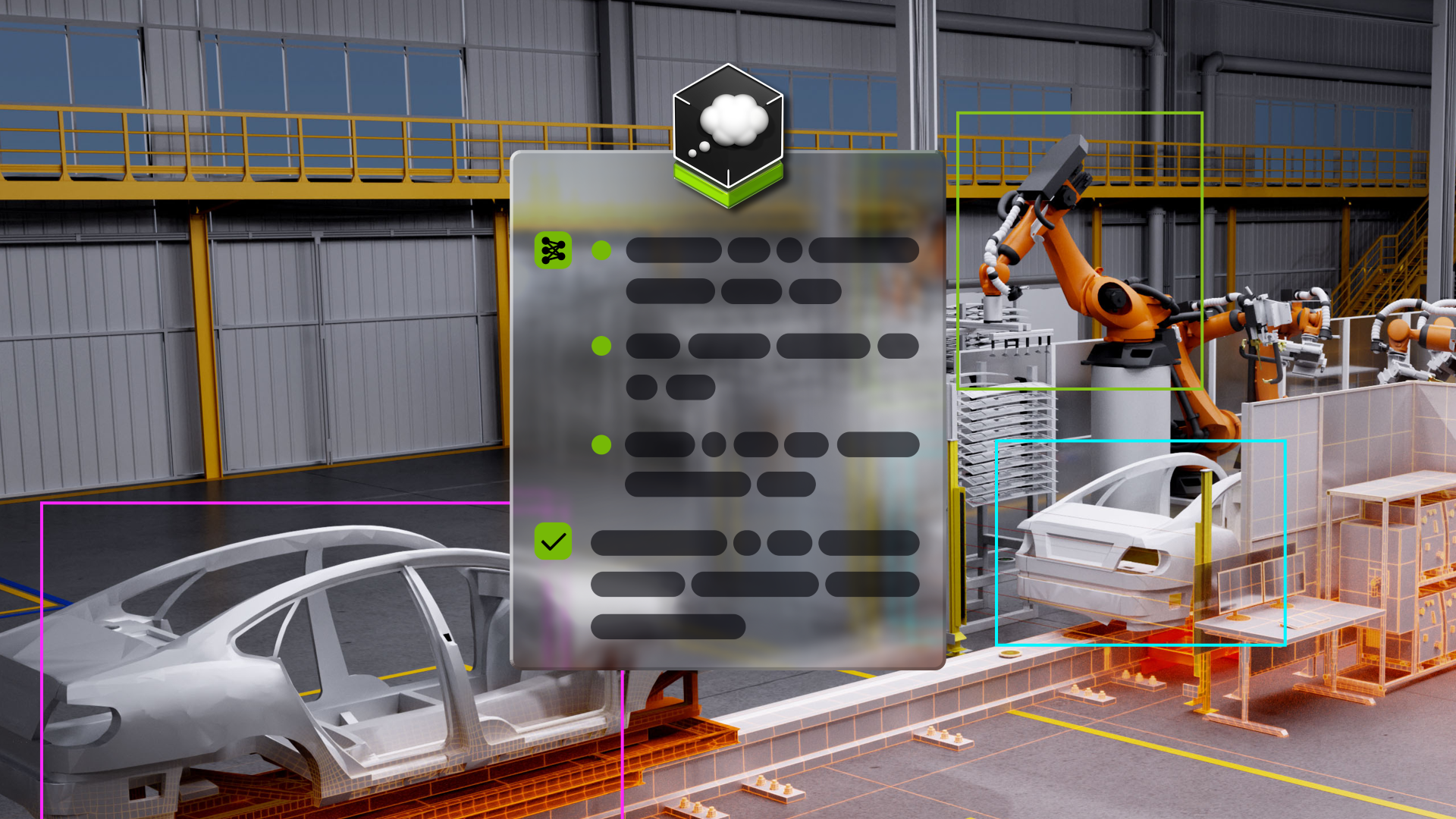

NVIDIA describes Cosmos Reason as an open, fully customizable reasoning VLM for real-world physical AI and robotics. It lets robots and vision AI agents draw on prior knowledge, physics understanding, and common sense to perceive, interpret, and act in the real world.

Given a video and a text prompt, the system converts the video into tokens using a vision encoder and a translator module called a projector. These video tokens are combined with the text prompt and fed into the core model, which uses a mix of large language model (LLM) modules and techniques to reason step by step and deliver detailed, logical responses. Cosmos Reason is built with supervised fine-tuning and reinforcement learning to bridge multimodal perception and real-world decision-making, and its chain-of-thought reasoning lets it understand world dynamics without human annotations. NVIDIA positions it as a path toward physical AI agents that can reason about actions in physical environments. This overview draws on NVIDIA's blog and presentation materials announcing Cosmos Reason.

What’s new

Cosmos Reason introduces several notable advancements:

- Post-training refinements: Fine-tuning on physical AI tasks boosts the base model by over 10%, and reinforcement learning adds roughly 5% more gain. This combination yields a 65.7 average score on key robotics and autonomous-vehicle benchmarks.

- Multimodal reasoning pipeline: Given a video and a text prompt, the system converts video frames to tokens via a vision encoder and a projector, merges them with the prompt, and processes them through a core model that uses multiple LLM modules to reason step-by-step.

- Chain-of-thought capability: The model employs step-by-step reasoning to understand world dynamics without relying on additional human annotations, enabling clearer explanations and more reliable decision-making.

- Accessibility and deployment: Developers can download model checkpoints from Hugging Face and access inference scripts and post-training resources from GitHub. The model accepts videos at varying resolutions and frame rates, guided by a text prompt that communicates the developer’s intent. A prompt upsampler is available to enhance prompt quality.

- Task coverage: Fine-tuning on robotics-specific tasks (e.g., VQA on robotics scenarios using the robovqa dataset) demonstrates improved performance in targeted applications.

Why it matters (impact for developers/enterprises)

Cosmos Reason matters because it bridges perception and decision-making in physical environments, enabling more capable autonomous robotics and vision AI agents. By combining supervised fine-tuning with reinforcement learning, the model improves performance on tasks that require real-world reasoning, such as robotics visual question answering. The ability to deploy from edge to cloud and to run on industry-standard NVIDIA GPUs makes it practical for enterprises seeking scalable, hardware-accelerated AI solutions for robotics, automated inspection, logistics, and autonomous operations. The openness of the model, along with downloadable checkpoints and open inference scripts, lowers barriers for researchers and developers to experiment, customize, and integrate advanced reasoning into real-world robotic systems.

Technical details and implementation

Video processing and multimodal input

Cosmos Reason processes a video input by first converting its frames into tokens with a vision encoder. A projector then maps the encoder's output into the representation used by the model's language components, so visual and text tokens can be processed together. These video tokens are combined with a text prompt, which guides the model's reasoning.
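The following is a toy sketch of the encode, project, and fuse steps, using only the standard library. It illustrates the data flow under stated assumptions and does not reproduce Cosmos Reason's architecture. The hypothetical `encode_frame` stands in for a learned vision encoder by pooling pixel patches into small feature vectors. `project` is a single linear layer standing in for the projector. `fuse` places the projected video tokens ahead of the text-prompt embeddings.

```python
from typing import List

Matrix = List[List[float]]  # rows of feature vectors
Frame = List[List[float]]   # one grayscale frame: rows of pixel intensities


def encode_frame(frame: Frame, patch: int) -> Matrix:
    """Hypothetical stand-in for a vision encoder: one [mean, max] feature per patch."""
    height, width = len(frame), len(frame[0])
    feats: Matrix = []
    for r in range(0, height, patch):
        for c in range(0, width, patch):
            vals = [frame[i][j]
                    for i in range(r, min(r + patch, height))
                    for j in range(c, min(c + patch, width))]
            feats.append([sum(vals) / len(vals), max(vals)])
    return feats


def project(feats: Matrix, weight: Matrix) -> Matrix:
    """Hypothetical projector: a linear map from encoder dim to the LLM embedding dim."""
    out_dim = len(weight[0])
    return [[sum(f[k] * weight[k][d] for k in range(len(f))) for d in range(out_dim)]
            for f in feats]


def fuse(video: List[Frame], text_embeds: Matrix, weight: Matrix, patch: int = 2) -> Matrix:
    """Encode and project every frame, then place the video tokens before the text tokens."""
    video_tokens: Matrix = []
    for frame in video:
        video_tokens.extend(project(encode_frame(frame, patch), weight))
    return video_tokens + text_embeds


if __name__ == "__main__":
    frame = [[float(i + j) for j in range(4)] for i in range(4)]  # 4x4 gradient frame
    weight = [[1.0, 0.0, 0.5],   # encoder dim 2 -> embedding dim 3
              [0.0, 1.0, 0.5]]
    text = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # two toy text-token embeddings
    seq = fuse([frame, frame], text, weight)
    print(len(seq), "tokens of dim", len(seq[0]))
```

With two 4×4 frames and 2×2 patches, each frame yields four video tokens. The fused sequence therefore holds eight video tokens followed by two text tokens, all in the same embedding dimension. Placing video tokens first is a choice made for this sketch; real models may interleave them with special marker tokens.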

Core model and reasoning architecture

The core model combines LLM modules and techniques to perform step-by-step reasoning. It "thinks" in a chain-of-thought style, producing detailed, logical responses that reflect an understanding of physical dynamics and environmental constraints. This helps the model reason about actions before executing them, as real-world decision-making requires.
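Because the model reasons step by step before answering, an application usually needs to separate the reasoning trace from the final answer. The sketch below assumes the response wraps its reasoning in `<think>` tags and its answer in `<answer>` tags. We believe the Cosmos Reason model card uses this convention in its prompt templates, but you should confirm it against the current card before relying on it. The `split_reasoning` helper and `ReasonedResponse` type are hypothetical.

```python
import re
from typing import NamedTuple, Optional

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


class ReasonedResponse(NamedTuple):
    reasoning: Optional[str]  # chain-of-thought text, if the model emitted any
    answer: str               # final answer shown to the user or robot controller


def split_reasoning(text: str) -> ReasonedResponse:
    """Separate the chain-of-thought from the final answer (assumed tag format).

    If no <answer> tag is present, whatever follows the <think> block is treated
    as the answer; if neither tag is present, the whole text is the answer.
    """
    think = THINK_RE.search(text)
    answer = ANSWER_RE.search(text)
    reasoning = think.group(1).strip() if think else None
    if answer:
        final = answer.group(1).strip()
    elif think:
        final = text[think.end():].strip()
    else:
        final = text.strip()
    return ReasonedResponse(reasoning, final)
```

Keeping the reasoning separate lets a downstream system log or audit the explanation while acting only on the final answer.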

Training regimen

Cosmos Reason is built with a combination of supervised fine-tuning (SFT) and reinforcement learning (RL). SFT on physical AI tasks lifts general capability, and RL aimed at real-world decision-making refines performance further. The design emphasizes bridging multimodal perception and practical action in the physical world.
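As a rough illustration of the RL stage, this sketch pairs a rule-based, verifiable reward with group-relative advantages in the style of GRPO (Group Relative Policy Optimization). This is a common recipe for RL on reasoning models. It is not NVIDIA's training code. The `<think>`/`<answer>` tags, the reward weights (1.0 for a correct answer, 0.5 for the expected format), and the function names are all assumptions. Only the reward and advantage computation is shown; the policy-gradient update itself is omitted.

```python
import re
from statistics import mean, pstdev
from typing import List

ANSWER_RE = re.compile(r"<answer>\s*(.*?)\s*</answer>", re.DOTALL)
FORMAT_RE = re.compile(r"^\s*<think>.*?</think>\s*<answer>.*?</answer>\s*$", re.DOTALL)


def reward(response: str, gold: str) -> float:
    """Hypothetical rule-based reward: +1.0 for a correct answer, +0.5 for the expected format."""
    r = 0.0
    m = ANSWER_RE.search(response)
    if m and m.group(1).strip().upper() == gold.strip().upper():
        r += 1.0  # accuracy term: answer matches the reference (case-insensitive)
    if FORMAT_RE.match(response):
        r += 0.5  # format term: reasoning block followed by an answer block
    return r


def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: normalize each reward against its own sampling group.

    eps avoids division by zero when every response in the group scores the same.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]
```

Responses that beat their group's average receive positive advantages, and the rest receive negative ones. This pushes the policy toward answers that are both correct and well formatted, without a separate learned value model.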

Prompt handling and inference tools

The model accepts video input at various resolutions and frame rates, together with a user-provided text prompt that expresses the developer's intent (for example, a question or a request for an explanation). A prompt upsampler is available to improve the quality of the prompts that guide the model's reasoning. NVIDIA's materials include representative inference snippets.
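Because the model accepts video at different frame rates, a client typically resamples frames to a fixed rate and pairs the clip with the prompt in a chat-style request. This is a minimal sketch under assumptions. The helper names are hypothetical, and the message layout (keys `type`, `video`, `fps`, `text`) follows a Qwen2.5-VL-style convention that may not match Cosmos Reason exactly. Check NVIDIA's inference scripts on GitHub for the real format.

```python
from typing import Dict, List


def sample_frame_indices(num_frames: int, source_fps: float, target_fps: float,
                         max_frames: int) -> List[int]:
    """Pick evenly spaced frame indices approximating target_fps, capped at max_frames."""
    if num_frames <= 0 or source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame count and rates must be positive")
    duration = num_frames / source_fps                      # clip length in seconds
    wanted = max(1, min(max_frames, round(duration * target_fps)))
    step = num_frames / wanted                              # source frames per kept frame
    return [min(num_frames - 1, int(i * step)) for i in range(wanted)]


def build_request(video_path: str, prompt: str, fps: float) -> List[Dict]:
    """Hypothetical chat-style request pairing a video with the developer's prompt."""
    return [{
        "role": "user",
        "content": [
            {"type": "video", "video": video_path, "fps": fps},
            {"type": "text", "text": prompt},
        ],
    }]
```

Capping the frame count bounds the number of video tokens, and therefore memory and latency, for long clips. The trade-off is sparser temporal coverage.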

Deployment and runtime options

Cosmos Reason is optimized for NVIDIA GPUs and supports edge-to-cloud pipelines. Developers can set up a Docker environment or run the model in their own environment. For vision AI pipelines, deployment targets include NVIDIA DGX Spark, NVIDIA RTX PRO 6000, NVIDIA H100 Tensor Core GPUs, and NVIDIA Blackwell GB200 NVL72 on NVIDIA DGX Cloud. Documentation and tutorials in NVIDIA's Cosmos resources cover implementation and practical use cases.
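For the Docker route, a small helper can assemble the `docker run` invocation. This sketch relies on several assumptions. The image name, mount paths, and the command run inside the container are placeholders rather than NVIDIA's published setup. `--gpus all` is Docker's standard flag for exposing NVIDIA GPUs and requires the NVIDIA Container Toolkit on the host.

```python
import shlex
from typing import List, Sequence


def docker_run_cmd(image: str, host_dir: str, command: Sequence[str],
                   container_dir: str = "/workspace") -> List[str]:
    """Build a `docker run` argument list that exposes every NVIDIA GPU to the container."""
    return [
        "docker", "run", "--rm",              # remove the container when it exits
        "--gpus", "all",                      # needs the NVIDIA Container Toolkit
        "-v", f"{host_dir}:{container_dir}",  # mount checkpoints/scripts from the host
        "-w", container_dir,                  # start in the mounted directory
        image,
        *command,
    ]


if __name__ == "__main__":
    # Placeholder image and script names (hypothetical); substitute your own.
    cmd = docker_run_cmd("my-cosmos-reason:latest", "/data/cosmos",
                         ["python", "my_inference.py"])
    print(shlex.join(cmd))
    # To actually launch it: subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell-quoting problems when paths contain spaces.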

Fine-tuning and demonstrations

Beyond the base capabilities, fine-tuning on robotics-specific datasets such as robovqa has improved performance on robotics visual question answering. NVIDIA notes that further details and fine-tuning scripts are available on GitHub.
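Fine-tuning on a VQA dataset starts by converting each record into the conversation format the training scripts expect. The sketch below assumes a hypothetical record with a video path, question, and answer, and uses a chat-style message schema. Check NVIDIA's post-training scripts on GitHub for the actual robovqa preprocessing and field names. Optionally wrapping a reference rationale in `<think>` tags is also an assumption, included to show how reasoning traces could be supervised.

```python
from typing import Dict, List, Optional


def to_sft_sample(video_path: str, question: str, answer: str,
                  reasoning: Optional[str] = None) -> Dict[str, List[Dict]]:
    """Turn one VQA record into a chat-style SFT example (hypothetical schema)."""
    # With a rationale, supervise both the reasoning trace and the final answer.
    target = answer if reasoning is None else (
        f"<think>\n{reasoning}\n</think>\n\n<answer>\n{answer}\n</answer>")
    return {
        "messages": [
            {"role": "user", "content": [
                {"type": "video", "video": video_path},
                {"type": "text", "text": question},
            ]},
            {"role": "assistant", "content": [{"type": "text", "text": target}]},
        ]
    }
```

During SFT, the loss is typically computed only on the assistant turn, so the model learns to produce the answer (and any reasoning) given the video and question.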

Key takeaways

- Cosmos Reason is an open, customizable VLM designed for physical AI and robotics.

- It integrates vision and language through a token-based pipeline and a reasoning core built from LLM modules.

- Post-training refinements (SFT + RL) yield measurable performance gains (>10% + ~5%).

- The model accepts video at diverse resolutions and frame rates and is deployable from edge to cloud on NVIDIA GPUs.

- Resources (checkpoints, inference scripts, post-training) are available via Hugging Face and GitHub.

FAQ

- What is Cosmos Reason primarily designed for?

  It is an open, fully customizable reasoning vision-language model for physical AI and robotics that reasons with prior knowledge, physics, and common sense.

- How does the system process video and text prompts?

  The video is tokenized by a vision encoder and projector, combined with a text prompt, and passed through a core model built from LLM modules that reasons step by step.

- What training approaches improve performance?

  Supervised fine-tuning on physical AI tasks and reinforcement learning both contribute gains, with a reported 65.7 average score on robotics and autonomous-vehicle benchmarks.

- Where can developers access Cosmos Reason resources?

  Model checkpoints are available on Hugging Face, and inference and post-training resources are on GitHub, with NVIDIA providing deployment guidance.

- On which hardware can Cosmos Reason run?

  It is optimized for NVIDIA GPUs and can run in Docker or native environments, including edge-to-cloud deployments on DGX systems and other NVIDIA GPUs.
