Anthropic’s Claude Can Now Remember Past Conversations Across Devices

Sources: https://www.theverge.com/news/757743/anthropic-claude-ai-search-past-chats, theverge.com

TL;DR

- Claude can now search and reference past conversations on demand across web, desktop, and mobile.

- The memory feature is rolling out to Claude’s Max, Team, and Enterprise plans, with a toggle under Settings > Profile > Search and reference chats.

- It does not create a persistent user profile; Claude only retrieves past chats when asked and can keep separate projects/workspaces distinct.

- The rollout signals ongoing competition with OpenAI on memory-like capabilities and workflow stickiness.

Context and background

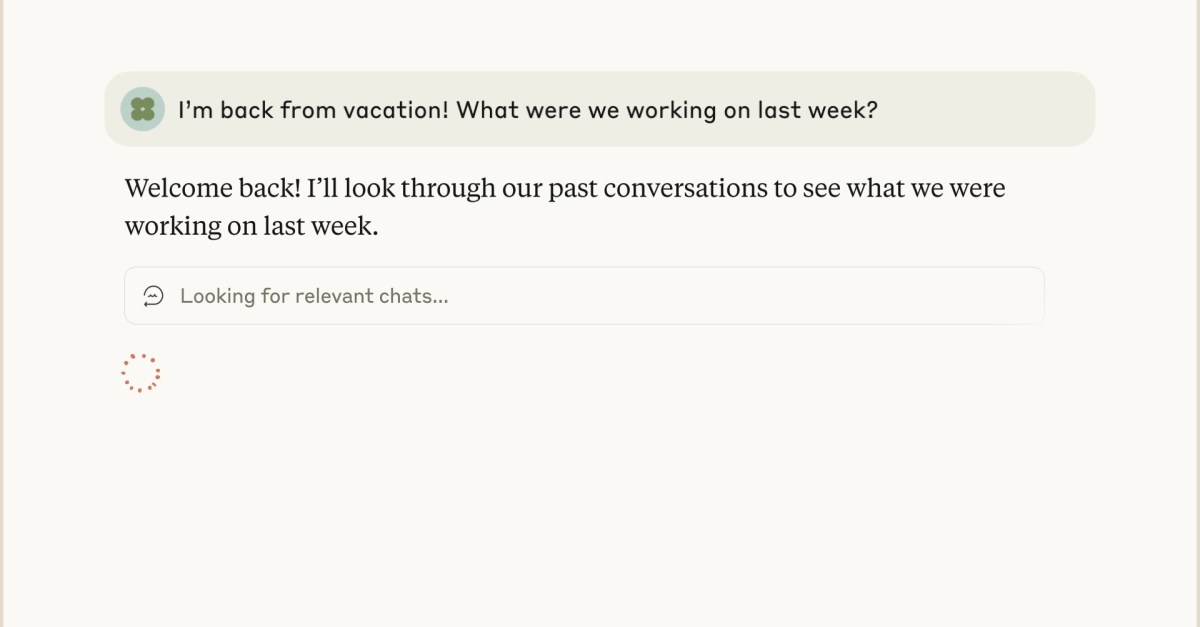

Anthropic has introduced a memory function for its Claude chatbot that lets it access and summarize prior chats when the user requests it. The capability was demonstrated in a YouTube video in which a user asks Claude what they had been discussing before leaving for a vacation. Claude searches through past conversations, reads and summarizes them, and offers to continue working on the same project. The company has framed the feature as a way to keep projects on track and reduce the need to restart work from scratch.

The memory feature works across Claude’s web, desktop, and mobile experiences and is designed to keep different projects and workspaces separate. The update began rolling out to Claude’s Max, Team, and Enterprise subscription tiers, with other plans expected to gain access soon.

The change aligns with a broader industry trend in which major AI players compete over memory, context, and user engagement features. How AI systems reference users’ past conversations has become a point of both contention and interest. In parallel, other major players have pushed related capabilities such as voice modes and larger context windows as they seek to attract and retain users on a single chatbot service. For context, OpenAI recently announced GPT-5 and has been cited as a competing force in the race to add memory-like features. Memory and continuity capabilities matter most in enterprise workflows, where keeping track of projects and prior discussions can significantly affect productivity and collaboration.

What’s new

Claude’s new memory feature lets the assistant retrieve past chats on demand and summarize them for ongoing work. It is designed to help users reference prior discussions, revisit decisions, and continue projects without starting from scratch. It operates across Claude’s web, desktop, and mobile interfaces and can distinguish between multiple projects and workspaces, so a user can keep different lines of work separate.

The feature is currently rolling out to Claude’s Max, Team, and Enterprise plans. To enable it, go to Settings > Profile and switch on the option labeled Search and reference chats. The company indicated that other plans would receive access soon, signaling a staged rollout.

An important caveat accompanies the feature: it is not a fully persistent memory. Claude retrieves and references past chats only when the user explicitly asks for them, and it does not construct a lasting profile of the user. This aligns with Anthropic’s stance that memory is opt-in and controlled by the user, not an automatic background process.

The release feeds into a broader narrative about how AI systems balance memory, privacy, and productivity. It also underscores the competitive dynamic between Anthropic and OpenAI, where memory, context, and related features are part of an arms race for developer attention and enterprise adoption.

Why it matters (impact for developers/enterprises)

For developers and enterprises, Claude’s memory feature represents a step toward more seamless project workflows. By keeping project histories separate and offering a simple toggle in settings, it can help teams reduce context-switching and improve continuity across sessions and devices. The ability to separate workspaces is especially relevant for organizations that juggle multiple projects with different collaborators and data. The on-demand retrieval model also addresses privacy concerns by avoiding automatic long-term profiling, something many enterprises monitor closely when evaluating AI tools.

As companies weigh toolchains and AI services, memory features that improve “stickiness” (the tendency of users to return to a single platform) are increasingly valued. Memory in this context is about continuity and reference, not lifelong profiling. The Verge notes that both Anthropic and OpenAI are racing to offer capabilities that keep users within a single chatbot ecosystem for longer periods, which can translate into higher engagement and more predictable workflows for teams.

Technical details or Implementation

How it works

- Claude retrieves and references past chats only when the user asks for them. It does not automatically build a user profile or remember preferences outside of explicit requests.

- The memory feature is designed to support multiple projects or workspaces, with clear separation so one project’s history isn’t conflated with another’s.

- The retrieval is an on-demand operation, meaning users control when past conversations are used to inform current work.
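The three behaviors above (an opt-in toggle, explicit on-demand lookup, and workspace separation) can be illustrated with a toy model. This is only a conceptual sketch and not Anthropic's implementation or API: the `Chat` and `ChatHistory` classes, the `search_enabled` flag, the workspace labels, and the substring matching are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Chat:
    workspace: str  # hypothetical project/workspace label
    text: str


@dataclass
class ChatHistory:
    """Toy store of past chats; purely illustrative."""
    # Stands in for the "Search and reference chats" toggle (assumed name).
    search_enabled: bool = False
    chats: list[Chat] = field(default_factory=list)

    def add(self, workspace: str, text: str) -> None:
        self.chats.append(Chat(workspace, text))

    def search(self, workspace: str, query: str) -> list[str]:
        """Return matching chats from one workspace.

        Nothing is looked up unless this method is called explicitly,
        and nothing is returned while the toggle is off.
        """
        if not self.search_enabled:
            return []
        q = query.lower()
        return [
            c.text
            for c in self.chats
            if c.workspace == workspace and q in c.text.lower()
        ]


history = ChatHistory(search_enabled=True)
history.add("website-redesign", "Agreed to ship the new landing page in March.")
history.add("budget-review", "Landing page spend is capped for Q2.")

# Only the requested workspace is searched, so the budget chat is not returned.
results = history.search("website-redesign", "landing page")
print(results)
```

In this sketch, the lookup is scoped to a single workspace and happens only inside `search`, which mirrors the article's point that past chats are referenced on request instead of being folded into a standing user profile.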

Availability and enablement

- The feature began rolling out to Claude’s Max, Team, and Enterprise plans. Other plans are slated to gain access soon.

- Activation is via Settings > Profile > Search and reference chats, allowing users to switch the feature on or off.

- The feature is accessible across web, desktop, and mobile interfaces.

Implementation notes for developers

- The memory feature is opt-in and user-controlled, aligning with privacy and security considerations common in enterprise deployments.

- There is a clear emphasis on workspace isolation, so different projects remain distinct in Claude’s memory context.

- As a non-persistent memory feature, it contrasts with fully persistent user profiles and long-term profiling approaches.

Table: key facts at a glance

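| Aspect | Description |
| --- | --- |
| Memory behavior | Retrieves and summarizes past chats only when the user asks; does not build a persistent user profile |
| Availability | Rolling out to Max, Team, and Enterprise plans; other plans expected soon |
| Platform support | Web, desktop, and mobile |
| Enablement | Settings > Profile > Search and reference chats |
| Workspace handling | Keeps different projects and workspaces separate |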

Key takeaways

- Claude’s memory feature is opt-in and context-specific, designed to reference past conversations on demand.

- The feature supports cross-platform usage and explicit workspace separation, signaling workflow-focused use in enterprises.

- It marks a continuing trend in the AI industry toward memory-like capabilities to improve continuity and engagement while addressing privacy concerns.

FAQ

- What exactly can Claude remember?

  Claude can retrieve and summarize past chats when asked, and it can keep multiple projects or workspaces separate.

- Is memory automatic or persistent?

  Neither. Claude does not create a user profile and retrieves past chats only when the user asks for them.

- How do I enable memory for Claude?

  Go to Settings > Profile > Search and reference chats and toggle the feature on.
