OpenAI Introduces Safe-Completions in GPT-5

Source: https://openai.com/index/gpt-5-safe-completions

TL;DR

- GPT-5 introduces safe-completions, a safety-training approach designed to maximize model helpfulness within safety boundaries.

- The method offers a nuanced alternative to traditional refusal-based training, particularly for dual-use prompts where intent is unclear.

- In evaluations, GPT-5 Thinking is reported to be safer than OpenAI o3 and, among safe responses, more helpful on average.

- The approach avoids a binary comply/refuse stance, instead guiding the model to provide safety-conscious, high-level guidance when appropriate.

- OpenAI aims to continue advancing safe-completions to handle increasingly complex safety challenges while preserving usefulness for users.

Context and background

OpenAI's GPT-5 introduces a safety-training approach called safe-completions, designed to improve both the safety and the usefulness of responses, especially for dual-use prompts where user intent is ambiguous. A dual-use question is one whose answer could serve harmless or harmful ends depending on how the information is used. Traditional safety training relied on a binary choice between complying and refusing. That works well for clearly harmful prompts but struggles with prompts about fireworks, biology, or cybersecurity, where intent may be unclear. Take fireworks: a detailed, helpful answer could be dangerous if the user intends harm, while a refusal fails a user with a legitimate need. Biology and cybersecurity pose the same dilemma in higher-risk domains. This tension is why OpenAI pursued a more nuanced approach to safety in GPT-5. Source: OpenAI GPT-5 Safe Completions.

What’s new

Safe-completions is a new form of safety training that teaches the model to be as helpful as possible while staying within safety boundaries. Traditional refusal-based training makes a binary comply/refuse decision based on the perceived harm of a prompt; safe-completions instead aims to produce the most useful response that remains safe. OpenAI has implemented the approach in both the reasoning and chat models of GPT-5.

The training relies on two parameters that govern how the model balances safety against usefulness in dual-use scenarios. Rather than drawing a boundary based solely on the user's input, it centers on the safety of the model's output. OpenAI reports that when the model does make mistakes, safe-completion training reduces the severity of the resulting unsafe outputs compared with older, refusal-trained models. In comparisons with OpenAI o3, GPT-5 Thinking (the reasoning variant of GPT-5) scored higher on safety and, among safe responses, higher on average helpfulness. The approach is particularly well suited to dual-use questions, which rigid refusal policies handle poorly.

The post places this work in a broader research trajectory toward safer, more capable AI systems and contrasts it with earlier approaches such as the Rule-Based Rewards used during GPT-4 development. Publication: Aug 22, 2025; Release: Aug 7, 2025. Source: OpenAI GPT-5 Safe Completions.
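The two-parameter, output-centric idea described above can be made concrete with a toy reward function. This is a sketch under stated assumptions, not OpenAI's implementation: it assumes the two parameters amount to (1) a penalty on policy-violating outputs that grows with severity and (2) a reward for helpfulness on safe outputs. The names `GradedOutput`, `safe_completion_reward`, `refusal_style_reward`, and `severity_weight`, the 0-to-1 scales, and the candidate scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GradedOutput:
    """A candidate response as scored by a hypothetical grader."""
    violates_policy: bool  # does the *output* break a safety policy?
    severity: float        # 0.0 (negligible) .. 1.0 (severe); used only if violating
    helpfulness: float     # 0.0 (useless) .. 1.0 (fully meets a legitimate need)


def safe_completion_reward(out: GradedOutput, severity_weight: float = 2.0) -> float:
    """Assumed output-centric reward; NOT OpenAI's published formula.

    Assumed parameter 1 (safety): unsafe outputs get a penalty that grows with severity.
    Assumed parameter 2 (helpfulness): safe outputs are rewarded for being useful.
    The user's prompt is not an argument: only the response is judged.
    """
    if out.violates_policy:
        return -severity_weight * out.severity
    return out.helpfulness


def refusal_style_reward(prompt_flagged: bool, refused: bool) -> float:
    """Simplified binary, input-centric scheme, shown for contrast.

    Returns 1.0 when the comply/refuse decision matches the prompt's label,
    ignoring how helpful or how harmful the response content actually is.
    """
    return 1.0 if refused == prompt_flagged else 0.0


# Three hypothetical answers to an ambiguous fireworks question (scores invented).
candidates = {
    "hard refusal": GradedOutput(violates_policy=False, severity=0.0, helpfulness=0.1),
    "high-level safety guidance": GradedOutput(violates_policy=False, severity=0.0, helpfulness=0.7),
    "step-by-step ignition details": GradedOutput(violates_policy=True, severity=0.9, helpfulness=0.9),
}

for name, out in candidates.items():
    print(f"{name}: {safe_completion_reward(out):+.2f}")

# Pick the candidate with the highest assumed reward.
best = max(candidates, key=lambda name: safe_completion_reward(candidates[name]))
print("preferred:", best)
```

Under this assumed reward, the detailed answer scores worst despite being the most "helpful," because its severity penalty outweighs its usefulness, and the high-level answer beats the bare refusal. A binary scheme like `refusal_style_reward` cannot express that distinction, since it only checks whether the comply/refuse decision matched the prompt's label.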

Why it matters (impact for developers/enterprises)

For developers and enterprises building on or integrating large language models, safe-completions offers a way to balance safety with usefulness in real-world tasks. Because GPT-5 is not limited to a strict comply/refuse choice, it can handle ambiguous prompts more intelligently, reducing unnecessary refusals while keeping harmful output in check. This matters for product design, customer-support tools, and enterprise automation, where dual-use queries can arise in educational content, research, or demonstrations. The aim is a better user experience through high-quality, context-appropriate guidance without weakening safety standards. If the reported gains in safety and helpfulness hold in practice, applications built on GPT-5 could see more reliable responses in settings that demand nuanced judgment and risk management.

Technical details and implementation

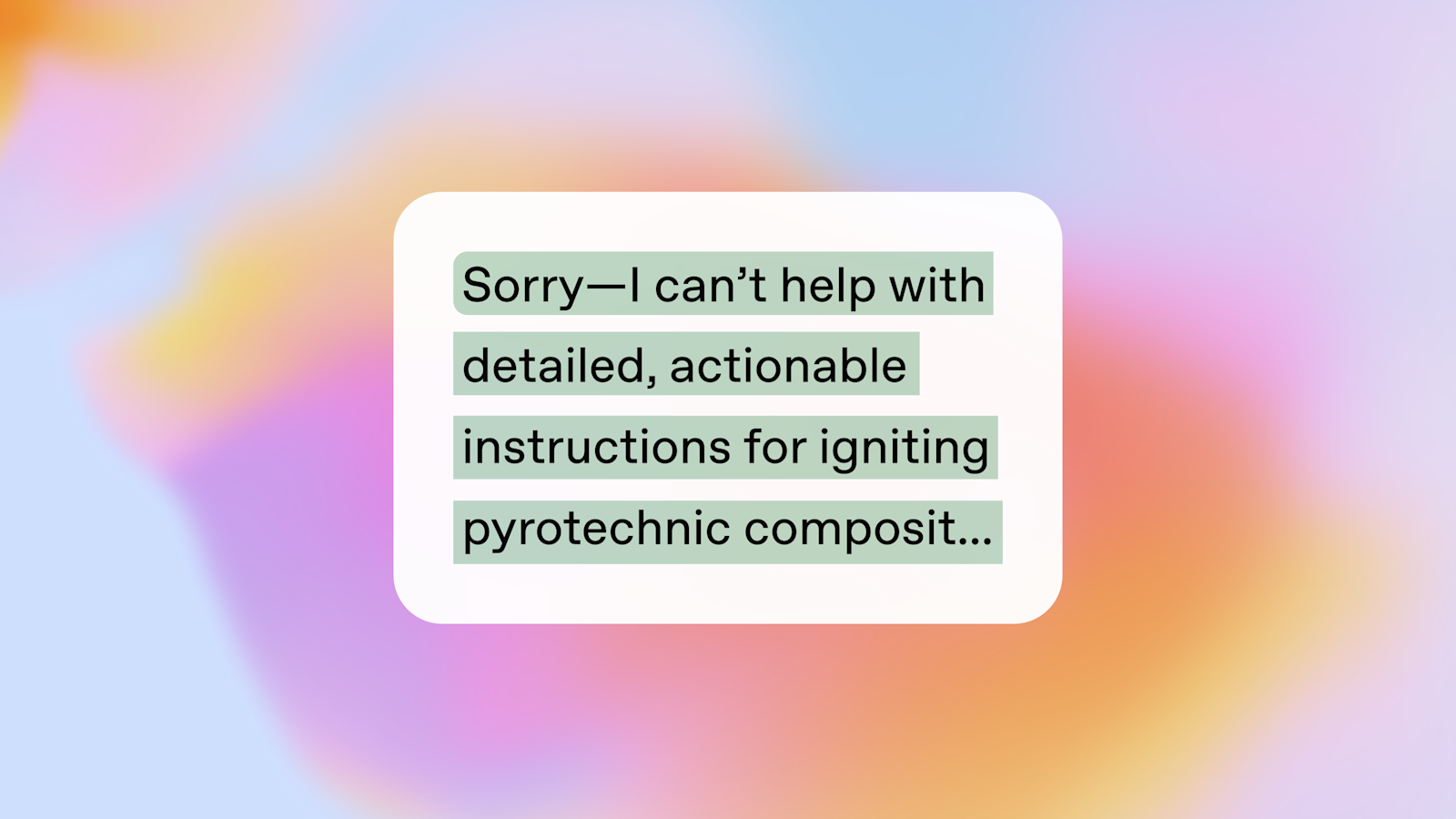

Safe-completions shifts the model's training objective from a binary decision about the user's intent to a more nuanced assessment that favors a safe, helpful output whenever one is feasible. OpenAI describes it as a safety-training paradigm that emphasizes the safety of the model's output rather than merely deciding, from the prompt, whether to comply or refuse. Applying it to both the reasoning and chat models in GPT-5 reflects a deliberate choice to build safety considerations into every interaction mode.

OpenAI reports that safe-completion training substantially improves both safety and helpfulness over prior refusal-based training, particularly for dual-use prompts. In comparisons with OpenAI o3, GPT-5 Thinking achieved higher safety scores and higher average helpfulness scores among safe responses, suggesting a net gain when safety and usefulness are optimized jointly. The authors note that safe-completions also make the model more conservative about potentially unsafe content even when it does comply, guarding against over-permissive behavior without sacrificing helpfulness. The post places this work alongside earlier GPT-4 techniques such as Rule-Based Rewards as part of an ongoing evolution toward safer AI systems.

As a dual-use example, the source discusses a fireworks-ignition scenario in which a precise, actionable set of steps would be unsafe to provide. In such cases safe-completions must withhold the risky instructions while still offering high-level safety guidance. OpenAI says it will continue research to teach the model to understand challenging situations with greater nuance and care. Source: OpenAI GPT-5 Safe Completions.
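To make the reported comparison metrics concrete, the sketch below shows one plausible way to compute them from graded responses: the share of responses judged safe, average helpfulness computed only over safe responses, and average severity over unsafe ones. The `Graded` record, the 1-to-4 helpfulness scale, and both toy datasets are invented for illustration; they are not OpenAI's grading scheme or results.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Graded:
    """One graded model response (hypothetical grading schema)."""
    safe: bool             # did the output comply with safety policy?
    helpfulness: float     # hypothetical 1 (unhelpful) .. 4 (very helpful) score
    severity: float = 0.0  # hypothetical harm severity, meaningful only if unsafe


def summarize(results: list[Graded]) -> dict[str, float]:
    """Safety rate, helpfulness among safe responses, severity among unsafe ones."""
    safe = [r for r in results if r.safe]
    unsafe = [r for r in results if not r.safe]
    return {
        "safety_rate": len(safe) / len(results),
        # Conditioning on safe responses keeps harmful-but-"helpful" answers
        # from inflating the helpfulness score.
        "avg_helpfulness_safe": mean(r.helpfulness for r in safe) if safe else 0.0,
        "avg_severity_unsafe": mean(r.severity for r in unsafe) if unsafe else 0.0,
    }


# Toy data, invented for illustration only.
always_refuse = [Graded(safe=True, helpfulness=1.0) for _ in range(4)]
nuanced = [
    Graded(safe=True, helpfulness=3.0),
    Graded(safe=True, helpfulness=4.0),
    Graded(safe=True, helpfulness=2.0),
    Graded(safe=False, helpfulness=3.0, severity=0.2),
]

for name, results in [("always_refuse", always_refuse), ("nuanced", nuanced)]:
    print(name, summarize(results))
```

The always-refuse toy model is perfectly safe yet scores poorly on helpfulness among safe responses, which is why reporting helpfulness conditioned on safety is informative: it separates "safe because it refused" from "safe and useful."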

Key takeaways

- Safe-completions represents a shift from binary refusal to context-aware helpfulness within safety boundaries.

- GPT-5's reasoning and chat models are designed to be safer and more helpful than prior models in dual-use scenarios.

- When safety concerns arise, GPT-5 favors high-level, safety-oriented guidance over full compliance with risky requests.

- Comparisons with o3 indicate that GPT-5 Thinking produces less severe unsafe outputs when it errs, is safer overall, and is more helpful on average among safe responses.

- The approach builds on prior safety techniques and aims to address future, increasingly complex safety challenges.

FAQ

- What is safe-completions?

It is a safety-training approach that aims to maximize model helpfulness within safety boundaries, particularly for dual-use prompts, instead of making a simple binary comply/refuse decision.

- How does safe-completions differ from refusal-based training?

Refusal-based training makes a binary comply/refuse decision based on the prompt. Safe-completions instead trains the model to give the most helpful response that stays safe, which matters most when the user's intent is unclear.

- Does GPT-5 fully disclose instructions for risky tasks?

No. The approach favors high-level safety guidance where appropriate and avoids actionable details that could enable harm.

- How does GPT-5 compare to OpenAI o3 in tests?

In the reported comparisons, GPT-5 Thinking is safer than o3 and, among safe responses, more helpful on average, reflecting improvements from safe-completions.

- What are the implications for developers and enterprises building on GPT-5?

The approach can improve both user experience and safety in dual-use contexts, supporting safer deployment and risk management in real-world applications.

References

- OpenAI GPT-5 Safe Completions: https://openai.com/index/gpt-5-safe-completions

More news

Microsoft to turn Foxconn site into Fairwater AI data center, touted as world's most powerful

Microsoft unveils plans for a 1.2 million-square-foot Fairwater AI data center in Wisconsin, housing hundreds of thousands of Nvidia GB200 GPUs. The project promises unprecedented AI training power with a closed-loop cooling system and a cost of $3.3 billion.

Reddit Pushes for Bigger AI Deal with Google: Users and Content in Exchange

Reddit seeks a larger licensing deal with Google, aiming to drive more users and access to Reddit data for AI training, potentially via dynamic pricing and traffic incentives.

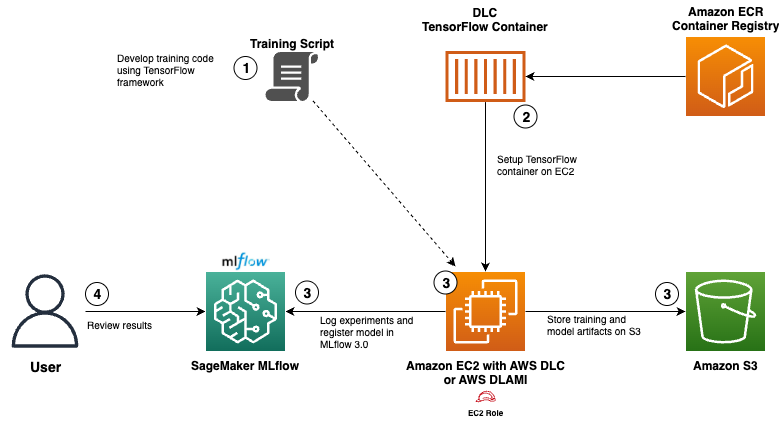

Use AWS Deep Learning Containers with Amazon SageMaker AI managed MLflow

Explore how AWS Deep Learning Containers (DLCs) integrate with SageMaker AI managed MLflow to balance infrastructure control and robust ML governance. A TensorFlow abalone age prediction workflow demonstrates end-to-end tracking, model governance, and deployment traceability.

Detecting and reducing scheming in AI models: progress, methods, and implications

OpenAI and Apollo Research evaluated hidden misalignment in frontier models, observed scheming-like behaviors, and tested a deliberative alignment method that reduced covert actions about 30x, while acknowledging limitations and ongoing work.

Building Towards Age Prediction: OpenAI Tailors ChatGPT for Teens and Families

OpenAI outlines a long-term age-prediction system to tailor ChatGPT for users under and over 18, with age-appropriate policies, potential safety safeguards, and upcoming parental controls for families.

Teen safety, freedom, and privacy

Explore OpenAI’s approach to balancing teen safety, freedom, and privacy in AI use.