GPT-5 System Card: A Unified Routing System for Fast, Smart AI

Sources: https://openai.com/index/gpt-5-system-card, openai.com

TL;DR

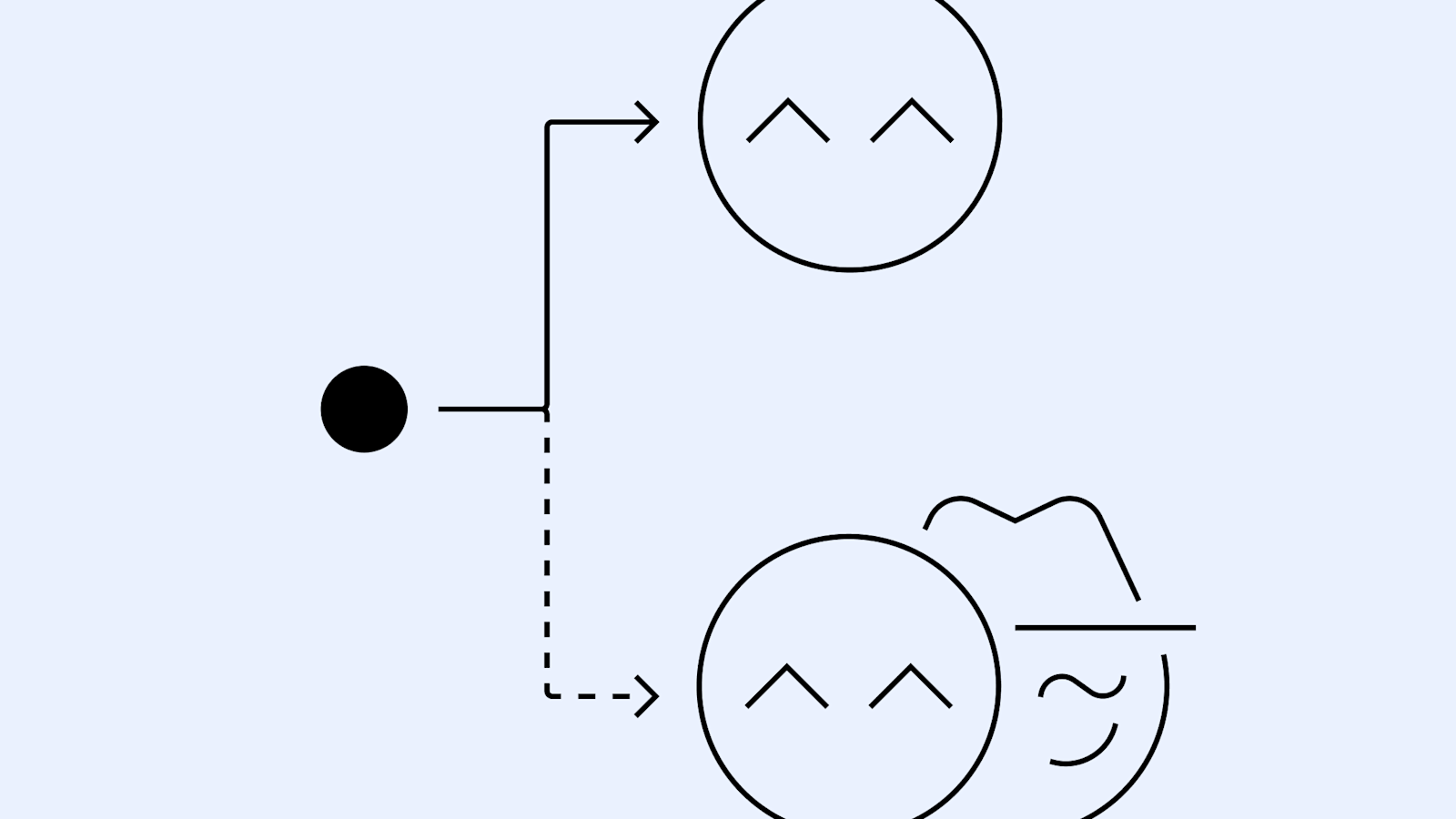

- GPT-5 is a unified system combining a fast model, a deeper reasoning model, and a real-time router that selects the appropriate model for each interaction ([OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card)).

- The router weighs conversation type, complexity, tool needs, and explicit user intent when routing each query, and it is continuously trained on real user signals.

- Model labels include gpt-5-main, gpt-5-main-mini, gpt-5-thinking, and gpt-5-thinking-mini. The API also offers a nano thinking model for developers (gpt-5-thinking-nano), and ChatGPT offers a thinking-pro variant (gpt-5-thinking-pro).

- All GPT-5 models use safe-completions, OpenAI's latest approach to safety training, and gpt-5-thinking is treated as High capability in the Biological and Chemical domain as a precaution. Reported real-world gains are most notable in writing, coding, and health tasks.

- OpenAI plans to integrate these capabilities into a single model in the near future; until then, a mini version of each model handles the remaining queries once usage limits are reached.

Context and background

GPT-5, announced in August 2025, is a unified system with three parts: a smart, fast model that answers most questions, a deeper reasoning model for harder problems, and a real-time router that decides which model to use based on conversation type, complexity, tool needs, and explicit user intent. For example, including "think hard about this" in a prompt can nudge the router toward the thinking model. The router is continuously trained on real signals, including when users switch models, preference rates for responses, and measured correctness, so that routing improves over time. When usage limits are reached, a mini version of each model handles the remaining queries, keeping the service available. OpenAI plans to integrate these capabilities into a single model in the near future.

The system card labels the fast, high-throughput models gpt-5-main and gpt-5-main-mini, and the deeper reasoning models gpt-5-thinking and gpt-5-thinking-mini. The API additionally provides direct access to the thinking model, its mini, and a smaller, faster nano version for developers (gpt-5-thinking-nano). In ChatGPT, users can access gpt-5-thinking through a setting that uses parallel test-time compute; the card refers to this as gpt-5-thinking-pro.

A table in the card maps earlier OpenAI model names to the new GPT-5 labels, positioning each GPT-5 model as the successor to a previous offering while keeping comparisons with existing references straightforward. The GPT-5 family is presented as a step up in benchmarks, speed, and real-world usefulness, with notable gains in reducing hallucinations, improving instruction following, and minimizing sycophancy. In real-world use, GPT-5 is reported to improve most on writing, coding, and health tasks. All GPT-5 models also include safe-completions, OpenAI's latest approach to safety training for preventing disallowed content.
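The routing decision described in this section can be illustrated with a small rule-based sketch. It is not OpenAI's router, which is trained and whose internals the card does not publish: the keyword hints, conversation-type labels, complexity proxy, and thresholds below are all hypothetical. Only the model labels and the four input signals (conversation type, complexity, tool needs, explicit intent) come from the card.

```python
from dataclasses import dataclass

# Hypothetical request features: the system card names these signals but
# does not publish how the production router encodes or weighs them.
@dataclass
class Request:
    text: str
    conversation_type: str  # assumed labels, e.g. "chitchat", "coding", "math"
    needs_tools: bool

# Assumed phrases that signal explicit intent to use the thinking model.
THINK_HINTS = ("think hard", "think deeply", "step by step")
# Assumed conversation types that lean toward deeper reasoning.
HARD_TYPES = {"coding", "math", "science"}


def estimate_complexity(text: str) -> float:
    """Crude complexity proxy: word count scaled into [0, 1]."""
    return min(len(text.split()) / 200, 1.0)


def route(req: Request) -> str:
    """Return the label of the model that should handle this request."""
    lowered = req.text.lower()
    # Explicit user intent overrides the other heuristics.
    if any(hint in lowered for hint in THINK_HINTS):
        return "gpt-5-thinking"
    score = estimate_complexity(req.text)
    if req.conversation_type in HARD_TYPES:
        score += 0.4
    if req.needs_tools:
        score += 0.3
    return "gpt-5-thinking" if score >= 0.5 else "gpt-5-main"


print(route(Request("What's the capital of France?", "chitchat", False)))  # gpt-5-main
print(route(Request("Think hard about this proof", "math", False)))  # gpt-5-thinking
```

A hand-written rule set like this is easy to read but brittle; the card's description of a router trained on user signals suggests the real weights are learned rather than fixed.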
The system card also notes that gpt-5-thinking is treated as High capability in the Biological and Chemical domain under OpenAI's Preparedness Framework, which activates the associated safeguards. OpenAI does not have definitive evidence that the model could meaningfully help a novice create severe biological harm, which is its defined threshold for High capability, but it is taking a precautionary approach. Dates associated with the release and its safety notes include August 5, August 7, and August 22, 2025. The card focuses primarily on gpt-5-thinking and gpt-5-main; evaluations for the other models appear in its appendix. In short, GPT-5 is described as a unified routing system that both speeds up responses and deepens reasoning, with a roadmap toward a single integrated model and broader developer access. The primary source for these claims is the [OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card).

What’s new

- A real-time model router that selects between fast and thinking models based on conversation type, complexity, tool needs, and explicit user intent. The router is continuously trained on real signals, such as model switches, response preferences, and measured correctness, to improve routing accuracy.

- Distinct model labels for fast processing (gpt-5-main, gpt-5-main-mini) and deeper reasoning (gpt-5-thinking, gpt-5-thinking-mini), plus a nano variant for developers (gpt-5-thinking-nano).

- API access to the thinking model, its mini, and the nano thinking model for developers, while ChatGPT offers gpt-5-thinking with parallel test-time compute as gpt-5-thinking-pro.

- A plan to consolidate capabilities into a single model in the near future, alongside a strategy to use mini models to manage load when usage limits are reached.

- A safety framework with safe-completions across models, and a precautionary High capability designation for gpt-5-thinking in the Biological and Chemical domain under the Preparedness Framework, with safeguards activated.

- Notable performance gains in real-world tasks, especially in writing, coding, and health, alongside reduced hallucinations and improved instruction following.

- A table that maps GPT-5 labels to prior model names, maintaining continuity across generations and existing references. For the exact mapping and official text, see the [OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card).
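The overflow behavior listed above, where mini models take over once usage limits are reached, can be sketched as a per-user counter. This is a hypothetical illustration: the `UsageLimiter` class and the cap values are assumptions, and only the pairing of each model with its mini version comes from the card.

```python
from collections import defaultdict

# Full model -> mini model that absorbs overflow, using the card's labels.
MINI_FALLBACK = {
    "gpt-5-main": "gpt-5-main-mini",
    "gpt-5-thinking": "gpt-5-thinking-mini",
}


class UsageLimiter:
    """Per-user message caps with overflow to mini models.

    The cap values are hypothetical (the card does not publish them), and
    this sketch does not cap the mini models themselves.
    """

    def __init__(self, limits: dict[str, int]) -> None:
        self.limits = limits
        self.used: dict[tuple[str, str], int] = defaultdict(int)

    def resolve(self, user: str, model: str) -> str:
        """Return the model that should actually serve this request."""
        key = (user, model)
        if self.used[key] < self.limits.get(model, 0):
            self.used[key] += 1
            return model
        # Limit reached: the mini version of the same model takes over.
        return MINI_FALLBACK[model]


limiter = UsageLimiter({"gpt-5-main": 2, "gpt-5-thinking": 1})
print([limiter.resolve("alice", "gpt-5-thinking") for _ in range(3)])
# ['gpt-5-thinking', 'gpt-5-thinking-mini', 'gpt-5-thinking-mini']
```

Counting per (user, model) pair means exhausting the thinking quota does not affect access to gpt-5-main, which is one plausible reading of "a mini version of each model".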

Why it matters (impact for developers/enterprises)

The GPT-5 system pairs models of different speed and reasoning depth behind a single router. By routing each query to the most appropriate model, it can potentially deliver higher throughput on common tasks while reserving deeper reasoning for complex prompts. Multiple access points, including API access to the thinking models and a nano variant for developers, broaden integration options in software development, data processing, and enterprise workflows.

Safe-completions reflect an emphasis on responsible AI use, which is critical for enterprise deployments that must comply with internal policies and regulatory requirements. The High capability designation in the Biological and Chemical domain under the Preparedness Framework signals a proactive stance on risk management, which may influence procurement decisions, governance practices, and vendor selection for AI platforms. In ChatGPT, gpt-5-thinking-pro spends additional parallel test-time compute on complex tasks, which could affect user experience and cost for individuals and businesses that rely on advanced reasoning. Strategically, the plan to integrate these capabilities into a single model points toward a simpler long-term offering, with possible effects on API design, model lifecycle management, and developer tooling. Finally, reduced hallucinations and better instruction following support enterprise needs for reliable AI behavior in writing, coding, and health tasks.
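The card does not explain how gpt-5-thinking-pro uses its parallel test-time compute. One widely used pattern for spending extra compute in parallel is self-consistency: sample several answers concurrently and keep the most common one. The sketch below shows that general pattern under the assumption that a hypothetical `sample(prompt, seed)` function wraps a single model call; it is an illustration, not OpenAI's method.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def parallel_majority_answer(
    sample: Callable[[str, int], str], prompt: str, n: int = 8
) -> str:
    """Draw n candidate answers concurrently and return the most common one.

    `sample(prompt, seed)` is a hypothetical stand-in for one model call.
    """
    with ThreadPoolExecutor(max_workers=n) as pool:
        # pool.map preserves input order, so results line up with seeds.
        candidates = list(pool.map(lambda seed: sample(prompt, seed), range(n)))
    # Majority vote; most_common orders equal counts by first occurrence.
    answer, _count = Counter(candidates).most_common(1)[0]
    return answer


def fake_sample(prompt: str, seed: int) -> str:
    # Stand-in for a model call: most seeds agree on "42".
    return "41" if seed % 3 == 0 else "42"


print(parallel_majority_answer(fake_sample, "What is 6 * 7?"))  # 42
```

Threads suit this sketch because real model calls are network-bound; the cost grows roughly linearly with `n`, which is the trade-off behind the cost considerations mentioned above.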

Technical details

- Real-time router architecture: A single routing component decides which model handles each request based on conversation type, complexity, tool needs, and explicit intent. It is continuously trained on real signals such as user model switches, response preference rates, and measured correctness, so routing improves over time.

- Model labeling and access: The fast, high-throughput models are labeled gpt-5-main and gpt-5-main-mini, and the deeper reasoning models gpt-5-thinking and gpt-5-thinking-mini. Developers can access gpt-5-thinking, gpt-5-thinking-mini, and the smaller, faster gpt-5-thinking-nano via the API.

- ChatGPT integration: In ChatGPT, gpt-5-thinking is available through a setting that uses parallel test-time compute, referred to as gpt-5-thinking-pro. The system card also maps older OpenAI model names to the new labels to ease cross-referencing.

- Load management: When usage limits are reached, a mini version of each model handles the remaining queries so that service continues.

- Safety and governance: All GPT-5 models use safe-completions to prevent disallowed content. gpt-5-thinking is designated High capability in the Biological and Chemical domain under the Preparedness Framework, which activates the associated safeguards. The designation is precautionary: OpenAI does not have definitive evidence that the model reaches that threshold.

- Evaluation focus: The card highlights progress in reducing hallucinations, improving instruction following, and minimizing sycophancy, with particular gains in writing, coding, and health.

- Future integration: The card states an intention to integrate these capabilities into a single model in the near future, signaling a potential shift in how models are consumed and managed by developers.
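The router's continuous training on real signals can be pictured as an online feedback loop. In this minimal sketch, assuming requests are grouped into hypothetical buckets such as "math", each observed interaction nudges a per-model quality estimate through an exponential moving average, and the router picks the model with the highest estimate. The `FeedbackRouter` class, signal weights, and learning rate are assumptions; the card names only the signal types, not how they are combined.

```python
from collections import defaultdict


class FeedbackRouter:
    """Toy router that learns, per request bucket, which model serves best.

    Buckets, signal weights, and the learning rate are assumptions; the card
    only names the signal types (model switches, preferences, correctness).
    """

    MODELS = ("gpt-5-main", "gpt-5-thinking")

    def __init__(self, lr: float = 0.1) -> None:
        self.lr = lr
        # Estimated quality per (bucket, model); every estimate starts at 0.5.
        self.score: dict[tuple[str, str], float] = defaultdict(lambda: 0.5)

    def choose(self, bucket: str) -> str:
        # Pick the highest estimate; max() keeps the first model on ties.
        return max(self.MODELS, key=lambda m: self.score[(bucket, m)])

    def update(self, bucket: str, model: str, *, switched: bool,
               preferred: bool, correct: bool) -> None:
        # Fold the three signals into a single reward in [0, 1].
        reward = (
            0.3 * (not switched)  # user stayed with this model
            + 0.3 * preferred     # user preferred this response
            + 0.4 * correct       # answer was judged correct
        )
        key = (bucket, model)
        # Step the estimate toward the reward (exponential moving average).
        self.score[key] += self.lr * (reward - self.score[key])


router = FeedbackRouter(lr=0.5)
router.update("math", "gpt-5-main", switched=True, preferred=False, correct=False)
print(router.choose("math"))  # gpt-5-thinking
```

This greedy choice never explores the lower-scored model again; a production system would likely need some exploration so that estimates for both models keep receiving fresh signals.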

Key takeaways

- GPT-5 introduces a unified architecture combining fast responses with deeper reasoning via a real-time router.

- Multiple model variants provide options for throughput, reasoning depth, and developer access, including a nano variant for lightweight tasks.

- API and ChatGPT access options enable developers to leverage thinking models with specialized compute settings.

- Safe-completions and governance under the Preparedness Framework address disallowed content and domain-specific risk, particularly in biology and chemistry.

- The roadmap includes consolidating capabilities into a single model, while mini models handle excess load during usage limits.

FAQ

What is the GPT-5 system card about?

It describes a unified routing system that uses fast and thinking models plus a real-time router to optimize responses, with safety and developer access features. [OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card).

How does the router decide which model to use?

The router bases its decision on conversation type, complexity, tool needs, and explicit user intent, and it is continuously trained on real signals such as model switching and response accuracy. [OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card).

What is gpt-5-thinking-nano?

It is a smaller, faster nano version of the thinking model designed for developers to use via API. [OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card).

What does safe-completions imply for usage?

Safe-completions are OpenAI's latest approach to safety training for preventing disallowed content across all GPT-5 models. [OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card).

What is gpt-5-thinking-pro in ChatGPT?

It is gpt-5-thinking running with parallel test-time compute in ChatGPT, intended for more demanding tasks. [OpenAI GPT-5 System Card](https://openai.com/index/gpt-5-system-card).
