Project Ire autonomously identifies malware at scale

Sources: https://www.microsoft.com/en-us/research/blog/project-ire-autonomously-identifies-malware-at-scale, microsoft.com

TL;DR

- Project Ire autonomously identifies malware at scale by classifying software without context.

- It replicates reverse engineering, which is treated as the gold standard in malware analysis.

- The approach streamlines a complex, expert-driven process to enable faster, more consistent detection.

- This work aims to improve scalability for large security workloads in cloud and enterprise environments.

- The post highlights implications for developers, security teams, and risk management.

Context and background

Malware analysis has long relied on deep expertise and manual reverse engineering to determine whether software is malicious and to understand its behavior. This analysis is resource-intensive and does not easily scale to the volume of software seen in modern cloud environments. Project Ire is designed to address these challenges by classifying software without context, mirroring the gold-standard workflow of reverse engineering. By automating and standardizing a previously expert-driven process, it seeks to deliver faster, more consistent malware detection across large-scale software corpora.

What’s new

Project Ire introduces an autonomous capability to identify malware at scale. It emphasizes context-free classification and reverse-engineering–inspired analysis to reproduce the gold standard without manual, per-sample interpretation. This shift promises improved throughput and consistency when processing large numbers of software samples, aligning with security objectives in cloud services and enterprise risk management.

Why it matters (impact for developers/enterprises)

For developers and security teams, Project Ire represents a potential shift toward integrating scalable malware identification into broader security workflows. By focusing on software classification without relying on deployment context, the approach aims to reduce the manual burden on analysts and speed up triage. For enterprises operating large software portfolios, the ability to detect malware at scale with consistent results can enhance threat-hunting capabilities and overall security posture in cloud and on-premises environments.

Technical details

The core idea is to classify software without context, while replicating the traditional gold-standard malware-analysis process through reverse engineering. This approach seeks to automate and streamline expert-driven steps, aiming to produce faster and more consistent malware detection across large datasets. While specific implementation details are not disclosed in this summary, the emphasis is on automating the decision-making workflow that mirrors reverse-engineering-based analysis, enabling scale without sacrificing the fidelity associated with the gold standard.
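As a rough illustration of what "classifying without context" means in practice, the sketch below passes only the raw bytes of a sample to a set of analyzers and keeps the findings that led to the label. It is not Project Ire's implementation, which this summary does not describe. Every name here (`Finding`, `Verdict`, `classify`, the two toy analyzers, and the labels) is hypothetical, and the string-matching checks are stand-ins for real reverse-engineering steps.

```python
from dataclasses import dataclass, field
from typing import Callable, List


# Hypothetical types for illustration; none of these names come from Project Ire.
@dataclass
class Finding:
    analyzer: str
    description: str
    suspicious: bool


@dataclass
class Verdict:
    label: str  # "suspicious" or "no_findings" (toy labels, assumed here)
    findings: List[Finding] = field(default_factory=list)


# An analyzer receives only the sample bytes: no filename, origin, or telemetry.
Analyzer = Callable[[bytes], List[Finding]]


def has_pe_header(sample: bytes) -> List[Finding]:
    """Toy check: does the sample start with the 'MZ' magic of a Windows executable?"""
    is_pe = sample[:2] == b"MZ"
    text = "starts with MZ magic" if is_pe else "no MZ magic"
    return [Finding("has_pe_header", text, suspicious=False)]


def references_hook_api(sample: bytes) -> List[Finding]:
    """Toy check: a naive byte search standing in for real code analysis."""
    if b"SetWindowsHookEx" in sample:
        return [Finding("references_hook_api", "mentions SetWindowsHookEx", suspicious=True)]
    return []


def classify(sample: bytes, analyzers: List[Analyzer], threshold: int = 1) -> Verdict:
    """Run every analyzer on the bytes alone and keep the evidence with the label."""
    findings = [f for analyze in analyzers for f in analyze(sample)]
    suspicious_count = sum(1 for f in findings if f.suspicious)
    label = "suspicious" if suspicious_count >= threshold else "no_findings"
    return Verdict(label, findings)


if __name__ == "__main__":
    sample = b"MZ\x90\x00...SetWindowsHookExA..."
    verdict = classify(sample, [has_pe_header, references_hook_api])
    print(verdict.label)
    for f in verdict.findings:
        print(f"  [{f.analyzer}] {f.description}")
```

Keeping the findings next to the label is a deliberate choice in this sketch: a verdict produced without an analyst in the loop is easier to audit when the evidence behind it travels with it.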

Key takeaways

- Context-free malware classification aims to match the rigor of traditional reverse-engineering-based analysis.

- Automation and standardization can accelerate malware detection at scale.

- Reducing reliance on contextual signals may simplify integration into large security workflows.

- The approach is positioned to improve consistency across vast software corpora.

- This work highlights a path toward scalable cloud-security solutions and enterprise threat detection.

FAQ

- What is Project Ire?

  Project Ire autonomously identifies malware at scale by classifying software without context and replicating gold-standard malware analysis through reverse engineering.

- How does it achieve scalability?

  By automating and standardizing the expert-driven malware analysis process, enabling faster, more consistent malware detection across large software sets.

- Does it rely on context signals?

  No; it is designed to classify software without context.

- When was this announced?

  The Microsoft Research blog post announcing Project Ire was published on August 5, 2025.

More news

Shadow Leak shows how ChatGPT agents can exfiltrate Gmail data via prompt injection

Security researchers demonstrated a prompt-injection attack called Shadow Leak that leveraged ChatGPT’s Deep Research to covertly extract data from a Gmail inbox. OpenAI patched the flaw; the case highlights risks of agentic AI.

Move AI agents from proof of concept to production with Amazon Bedrock AgentCore

A detailed look at how Amazon Bedrock AgentCore helps transition agent-based AI applications from experimental proof of concept to enterprise-grade production systems, preserving security, memory, observability, and scalable tool management.

OpenAI introduces GPT-5-Codex: faster, more reliable coding assistant with advanced code reviews

OpenAI unveils GPT-5-Codex, a version of GPT-5 optimized for agentic coding in Codex. It accelerates interactive work, handles long tasks, enhances code reviews, and works across terminal, IDE, web, GitHub, and mobile.

GPT-5-Codex Addendum: Agentic Coding Optimized GPT-5 with Safety Measures

An addendum detailing GPT-5-Codex, a GPT-5 variant optimized for agentic coding within Codex, with safety mitigations and multi-platform availability.

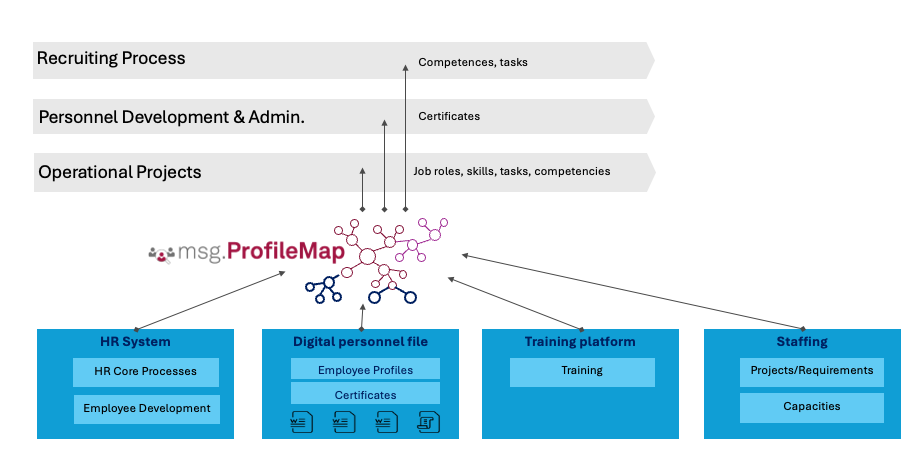

How msg enhanced HR workforce transformation with Amazon Bedrock and msg.ProfileMap

This post explains how msg automated data harmonization for msg.ProfileMap using Amazon Bedrock to power LLM-driven data enrichment, boosting HR concept matching accuracy, reducing manual workload, and aligning with EU AI Act and GDPR.

OpenAI partners with US CAISI and UK AISI to strengthen AI safety and security

OpenAI expands collaboration with the US Center for AI Standards and Innovation (CAISI) and the UK AI Security Institute (UK AISI) to advance safer frontier AI deployment through joint red-teaming, end-to-end testing, and rapid vulnerability response.