Build an AI Shopping Assistant with Gradio MCP Servers

TL;DR

- Gradio’s Model Context Protocol (MCP) integration lets LLMs call external models and tools, including those hosted on the Hugging Face Hub.

- The demo combines IDM-VTON for virtual try-on, Gradio MCP, and VS Code AI Chat to create an AI shopping assistant.

- The core server is an MCP-enabled Gradio app; docstrings describe tools and parameters for LLM use.

- A minimal setup walks through running a Gradio MCP server, wiring it to VS Code via mcp.json, and launching a Playwright MCP server for web browsing.

Context and background

Gradio gives developers a fast way to extend language models with external models and tools through its Model Context Protocol (MCP) integration. By connecting an LLM to the thousands of AI models and Spaces hosted on the Hugging Face Hub, you can build assistants that do more than answer questions: they complete real tasks by pairing general reasoning with specialized tools.

The post walks through a concrete demonstration. An LLM-powered shopping assistant browses online clothing stores, finds garments, and uses a virtual try-on model to show how an outfit would look on a user-provided photo. Gradio MCP servers act as the bridge between the LLM and domain-specific models such as IDM-VTON, a diffusion model for virtual try-on that is available as a Hugging Face Space and is called programmatically through Gradio’s MCP workflow.

The project also relies on tools developers already know. Gradio exposes Python functions as MCP tools: passing mcp_server=True to launch() converts them automatically, and each function’s docstring supplies the human-readable description of the tool and its parameters that the LLM uses to decide how to call it. The IDM-VTON Space was originally built with Gradio 4.x, before Gradio could generate MCP tools automatically, so the demo queries it through the Gradio API client from a new, MCP-enabled Gradio interface. The overall message is that combining Gradio MCP, specialized models, and a familiar UI unlocks practical, AI-assisted workflows that solve real problems rather than just produce text.
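The docstring-to-description step is easiest to see on a small example. The sketch below is a simplified, standard-library illustration of the idea, not Gradio’s actual implementation: Gradio does its own docstring parsing and emits a JSON schema whose exact shape differs. The function `vton_generation` and the helper `describe_tool` are hypothetical names, and the sketch assumes Google-style `Args:` docstrings.

```python
import inspect
import re


def vton_generation(human_model_img: str, garment: str) -> str:
    """Generate an image of a person wearing a garment.

    Args:
        human_model_img: Path or URL of the photo of the person.
        garment: Path or URL of the garment image.
    """
    # Hypothetical tool; only the signature and docstring matter for this sketch.
    raise NotImplementedError


def describe_tool(fn) -> dict:
    """Build a simple tool description from a signature and a Google-style docstring.

    Illustration only: this is not how Gradio formats its MCP tool schemas.
    """
    doc = inspect.getdoc(fn) or ""
    summary, _, rest = doc.partition("\n\n")

    # Collect "name: description" lines from the indented "Args:" section.
    arg_docs = {}
    in_args = False
    for line in rest.splitlines():
        if line.strip() == "Args:":
            in_args = True
        elif in_args and line and not line[0].isspace():
            in_args = False  # another section such as "Returns:" begins
        elif in_args:
            match = re.match(r"\s+(\w+):\s*(.+)", line)
            if match:
                arg_docs[match.group(1)] = match.group(2)

    # Pair each parameter's type annotation with its docstring text.
    params = {}
    for name, param in inspect.signature(fn).parameters.items():
        annotation = param.annotation
        params[name] = {
            "type": getattr(annotation, "__name__", str(annotation)),
            "description": arg_docs.get(name, ""),
        }
    return {"name": fn.__name__, "description": summary.strip(), "parameters": params}
```

Calling `describe_tool(vton_generation)` yields the function name, its one-line summary, and a type plus description for each parameter, which is the kind of information an LLM needs to pick a tool and fill in its arguments.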
For more context, see the original Hugging Face post describing these components: Hugging Face blog on Gradio MCP and IDM-VTON.

What’s new

The post introduces a complete workflow to assemble an LLM-powered shopping assistant around three main pieces:

- IDM-VTON Diffusion Model: Handles the virtual try-on functionality by editing photos to reflect a chosen garment.

- Gradio: Serves as the MCP-enabled interface layer, exposing Python functions as MCP tools so that an LLM can call them.

- Visual Studio Code’s AI Chat: Acts as the user-facing interface. It supports adding arbitrary MCP servers, which makes a practical, desktop-based interaction flow possible.

Two ideas stand out in the demonstration: the Gradio MCP server exposes a single main tool, and the tool description the LLM sees is generated automatically from the function’s docstring. The demo also shows how to query the IDM-VTON Space through the Gradio API client, even though the original Space predates MCP.

To run the demonstration, start the Python script that defines the MCP server, with mcp_server=True passed to launch() to enable MCP tooling. Next, connect this server to VS Code’s AI Chat by editing the mcp.json file. The configuration must include both the try-on (vton) MCP server and the Playwright MCP server that provides web browsing. The Playwright MCP server must also be run for browsing to work, and running it requires Node.js. Together, Gradio MCP, IDM-VTON, and VS Code AI Chat form an end-to-end shopping assistant that can browse stores, select items, and render virtual try-ons. The full workflow and steps are in the original article from Hugging Face: Hugging Face blog on Gradio MCP and IDM-VTON.
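The mcp.json wiring can be sketched in Python to make its structure explicit. This is a minimal sketch under several assumptions: that VS Code reads a top-level `servers` object, that the Gradio app runs locally on the default port 7860 and serves MCP at `/gradio_api/mcp/sse` (check the URL Gradio prints when it starts), and that the Playwright server is started with `npx @playwright/mcp@latest`. Some VS Code versions also accept or expect a per-server `type` field, so compare against VS Code’s MCP documentation. `build_mcp_config` and `write_mcp_config` are hypothetical helpers.

```python
import json
from pathlib import Path

# Assumed local address of the Gradio MCP endpoint; use the URL Gradio prints at launch.
GRADIO_MCP_URL = "http://127.0.0.1:7860/gradio_api/mcp/sse"


def build_mcp_config(gradio_url: str = GRADIO_MCP_URL) -> dict:
    """Return an mcp.json structure with the try-on server and a Playwright browser server."""
    return {
        "servers": {
            # URL entry: VS Code connects to the already-running Gradio app.
            "vton": {"url": gradio_url},
            # Command entry: VS Code starts the Playwright MCP server via npx (needs Node.js).
            "playwright": {"command": "npx", "args": ["@playwright/mcp@latest"]},
        }
    }


def write_mcp_config(path: Path, config: dict) -> None:
    """Write the configuration as pretty-printed JSON, creating parent folders if needed."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
```

For example, `write_mcp_config(Path(".vscode/mcp.json"), build_mcp_config())` would write the file into a workspace-level `.vscode` folder; editing the JSON by hand works just as well, and the Python form mainly documents which keys each server entry needs.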

Why it matters (impact for developers/enterprises)

This approach shows a practical way for developers to augment LLMs with domain-specific capabilities, turning generic reasoning into action. It combines three ingredients:

- A generalist model (the LLM).

- Domain-focused models (e.g., IDM-VTON for image editing and virtual try-on) and web-browsing tools (via the Playwright MCP server).

- A UI and tooling pipeline (Gradio MCP servers and VS Code AI Chat).

With these, developers can build AI assistants that perform real tasks rather than just generate text.

For enterprises, MCP-enabled workflows can speed up the prototyping and deployment of AI-assisted tools in e-commerce, media, and customer support. Instead of building a bespoke connector for each model, teams can describe tools and their parameters in docstrings and let Gradio expose them to an LLM as callable MCP tools.

From a developer-experience perspective, the integration keeps a familiar local loop: write a Python function with a descriptive docstring, expose it through a Gradio app launched with mcp_server=True, configure mcp.json, and interact with the result through VS Code’s AI Chat. This reduces the friction of orchestrating multiple services and speeds up experimentation with AI-powered workflows.

The broader implication fits Hugging Face’s ecosystem strategy: letting LLMs draw on a wide catalog of models and Spaces extends the practical reach of AI across industries. This is particularly relevant for teams exploring personalized customer experiences, where virtual try-on and real-time product discovery can be combined with natural-language interfaces.

Technical details or Implementation (how the pieces fit)

The technical backbone of the demo rests on three pillars:

- IDM-VTON: The diffusion-based model responsible for virtual try-on imagery. The space is hosted on Hugging Face Spaces and is leveraged to edit photos so they reflect a specified garment on a user’s figure. The project notes that the IDM-VTON space was originally implemented with Gradio 4.x, which predates MCP’s automatic tool-generation feature.

- Gradio MCP server: This is the core of the shopping assistant. By calling launch() with mcp_server=True, Gradio automatically exposes Python functions as MCP tools that an LLM can call. The docstrings attached to these functions are used to generate tool descriptions that guide the LLM’s usage.

- VS Code AI Chat: The interface through which developers interact with MCP-enabled servers. It supports adding arbitrary MCP servers; in the demo, mcp.json is edited to point to both the IDM-VTON integration and the Playwright-based web-browsing server.

The workflow described in the post follows a practical sequence:

- Create a Gradio MCP server that exposes one or more tools. The server’s main tool(s) are driven by Python functions whose docstrings describe their capabilities and parameters.

- Run the Gradio script with mcp_server=True passed to launch() to enable automatic MCP tool generation.

- Because the original IDM-VTON Space predates MCP, query it with the Gradio API client from inside the MCP-enabled interface.

- Configure mcp.json in VS Code to connect the AI chat to the MCP server, ensuring the vton and browsing servers are present and reachable.

- If web browsing is required, run the Playwright MCP server, which requires Node.js to be installed.

The end-to-end flow lets a user issue natural-language requests, such as browsing a particular store for blue T-shirts and asking for a virtual try-on with their own photo, while the LLM orchestrates the model calls and image-editing steps behind the scenes. For precise steps and the code scaffolding, refer to the Hugging Face post and experiment with the Gradio MCP server setup described there: Hugging Face blog on Gradio MCP and IDM-VTON.
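The step that queries the Gradio 4.x IDM-VTON Space through the Gradio API client could look roughly like the sketch below. It is an illustration under assumptions, not the post’s exact code: the Space id `yisol/IDM-VTON`, the `/tryon` endpoint, its argument names, and the position of the try-on image in the result are all assumptions to check against the Space’s "Use via API" page (or `Client.view_api()`). `tryon_arguments`, `vton_generation`, and `build_demo` are hypothetical names; the Gradio imports are deferred so the module can be inspected without Gradio installed.

```python
from typing import Any, Callable

# Assumed Space id; a duplicate of the Space under your own account could be used instead.
VTON_SPACE = "yisol/IDM-VTON"


def tryon_arguments(person_img: str, garment_img: str,
                    wrap: Callable[[str], Any]) -> dict:
    """Build keyword arguments for the assumed /tryon endpoint of the Space.

    `wrap` is gradio_client.handle_file in real use; it is a parameter so the
    mapping can be checked without network access.
    """
    return {
        # Gradio 4.x ImageEditor value: base image plus (empty) drawing layers.
        "dict": {"background": wrap(person_img), "layers": [], "composite": None},
        "garm_img": wrap(garment_img),
        "garment_des": "",          # optional text description of the garment
        "is_checked": True,         # assumed meaning: auto-generate the mask
        "is_checked_crop": False,   # assumed meaning: no auto-crop/resize
        "denoise_steps": 30,
        "seed": 42,
        "api_name": "/tryon",
    }


def vton_generation(human_model_img: str, garment: str) -> str:
    """Generate an image of a person wearing a garment with IDM-VTON.

    Args:
        human_model_img: Path or URL of the photo of the person.
        garment: Path or URL of the garment image.
    """
    # Deferred import: the module stays importable without gradio_client installed.
    from gradio_client import Client, handle_file

    client = Client(VTON_SPACE)
    result = client.predict(**tryon_arguments(human_model_img, garment, handle_file))
    return result[0]  # assumed: the first output is the try-on image file path


def build_demo():
    """Wrap vton_generation in a Gradio Interface (hypothetical helper)."""
    import gradio as gr

    return gr.Interface(
        fn=vton_generation,
        inputs=[
            gr.Image(type="filepath", label="Human model image"),
            gr.Image(type="filepath", label="Garment image"),
        ],
        outputs=gr.Image(type="filepath", label="Try-on result"),
    )
```

With Gradio’s MCP extra installed (`pip install "gradio[mcp]"`), `build_demo().launch(mcp_server=True)` would start the app together with its MCP server, and the docstring of `vton_generation` would become the tool description the LLM sees. Keeping `tryon_arguments` separate makes the endpoint mapping easy to adjust if the Space’s API differs from these assumptions.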

Key takeaways

- MCP enables LLMs to call external models and tools, such as those hosted on the Hugging Face Hub, making AI systems more capable at real-world tasks.

- IDM-VTON provides a practical virtual try-on workflow that can be invoked from an MCP-enabled LLM.

- Gradio serves as the bridge, exposing Python functions as MCP tools when mcp_server=True is passed to launch().

- VS Code AI Chat can connect to and interact with MCP servers, providing a convenient desktop interface for AI-powered workflows.

- The demo illustrates how to connect a Gradio MCP server to the IDM-VTON space and to a web-browsing MCP server (Playwright) to create a complete shopping assistant experience.

FAQ

What is MCP in Gradio?

MCP stands for Model Context Protocol, an open protocol that lets an LLM call external tools. Gradio can expose the Python functions behind an app as MCP tools, so an LLM client can call them.

How do you expose a Gradio MCP server tool?

Call launch() with mcp_server=True; the tool descriptions come from the functions’ docstrings.

How does the demo use an external Space like IDM-VTON that predates MCP?

It queries the original IDM-VTON Space through the Gradio API client from within an MCP-enabled Gradio app. This brings virtual try-on into the MCP workflow even though the Space itself does not expose MCP tools.

What about web browsing in the demo?

The Playwright MCP server enables web browsing within the AI shopping assistant; you need Node.js installed to run it.

How do I connect the MCP server to VS Code AI Chat?

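Edit the mcp.json configuration in VS Code to include both servers: the vton server, referenced by the URL of the running Gradio app, and the Playwright browsing server, which is typically launched locally through a command such as npx (hence the Node.js requirement). The AI chat can then issue MCP tool calls to both.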
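Before pointing mcp.json at the Gradio app, it can help to confirm that the MCP endpoint actually answers. A minimal standard-library sketch, assuming the default local URL `http://127.0.0.1:7860/gradio_api/mcp/sse` (use whatever URL Gradio prints at launch); `is_reachable` is a hypothetical helper, and it only checks the HTTP status, not MCP-level behavior.

```python
import urllib.error
import urllib.request

# Assumed default address of the Gradio MCP endpoint; replace with the URL Gradio prints.
DEFAULT_URL = "http://127.0.0.1:7860/gradio_api/mcp/sse"


def is_reachable(url: str = DEFAULT_URL, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers at `url` with a non-error status.

    Only the status line and headers are read; the body is never consumed,
    so a streaming (SSE) endpoint does not keep the check waiting.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, TimeoutError, ConnectionError):
        # Covers refused connections, HTTP error statuses (HTTPError), and timeouts.
        return False
```

If `is_reachable()` returns False, the Gradio script is probably not running or was launched without mcp_server=True; the Playwright server needs no such check because VS Code starts it from the configured command.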

References

- Hugging Face blog post describing Gradio MCP, IDM-VTON, and the integration workflow: https://huggingface.co/blog/gradio-vton-mcp

More news

Scaleway Joins Hugging Face Inference Providers for Serverless, Low-Latency Inference

Scaleway is now a supported Inference Provider on the Hugging Face Hub, enabling serverless inference directly on model pages with JS and Python SDKs. Access popular open-weight models and enjoy scalable, low-latency AI workflows.

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

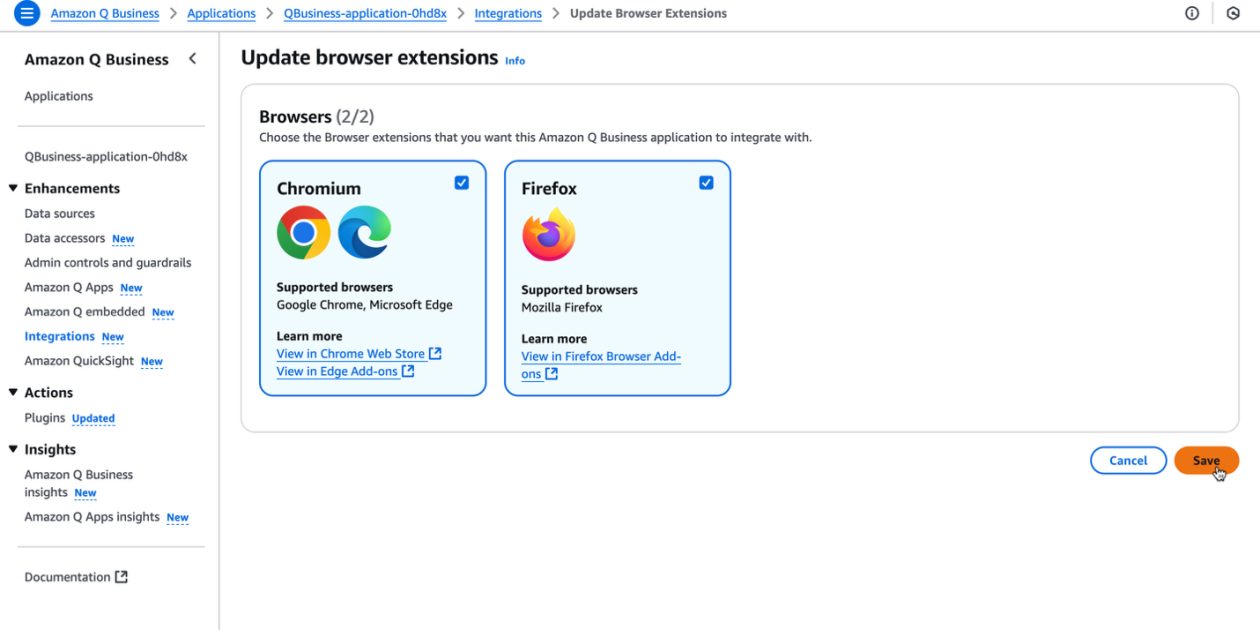

Supercharge your organization’s productivity with the Amazon Q Business browser extension

The Amazon Q Business browser extension brings context-aware, AI-driven assistance to your browser for Lite and Pro subscribers, enabling rapid, source-backed insights and seamless workflows.

Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

A detailed look at how NVIDIA Run:ai Model Streamer lowers cold-start times for LLM inference by streaming weights into GPU memory, with benchmarks across GP3, IO2, and S3 storage.

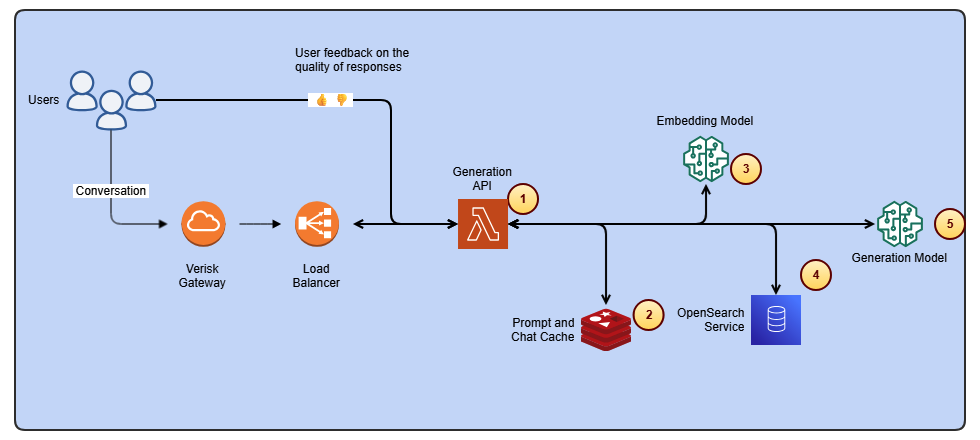

Streamline ISO-rating content changes with Verisk Rating Insights and Amazon Bedrock

Verisk Rating Insights, powered by Amazon Bedrock, LLMs, and RAG, enables a conversational interface to access ISO ERC changes, reducing manual downloads and enabling faster, accurate insights.

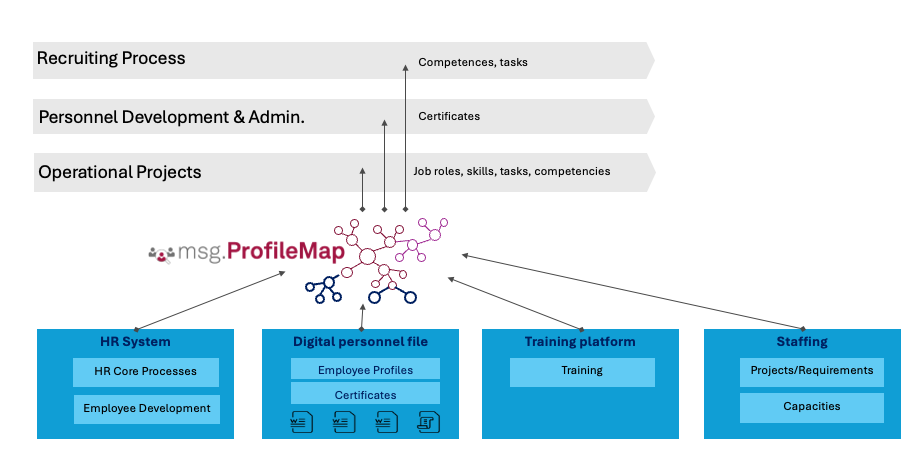

How msg enhanced HR workforce transformation with Amazon Bedrock and msg.ProfileMap

This post explains how msg automated data harmonization for msg.ProfileMap using Amazon Bedrock to power LLM-driven data enrichment, boosting HR concept matching accuracy, reducing manual workload, and aligning with EU AI Act and GDPR.