TimeScope Benchmark: How Long Can Vision-Language Models Understand Long Videos?

Sources: https://huggingface.co/blog/timescope-video-lmm-benchmark, Hugging Face Blog

TL;DR

- TimeScope is an open-source benchmark hosted on Hugging Face that measures how well vision-language models understand long videos by embedding short needle clips (~5–10 seconds) into base videos ranging from 1 minute to 8 hours. TimeScope blog

- It evaluates three task types and three needle types to push beyond surface-level retrieval toward synthesis, localization, and fine-grained motion analysis. TimeScope blog

- Gemini 2.5-Pro stands out by maintaining strong accuracy on videos longer than one hour, while Qwen 2.5-VL (3B and 7B) and InternVL 2.5 (2B, 4B, 8B) show nearly identical long-video curves across model sizes, so the larger variants gain little over the smaller ones. TimeScope blog

- The results show that hour-long video understanding remains challenging; TimeScope is open-sourced to help guide targeted improvements in model training and evaluation. TimeScope blog

Context and background

Long-context capabilities in vision-language models have attracted growing interest, paralleling trends in long-context language models. While some systems advertise context windows spanning thousands of frames, there is skepticism about whether such claims translate into genuine temporal understanding. Traditional video benchmarks like Video Needle in a Haystack (VideoNIAH) inject static images into videos, which evaluates visual search rather than true temporal reasoning and leaves open how well models comprehend sequences of events over time. Text benchmarks such as HELM and RULER have likewise exposed the fragility of long-context claims, underscoring the risk of overclaiming when tasks demand more than retrieval. TimeScope blog

TimeScope enters this landscape as an open-source benchmark, hosted on Hugging Face, designed to test deeper temporal understanding in long videos. It complements existing text-based benchmarks by focusing on video comprehension across extended durations, probing whether current vision-language models can reason over long video narratives rather than relying on short excerpts or frame-level cues. The goal is to move beyond headline promises about “hour-long” or “thousand-frame” capabilities toward robust evaluation of temporal reasoning, synthesis, and motion analysis. TimeScope blog

What’s new

TimeScope introduces a novel testing paradigm for long videos. A long base video (ranging from 1 minute to 8 hours) serves as the haystack, into which one or more hand-curated short video needles (approximately 5–10 seconds each) are inserted at random positions. The task is not merely to spot the needle; models must deeply understand the needle content within the broader video context to answer questions or perform analyses. TimeScope blog

Three distinct task types together with three needle types drive the evaluation:

- Task types test retrieval, synthesis, and localization, with emphasis on integrating information across time. For example, a question might ask about a mode of transportation shown across dispersed needle clips. TimeScope blog

- Needle types include text-based needles (2–4 short clips displaying words on screen) that require identifying and ordering dispersed text in chronological order, simulating extraction of timestamps or key facts. TimeScope blog

- Motion-focused needles probe dynamics within short clips, so single-frame sampling is insufficient; the model must perceive motion across frames. Example: "How many times did the man swing his axe?" TimeScope blog

With videos of varying lengths and random needle placements, TimeScope measures how much video a model can truly handle and how performance changes as content length grows. In early tests, a mix of open-source models and large proprietary systems, from Qwen 2.5-VL to Gemini 2.5-Pro, was evaluated. The benchmark highlights where models succeed or fail in temporal reasoning, information synthesis, and motion perception, guiding future training and evaluation efforts. For detailed results and visualizations, see the Hugging Face Space accompanying the project. TimeScope blog
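A rough back-of-the-envelope calculation illustrates why short needles are hard to catch in long videos. The sketch below assumes uniformly spaced frame sampling and a hypothetical budget of 256 frames; the budget, the function names, and the uniform-sampling assumption are illustrative and do not come from the TimeScope blog.

```python
import math


def expected_frames_in_needle(video_seconds, needle_seconds, num_frames):
    """Approximate expected number of uniformly spaced frames landing in one needle.

    Assumes the needle sits at a random position, so the fraction of sampled
    frames that hit it is roughly needle_seconds / video_seconds.
    """
    if video_seconds <= 0 or needle_seconds <= 0 or num_frames <= 0:
        raise ValueError("all arguments must be positive")
    covered = min(needle_seconds, video_seconds)
    return num_frames * covered / video_seconds


def frames_needed(video_seconds, needle_seconds, min_hits=2):
    """Smallest uniform frame budget giving about min_hits frames per needle on average."""
    return math.ceil(min_hits * video_seconds / needle_seconds)


if __name__ == "__main__":
    FRAME_BUDGET = 256  # hypothetical budget, chosen only for illustration
    for label, seconds in [("1 minute", 60), ("1 hour", 3600), ("8 hours", 8 * 3600)]:
        hits = expected_frames_in_needle(seconds, 10, FRAME_BUDGET)
        print(f"{label:>8}: ~{hits:.2f} sampled frames inside a 10 s needle")
```

Under these assumptions, a fixed frame budget that covers a 10-second needle many times over in a 1-minute video yields well under one frame per needle at 8 hours, which is consistent with motion questions needing more than a single sampled frame.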

Why it matters (impact for developers/enterprises)

TimeScope addresses a core question for real-world deployment: can a system truly understand long video narratives, or are advances in frame-level retrieval being mistaken for long-context understanding? Real-world applications such as robotics, continuous operation analysis, and long-form video summarization demand a model that can reason over hours of footage, not just retrieve relevant frames. TimeScope’s open-source nature enables researchers and enterprises to benchmark their models consistently, compare approaches, and iterate on training strategies that explicitly target temporal reasoning and motion fidelity. By exposing where current models struggle, for example in long-horizon temporal reasoning and motion counting, TimeScope provides actionable signals for data curation and model design. TimeScope blog

The findings also serve as a reminder that high parameter counts alone do not guarantee longer temporal horizons. The benchmark shows that increasing model size does not automatically extend the effective context window past a certain point, reinforcing the need for training regimes and architectures optimized for long-range temporal understanding. TimeScope blog

Technical details or Implementation

TimeScope rests on a simple but powerful premise: embed short needles into longer videos and require holistic understanding of the entire input to solve tasks. The needles are deliberately short (about 5–10 seconds) so that models must process the whole timeline rather than rely on sparse frame sampling or localized cues. The base video length spans from 1 minute to 8 hours, creating a broad range of long-context scenarios for evaluation. TimeScope blog

Implementation highlights:

- Needle insertion: A long base video serves as the haystack, and one or more short video needles are inserted at random positions. The needles contain the key information needed to solve the task, challenging models to process the entire input without shortcuts. TimeScope blog

- Three needle types, each targeting a different aspect of long-video comprehension: (1) text-based needles for identifying and ordering dispersed words, (2) motion-oriented needles to test dynamics across frames, and (3) localization-oriented needles that probe pinpointing content within the timeline. The design encourages deep temporal processing rather than surface retrieval. TimeScope blog

- Tasks evaluate retrieval, information synthesis, localization, and motion analysis across videos of increasing length. The setup asks models to detect, synthesize, or analyze content from needles embedded at varying depths in the timeline. TimeScope blog

In early results, Gemini 2.5-Pro emerges as the only model that maintains robust accuracy on videos longer than one hour, illustrating that some systems handle long horizons better than others. In contrast, Qwen 2.5-VL (3B and 7B) and InternVL 2.5 (2B, 4B, 8B) show accuracy-versus-length curves that are nearly indistinguishable across model sizes, plateauing at about the same context length. These patterns emphasize that scaling parameters alone does not guarantee extended temporal understanding. TimeScope blog

TimeScope is open source, with all components released for community use. The project also includes a Hugging Face Space that provides detailed results and visualizations. This transparency supports researchers and developers in diagnosing weaknesses and pursuing targeted improvements in model training and evaluation. TimeScope blog
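The needle-insertion step described above can be sketched as a timeline computation. This is a minimal illustration, not the TimeScope implementation: the `Segment` type, the `compose_timeline` function, and the choice of uniformly random cut points are all assumptions made for the example, and no actual video data is touched.

```python
import random
from dataclasses import dataclass


@dataclass
class Segment:
    source: str   # "base" or a hypothetical needle id such as "needle_0"
    start: float  # start time in the composed video, in seconds
    end: float    # end time in the composed video, in seconds


def compose_timeline(base_seconds, needle_seconds, seed=0):
    """Splice needles into a base video at random cut points; return the segment layout."""
    rng = random.Random(seed)
    # One random cut point in the base video per needle, walked in time order.
    cuts = sorted(rng.uniform(0, base_seconds) for _ in needle_seconds)
    timeline = []
    cursor = 0.0    # current position in the composed video
    prev_cut = 0.0  # how much of the base video has been consumed so far
    for i, (cut, duration) in enumerate(zip(cuts, needle_seconds)):
        if cut > prev_cut:
            timeline.append(Segment("base", cursor, cursor + (cut - prev_cut)))
            cursor += cut - prev_cut
        timeline.append(Segment(f"needle_{i}", cursor, cursor + duration))
        cursor += duration
        prev_cut = cut
    if base_seconds > prev_cut:
        timeline.append(Segment("base", cursor, cursor + (base_seconds - prev_cut)))
    return timeline


if __name__ == "__main__":
    # A 1-hour base video with three needles of 6, 8, and 10 seconds.
    for seg in compose_timeline(3600.0, [6.0, 8.0, 10.0], seed=1):
        print(f"{seg.source:>9}: {seg.start:8.1f} s to {seg.end:8.1f} s")
```

The needle start times in the resulting layout double as ground truth for localization-style questions, and fixing the seed keeps placements reproducible across model runs.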

Tables and quick comparisons

| Model | Long-video behavior | Notes |
|---|---|---|
| Gemini 2.5-Pro | Maintains strong accuracy on videos longer than 1 hour | Standout among tested models |
| Qwen 2.5-VL 3B | Long-video curve nearly identical to the 7B variant | Excels in OCR-based information synthesis; weaker in motion counting |
| Qwen 2.5-VL 7B | Long-video curve similar to 3B | Similar trend to 3B; requires robust temporal understanding |
| InternVL 2.5 (2B/4B/8B) | Long-video curves nearly identical across sizes | Plateau at a similar horizon across sizes |
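One way to turn per-question results into the kind of long-video behavior summarized in the table is to group accuracy by video length and find where it drops below a chosen threshold. The sketch below is an assumption-laden illustration: the function names, the 0.8 threshold, and the toy records are invented for the example and are not TimeScope results or its scoring code.

```python
from collections import defaultdict


def accuracy_by_duration(records):
    """Group (video_seconds, is_correct) pairs into an accuracy-per-duration curve."""
    totals = defaultdict(lambda: [0, 0])  # duration -> [num_correct, num_total]
    for seconds, correct in records:
        totals[seconds][0] += int(correct)
        totals[seconds][1] += 1
    return {s: c / n for s, (c, n) in sorted(totals.items())}


def effective_horizon(curve, threshold=0.8):
    """Longest duration up to which accuracy stays at or above the threshold.

    Returns None if even the shortest duration falls below the threshold.
    """
    horizon = None
    for seconds in sorted(curve):
        if curve[seconds] < threshold:
            break
        horizon = seconds
    return horizon


if __name__ == "__main__":
    # Toy records, made up purely to exercise the functions.
    toy = [(60, True), (60, True), (3600, True), (3600, False), (28800, False)]
    curve = accuracy_by_duration(toy)
    print(curve)
    print("horizon at 0.8:", effective_horizon(curve, threshold=0.8))
```

Comparing the horizon across model sizes in the same family gives a single number for the plateau effect: if two sizes share the same horizon, the extra parameters did not extend the usable video length under this metric.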

Key takeaways

- Hour-long video understanding remains aspirational; claims of processing thousands of frames are not yet matched by robust long-video performance. TimeScope blog

- Model performance degrades as video length increases, highlighting the need for true temporal reasoning capabilities beyond static retrieval. TimeScope blog

- Gemini 2.5-Pro stands out for longer videos, but most models show plateauing behavior, suggesting limits of current architectures or training data. TimeScope blog

- Simply scaling parameters does not guarantee a longer temporal horizon; the benchmark reveals nuanced trade-offs across tasks like information synthesis and motion perception. TimeScope blog

- TimeScope’s open-source nature invites the community to improve data, training regimes, and evaluation methods to move closer to real long-video understanding. TimeScope blog

FAQ

- What is TimeScope?

  TimeScope is an open-source benchmark hosted on Hugging Face that tests long-video understanding by inserting short needles into base videos to probe temporal reasoning and motion analysis.

- How are needles inserted and what do they test?

  Short video needles (~5–10 seconds) are embedded at random positions in base videos (1 minute to 8 hours). They test retrieval, synthesis, localization, and motion analysis by requiring deep temporal understanding rather than simple frame-level retrieval.

- What have results shown so far?

  Gemini 2.5-Pro maintains strong accuracy on videos longer than one hour, while Qwen 2.5-VL and InternVL 2.5 show long-video curves that are similar across model sizes, plateauing at roughly the same horizon.

References

More news

Scaleway Joins Hugging Face Inference Providers for Serverless, Low-Latency Inference

Scaleway is now a supported Inference Provider on the Hugging Face Hub, enabling serverless inference directly on model pages with JS and Python SDKs. Access popular open-weight models and enjoy scalable, low-latency AI workflows.

Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

A detailed look at how NVIDIA Run:ai Model Streamer lowers cold-start times for LLM inference by streaming weights into GPU memory, with benchmarks across GP3, IO2, and S3 storage.

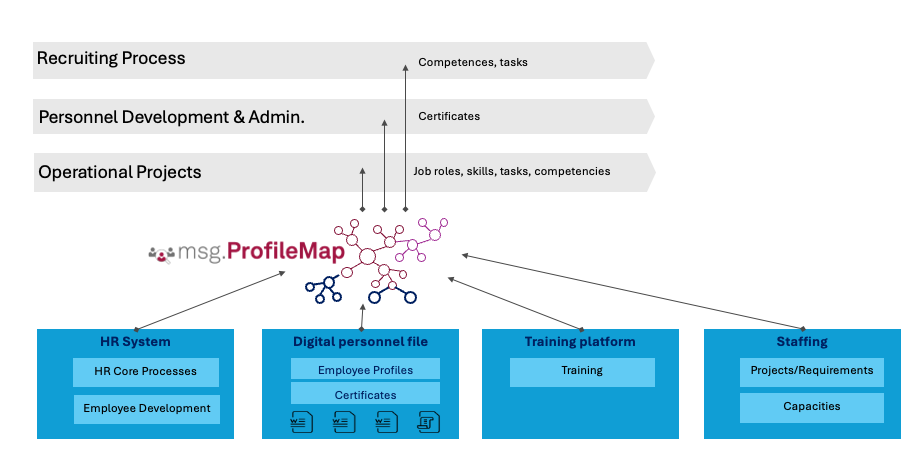

How msg enhanced HR workforce transformation with Amazon Bedrock and msg.ProfileMap

This post explains how msg automated data harmonization for msg.ProfileMap using Amazon Bedrock to power LLM-driven data enrichment, boosting HR concept matching accuracy, reducing manual workload, and aligning with EU AI Act and GDPR.

How Quantization Aware Training Enables Low-Precision Accuracy Recovery

Explores quantization aware training (QAT) and distillation (QAD) as methods to recover accuracy in low-precision models, leveraging NVIDIA's TensorRT Model Optimizer and FP8/NVFP4/MXFP4 formats.

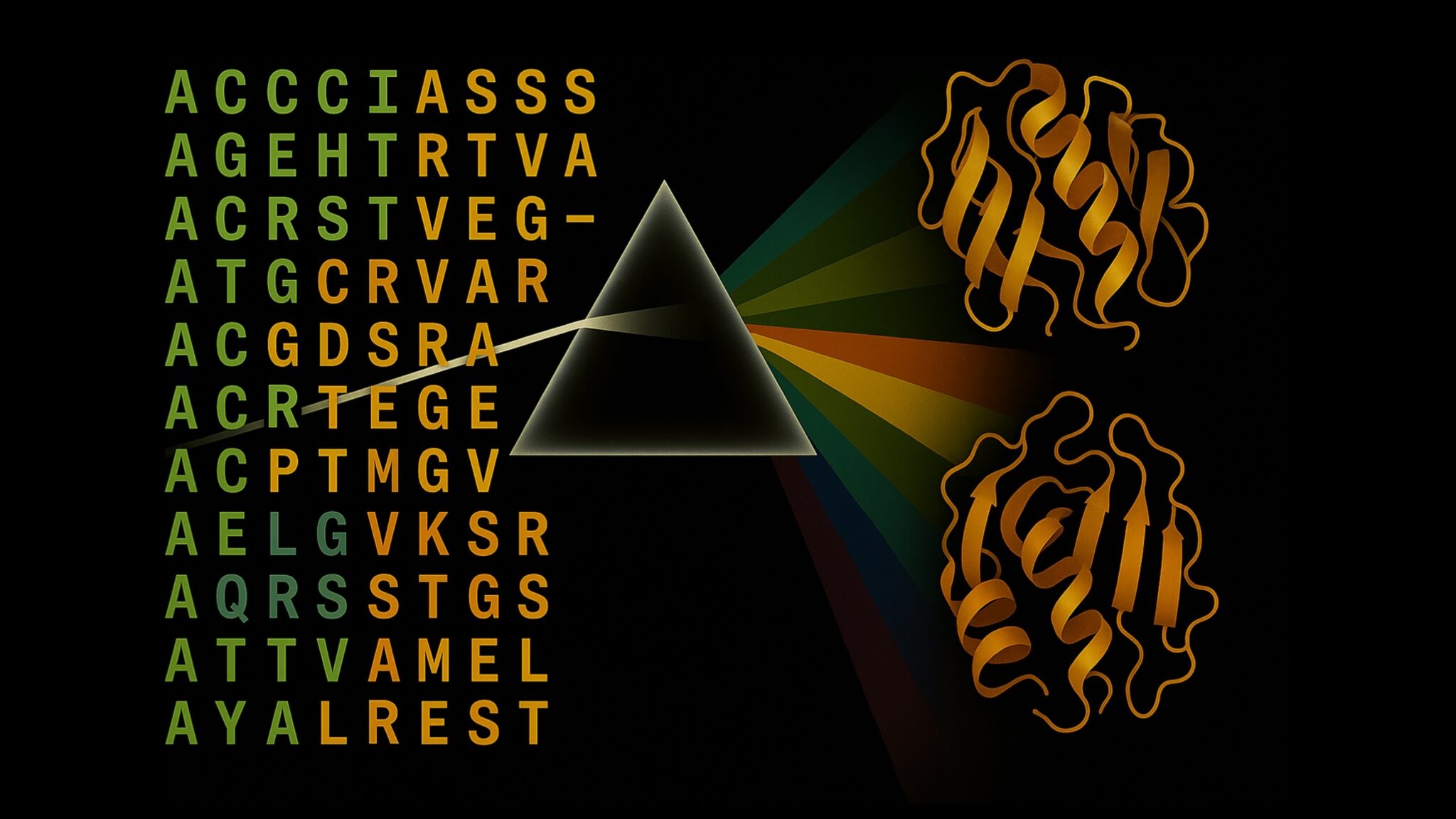

Accelerate Protein Structure Inference Over 100x with NVIDIA RTX PRO 6000 Blackwell Server Edition

NVIDIA’s RTX PRO 6000 Blackwell Server Edition dramatically speeds protein-structure inference, enabling end-to-end GPU-resident workflows with OpenFold and TensorRT—achieving up to 138x faster folding than AlphaFold2.

Why language models hallucinate—and how OpenAI is changing evaluations to boost reliability

OpenAI explains that hallucinations in language models stem from evaluation incentives that favor guessing over uncertainty. The article outlines how updated scoring and uncertainty-focused benchmarks can reduce confident errors and improve reliability.