Five Major Improvements to Gradio MCP Servers

Sources: https://huggingface.co/blog/gradio-mcp-updates, Hugging Face Blog

TL;DR

- Gradio adds a File Upload MCP server so agents can upload files directly to Gradio applications, eliminating the need for public URLs.

- Progress notifications now stream in real time to MCP clients, enabling live monitoring of longer tasks.

- Backend APIs described by an OpenAPI schema can be exposed to MCP-compatible LLMs with a single line of code, automating what used to be manual wiring.

- OpenAPI support is added via gr.load_openapi, which creates a Gradio app from an OpenAPI schema; launching that app with mcp_server=True exposes it as an MCP server.

- Authentication headers can be surfaced and passed into your functions using gr.Header, and tool descriptions can be customized with api_description.

Context and background

Gradio is an open-source Python package for creating AI-powered web applications. It is compliant with the MCP server protocol and powers thousands of MCP servers hosted on Hugging Face Spaces. The Gradio team is betting big on Gradio and Spaces as the best way to build and host AI-powered MCP servers. The updates described here come from the 5.38.0 release and focus on reducing friction in MCP server development and operation: file handling, progress monitoring, API integration, header management, and tool descriptions. For developers and teams building with MCP, they address common pain points such as supplying file inputs to remote servers, tracking long-running tasks, and wiring up existing backends.

What’s new

File Upload MCP server

Previously, when a Gradio MCP server ran on a different machine, inputs like images, videos, or audio required publicly accessible URLs for remote download. The new File Upload MCP server lets tools upload files directly to the Gradio application, removing the manual step of hosting inputs publicly. This change is particularly helpful in agent-enabled workflows where files must be supplied by the agent without extra steps. The documentation notes how to start this server when your MCP tools require file inputs. Hugging Face Blog
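As a rough sketch of what the setup can look like, the snippet below builds an MCP client configuration entry that starts the File Upload MCP server next to a running Gradio app. The `uvx --from gradio[mcp] gradio upload-mcp <url> <directory>` invocation follows the command shown in the Gradio documentation at the time of writing and should be checked against the current guides. The `mcpServers` key, the entry name `upload_files_to_gradio`, the URL, and the directory are placeholders chosen for illustration.

```python
import json


def upload_server_entry(gradio_url: str, upload_dir: str) -> dict:
    """Build a client config entry that starts the File Upload MCP server.

    Assumption: the server is started with `gradio upload-mcp <url> <dir>`
    through uvx; confirm the exact command in the Gradio guides.
    """
    return {
        "command": "uvx",
        "args": [
            "--from", "gradio[mcp]",
            "gradio", "upload-mcp",
            gradio_url,  # the running Gradio app that receives the files
            upload_dir,  # local directory the agent is allowed to upload from
        ],
    }


# Placeholder values: adjust the URL and directory for your own setup.
config = {
    "mcpServers": {
        "upload_files_to_gradio": upload_server_entry(
            "http://localhost:7860/", "/path/to/uploads"
        )
    }
}

print(json.dumps(config, indent=2))
```

The printed JSON can be merged into the configuration file of an MCP client that launches local servers from a command and argument list.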

Real-time progress streaming

Long-running AI tasks can take time, and users benefit from visibility into progress as work proceeds. Gradio now streams progress notifications to the MCP client, enabling real-time monitoring of status updates during task execution. Developers are encouraged to implement their MCP tools to emit these progress statuses. Hugging Face Blog
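A minimal sketch of a tool that emits progress, assuming Gradio's existing `gr.Progress` tracker is the mechanism whose updates get forwarded to MCP clients. The function names `count_words_slowly` and `build_demo` are hypothetical; the work itself is kept in a plain function that takes a reporting callback, so it can be exercised without a running server.

```python
import time
from typing import Callable


def count_words_slowly(
    text: str,
    report: Callable[[float, str], None],
    delay: float = 0.0,
) -> int:
    """Count words one at a time, reporting the completed fraction after each."""
    words = text.split()
    for i in range(1, len(words) + 1):
        time.sleep(delay)  # stand-in for real work
        report(i / len(words), f"Processed {i}/{len(words)} words")
    return len(words)


def build_demo():
    """Wrap the function in a Gradio app (requires `gradio` to be installed)."""
    import gradio as gr  # imported lazily so the sketch loads without Gradio

    def word_count(text: str, progress=gr.Progress()) -> int:
        """Count the words in a piece of text, reporting progress along the way.

        Args:
            text: The text whose words should be counted.
        """
        return count_words_slowly(
            text,
            lambda fraction, desc: progress(fraction, desc=desc),
            delay=0.5,
        )

    return gr.Interface(word_count, gr.Textbox(), gr.Number())
```

Calling `build_demo().launch(mcp_server=True)` would serve the tool over MCP; each `progress(...)` call is then a candidate for a status update on the client side.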

Automated backend API integration with a single line of code

Mapping existing backend APIs to MCP tools by hand can be error-prone and time-consuming. With this release, Gradio automates the process so you can connect a backend API to an MCP-compatible LLM with a single line of code. The one-line entry point is gr.load_openapi, described in the next section, which reduces boilerplate and the risk of misconfiguration when exposing backend capabilities through MCP.

OpenAPI support with gr.load_openapi

OpenAPI is a widely adopted standard for describing RESTful APIs. Gradio now includes a gr.load_openapi function that creates a Gradio application directly from an OpenAPI schema. You can then launch the app with mcp_server=True to automatically create an MCP server for the API. This feature streamlines API exposure to MCP-enabled agents and LLMs. Hugging Face Blog
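The flow described above can be sketched as follows, using the public Swagger Petstore schema purely as an illustration. The keyword names `openapi_spec`, `base_url`, `paths`, and `methods` reflect the `gr.load_openapi` signature as documented at the time of writing and should be treated as assumptions to verify against the installed Gradio version; `build_demo` and `main` are hypothetical helper names.

```python
# Public demo schema, used here only as an example target.
PETSTORE_BASE_URL = "https://petstore3.swagger.io/api/v3"
PETSTORE_SPEC = PETSTORE_BASE_URL + "/openapi.json"


def build_demo():
    """Create a Gradio app from the Petstore OpenAPI schema."""
    import gradio as gr  # imported lazily so the sketch loads without Gradio

    return gr.load_openapi(
        openapi_spec=PETSTORE_SPEC,
        base_url=PETSTORE_BASE_URL,
        paths=["/pet.*"],         # assumed: pattern filter on endpoint paths
        methods=["get", "post"],  # assumed: restrict to these HTTP methods
    )


def main() -> None:
    # mcp_server=True asks Gradio to expose the generated app as an MCP server.
    build_demo().launch(mcp_server=True)
```

Filtering by path and method keeps the generated tool list small, which tends to matter when an LLM has to choose among the exposed tools.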

Header-based authentication and tool description customization

Many MCP servers rely on authentication headers to call services on behalf of users. You can declare an argument of your MCP tool function as gr.Header, and Gradio will extract the matching header from the incoming request (if present) and pass it to your function. Declaring headers this way also makes the required headers visible in the connection documentation, so users know which credentials to supply. The blog's example extracts an X-API-Token header and passes it as an argument to an API call. The release also notes that Gradio automatically generates tool descriptions from function names and docstrings, with api_description allowing further customization. Hugging Face Blog
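A minimal sketch of the header flow, assuming that an argument named `x_api_token` and annotated with `gr.Header` is filled from the `X-API-Token` request header. `call_backend` is a hypothetical stand-in for a real API call, and `build_demo` is a hypothetical helper name.

```python
from typing import Optional


def call_backend(prompt: str, api_token: Optional[str]) -> str:
    """Hypothetical stand-in for a real API call made on the user's behalf."""
    if not api_token:
        return "Rejected: missing X-API-Token header"
    return f"Accepted prompt {prompt!r} (token supplied)"


def build_demo():
    """Wrap the call in a Gradio app (requires `gradio` to be installed)."""
    import gradio as gr  # imported lazily so the sketch loads without Gradio

    def make_request(prompt: str, x_api_token: gr.Header) -> str:
        """Send a prompt to the backend on behalf of the caller.

        Args:
            prompt: The prompt to forward to the backend.
        """
        # Gradio is expected to pass the header value here, if it was sent.
        return call_backend(prompt, x_api_token)

    # Only the prompt is a UI input; the header argument is not a component.
    return gr.Interface(
        make_request,
        [gr.Textbox(label="Prompt")],
        gr.Textbox(label="Response"),
    )
```

Keeping the header out of the input components means the credential travels with the request rather than with the tool arguments the LLM fills in.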

Tool descriptions and api_description

Beyond automatic generation, tool descriptions can be refined using the api_description parameter. This enables more precise and user-friendly descriptions for MCP tools, aligning documentation with how you intend tools to be used in the MCP ecosystem. Hugging Face Blog
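To illustrate the difference between the two sources of a description, the sketch below defines a small tool with a docstring and then overrides the generated description through `api_description`. It assumes `gr.Interface` accepts `api_description` as a keyword argument, as the release describes; `letter_counter` and `build_demo` are illustrative names.

```python
def letter_counter(word: str, letter: str) -> int:
    """Count how many times a letter appears in a word.

    Args:
        word: The text to search.
        letter: The single character to count.
    """
    return word.lower().count(letter.lower())


def build_demo():
    """Wrap the function in a Gradio app (requires `gradio` to be installed)."""
    import gradio as gr  # imported lazily so the sketch loads without Gradio

    return gr.Interface(
        fn=letter_counter,
        inputs=["text", "text"],
        outputs="number",
        # Without this argument, the description would be derived from the
        # function name and docstring above.
        api_description="Count occurrences of a letter in a word, ignoring case.",
    )
```

A custom description is mostly useful when the docstring is written for maintainers and the tool description needs to be written for the model or end user.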

Context on guides and community guidance

The blog points readers toward Gradio Guides for deeper guidance on using the new MCP server features and for security considerations. These resources are recommended for developers implementing the new MCP-related capabilities. Hugging Face Blog

Why it matters (impact for developers/enterprises)

- Lower integration friction: File Upload and OpenAPI integration reduce the manual steps required to bring external data and APIs into MCP workflows, accelerating development timelines.

- Improved observability: Real-time progress streaming gives operators and researchers better visibility into long-running MCP tasks, enabling faster triage and performance tuning.

- Safer and clearer integration: Header extraction and automatic documentation of required headers help developers communicate and enforce proper credential handling without guessing which headers are needed.

- Consistent tool descriptions: Automatically generated tool descriptions, with optional api_description customization, improve usability for end-users and reduce documentation gaps in MCP backends.

- Faster backend wiring: The one-line API integration capability lowers the barrier to connecting business backends to MCP-enabled LLMs, enabling more rapid experimentation and deployment on Hugging Face Spaces.

Technical details and implementation

- File Upload: The new MCP server supports direct file uploads to the Gradio application, addressing the limitation where inputs on remote servers required publicly accessible URLs. This is designed to simplify workflows where agents provide file inputs without manual hosting steps. Hugging Face Blog

- Progress streaming: Gradio streams progress updates to MCP clients so users can monitor status in real time during long tasks. Developers are encouraged to emit these progress statuses from MCP tools. Hugging Face Blog

- Backend integration: A single line of code can integrate a backend API into any MCP-compatible LLM, automating what was previously a manual mapping task. Hugging Face Blog

- OpenAPI support: The gr.load_openapi function creates a Gradio app from an OpenAPI schema; launching with mcp_server=True automatically creates an MCP server for the API. Hugging Face Blog

- Headers and function calls: You can declare MCP server arguments as gr.Header. Gradio will extract the header from the request (if present) and pass it to your function, enabling headers to be reflected in the MCP connection docs. The X-API-Token example demonstrates the flow. Hugging Face Blog

- Tool descriptions and api_description: Gradio automatically generates tool descriptions from function names and docstrings, with api_description allowing further customization to tailor descriptions for human readers and MCP consumers. Hugging Face Blog

Key takeaways

- File Upload MCP server reduces manual steps for file inputs from remote clients.

- Real-time progress streaming improves task visibility and user experience.

- One-line backend integration lowers the barrier to connecting APIs with MCP-enabled LLMs.

- OpenAPI support enables API exposure through MCP with minimal setup via gr.load_openapi.

- gr.Header-based argument passing clarifies required credentials and improves documentation generation.

- Automatic tool descriptions, plus api_description customization, enhance MCP tool usability and clarity.

FAQ

- Q: What is the File Upload MCP server? A: It allows tools to upload files directly to a Gradio application, removing the need for public URLs for input files.

- Q: How does progress streaming help MCP users? A: It streams progress notifications to the MCP client, enabling real-time monitoring of task status.

- Q: How can I integrate a backend API with an MCP-enabled LLM? A: Use a single line of code to connect your backend API to any MCP-compatible LLM, automating the integration process. Hugging Face Blog

- Q: What does gr.load_openapi do? A: It creates a Gradio application from an OpenAPI schema, and you can launch it as an MCP server with mcp_server=True. Hugging Face Blog

- Q: How does gr.Header help with authentication and tool docs? A: You declare the relevant function arguments as gr.Header; Gradio extracts those headers from the request and passes them to your function, and the connection docs can display which headers are required. Hugging Face Blog

References

More news

Scaleway Joins Hugging Face Inference Providers for Serverless, Low-Latency Inference

Scaleway is now a supported Inference Provider on the Hugging Face Hub, enabling serverless inference directly on model pages with JS and Python SDKs. Access popular open-weight models and enjoy scalable, low-latency AI workflows.

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

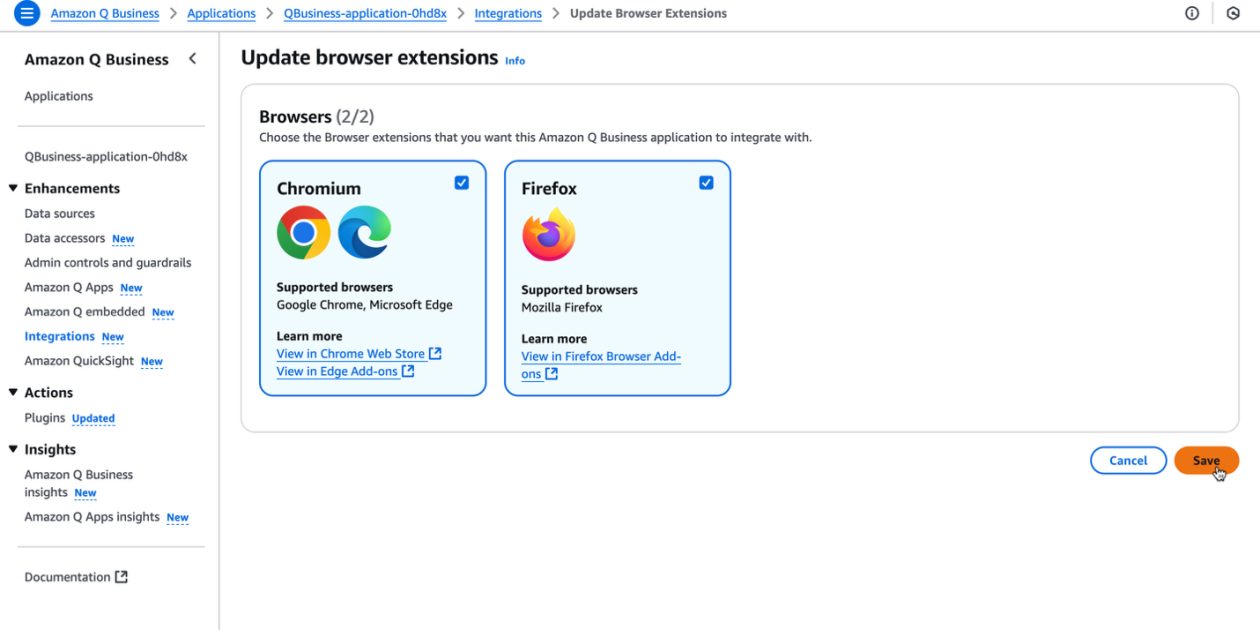

Supercharge your organization’s productivity with the Amazon Q Business browser extension

The Amazon Q Business browser extension brings context-aware, AI-driven assistance to your browser for Lite and Pro subscribers, enabling rapid, source-backed insights and seamless workflows.

Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

A detailed look at how NVIDIA Run:ai Model Streamer lowers cold-start times for LLM inference by streaming weights into GPU memory, with benchmarks across GP3, IO2, and S3 storage.

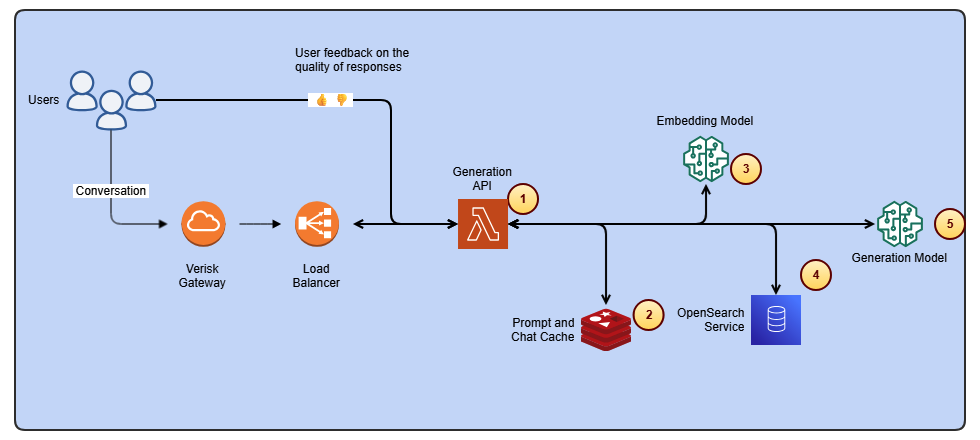

Streamline ISO-rating content changes with Verisk Rating Insights and Amazon Bedrock

Verisk Rating Insights, powered by Amazon Bedrock, LLMs, and RAG, enables a conversational interface to access ISO ERC changes, reducing manual downloads and enabling faster, accurate insights.

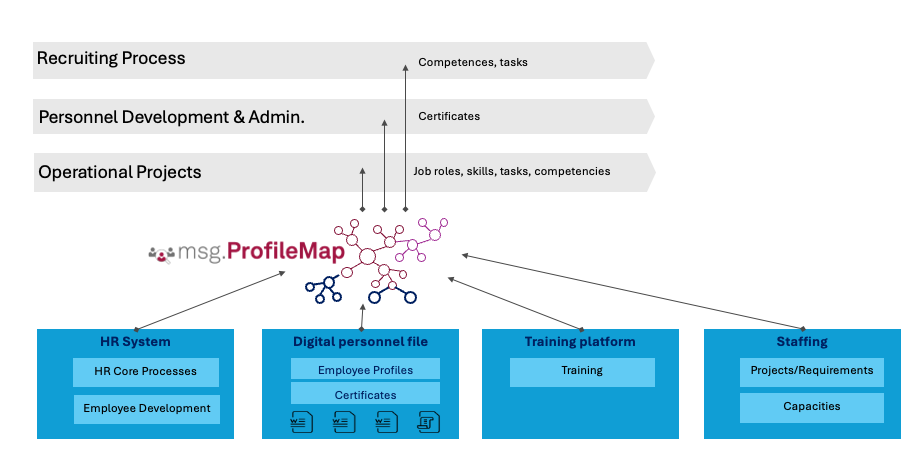

How msg enhanced HR workforce transformation with Amazon Bedrock and msg.ProfileMap

This post explains how msg automated data harmonization for msg.ProfileMap using Amazon Bedrock to power LLM-driven data enrichment, boosting HR concept matching accuracy, reducing manual workload, and aligning with EU AI Act and GDPR.