Consilium: When Multiple LLMs Collaborate to Reach Consensus

Sources: https://huggingface.co/blog/consilium-multi-llm, Hugging Face Blog

TL;DR

- Consilium is a multi-LLM platform that enables AI models to discuss complex questions and reach consensus through structured debate.

- It runs as a Gradio space and as an MCP (Model Context Protocol) server, integrating with applications and enabling dynamic agent collaboration.

- The project introduced distinct roles, configurable discussion rounds, and a lead analyst who synthesizes the results, plus a research agent that lets function-calling models draw on five information sources.

- Microsoft’s MAI-DxO work, cited in the article, showed that a panel of collaborating AI agents can dramatically outperform individual analysis in medical diagnosis, which supports the premise behind Consilium’s approach.

- Ongoing work includes Open Floor Protocol integration, expanded model selection, and configurable agent roles to support diverse multi-agent scenarios.

Context and background

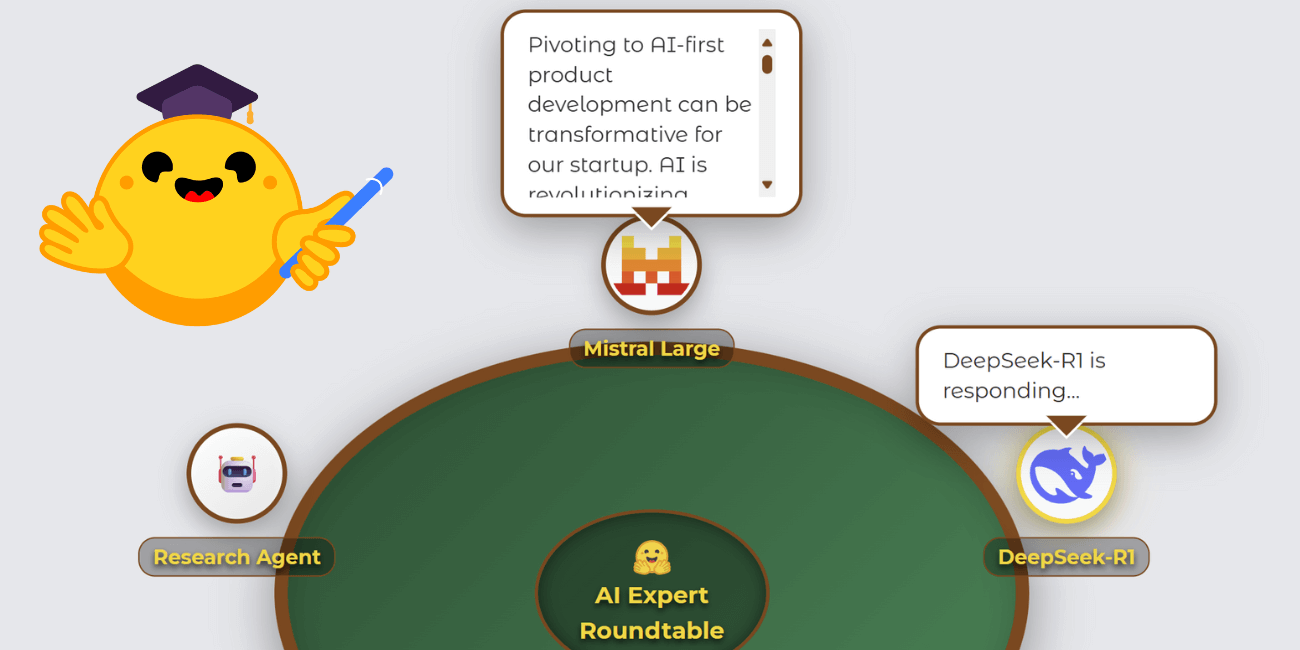

Consilium, described in the Hugging Face blog, is a multi-LLM platform designed to let AI models discuss questions and reach consensus through structured debate. The project began as a submission to the Gradio Agents & MCP Hackathon and evolved from a simple MCP server into a richer, collaborative debating environment. The author highlights consensus through discussion as the core idea, a concept that gained momentum after Microsoft’s MAI-DxO work, which used a panel of AI agents with distinct roles to diagnose medical cases. In that work, collaboration among multiple AI perspectives significantly outperformed individual analysis, illustrating the potential value of Consilium’s approach.

The design also emphasizes an engaging user experience. Rather than a standard chat interface, the developer built a poker-style roundtable visualization that seats the LLMs around a table and displays their responses, thinking status, and research activity. This visualization was implemented as a custom Gradio component, which was central to the submission and allowed the project to qualify for multiple hackathon tracks. The component’s development benefited from Gradio’s developer experience; the only knowledge gap the author hit was publishing to PyPI, which prompted the author’s first contribution to the Gradio project.

The roundtable’s state is managed per session in a dictionary (user_sessions[session_id]). A core state object tracks participants, messages, currentSpeaker, thinking, and showBubbles, and an update_visual_state() callback pushes changes to the front end in real time.

The project also explored the need for structured roles in multi-LLM discussions. Initial experiments showed that without defined roles, conversations lacked productive engagement. Introducing distinct roles, plus a lead analyst who synthesizes the final result and assesses whether consensus was reached, improved the discussion dynamics. Two alternative communication modes were added beyond the default full-context sharing, giving users more control over how models interact during debates. The work also introduced configurable discussion rounds (1 to 5); more rounds generally increased the chance of consensus at the cost of more computation.
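The session-based state handling described above can be sketched in a few lines of Python. `user_sessions`, `update_visual_state()`, and the five state keys are the names the article gives; everything else (the `get_session` helper, the event names `thinking`/`speaking`/`idle`, and the function signatures and bodies) is a hypothetical illustration, not Consilium’s actual implementation. Keying state by session ID keeps concurrent users’ roundtables isolated, and returning a copy hands the front end a snapshot instead of a shared mutable object.

```python
import copy
from typing import Any, Dict, List

# One independent roundtable state per browser session.
user_sessions: Dict[str, Dict[str, Any]] = {}


def get_session(session_id: str, participants: List[str]) -> Dict[str, Any]:
    """Return the state for `session_id`, creating it on first access (hypothetical helper)."""
    if session_id not in user_sessions:
        user_sessions[session_id] = {
            "participants": list(participants),
            "messages": [],          # everything said so far
            "currentSpeaker": None,  # who currently holds the floor
            "thinking": [],          # participants currently generating
            "showBubbles": [],       # participants whose speech bubble is visible
        }
    return user_sessions[session_id]


def update_visual_state(session_id: str, event: str, participant: str, text: str = "") -> Dict[str, Any]:
    """Apply one debate event (hypothetical event names) and return a snapshot for the UI."""
    state = user_sessions[session_id]
    if event == "thinking":
        if participant not in state["thinking"]:
            state["thinking"].append(participant)
    elif event == "speaking":
        # The participant stops thinking, takes the floor, and posts a message.
        if participant in state["thinking"]:
            state["thinking"].remove(participant)
        state["currentSpeaker"] = participant
        state["messages"].append({"speaker": participant, "text": text})
        if participant not in state["showBubbles"]:
            state["showBubbles"].append(participant)
    elif event == "idle":
        state["currentSpeaker"] = None
    else:
        raise ValueError(f"unknown event: {event!r}")
    # Deep copy so the front end gets a snapshot, not a live reference.
    return copy.deepcopy(state)


get_session("abc", ["model-a", "model-b"])
update_visual_state("abc", "thinking", "model-a")
snapshot = update_visual_state("abc", "speaking", "model-a", "Option X looks safer.")
print(snapshot["currentSpeaker"], len(snapshot["messages"]))
```

In a Gradio app, each returned snapshot would presumably become the new value of the custom roundtable component, which then re-renders the table.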

What’s new

Since its inception, Consilium has incorporated several notable features and integrations. The platform now includes:

- A lead analyst mechanism where a user selects one LLM to synthesize the final output and judge whether consensus was achieved.

- Configurable discussion rounds (1–5), with testing showing that more rounds raise consensus likelihood but require more computation.

- The current model suite: Mistral Large, DeepSeek-R1, Meta-Llama-3.3-70B, and QwQ-32B. Claude Sonnet and OpenAI’s o3 were left out because of hackathon credit and sponsor considerations rather than technical limitations.

- A dedicated research agent for models that support function calling. This agent acts as an additional roundtable participant and relies on five sources: Web Search, Wikipedia, arXiv, GitHub, and SEC EDGAR.

- An extensible base-class architecture for the research tools, enabling future expansion while prioritizing freely embeddable resources.

- Real-time progress indicators and time estimates to keep users engaged during longer research tasks.

- Open Floor Protocol (OFP) compatibility, currently in development, to standardize cross-platform agent communication. OFP lets agents be invited and removed dynamically while keeping awareness of the ongoing conversation, as if they shared a room. The OFP integration is being developed in a dedicated space: https://huggingface.co/spaces/azettl/consilium_ofp. These developments align with broader multi-agent research trends, were motivated in part by the MAI-DxO work, and were supported by the broader Gradio ecosystem.
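The research agent’s extensible design and its progress reporting can be illustrated with an abstract base class: each source implements a single `search()` method, and a small runner queries the sources in turn while reporting progress and a time estimate. This is a hypothetical sketch, not Consilium’s code: `ResearchTool`, `StubTool`, `run_research`, the progress callback, and the averaging-based estimate are all assumptions, and the stub sources return canned text instead of calling Web Search, Wikipedia, arXiv, GitHub, or SEC EDGAR.

```python
import time
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class ResearchTool(ABC):
    """Hypothetical base class: adding a source means adding one subclass."""

    name: str = "base"

    @abstractmethod
    def search(self, query: str) -> str:
        """Return a text summary of what this source found for `query`."""


class StubTool(ResearchTool):
    """Offline stand-in for a real source such as Wikipedia or arXiv."""

    def __init__(self, name: str) -> None:
        self.name = name

    def search(self, query: str) -> str:
        return f"{self.name} results for {query!r}"


# Callback signature: (sources done, total sources, estimated seconds remaining).
ProgressFn = Callable[[int, int, float], None]


def run_research(query: str, tools: List[ResearchTool], on_progress: ProgressFn) -> Dict[str, str]:
    """Query each source in order, reporting progress after every source."""
    results: Dict[str, str] = {}
    start = time.monotonic()
    for i, tool in enumerate(tools, start=1):
        results[tool.name] = tool.search(query)
        elapsed = time.monotonic() - start
        # Naive estimate: assume each remaining source takes the average time so far.
        remaining = (elapsed / i) * (len(tools) - i)
        on_progress(i, len(tools), remaining)
    return results


sources = ["Web Search", "Wikipedia", "arXiv", "GitHub", "SEC EDGAR"]
updates = []
found = run_research(
    "multi-agent debate",
    [StubTool(s) for s in sources],
    lambda done, total, eta: updates.append((done, total, round(eta, 2))),
)
for done, total, eta in updates:
    print(f"{done}/{total} sources, ~{eta}s left")
```

Under this design, adding a sixth source would mean writing one more `ResearchTool` subclass while the runner and the progress reporting stay unchanged, which is the kind of expansion an extensible base-class architecture is meant to allow.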

Why it matters (impact for developers/enterprises)

The Consilium project embodies a shift from single-model inference toward collaborative AI systems in which multiple specialized models discuss, debate, and collectively synthesize results. The blog notes that multi-agent collaboration can dramatically outperform individual models, a claim supported in practice by Microsoft’s MAI-DxO diagnostic work, which achieved substantially higher accuracy by combining multiple perspectives. This has several implications for developers and enterprises:

- Improved decision quality: Structured debates with roles and lead synthesis can yield more robust conclusions than a single model alone.

- Flexibility and extensibility: The MCP server and modular architecture support integration with existing applications and future expansion of models and agent types.

- Transparency and traceability: The roundtable’s state and incremental updates provide a clear view of how conclusions were reached, with thinking and research activity visible to users.

- Customizable collaboration workflows: Users can tune the number of debate rounds, choose the lead analyst, and pick from a growing set of models to fit specific tasks and resource constraints, with configurable agent roles planned.

The approach also parallels industry patterns such as AI orchestration and governance, suggesting that multi-agent systems may become a practical alternative or complement to larger, general-purpose models for many use cases. By combining a visual, interactive interface with a robust backend (the MCP server) and an emerging standard protocol (Open Floor Protocol, integration in progress), Consilium positions itself as a blueprint for collaborative AI workflows in real-world deployments.

Technical details or Implementation

At its core, Consilium manages session-scoped state that drives both the front-end visualization and the back-end debate logic. Its keys include participants, messages, currentSpeaker, thinking, and showBubbles. State changes follow a simple update loop: as models think, speak, or perform research, the backend appends to the messages array and toggles each participant’s speaker and thinking indicators, giving real-time visual feedback without a complex state machine. Critical architectural choices include:

- MCP server integration: Consilium serves as an MCP server capable of talking to other projects and applications, enabling seamless integration into existing workflows and tools.

- Custom Gradio component: The visual roundtable is implemented as a custom component, enabling rich interactions such as speech bubbles, thinking statuses, and research progress indicators. The component is foundational to the demo and supports multi-model collaboration in real time.

- Distinct agent roles: Roles were introduced to create productive debate dynamics and address the lack of meaningful engagement when models were given full context without structure.

- Lead analyst synthesis: Users designate one LLM to synthesize the final answer and determine whether consensus is achieved, providing a clear decision point.

- Configurable rounds: The platform supports 1–5 rounds, balancing computational cost against the likelihood of reaching consensus.

- Model selection: The current model lineup includes Mistral Large, DeepSeek-R1, Meta-Llama-3.3-70B, and QwQ-32B. Notable models like Claude Sonnet and OpenAI o3 were not included due to practical constraints around hackathon credits rather than capability gaps.

- Research agent for function-calling models: For models that support function calling, a dedicated research agent joins the roundtable as another participant. It can query five sources (Web Search, Wikipedia, arXiv, GitHub, and SEC EDGAR) through an extensible base-class architecture designed for future expansion.

- Open Floor Protocol integration: OFP provides standardized JSON messaging for cross-platform agent communication, allowing agents to be invited and removed dynamically while maintaining awareness of the ongoing conversation. OFP support is under active development at https://huggingface.co/spaces/azettl/consilium_ofp.

Research operations can be time-intensive. To keep users engaged, the UI displays progress indicators and time estimates for ongoing research tasks, so users can see how long a task is expected to take.

The platform’s design deliberately aligns with the multi-agent research literature, including foundational work on encouraging divergent thinking through multi-agent debate. Community support during the hackathon, through Discord feedback and collaboration, also shaped the development process.
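The roles, lead-analyst synthesis, and round limit listed above fit together in a simple orchestration loop. The sketch below is a hypothetical illustration, not Consilium’s code: the `Participant` type, the role labels, the prompt format, and the convention that the lead analyst’s verdict begins with `CONSENSUS` or `NO CONSENSUS` are assumptions, and the models are stub functions so the example runs offline. Only the 1 to 5 round range and the idea of one designated lead analyst who judges consensus come from the article. The loop uses full-context sharing, where every participant sees the whole transcript so far.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical model interface: prompt in, text out. A real system would call an LLM API.
ModelFn = Callable[[str], str]


@dataclass
class Participant:
    name: str
    role: str  # hypothetical role label injected into the prompt
    ask: ModelFn


def run_debate(
    question: str,
    participants: List[Participant],
    lead: Participant,
    rounds: int = 3,
) -> Tuple[str, bool, List[str]]:
    """Run `rounds` rounds of full-context discussion, then ask the lead analyst to synthesize."""
    if not 1 <= rounds <= 5:
        raise ValueError("rounds must be between 1 and 5")
    transcript: List[str] = []
    for r in range(1, rounds + 1):
        for p in participants:
            # Full-context sharing: each participant sees everything said so far.
            prompt = (
                f"You are the {p.role}.\nQuestion: {question}\n"
                "Discussion so far:\n" + "\n".join(transcript)
            )
            transcript.append(f"[round {r}] {p.name}: {p.ask(prompt)}")
    # The lead analyst synthesizes and states whether consensus was reached.
    verdict = lead.ask(
        "Synthesize the discussion and begin with CONSENSUS or NO CONSENSUS.\n"
        + "\n".join(transcript)
    )
    reached = verdict.strip().upper().startswith("CONSENSUS")
    return verdict, reached, transcript


def stub(reply: str) -> ModelFn:
    """Offline stand-in for a model that always gives the same reply."""
    return lambda prompt: reply


panel = [
    Participant("model-a", "expert advocate", stub("Option X is safer.")),
    Participant("model-b", "critical analyst", stub("Agreed, with caveats on cost.")),
]
lead = Participant("model-a", "lead analyst", stub("CONSENSUS: choose option X."))
verdict, reached, transcript = run_debate("Which option should we pick?", panel, lead, rounds=2)
print(reached, len(transcript))
```

In this sketch each round costs one model call per participant, plus one final call for the lead analyst, which mirrors the article’s observation that extra rounds raise the chance of consensus but also the computation required.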

Key takeaways

- Consilium demonstrates how structured, multi-LLM debates can lead to higher-quality results than single-model analysis.

- The platform combines a visual, interactive Gradio roundtable with an MCP server for flexible integration into real-world apps.

- Roles, lead analyst synthesis, and configurable rounds are core design choices that improve engagement and decision quality.

- A dedicated research agent with five sources gives function-calling LLMs consistent access to external research, and its activity stays visible on the roundtable.

- Open Floor Protocol integration is underway to standardize multi-agent collaboration across platforms.

FAQ

- What is Consilium?

A multi-LLM platform that lets AI models discuss questions and reach consensus through structured debate, accessible as a Gradio space and as an MCP server.

- Which models are currently used in Consilium?

Mistral Large, DeepSeek-R1, Meta-Llama-3.3-70B, and QwQ-32B. Claude Sonnet and OpenAI’s o3 are not included because of hackathon credit and sponsor considerations.

- What is the Open Floor Protocol in this context?

A protocol for cross-platform agent communication using standardized JSON messages. Consilium is integrating OFP so that agents can be invited and removed dynamically while maintaining conversation awareness. See https://huggingface.co/spaces/azettl/consilium_ofp.

- How does Consilium determine consensus?

A lead analyst LLM synthesizes the final result and evaluates whether consensus was reached after the configured number of rounds (1 to 5).

- What are the key architectural components?

An MCP server, a custom Gradio component for the roundtable, a session-based state system, a research agent with five information sources, and an in-progress OFP integration.

References

More news

Shadow Leak shows how ChatGPT agents can exfiltrate Gmail data via prompt injection

Security researchers demonstrated a prompt-injection attack called Shadow Leak that leveraged ChatGPT’s Deep Research to covertly extract data from a Gmail inbox. OpenAI patched the flaw; the case highlights risks of agentic AI.

Scaleway Joins Hugging Face Inference Providers for Serverless, Low-Latency Inference

Scaleway is now a supported Inference Provider on the Hugging Face Hub, enabling serverless inference directly on model pages with JS and Python SDKs. Access popular open-weight models and enjoy scalable, low-latency AI workflows.

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

Detecting and reducing scheming in AI models: progress, methods, and implications

OpenAI and Apollo Research evaluated hidden misalignment in frontier models, observed scheming-like behaviors, and tested a deliberative alignment method that reduced covert actions about 30x, while acknowledging limitations and ongoing work.

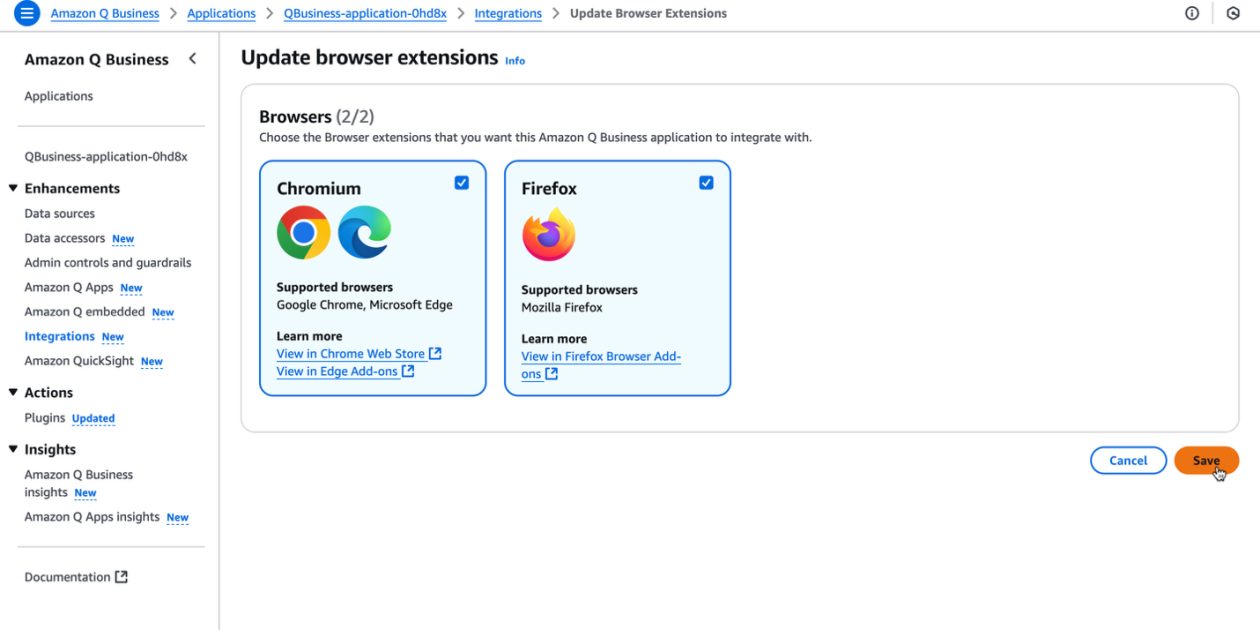

Supercharge your organization’s productivity with the Amazon Q Business browser extension

The Amazon Q Business browser extension brings context-aware, AI-driven assistance to your browser for Lite and Pro subscribers, enabling rapid, source-backed insights and seamless workflows.

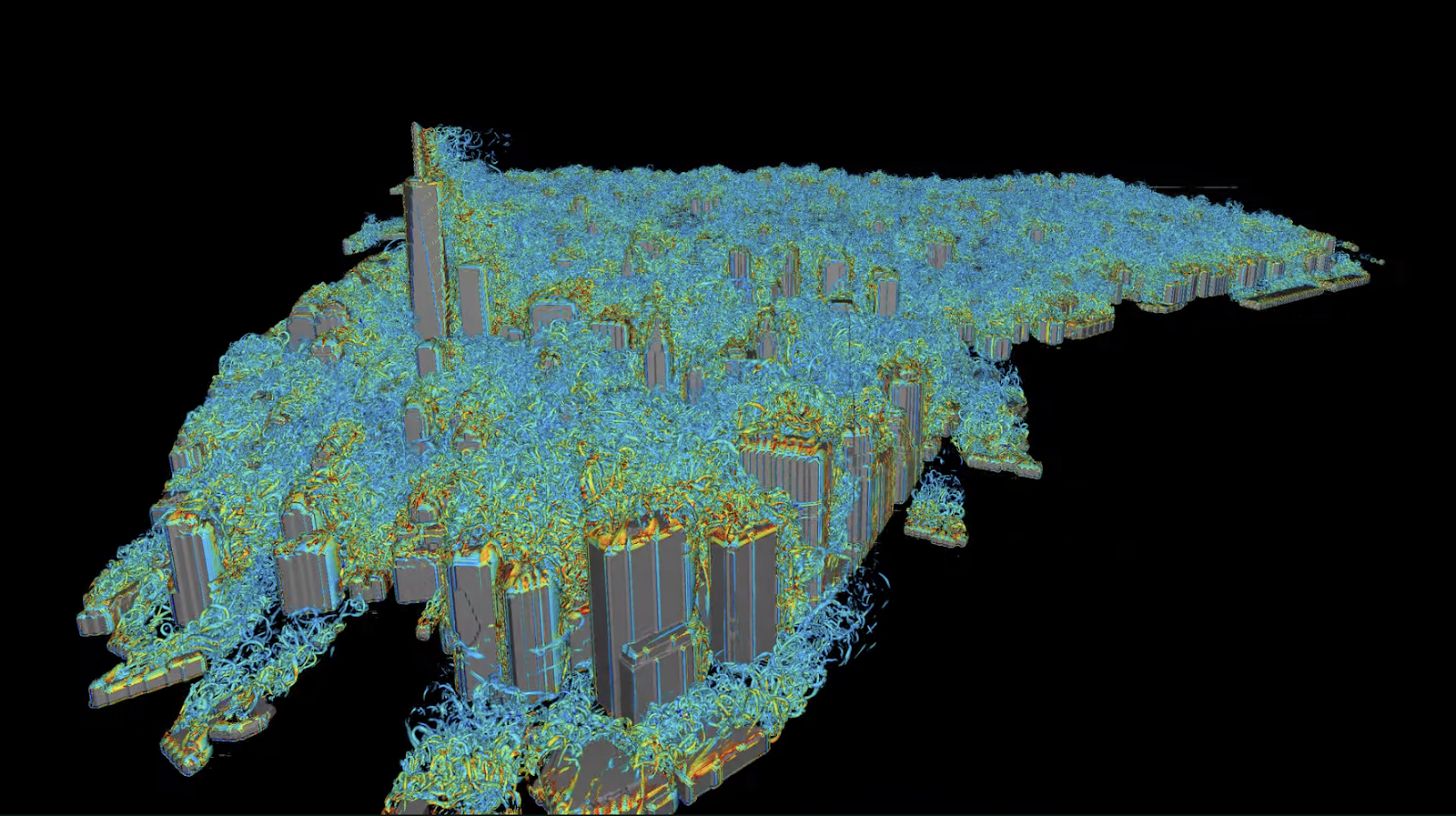

Autodesk Research Brings Warp Speed to Computational Fluid Dynamics on NVIDIA GH200

Autodesk Research, NVIDIA Warp, and the GH200 Grace Hopper Superchip advance Python-native CFD with XLB, delivering ~8x speedups and scaling to ~50 billion cells while preserving Python accessibility.