AGI Is Not Multimodal: Why Embodiment Beats Modality Gluing in AI

TL;DR

- True artificial general intelligence (AGI) needs physical grounding and embodiment, not just combining many modalities.

- Merely scaling multimodal networks is unlikely to yield human-level AGI capable of sensorimotor reasoning, motion planning, and social coordination.

- Language models (LLMs) may reflect patterns or heuristics rather than a robust world model of reality.

- Embodiment and interaction with the environment should be treated as primary; modality-based processing can emerge from this grounding, not the other way around.

- The critique engages with world-model theories, cautions against equating language-based predictions with true world understanding, and points to fields like model-based RL and task-and-motion planning as evidence for embodied intelligence.

Context and background

The debate around achieving artificial general intelligence (AGI) has increasingly centered on whether scaling large multimodal systems can produce general intelligence akin to humans. Proponents of the multimodal approach argue that integrating multiple sensing modalities creates a broadly capable system. However, the piece argues that disembodied, modality-centered definitions of AGI miss essential problem spaces that require engagement with the physical world. A true AGI, the author contends, must be capable across domains that originate in physical reality—solving real-world tasks such as repairing a car, untying a knot, or cooking—rather than being reducible to symbol manipulation alone. This view situates embodiment and environment interaction as foundational, with modality fusion seen as an emergent property rather than a starting point. The discussion also questions the idea that large language models (LLMs) build explicit world models simply by predicting the next token, instead suggesting that LLMs may rely on memorized heuristics and patterns learned from data. For broader debate on these ideas, see discussions surrounding world models, symbol manipulation, and embodied cognition in AI research. The article also references related work and perspectives in the field (e.g., discussions about world modeling, model-based RL, and planning in robotics).

What’s new

The central claim is that genuine AGI will emerge from systems deeply grounded in the physical world and capable of interacting with their environment, not from patchwork architectures that glue together many modalities. In this view, embodiment is primary and the appearance of generality across modalities should be treated as emergent rather than fundamental. This perspective challenges the notion that multimodal scale alone will yield robust, sensorimotor intelligence and social coordination. The author contends that while LLMs may appear to understand language and even reflect human-like world intuitions, they often do so through surface-level patterns rather than a grounded model of physics and causal structure. The discussion cites evidence from the difficulty of transferring purely symbolic reasoning to real-world tasks and from studies showing that predictive success on sequences can occur without a true, high-fidelity world model. The work also cites fields in AI where world models and embodied approaches are actively developed, such as model-based reinforcement learning, task and motion planning in robotics, causal world modeling, and computer vision applied to physical reality.

Why it matters (impact for developers/enterprises)

- For AI system design: prioritizing embodied, environment-interacting architectures could be more effective for achieving generality than adding more modalities to purely symbolic, next-token-driven models.

- For robotics and automation: sensorimotor reasoning, planning, and coordination with real objects in the world may require grounding in a physical world model rather than reliance on language-based proxies.

- For product strategy: investments focused on modality fusion without grounding may yield diminishing returns toward true AGI, suggesting a shift toward embodied AI research and integration with real-robot or embodied simulators.

- For risk and governance: understanding the limits of current LLM-based systems helps set realistic expectations about near-term capabilities and the need for robust, embodied evaluation environments.

Technical details or Implementation

The argument situates several technical strands as evidence for embodiment over pure modality blending:

- World models and model-based reasoning, including model-based reinforcement learning, task and motion planning in robotics, and causal world modeling, are highlighted as critical to solving physical-world tasks. While LLMs can perform impressive linguistic feats, they are not guaranteed to be running accurate physics simulations in their latent state when predicting next tokens. The piece argues that the world-model hypothesis can be true in some systems, but LLMs often rely on a large store of heuristics and memorized rules rather than a faithful, general model of the world.

- A noted counterpoint is the Othello example, where hidden states of a transformer model trained on sequences of moves allowed prediction of the board state. The author cautions that such results do not trivially generalize to natural-language understanding of the real world, since games like Othello are symbolic and can be solved with pen-and-paper rules, whereas real-world tasks (like sweeping a floor or driving a car) require grounded perception and physics that go beyond symbolic inference.

- The discussion engages with debates about whether language structure mirrors reality and whether linguistic competence implies semantic and pragmatic understanding. It argues that some explanations for LLM capabilities—such as the idea that “language mirrors the structure of reality”—should not be taken literally when it comes to world grounding. The synthesis emphasizes that true semantic understanding likely requires more than syntactic manipulation and abstraction over language; it requires a grounded model of the world.

A small comparison of approaches (GFM table)

| Approach | Focus and implication |

|---|---|

| Next-token prediction | May reflect surface patterns and syntactic rules; does not guarantee a world model of physical reality |

| World modeling / model-based reasoning | Aimed at predicting high-fidelity observations and planning in the physical world; supported by areas like model-based RL and causal world modeling |

| Embodiment and interaction | Central to solving sensorimotor tasks, planning, and social coordination; modality processing may emerge from grounded interaction |

- For practitioners, the author points to established AI subfields that explicitly seek grounded, predictive models of the world, such as model-based reinforcement learning, task and motion planning, and causal world modeling, suggesting these areas as more promising foundations for progress toward AGI than modality gluing alone. The discussion also implies that language capabilities in isolation may not suffice for robust general intelligence in embodied real-world contexts.

Key takeaways

- Grounding in a physical world model and embodied interaction is proposed as essential for true AGI, not just multimodal fusion.

- LLMs’ language prowess does not automatically entail a comprehensive, causal model of the real world; their success may reflect memorized patterns rather than true world understanding.

- Embodiment should be treated as foundational, with modality-specific processing emerging from interaction with environment and sensors.

- Real-world progress toward AGI likely requires advances in model-based reasoning, sensorimotor planning, and embodied cognition, rather than relying solely on scaling multimodal architectures.

- The critique highlights the limits of equating symbolic sequence prediction with world-modeling capabilities, urging careful distinction between linguistic competence and genuine grounded understanding.

FAQ

-

What is the main claim of the article?

The article argues that true AGI requires embodiment and interaction with the physical world, and that simply scaling multimodal networks will not yield human-level AGI.

-

How does this view relate to language models and world models?

It suggests LLMs may rely on memorized rules and heuristics rather than building robust, high-fidelity world models of physical reality.

-

What evidence is cited to question world-model claims in LLMs?

Examples like predictive tasks in sequence data and the OthelloGPT finding are discussed to illustrate that surface-level sequence prediction does not guarantee a grounded understanding of the real world.

-

What are the practical implications for developers and enterprises?

Emphasize embodied AI research, sensorimotor reasoning, and environment-grounded planning over purely multimodal, patchwork architectures for work that involves real-world tasks.

-

What fields are highlighted as relevant to embodiment?

Model-based reinforcement learning, task and motion planning in robotics, causal world modeling, and computer vision relating to physical reality.

References

More news

How to Reduce KV Cache Bottlenecks with NVIDIA Dynamo

NVIDIA Dynamo offloads KV Cache from GPU memory to cost-efficient storage, enabling longer context windows, higher concurrency, and lower inference costs for large-scale LLMs and generative AI workloads.

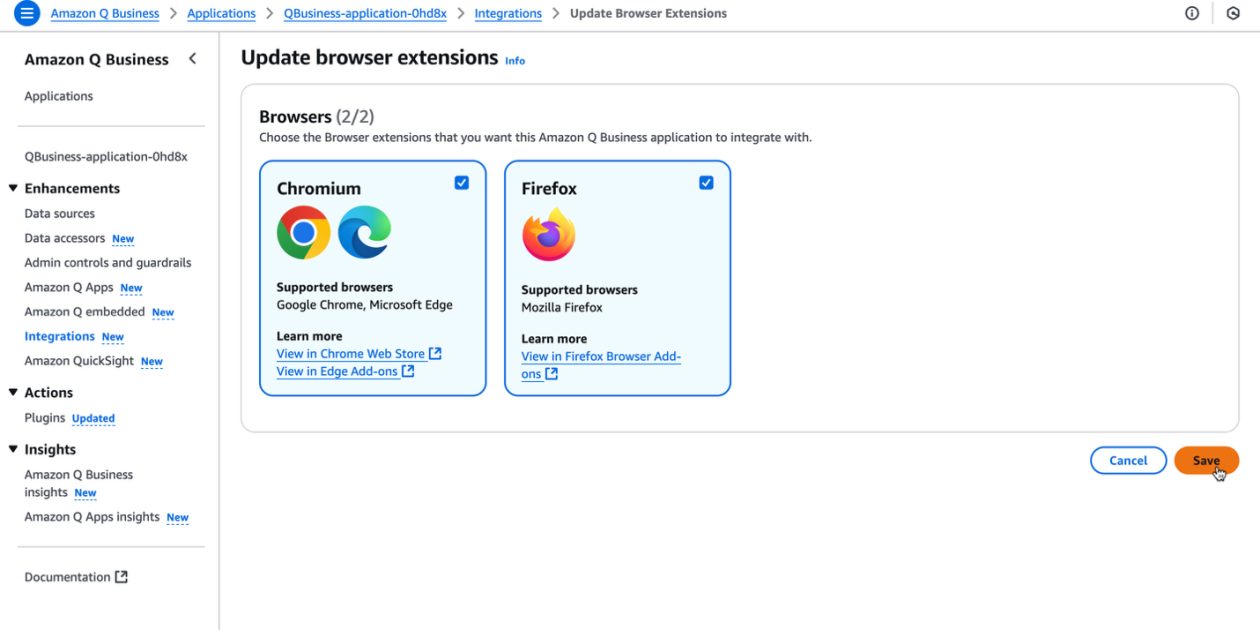

Supercharge your organization’s productivity with the Amazon Q Business browser extension

The Amazon Q Business browser extension brings context-aware, AI-driven assistance to your browser for Lite and Pro subscribers, enabling rapid, source-backed insights and seamless workflows.

Reducing Cold Start Latency for LLM Inference with NVIDIA Run:ai Model Streamer

A detailed look at how NVIDIA Run:ai Model Streamer lowers cold-start times for LLM inference by streaming weights into GPU memory, with benchmarks across GP3, IO2, and S3 storage.

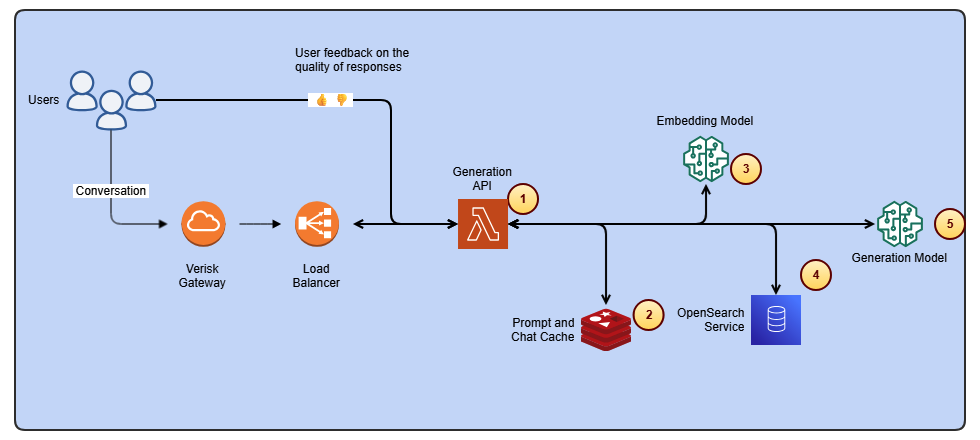

Streamline ISO-rating content changes with Verisk Rating Insights and Amazon Bedrock

Verisk Rating Insights, powered by Amazon Bedrock, LLMs, and RAG, enables a conversational interface to access ISO ERC changes, reducing manual downloads and enabling faster, accurate insights.

GPT-5-Codex Addendum: Agentic Coding Optimized GPT-5 with Safety Measures

An addendum detailing GPT-5-Codex, a GPT-5 variant optimized for agentic coding within Codex, with safety mitigations and multi-platform availability.

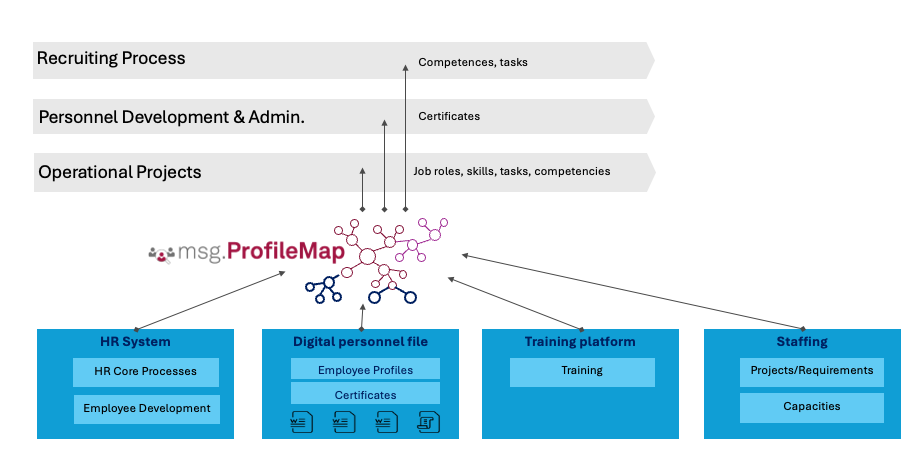

How msg enhanced HR workforce transformation with Amazon Bedrock and msg.ProfileMap

This post explains how msg automated data harmonization for msg.ProfileMap using Amazon Bedrock to power LLM-driven data enrichment, boosting HR concept matching accuracy, reducing manual workload, and aligning with EU AI Act and GDPR.